Introduction

Simple time series models

ARIMA

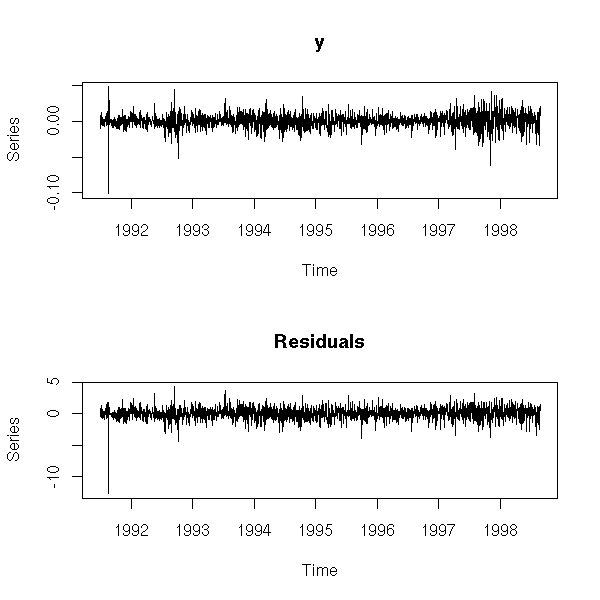

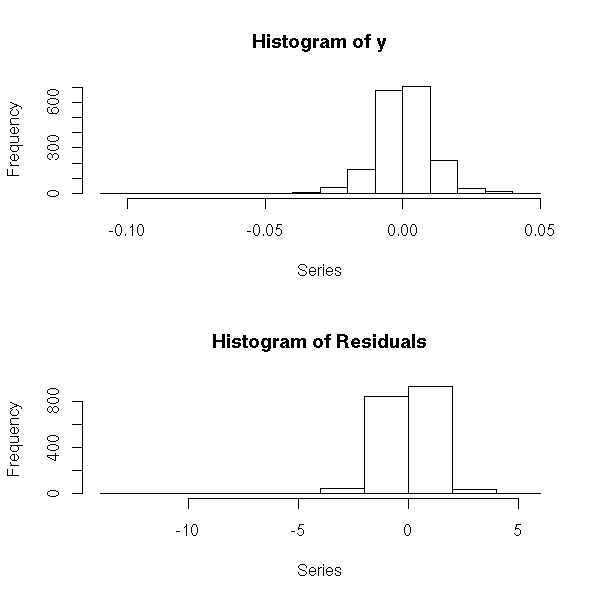

Validating a model

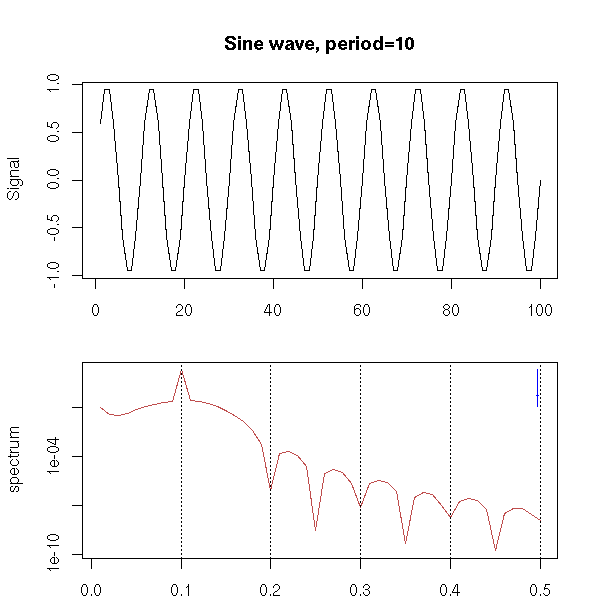

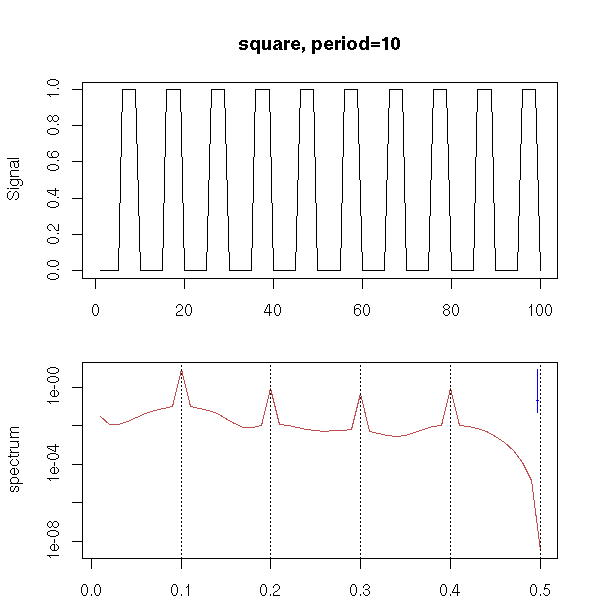

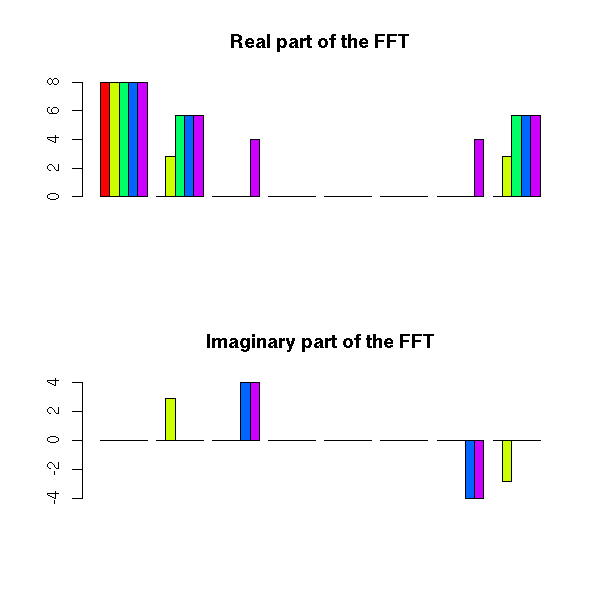

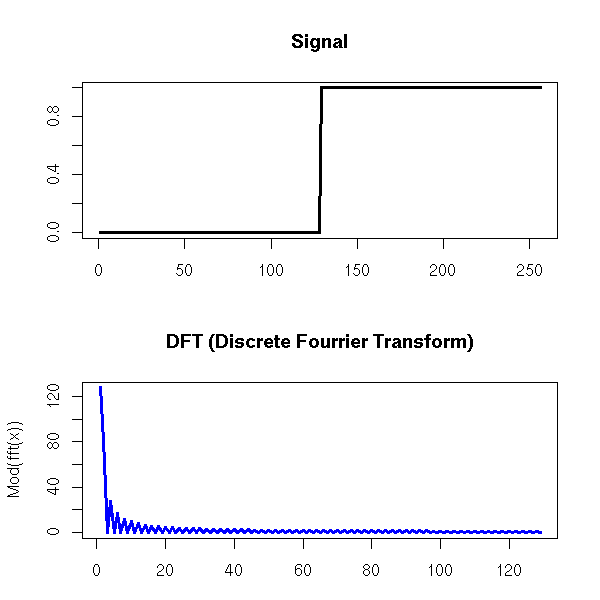

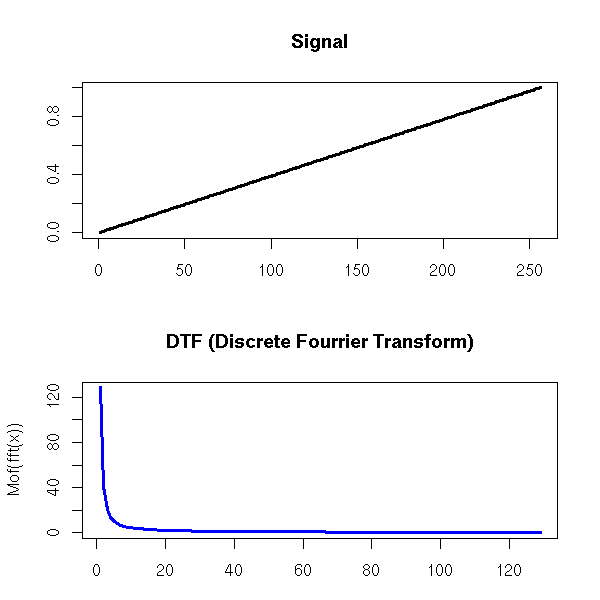

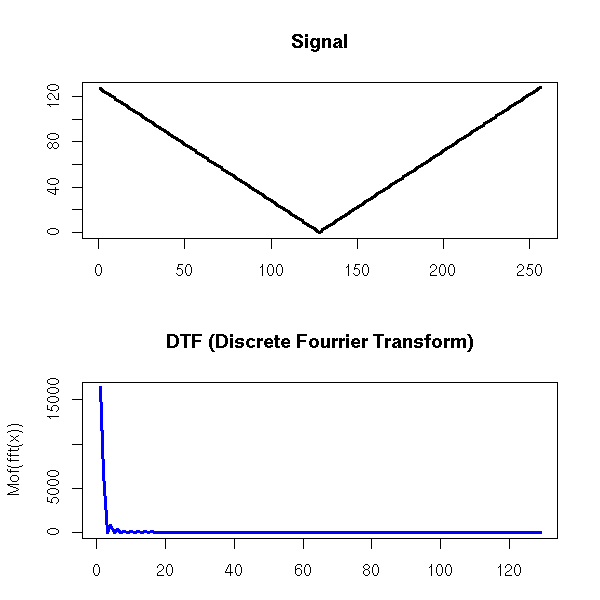

Spectral Analysis

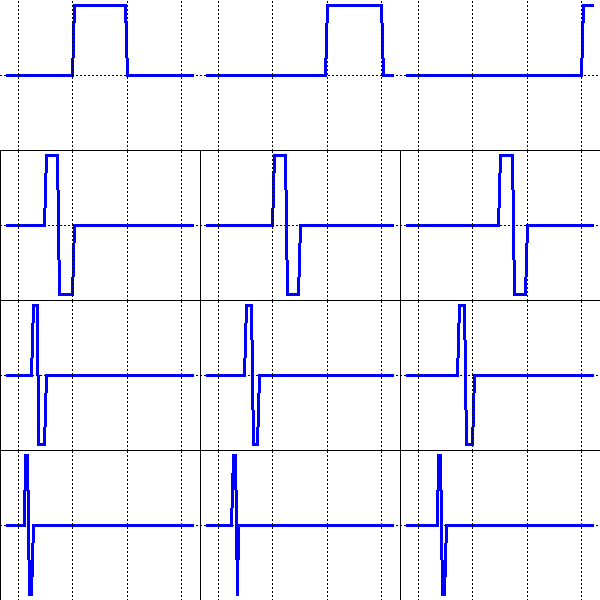

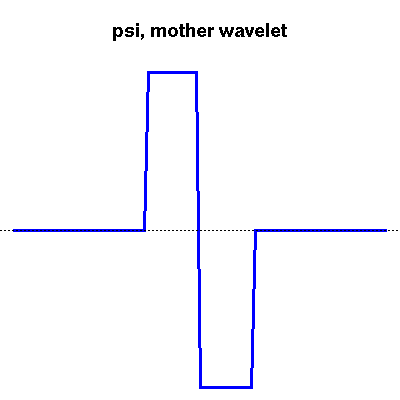

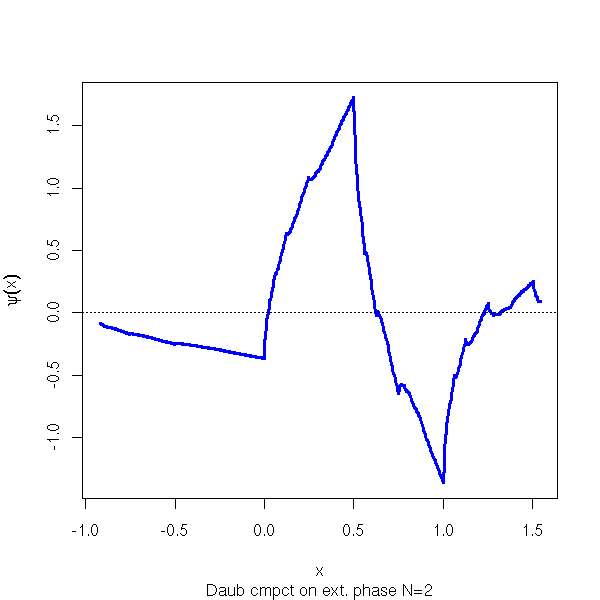

Wavelets

Digital Signal Processing (DSP)

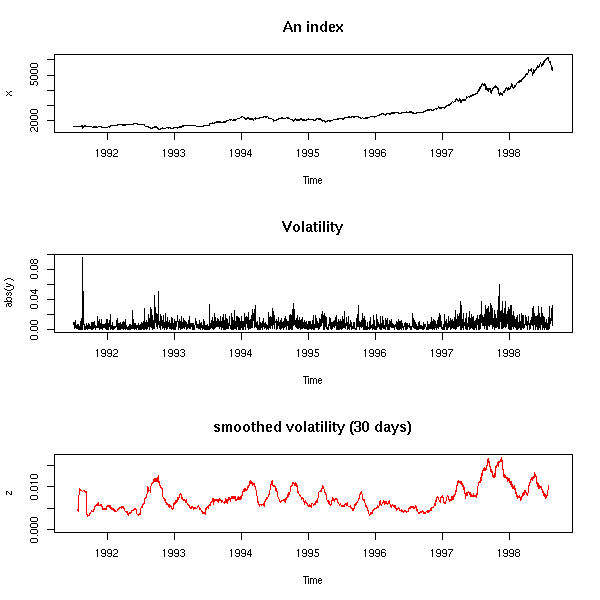

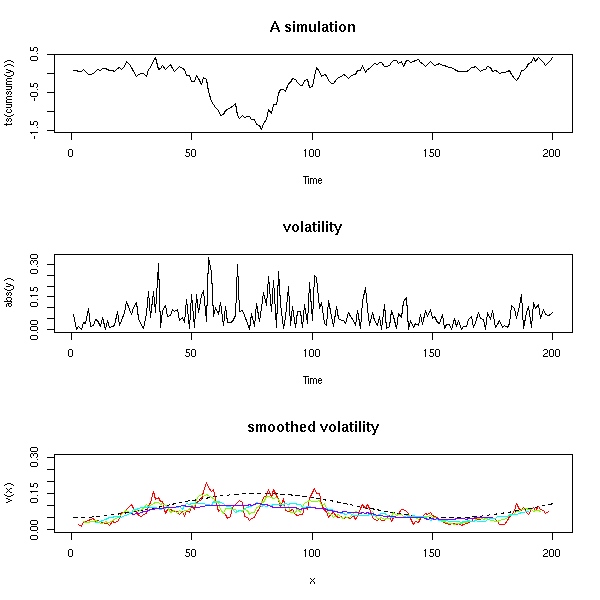

Modeling volatility: GARCH models (Generalized AutoRegressive Conditionnal Heteroscedasticity)

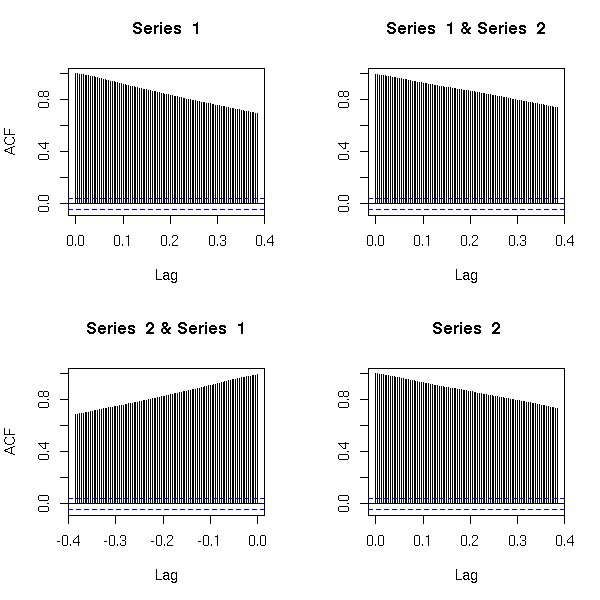

Multivariate time series

State-Space Models and Kalman Filtering

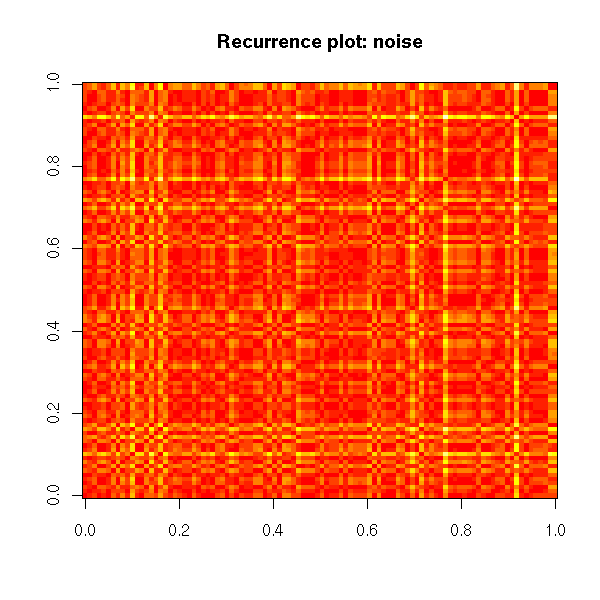

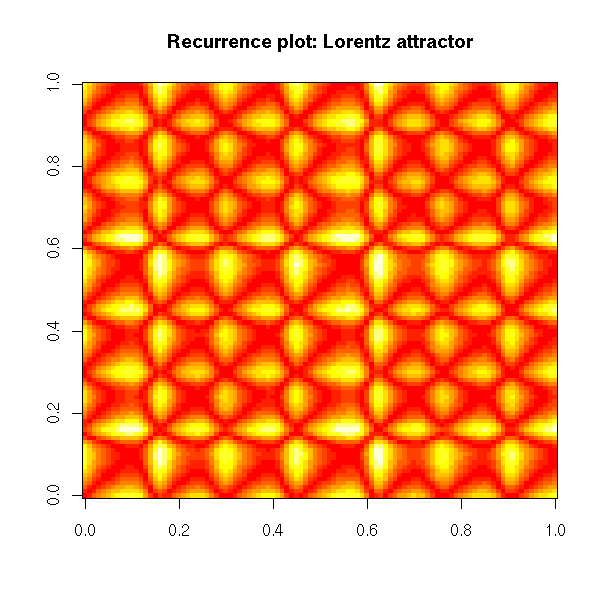

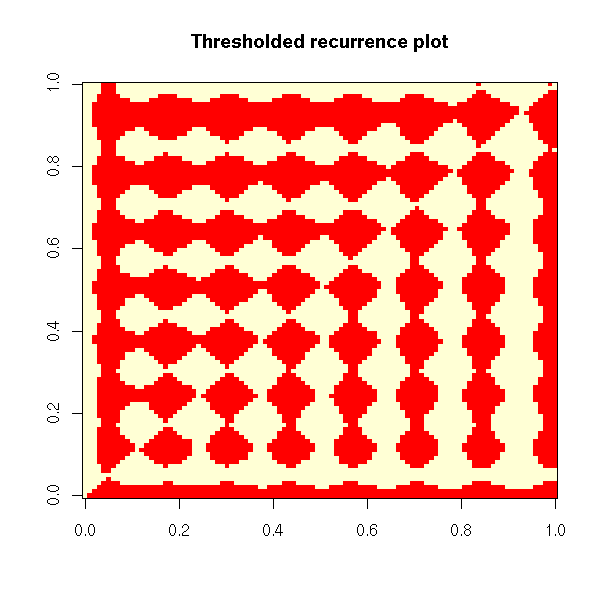

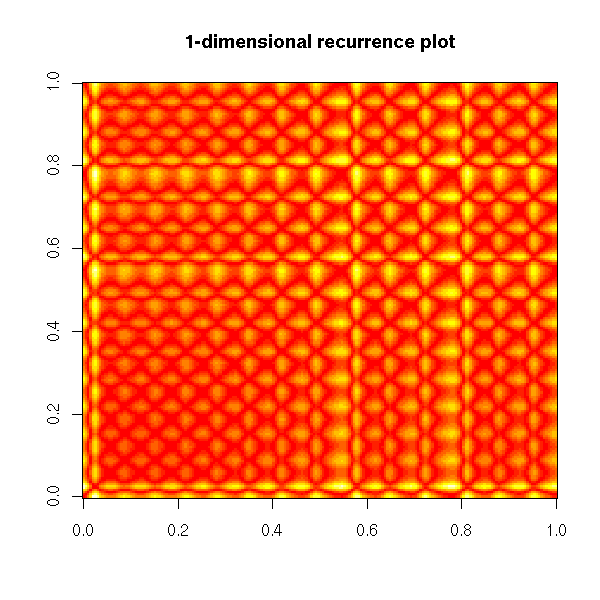

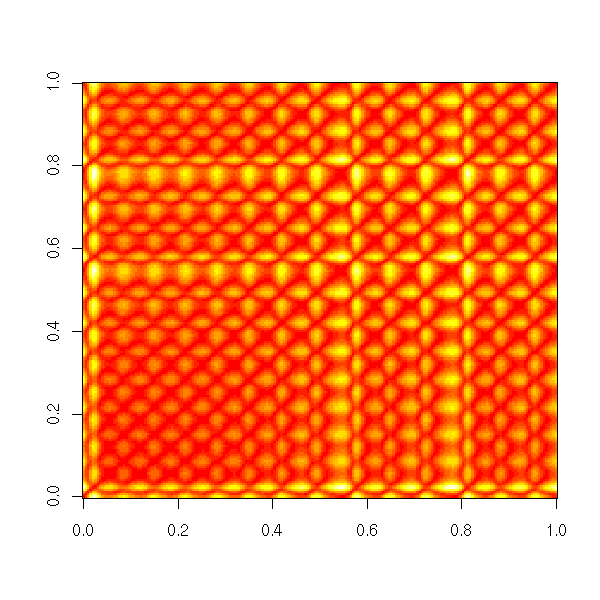

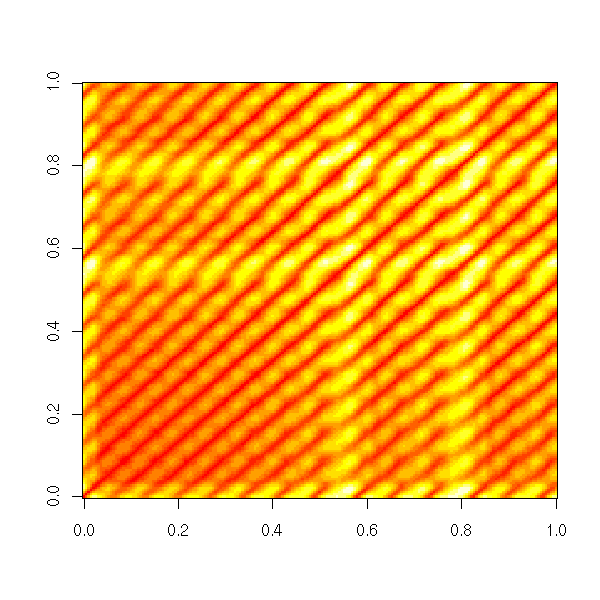

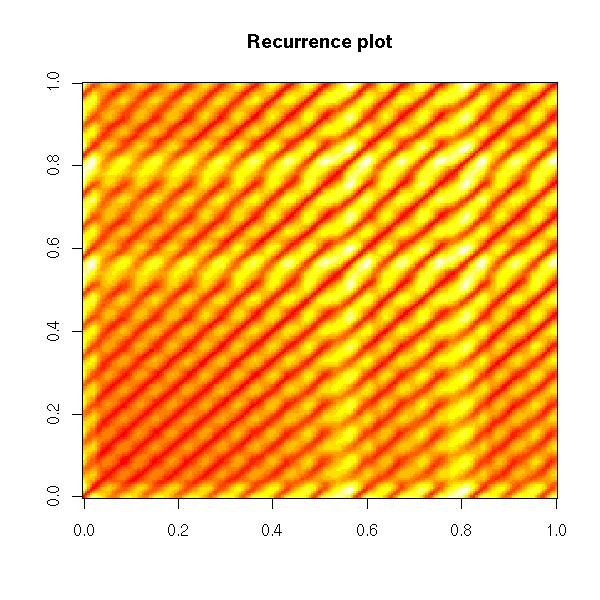

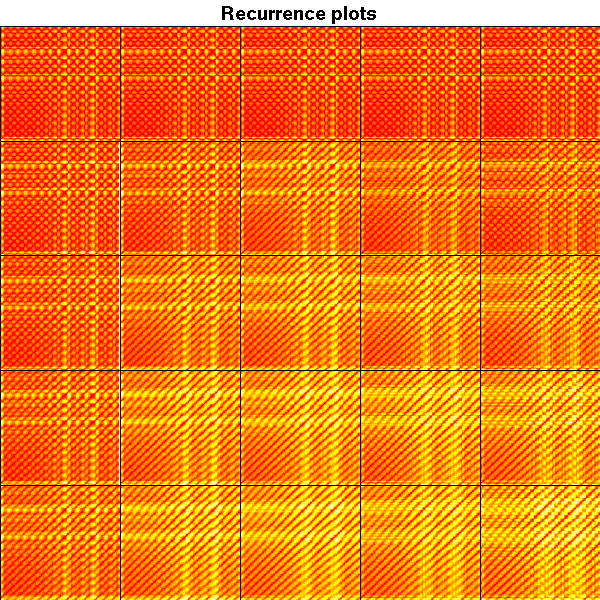

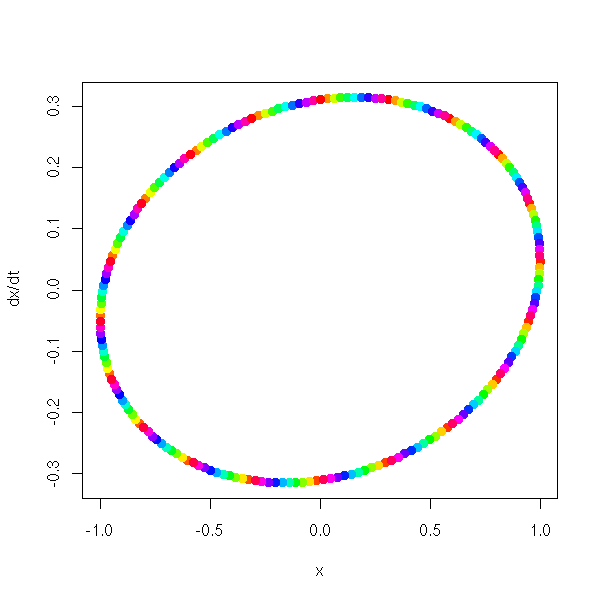

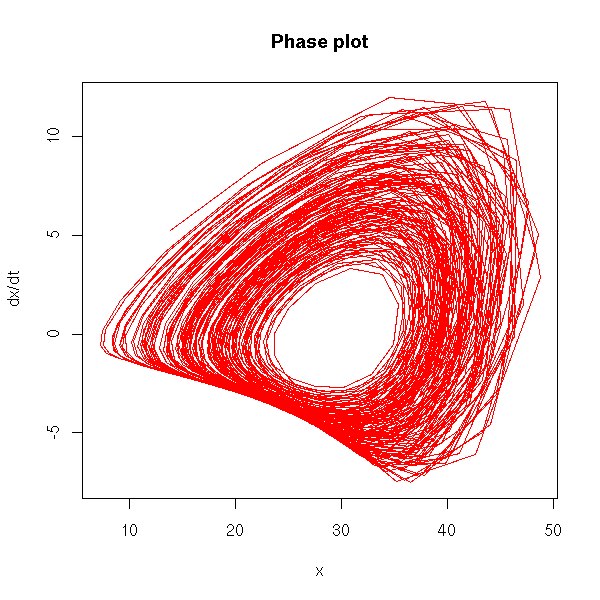

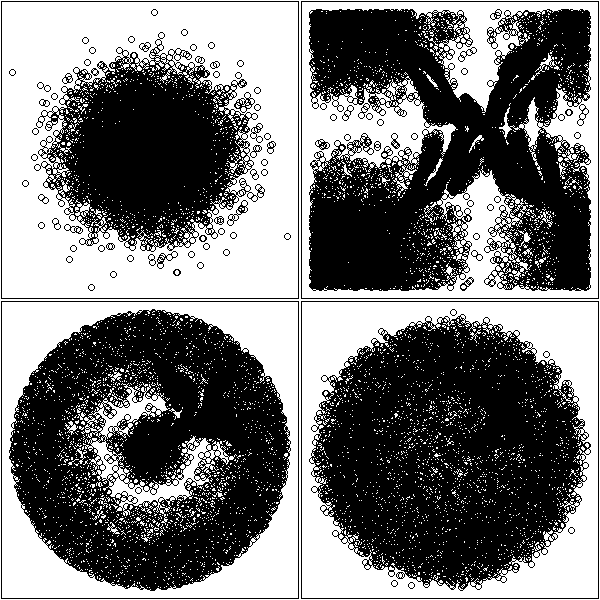

Non-linear time series and chaos

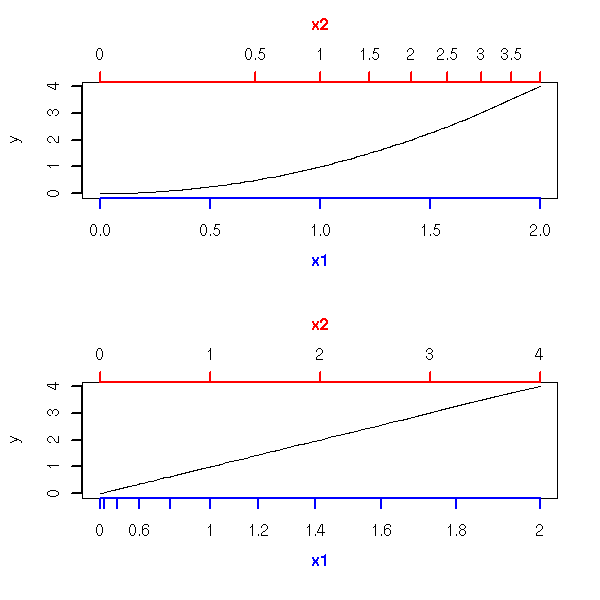

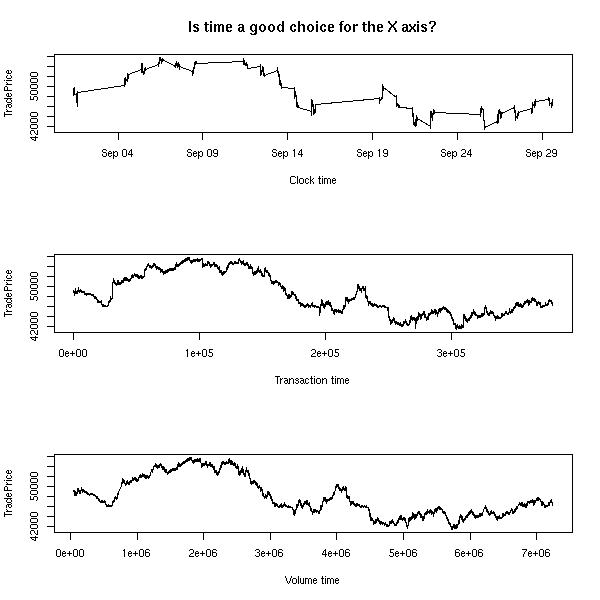

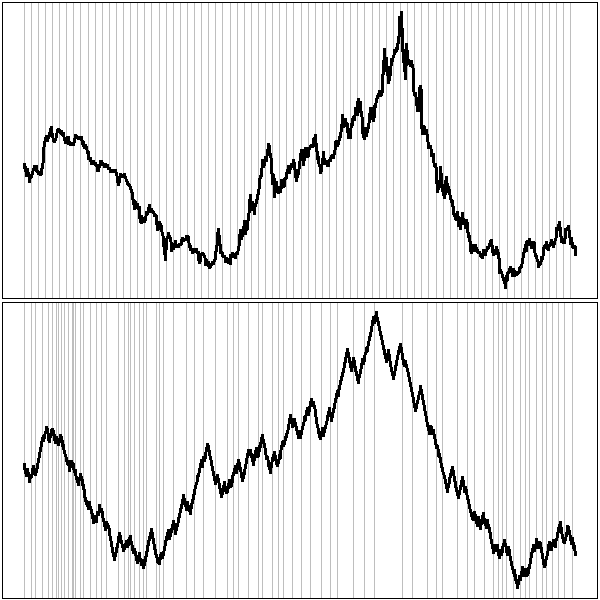

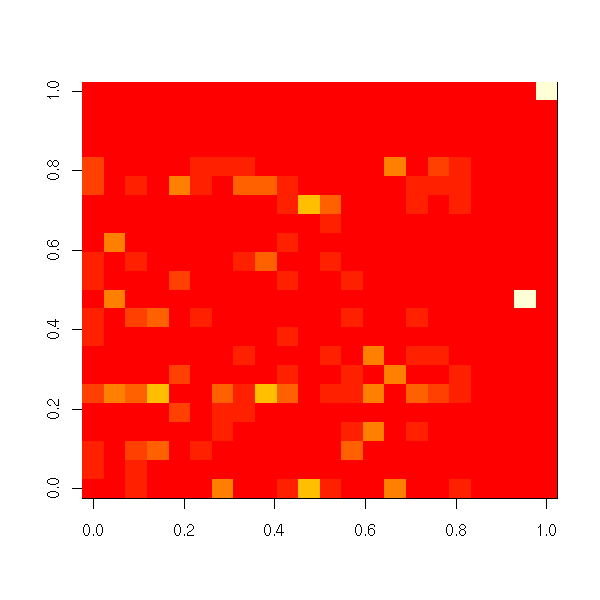

Other times

Discrete-valued time series: Markov chains and beyond

Variants of Markov chains

Untackled subjects

TO SORT

This chapter contrasts with the topics we have seen up to now: we were interested in the study of several independant realizations of a simple statistical process (e.g., a gaussian random variable, or a mixture of gaussians, or a linear model); we shall now focus on a single realization of a more complex process.

Here is the structure of this chapter.

After an introduction, motivating the notion of a time series and giving several examples, simulated or real, we shall present the classical models of time series (AR, MA, ARMA, ARIMA, SARIMA), that provide recipes to build time series with desired properties. We shall then present spectral methods, that focus on the discovery of periodic elements in time series. The simplicity of those models makes them amenable, but they cannot describe the properties of some real-world time series: non-linear methods, built upon the classical models (GARCH) are called for. State-Space Models and the Kalman filter follow the same vein: they assume that the data is build from linear algebra, but that we do not observe everything -- there are "hidden" (unobserved, latent) variables.

Some of those methods readily generalize to higher dimensions, i.e., to the study of vector-valued time-series, i.e., to the study of several related time series at the same time -- but some new phenomena appear (e.g., cointegration). Furthermore, if the number of time series to study becomes too large, the vector models have too many parameters to be useful: we enter the realm of panel data.

We shall then present some less mainstream ideas: instead of linear algebra, time series can be produced by analytical (read: differential equations) or procedural (read: chaos, fractals) means.

We finally present generalizations of time series: stochastic processes, in which time is continuous; irregular time series, in which time is discrete but irregular; and discrete-valued time series (with Markov chains and Hidden Markov Models instead of AR and state-space models).

In probability theory, a time series (you will also hear mention of "stochastic process": in a time series, time is discrete, in a stochastic process, it is continuous) is a sequence of random variables. In statistics, it is a variable that depends on time: for instance, the price of a stock, that changes every day; the air temperature, measured every month; the heart rate of a patient, minute after minute, etc.

plot(LakeHuron,

ylab = "",

main = "Level of Lake Huron")

Sometimes, it is so noisy that you do not see much,

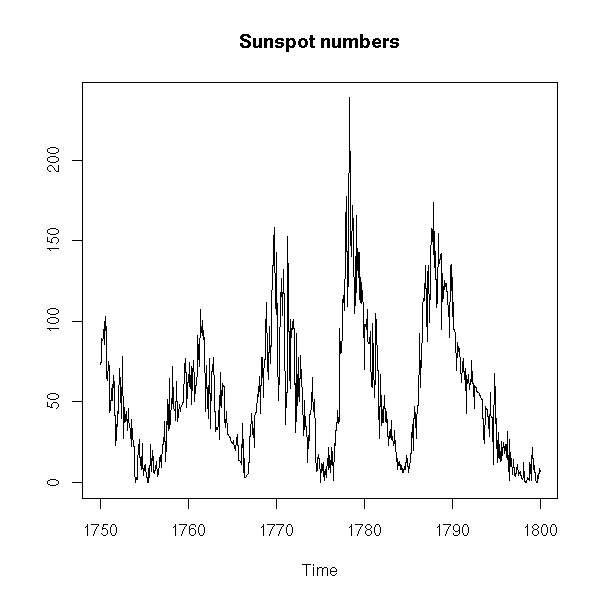

x <- window(sunspots, start=1750, end=1800)

plot(x,

ylab = "",

main = "Sunspot numbers")

You can then smooth the curve.

plot(x,

type = 'p',

ylab = "",

main = "Sunspot numbers")

k <- 20

lines( filter(x, rep(1/k,k)),

col = 'red',

lwd = 3 )

You can underline the periodicity, graphically, with vertical bars.

data(UKgas)

plot.band <- function (x, ...) {

plot(x, ...)

a <- time(x)

i1 <- floor(min(a))

i2 <- ceiling(max(a))

y1 <- par('usr')[3]

y2 <- par('usr')[4]

if( par("ylog") ){

y1 <- 10^y1

y2 <- 10^y2

}

for (i in seq(from=i1, to=i2-1, by=2)) {

polygon( c(i,i+1,i+1,i),

c(y1,y1,y2,y2),

col = 'grey',

border = NA )

}

par(new=T)

plot(x, ...)

}

plot.band(UKgas,

log = 'y',

ylab = "",

main = "UK gas consumption")

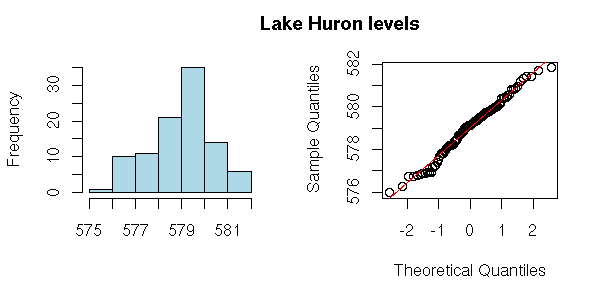

As always, before analysing the series, we have a look at it (evolution with time, pattern changes, misordered values (sometimes, you have both the value and the time: you should then check that the order is the correct one), distribution (histogram or, better, density estimation), y[i+1] ~ y[i], outliers -- the more data you have, the dirtier: someone may have forgotten the decimal dot while entering the data, someone may have decided to replace missing values by 0 or -999, etc.)

x <- LakeHuron

op <- par(mfrow = c(1,2),

mar = c(5,4,1,2)+.1,

oma = c(0,0,2,0))

hist(x,

col = "light blue",

xlab = "",

main = "")

qqnorm(x,

main = "")

qqline(x,

col = 'red')

par(op)

mtext("Lake Huron levels",

line = 2.5,

font = 2,

cex = 1.2)

x <- diff(LakeHuron)

op <- par(mfrow = c(1,2),

mar = c(5,4,1,2)+.1,

oma = c(0,0,2,0))

hist(x,

col = "light blue",

xlab = "",

main = "")

qqnorm(x,

main = "")

qqline(x,

col = 'red')

par(op)

mtext("Lake Huron level increments",

line = 2.5,

font = 2,

cex = 1.2)

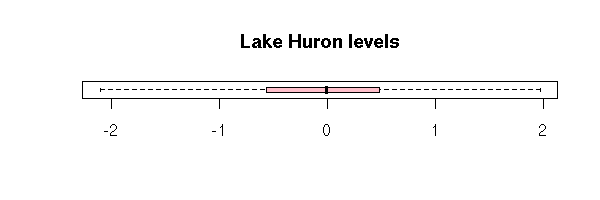

boxplot(x,

horizontal = TRUE,

col = "pink",

main = "Lake Huron levels")

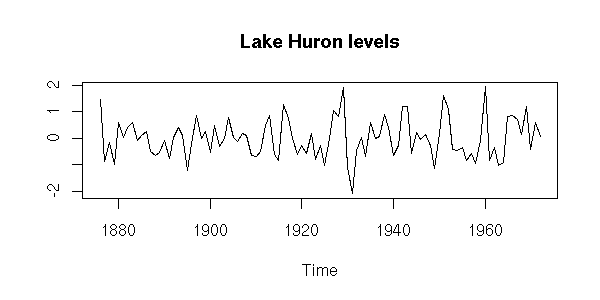

plot(x,

ylab = "",

main = "Lake Huron levels")

n <- length(x)

k <- 5

m <- matrix(nr=n+k-1, nc=k)

colnames(m) <- c("x[i]", "x[i-1]", "x[i-2]",

"x[i-3]", "x[i-4]")

for (i in 1:k) {

m[,i] <- c(rep(NA,i-1), x, rep(NA, k-i))

}

pairs(m,

gap = 0,

lower.panel = panel.smooth,

upper.panel = function (x,y) {

panel.smooth(x,y)

par(usr = c(0, 1, 0, 1))

a <- cor(x,y, use='pairwise.complete.obs')

text(.1,.9,

adj=c(0,1),

round(a, digits=2),

col='blue',

cex=2*a)

})

title("Lake Huron levels: autocorrelations",

line = 3)

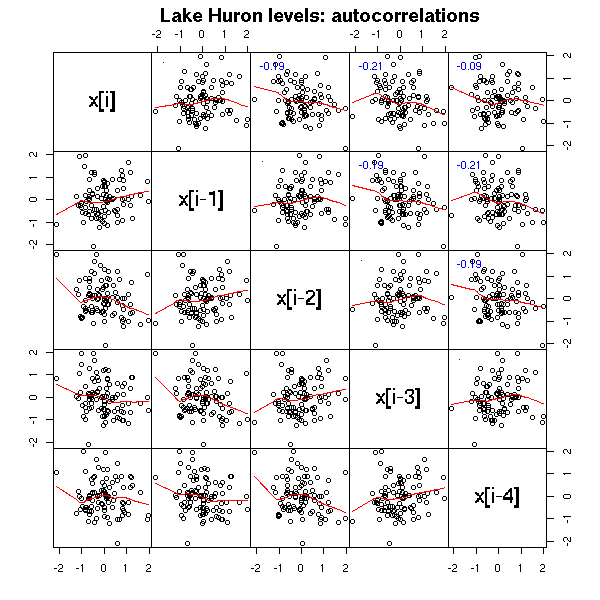

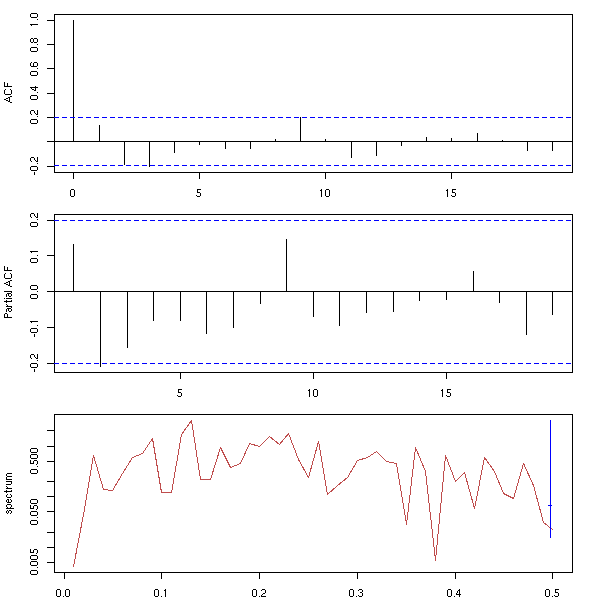

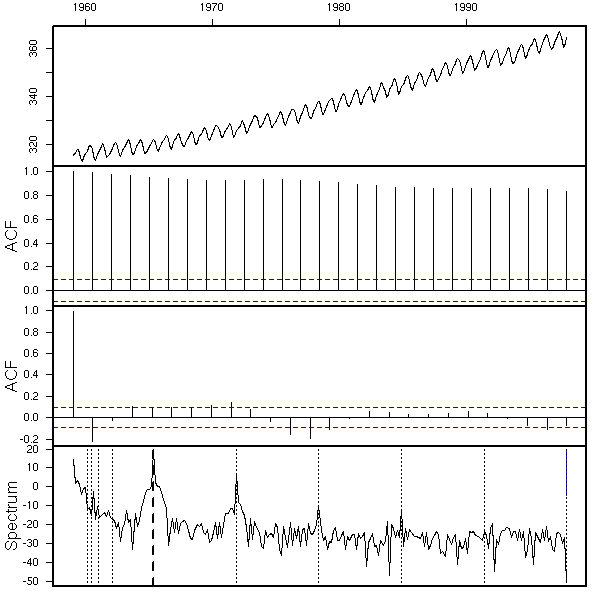

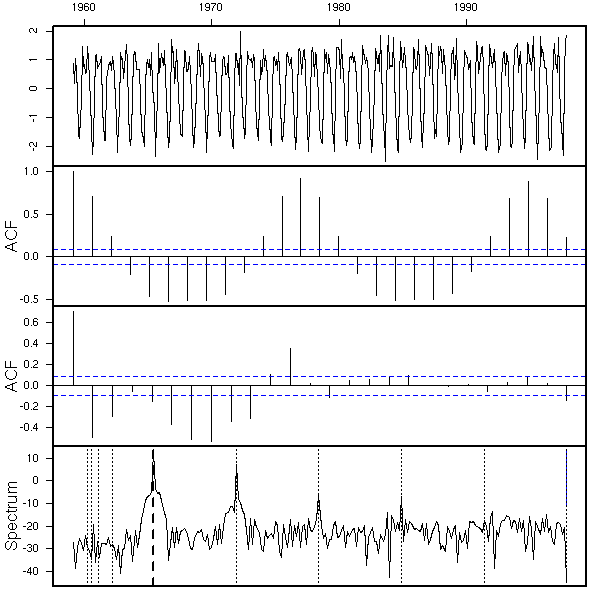

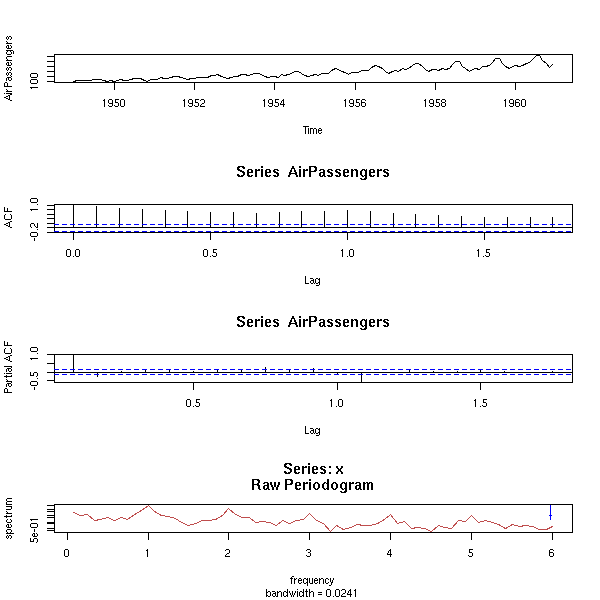

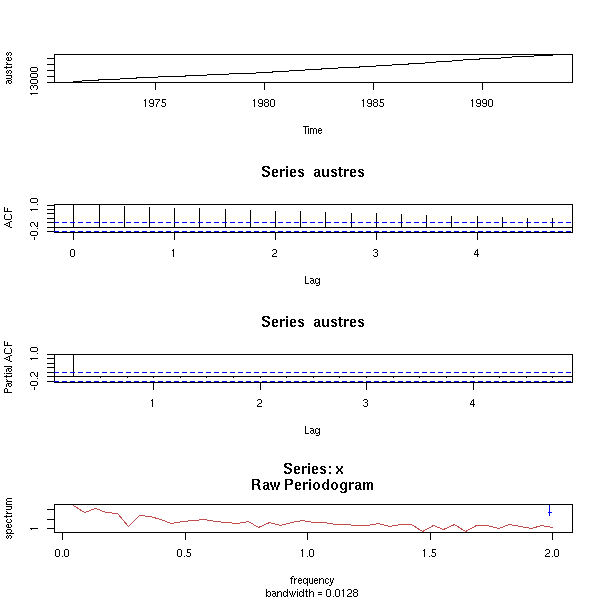

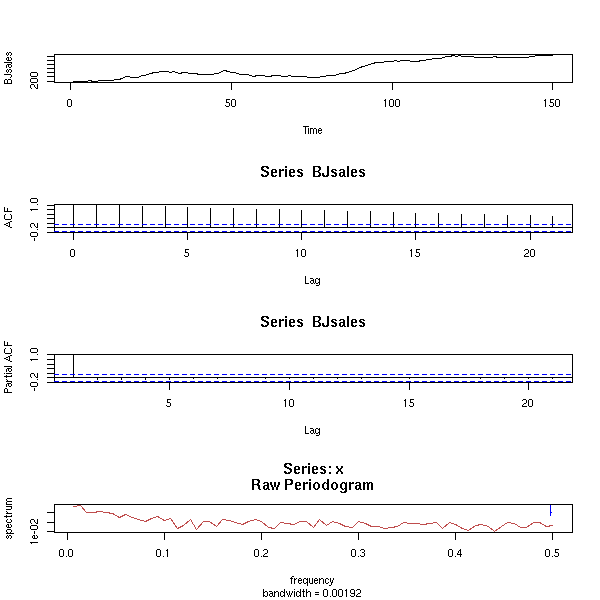

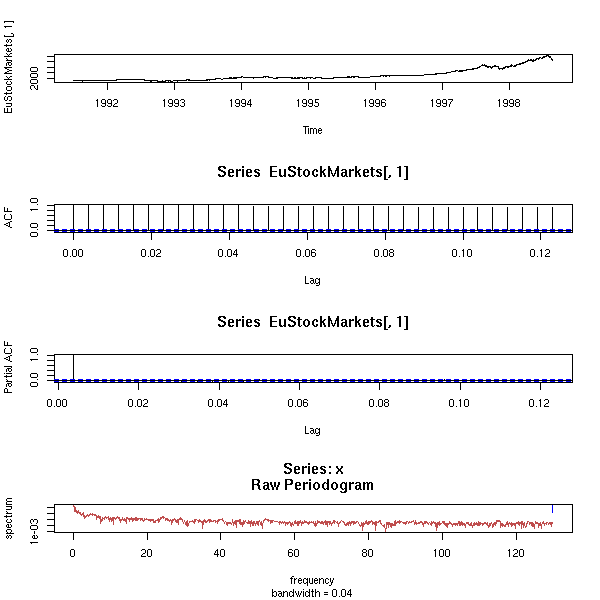

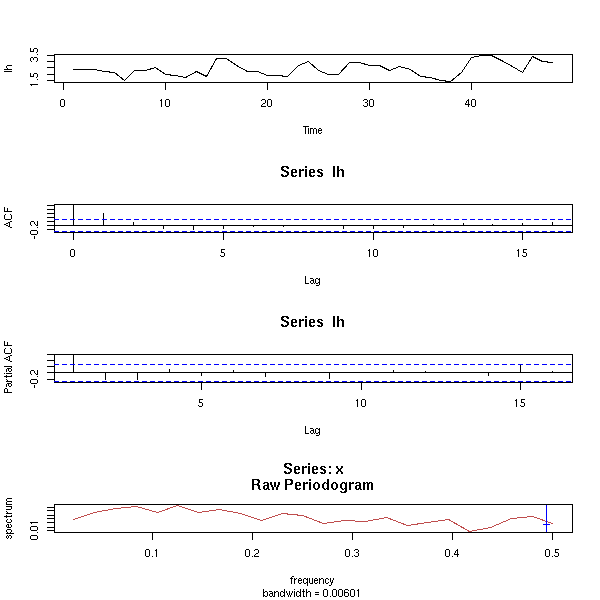

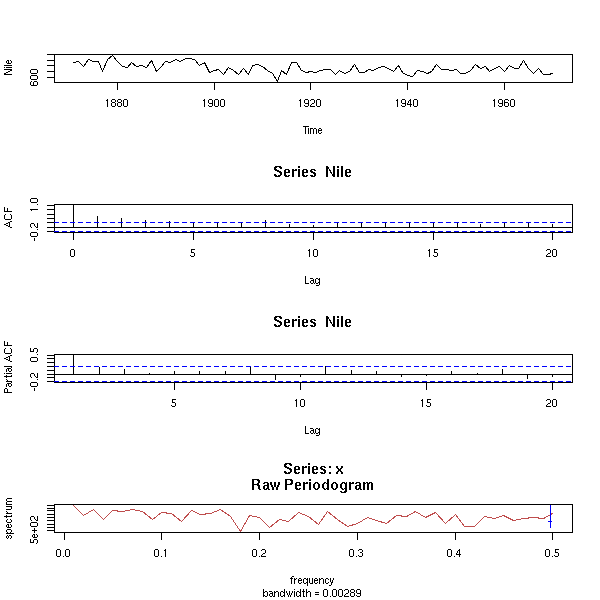

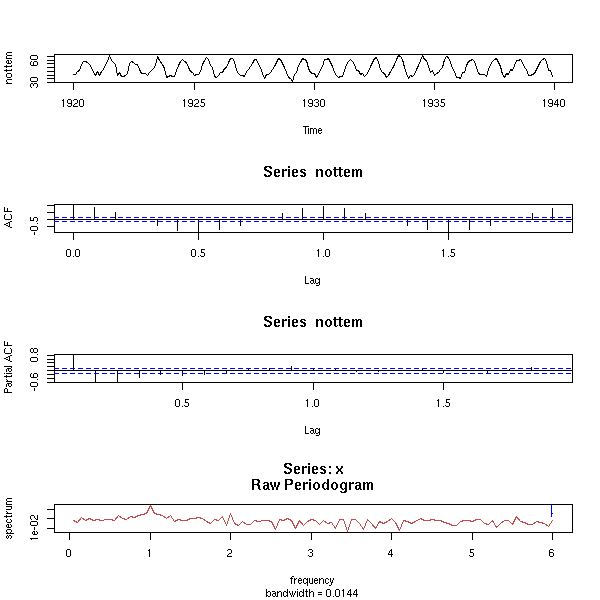

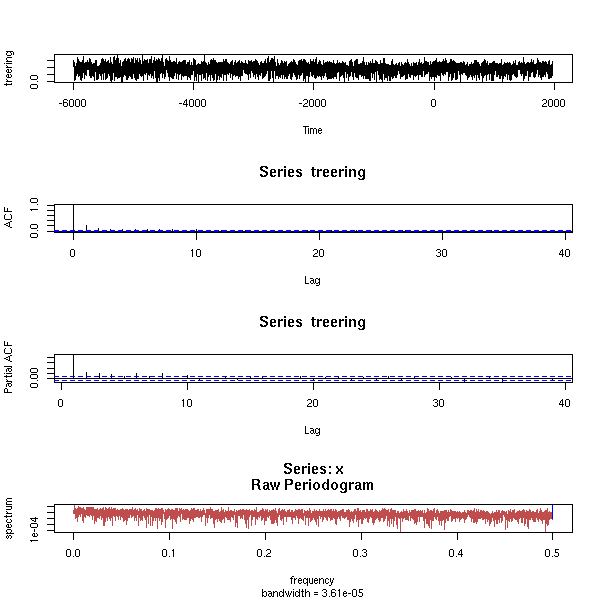

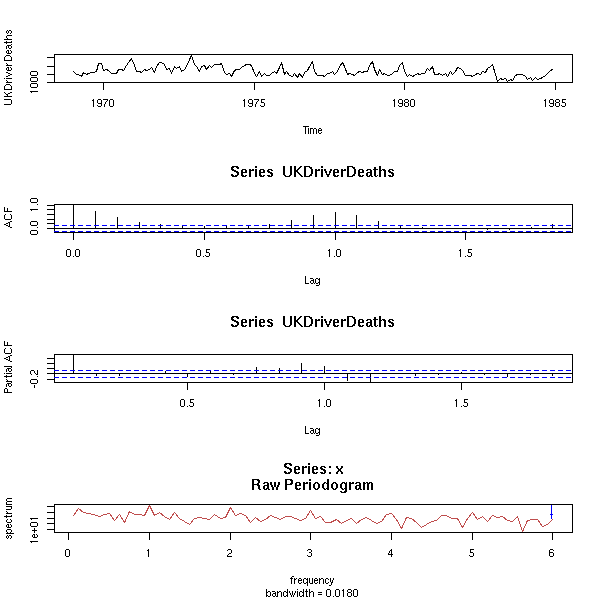

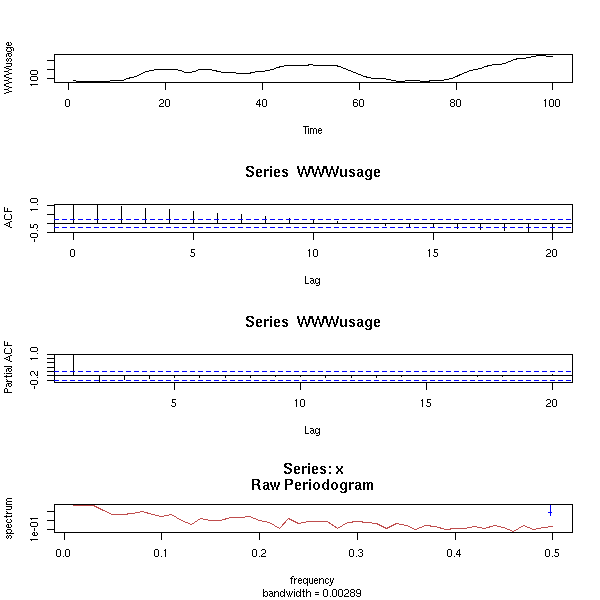

We shall also see other plots, more specific to time series. The first one, the AutoCorrelation Function (ACF) gives the correlation between x[i] and x[i-k], for increasing values of k (these are the numbers that already appeared in the previous pair plot). The second one, the PACF (Partial AutoCorrelation Function), contains the same information, but gives the correlation between x[i] and x[i-k] that is not explained by the correlations with a shorter lag (more about this later, when we speak of AR models). The last one, the spectrogram, tries to find periodic components, of various frequencies, in the signal and displays the importance of the various frequencies (more about this later).

op <- par(mfrow = c(3,1),

mar = c(2,4,1,2)+.1)

acf(x, xlab = "")

pacf(x, xlab = "")

spectrum(x, xlab = "", main = "")

par(op)

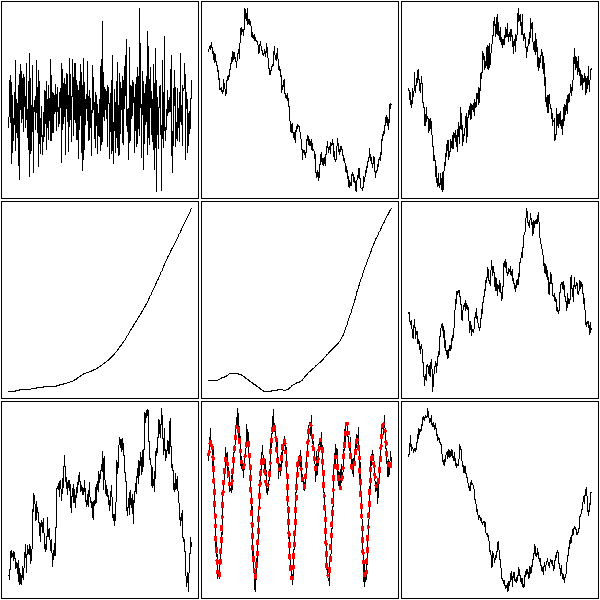

One aim (or one step) of time series analysis is to find the "structure" of a time series, i.e., to find how it was build, i.e., to find a simple algorithm that could produce similar-looking data. Here are, in no particular order, a few simulated time series.

The first is just gaussian noise; the second is an integrated noise (a "random walk"). We can then build on those, integrating several times, adding noise to the result, adding a linear or periodic trend, etc.

op <- par(mfrow = c(3,3),

mar = .1 + c(0,0,0,0))

n <- 100

k <- 5

N <- k*n

x <- (1:N)/n

y1 <- rnorm(N)

plot(ts(y1),

xlab="", ylab="", main="", axes=F)

box()

y2 <- cumsum(rnorm(N))

plot(ts(y2),

xlab="", ylab="", main="", axes=F)

box()

y3 <- cumsum(rnorm(N))+rnorm(N)

plot(ts(y3),

xlab="", ylab="", main="", axes=F)

box()

y4 <- cumsum(cumsum(rnorm(N)))

plot(ts(y4),

xlab="", ylab="", main="", axes=F)

box()

y5 <- cumsum(cumsum(rnorm(N))+rnorm(N))+rnorm(N)

plot(ts(y5),

xlab="", ylab="", main="", axes=F)

box()

# With a trend

y6 <- 1 - x + cumsum(rnorm(N)) + .2 * rnorm(N)

plot(ts(y6),

xlab="", ylab="", main="", axes=F)

box()

y7 <- 1 - x - .2*x^2 + cumsum(rnorm(N)) +

.2 * rnorm(N)

plot(ts(y7),

xlab="", ylab="", main="", axes=F)

box()

# With a seasonnal component

y8 <- .3 + .5*cos(2*pi*x) - 1.2*sin(2*pi*x) +

.6*cos(2*2*pi*x) + .2*sin(2*2*pi*x) +

-.5*cos(3*2*pi*x) + .8*sin(3*2*pi*x)

plot(ts(y8+ .2*rnorm(N)),

xlab="", ylab="", main="", axes=F)

box()

lines(y8, type='l', lty=3, lwd=3, col='red')

y9 <- y8 + cumsum(rnorm(N)) + .2*rnorm(N)

plot(ts(y9),

xlab="", ylab="", main="", axes=F)

box()

par(op)

In statistics, we like independent data -- the problem is that time series contain dependent data. The aim of a time series analysis will thus be to extract this structure and transform the initial time series into a series of independant values often called "innovations", usually by going in the other direction: by providing a recipe (a "model") to build a series similar to the one we have with noise as only ingredient.

We can present this problem from another point of view: when you study a statistical phenomenon, you usually have several realizations of it. With time series, you have a single one. Therefore, we replace the study of several realizations at a given point in time by the study of a single realization at several points in time. Depending on the statistical phenomenon, these two points of view may be equivalent or not -- this problem is called ergodicity -- more about this later.

As the observations in a time series are generally not independant, we can first have a look at their correlation: the AutoCorrelation Function (ACF) (strictly speaking, one can consider the Sample ACF, if it is computed from a sample, or the theoretical autocorrelation function, if it is not computed from actual data but from a model) at lag k is the correlation between observation number n and observation number n - k. In order to compute it from a sample, you have to assume that it does not depend on n but only on the lag, k.

We could do it by hand

my.acf <- function (

x,

lag.max = ceiling(5*log(length(x)))

) {

m <- matrix(

c( NA,

rep( c(rep(NA, lag.max-1), x),

lag.max ),

rep(NA,, lag.max-1)

),

byrow=T,

nr=lag.max)

x0 <- m[1,]

apply(m,1,cor, x0, use="complete")

}

n <- 200

x <- rnorm(n)

plot(my.acf(x),

xlab = "Lag",

type = 'h')

abline(h=0)

but there is already such a function.

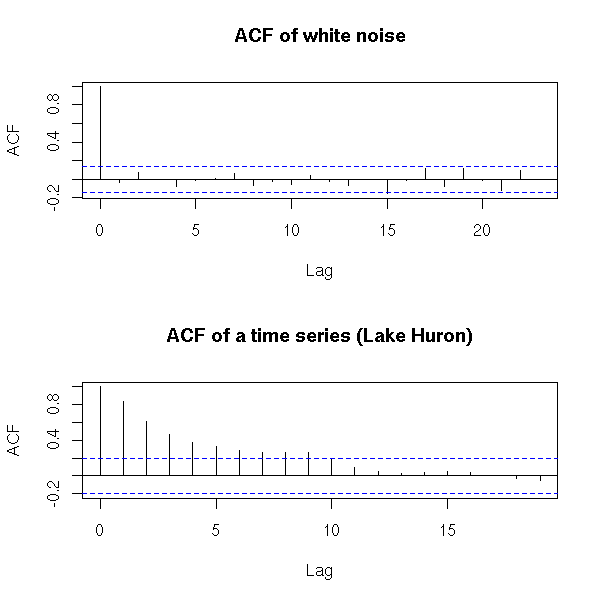

op <- par(mfrow=c(2,1)) acf(x, main="ACF of white noise") x <- LakeHuron acf(x, main="ACF of a time series (Lake Huron)") par(op)

The y=0 line traditionnally added to those plots can be misleading: it can suggest that the sign of a coefficient is known, while this coefficient is not significantly different from zero. The boundaries of the confidence interval are sufficient

op <- par(mfrow=c(2,1))

set.seed(1)

x <- rnorm(100)

# Default plot

acf(x, main = "ACF with a distracting horizontal line")

# Without the axis, with larger bars

r <- acf(x, plot = FALSE)

plot(r$lag, r$acf,

type = "h", lwd = 20, col = "grey",

xlab = "lag", ylab = "autocorrelation",

main = "Autocorrelation without the y=0 line")

ci <- .95

clim <- qnorm( (1+ci) / 2 ) / sqrt(r$n.used)

abline(h = c(-1,1) * clim,

lty = 2, col = "blue", lwd = 2)

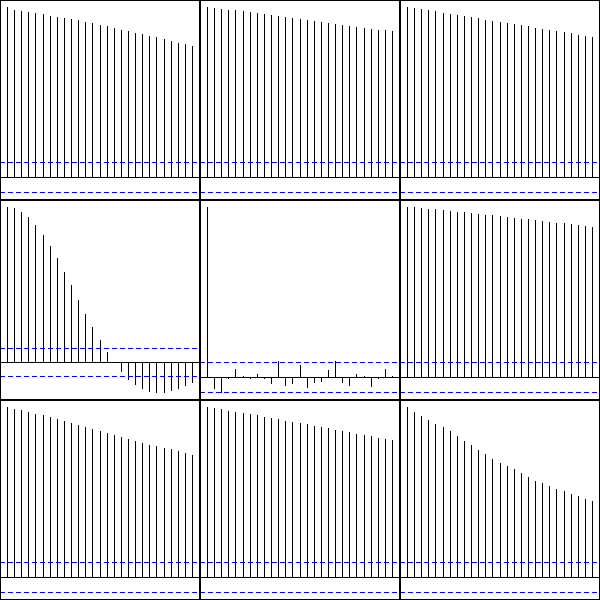

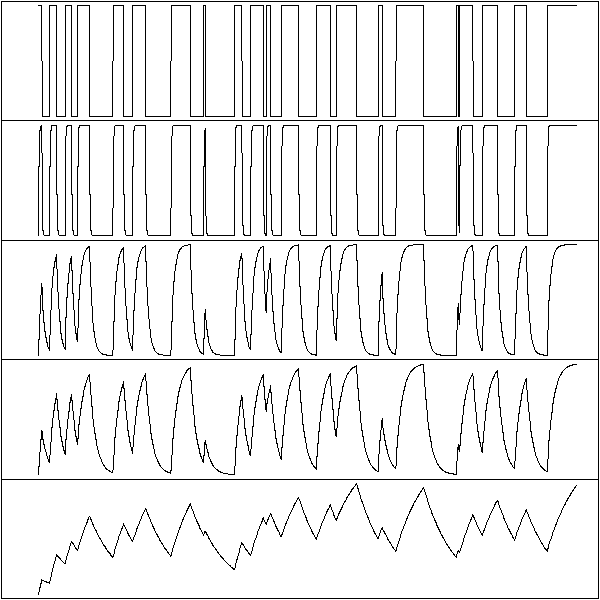

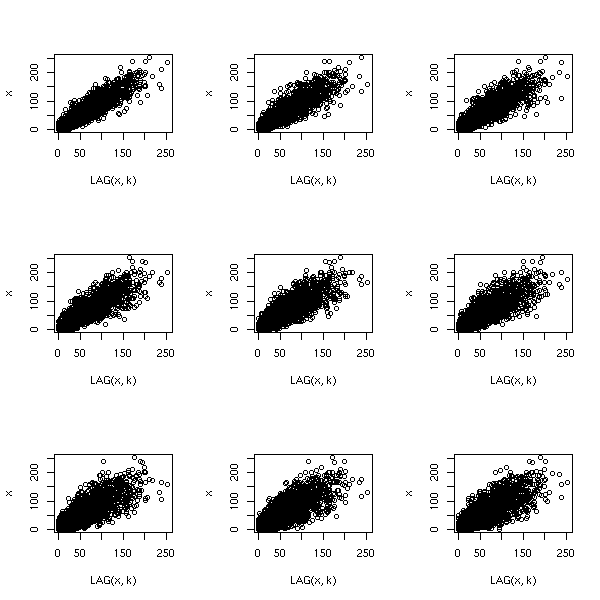

Here are the autocorrelation functions of the simulated examples from the introduction, but the plots have been shuffled: can you put them back in the correct order? Alternatively, can you see several groups among those time series?

op <- par(mfrow=c(3,3), mar=c(0,0,0,0))

for (y in sample(list(y1,y2,y3,y4,y5,y6,y7,y8,y9))) {

acf(y,

xlab="", ylab="", main="", axes=F)

box(lwd=2)

}

par(op)

Personnally, I can see three groups: when the ACF is almost zero, the data are not correlated; when the ACF is sometimes positive sometimes negative, the data might be periodic; in the other cases, the data are correlated -- but the speed at which the autocorrelation decreases can vary.

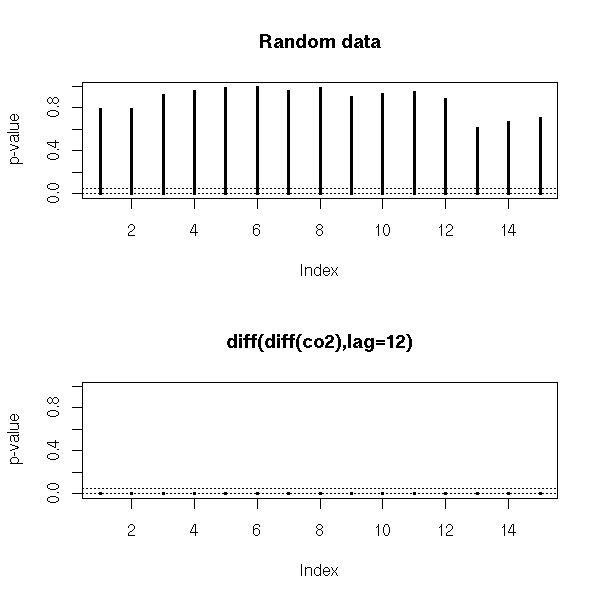

A white noise is a series of uncorrelated random variables, whose expectation is zero, whose variance is constant.

In other words, these are iid (independant, identically distributed) random variables, to the second order. (They may be dependent, but in non-linear ways, that cannot be seen from the correlation; they may have different distributions, as long as the mean and variance remain the same; their distribution need not be symetric.)

Quite often, we shall try to decompose our time series into a "trend" (or anything interpretable) plus a noise term, that should be white noise.

For instance, a series of iid random variables is a white noise.

my.plot.ts <- function (x, main="") {

op <- par(mar=c(2,2,4,2)+.1)

layout( matrix(c(1,2),nr=1,nc=2), widths=c(3,1) )

plot(x, xlab="", ylab="")

abline(h=0, lty=3)

title(main=main)

hist(x, col="light blue", main='', ylab="", xlab="")

par(op)

}

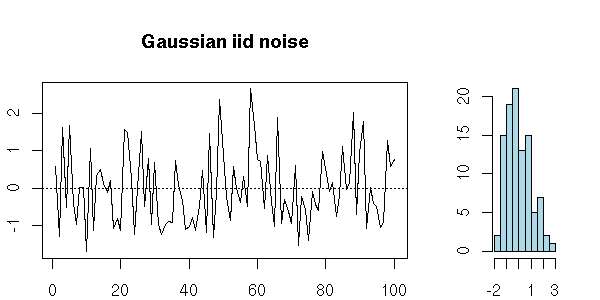

n <- 100

x <- ts(rnorm(n))

my.plot.ts(x, "Gaussian iid noise")

A series of iid random variables of mean zero is also a white noise.

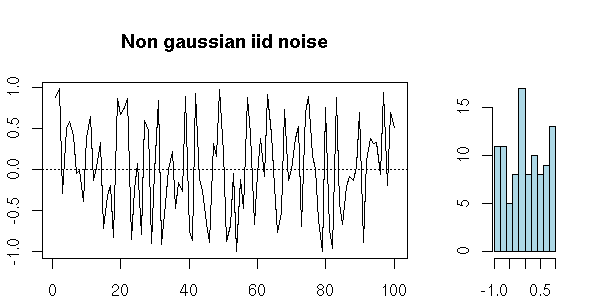

n <- 100 x <- ts(runif(n,-1,1)) my.plot.ts(x, "Non gaussian iid noise")

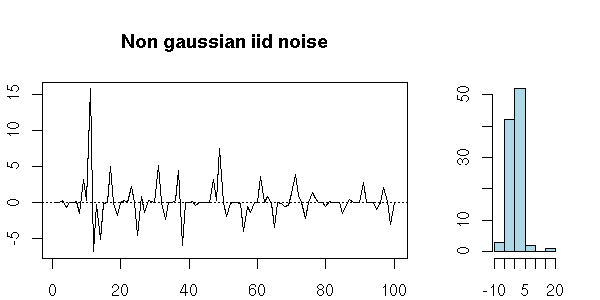

x <- ts(rnorm(100)^3) my.plot.ts(x, "Non gaussian iid noise")

But you can also have a series of uncorrelated random variables that are not independant: the definition just asks that they be "independant to the second order".

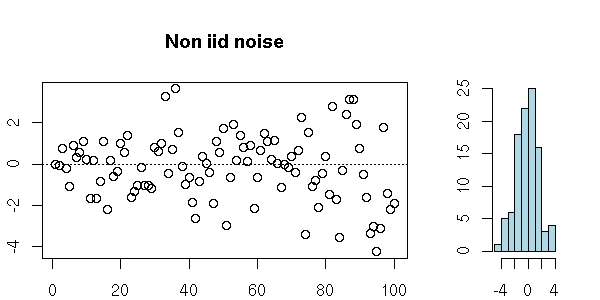

n <- 100

x <- rep(0,n)

z <- rnorm(n)

for (i in 2:n) {

x[i] <- z[i] * sqrt( 1 + .5 * x[i-1]^2 )

}

my.plot.ts(x, "Non iid noise")

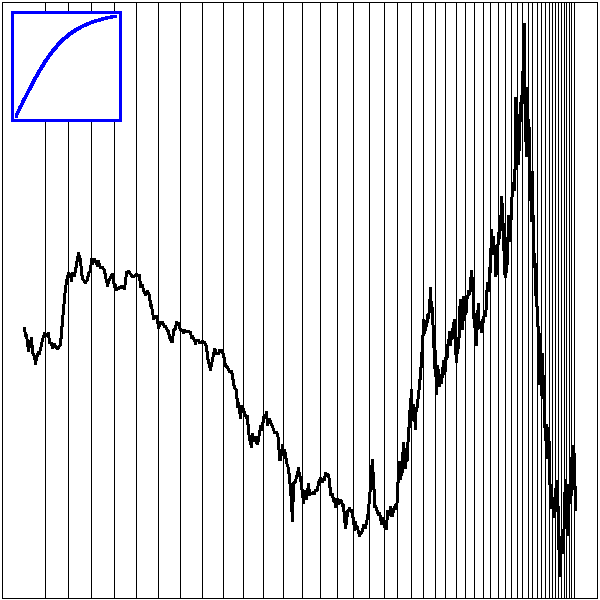

Some deterministic sequences look like white noise.

n <- 100

x <- rep(.7, n)

for (i in 2:n) {

x[i] <- 4 * x[i-1] * ( 1 - x[i-1] )

}

my.plot.ts(x, "A deterministic time series")

n <- 1000

tn <- cumsum(rexp(n))

# A C^infinity function defined as a sum

# of gaussian densities

f <- function (x) {

# If x is a single number: sum(dnorm(x-tn))

apply( dnorm( outer(x,rep(1,length(tn))) -

outer(rep(1,length(x)),tn) ),

1,

sum )

}

op <- par(mfrow=c(2,1))

curve(

f(x),

xlim = c(1,500),

n = 1000,

main = "From far away, it looks random..."

)

curve(

f(x),

xlim = c(1,10),

n = 1000,

main="...but it is not: it is a C^infinity function"

)

par(op)

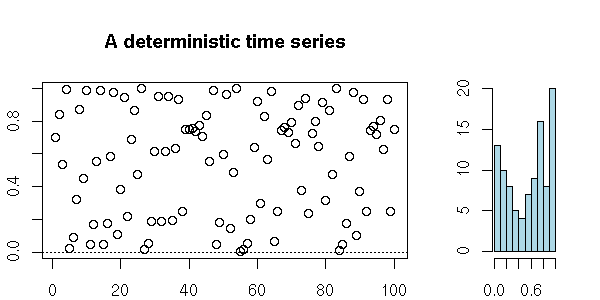

We have a few tests to check if a given time series actually is white noise.

The analysis of a time series mainly consists in finding out a recipe to build it (or to build a similar-looking series) from white noise, as we said in the introduction. But to find this recipe, we proceed in the other direction: we start with our time series and we try to transform it into something that looks like white noise. To check if our analysis is correct, we have to check that the residuals are indeed white noise (exactly as we did with regression). To this end, we can start to have a look at the ACF (on average, 5% of the values should be beyond the dashed lines -- if there are much more, there might be a problem).

z <- rnorm(200) op <- par(mfrow=c(2,1), mar=c(5,4,2,2)+.1) plot(ts(z)) acf(z, main = "") par(op)

x <- diff(co2) y <- diff(x,lag=12) op <- par(mfrow=c(2,1), mar=c(5,4,2,2)+.1) plot(ts(y)) acf(y, main="") par(op)

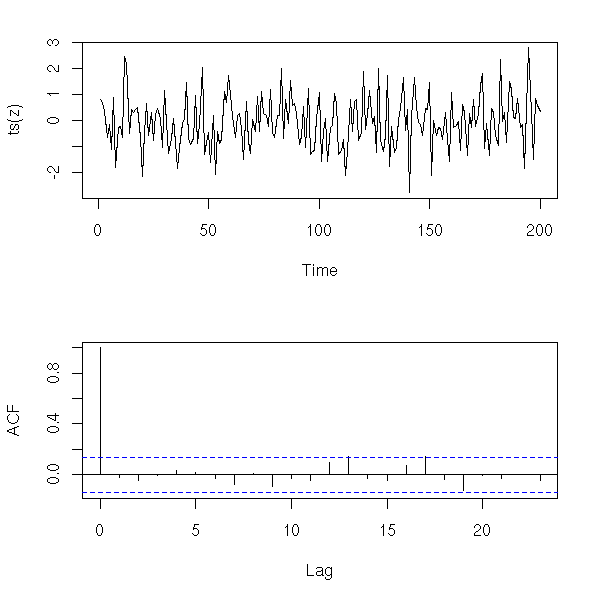

To have a numerical result (a p-value), we can perform a Box--Pierce or Ljung--Box test (these are also called "portmanteau statistics"): the idea is to consider the (weighted) sum of the first autocorrelation coefficients -- those sums (asymptotically) follow a chi^2 distribution (the Ljung-Box is a variant of the Box-Pierce one that gives a better Chi^2 approximation for small samples).

> Box.test(z) # Box-Pierce

Box-Pierce test

X-squared = 0.014, df = 1, p-value = 0.9059

> Box.test(z, type="Ljung-Box")

Box-Ljung test

X-squared = 0.0142, df = 1, p-value = 0.9051

> Box.test(y)

Box-Pierce test

X-squared = 41.5007, df = 1, p-value = 1.178e-10

> Box.test(y, type='Ljung')

Box-Ljung test

X-squared = 41.7749, df = 1, p-value = 1.024e-10

op <- par(mfrow=c(2,1))

plot.box.ljung <- function (

z,

k = 15,

main = "p-value of the Ljung-Box test",

ylab = "p-value"

) {

p <- rep(NA, k)

for (i in 1:k) {

p[i] <- Box.test(z, i,

type = "Ljung-Box")$p.value

}

plot(p,

type = 'h',

ylim = c(0,1),

lwd = 3,

main = main,

ylab = ylab)

abline(h = c(0,.05),

lty = 3)

}

plot.box.ljung(z, main="Random data")

plot.box.ljung(y, main="diff(diff(co2),lag=12)")

par(op)

There are other such tests: McLeod-Li, Turning-point, difference-sign, rank test, etc.

We can also use the Durbin--Watson we have already mentionned when we tackled regression.

TODO: check that I actually mention it. library(car) ?durbin.watson library(lmtest) ?dwtest

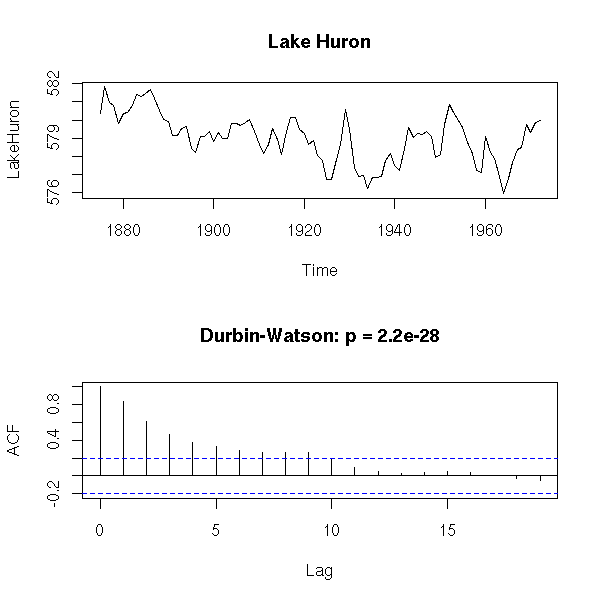

Here (it is the same test, but it is not implemented in the same way: the results may differ):

> dwtest(LakeHuron ~ 1)

Durbin-Watson test

data: LakeHuron ~ 1

DW = 0.3195, p-value = < 2.2e-16

alternative hypothesis: true autocorrelation is greater than 0

> durbin.watson(lm(LakeHuron ~ 1))

lag Autocorrelation D-W Statistic p-value

1 0.8319112 0.3195269 0

Alternative hypothesis: rho != 0

op <- par(mfrow=c(2,1))

library(lmtest)

plot(LakeHuron,

main = "Lake Huron")

acf(

LakeHuron,

main = paste(

"Durbin-Watson: p =",

signif( dwtest( LakeHuron ~ 1 ) $ p.value, 3 )

)

)

par(op)

n <- 200

x <- rnorm(n)

op <- par(mfrow=c(2,1))

x <- ts(x)

plot(x, main="White noise", ylab="")

acf(

x,

main = paste(

"Durbin-Watson: p =",

signif( dwtest( x ~ 1 ) $ p.value, 3)

)

)

par(op)

n <- 200

x <- rnorm(n)

op <- par(mfrow=c(2,1))

y <- filter(x,.8,method="recursive")

plot(y, main="AR(1)", ylab="")

acf(

y,

main = paste(

"p =",

signif( dwtest( y ~ 1 ) $ p.value, 3 )

)

)

par(op)

But beware: the default options tests wether the autocorrelation is positive or zero -- if it is significantly negative, the result will be misleading...

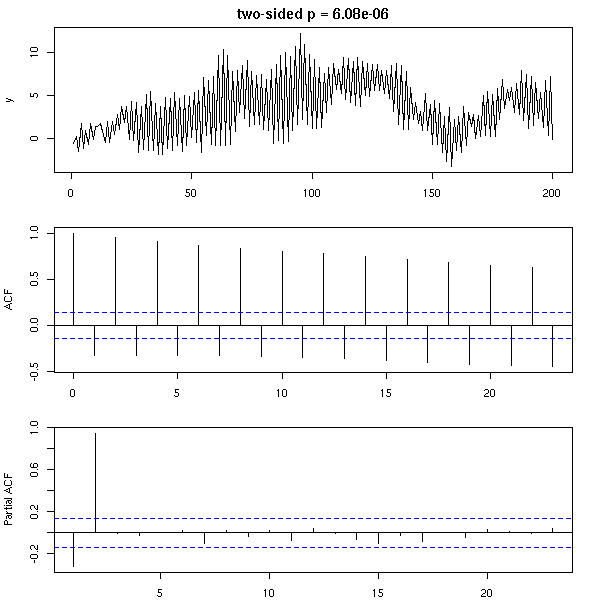

set.seed(1)

n <- 200

x <- rnorm(n)

y <- filter(x, c(0,1), method="recursive")

op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1)

plot(

y,

main = paste(

"one-sided DW test: p =",

signif( dwtest ( y ~ 1 ) $ p.value, 3 )

)

)

acf( y, main="")

pacf(y, main="")

par(op)

op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1)

res <- dwtest( y ~ 1, alternative="two.sided")

plot(

y,

main = paste(

"two-sided p =",

signif( res$p.value, 3 )

)

)

acf(y, main="")

pacf(y, main="")

par(op)

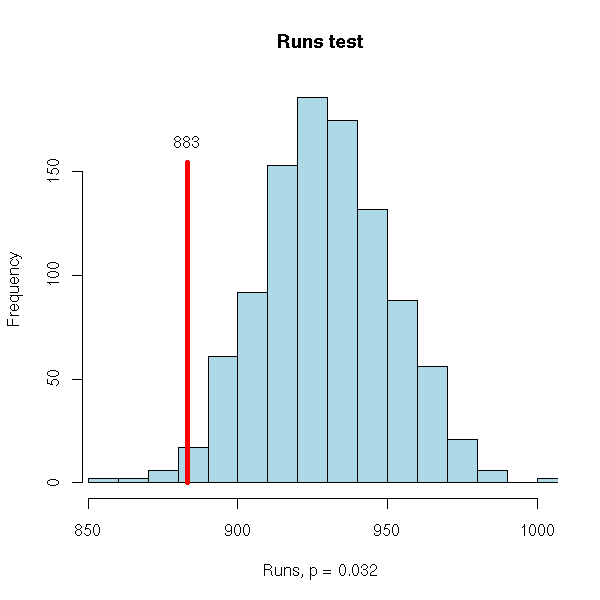

There are other tests, such as the runs test (that looks at the number of runs, a run being consecutive observations of the same sign; that kind of test is mainly used for qualitative time series or for time series that leave you completely clueless).

library(tseries) ?runs.test

or the Cowles-Jones test

TODO

A sequence is a pair of consecutive returns of the same sign; a

reversal is a pair of consecutive returns of opposite signs.

Their ratio, the Cowles-Jones ratio,

number of sequences

CJ = ---------------------

number of reversals

should be around one IF the drift is zero.

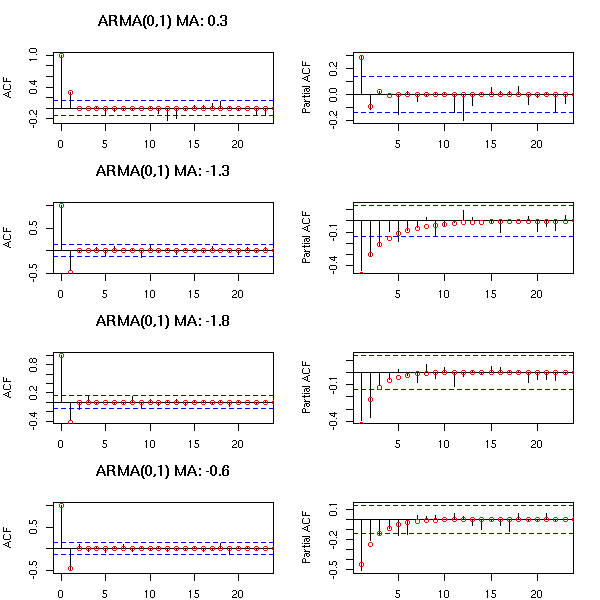

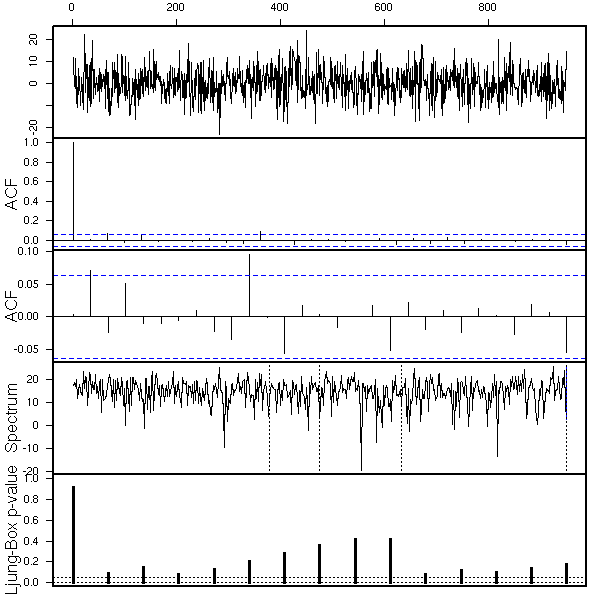

Actually, there is already a function, "tsdiag", that performs some tests on a time series (plot, ACF and Ljung-Box test).

data(co2) r <- arima( co2, order = c(0, 1, 1), seasonal = list(order = c(0, 1, 1), period = 12) ) tsdiag(r)

Classically, one tries to decompose a time series into a sum of three terms: a trend (often, an affine function), a seasonal component (a perdiodic function) and white noise.

In this section, we shall try to model time series from this idea, using classical statistical methods (mainly regression). Some of the procedures we shall present will be relevant, useful, but others will not work -- they are just ideas that one might think could work but turn out not to. Keep your eyes open!

TODO: give the structure of this section

We shall first start to model the time series to be studied with regression, as a polynomial plus a sine wave or, more generally, as a polynomial plus a periodic signal.

We shall then present modelling techniques based on the (exponential) moving average, that assume that the series locally looks like a constant plus noise or a linear function plus noise -- this is the Holt-Winters filter.

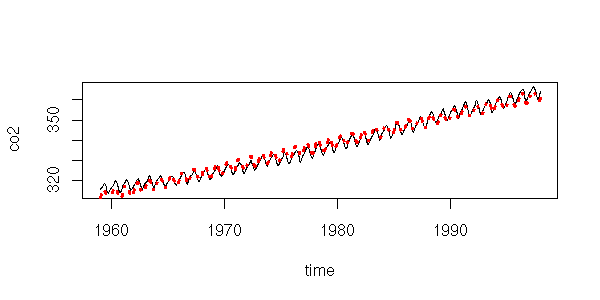

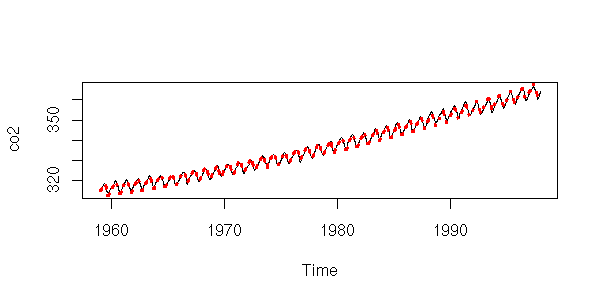

data(co2) plot(co2)

Here, we consider time as a predictive variable, as when we were playing with regressions. Let us first try to write the data as the sum of an affine function and a sine function.

y <- as.vector(co2) x <- as.vector(time(co2)) r <- lm( y ~ poly(x,1) + cos(2*pi*x) + sin(2*pi*x) ) plot(y~x, type='l', xlab="time", ylab="co2") lines(predict(r)~x, lty=3, col='red', lwd=3)

This is actually insufficient. It might not be that clear on this plot, but if you look at the residuals, it becomes obvious.

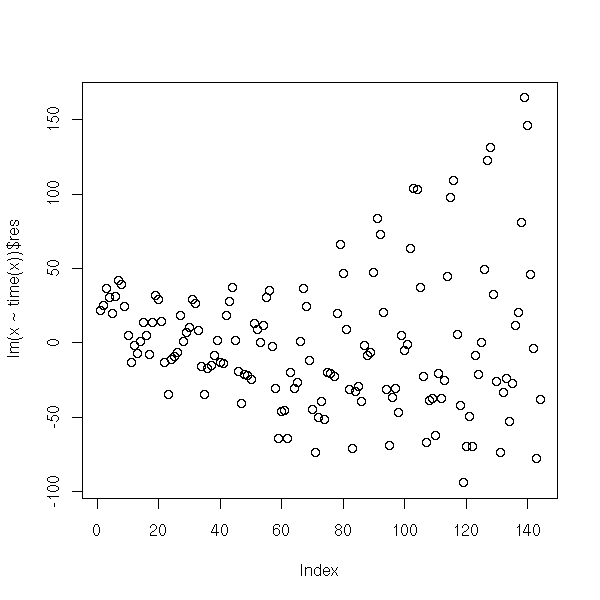

plot( y-predict(r),

main = "The residuals are not random yet",

xlab = "Time",

ylab = "Residuals" )

Let us complicate the model: a degree 2 polynomial plus a sine function (if it were not sufficient, we would replace the polynomial by splines) (we could also transform the time series, e.g., with a logarithm -- here, it does not work).

r <- lm( y ~ poly(x,2) + cos(2*pi*x) + sin(2*pi*x) ) plot(y~x, type='l', xlab="Time", ylab="co2") lines(predict(r)~x, lty=3, col='red', lwd=3)

That is better.

plot( y-predict(r),

main = "Better residuals -- but still not random",

xlab = "Time",

ylab = "Residuals" )

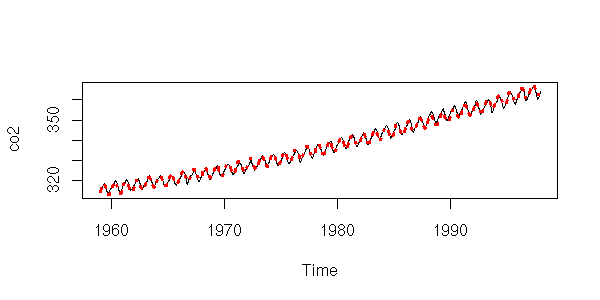

We could try to refine the periodic component, but it would not really improve things.

r <- lm( y ~ poly(x,2) + cos(2*pi*x) + sin(2*pi*x)

+ cos(4*pi*x) + sin(4*pi*x) )

plot(y~x, type='l', xlab="Time", ylab="co2")

lines(predict(r)~x, lty=3, col='red', lwd=3)

plot( y-predict(r),

type = 'l',

xlab = "Time",

ylab = "Residuals",

main = "Are those residuals any better?" )

However, the ACF suggests that those models are not as good as they seemed: if the residuals were really white noise, we would have very few values beyond the dashed lines...

r1 <- lm( y ~ poly(x,2) +

cos(2*pi*x) +

sin(2*pi*x) )

r2 <- lm( y ~ poly(x,2) +

cos(2*pi*x) +

sin(2*pi*x) +

cos(4*pi*x) +

sin(4*pi*x) )

op <- par(mfrow=c(2,1))

acf(y - predict(r1))

acf(y - predict(r2))

par(op)

Those plots tell us two things: first, after all, it was useful to refine the periodic component; second, we still have autocorrelation problems.

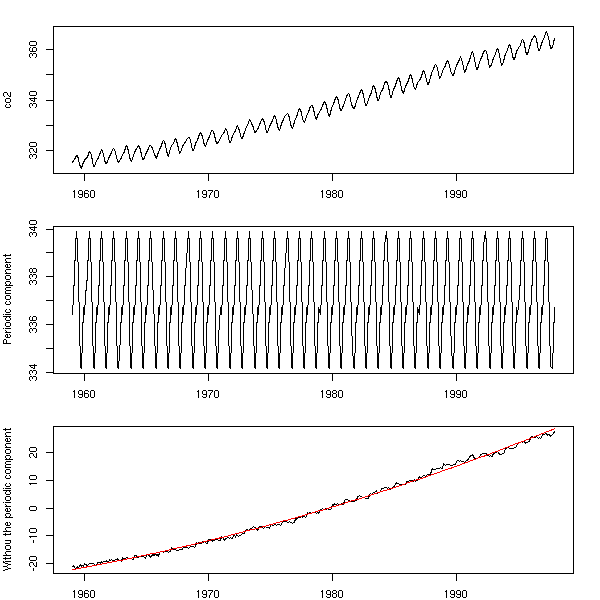

To estimate the periodic component, we could average the january values, then the february values, etc.

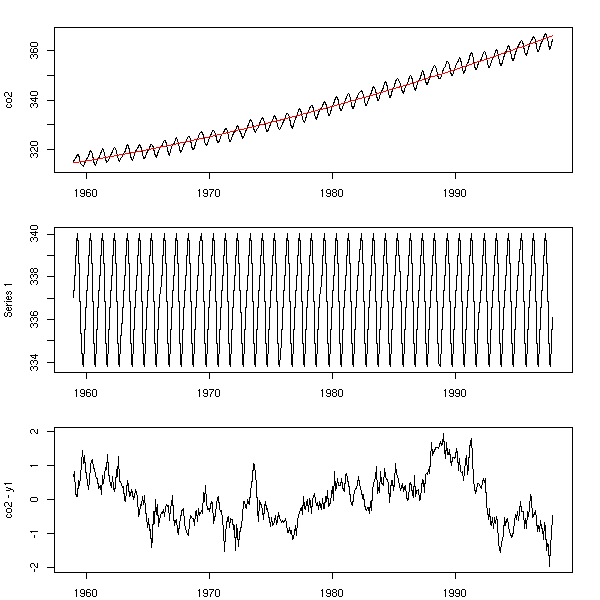

m <- tapply(co2, gl(12,1,length(co2)), mean) m <- rep(m, ceiling(length(co2)/12)) [1:length(co2)] m <- ts(m, start=start(co2), frequency=frequency(co2)) op <- par(mfrow=c(3,1), mar=c(2,4,2,2)) plot(co2) plot(m, ylab = "Periodic component") plot(co2-m, ylab = "Withou the periodic component") r <- lm(co2-m ~ poly(as.vector(time(m)),2)) lines(predict(r) ~ as.vector(time(m)), col='red') par(op)

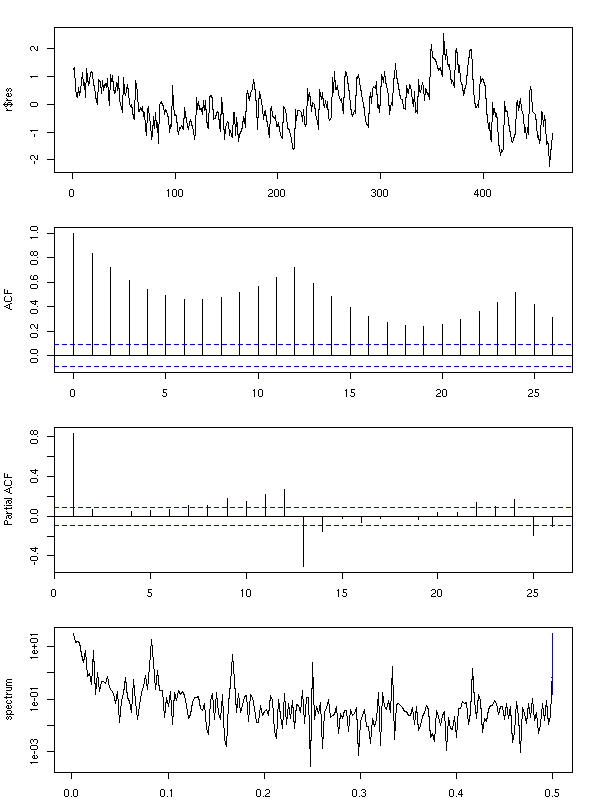

However, a look at the residuals tell us that the periodic component is still there...

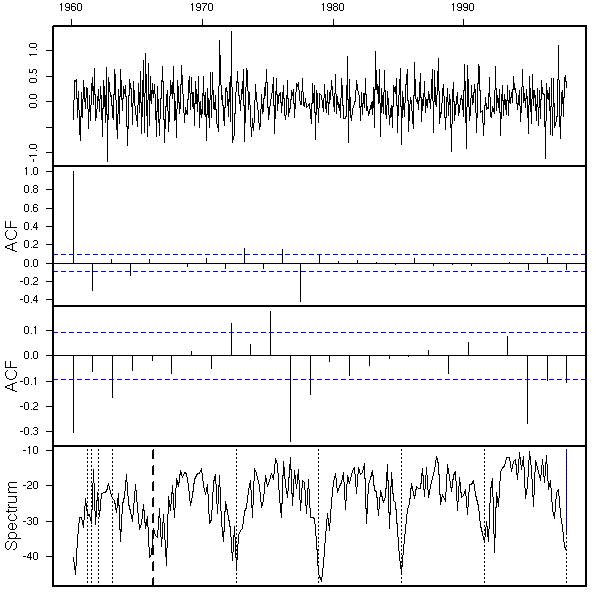

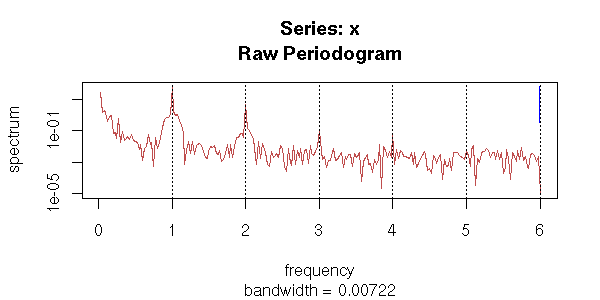

op <- par(mfrow=c(4,1), mar=c(2,4,2,2)+.1)

plot(r$res, type = "l")

acf(r$res, main="")

pacf(r$res, main="")

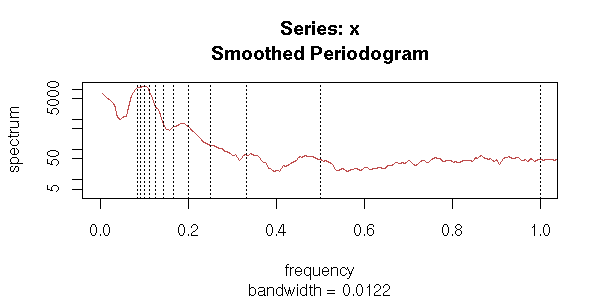

spectrum(r$res, col=par('fg'), main="")

abline(v=1:6, lty=3)

par(op)

Using the mean as above to estimate the periodic component would only work if the model were of the form

y ~ a + b x + periodic component.

But here, we have a quadratic term: averaging for each month will nt work. However, we can reuse the same idea with a single regression, to find both the periodic component and the trend. Here, the periodic component can be anything: we have 12 coefficients to estimate on top of those of the trend.

k <- 12

m <- matrix( as.vector(diag(k)),

nr = length(co2),

nc = k,

byrow = TRUE )

m <- cbind(m, poly(as.vector(time(co2)),2))

r <- lm(co2~m-1)

summary(r)

b <- r$coef

y1 <- m[,1:k] %*% b[1:k]

y1 <- ts(y1,

start=start(co2),

frequency=frequency(co2))

y2 <- m[,k+1:2] %*% b[k+1:2]

y2 <- ts(y2,

start=start(co2),

frequency=frequency(co2))

res <- co2 - y1 - y2

op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1)

plot(co2)

lines(y2+mean(b[1:k]) ~ as.vector(time(co2)),

col='red')

plot(y1)

plot(res)

par(op)

Here, the analysis is not finished: we still have to study the resulting noise -- but we did get rid of the periodic component.

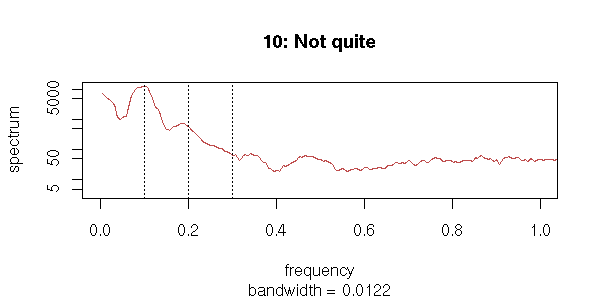

op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1)

acf(res, main="")

pacf(res, main="")

spectrum(res, col=par('fg'), main="")

abline(v=1:10, lty=3)

par(op)

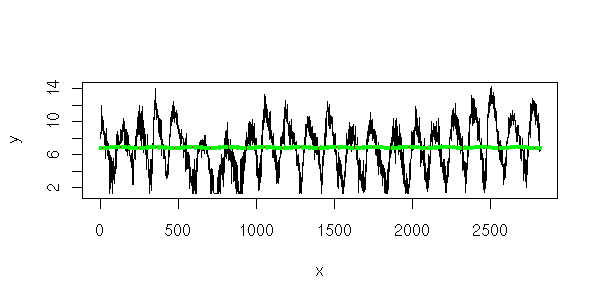

To pursue the example to its end, one is tempted to fit an AR(2) (an ARMA(1,1) would do, as well) to the residuals.

innovations <- arima(res, c(2,0,0))$residuals op <- par(mfrow=c(4,1), mar=c(3,4,2,2)) plot(innovations) acf(innovations) pacf(innovations) spectrum(innovations) par(op)

In the last example, if the period was longer, we would use splines to find a smoother periodic component -- and also to have fewer parameters: 15 was really a lot.

Exercise left to the reader...

To find the trend of a time series, you can simply "smooth" it, e.g., with a Moving Average (MA).

x <- co2 n <- length(x) k <- 12 m <- matrix( c(x, rep(NA,k)), nr=n+k-1, nc=k ) y <- apply(m, 1, mean, na.rm=T) y <- y[1:n + round(k/2)] y <- ts(y, start=start(x), frequency=frequency(x)) y <- y[round(k/4):(n-round(k/4))] yt <- time(x)[ round(k/4):(n-round(k/4)) ] plot(x, ylab="co2") lines(y~yt, col='red')

The Moving average will leave invariant some functions (e.g., polynomials of degree up to three -- choose the coefficients accordingly).

The "filter" function already does this. Do not forget the "side" argument: otherwise, it will use values from the past and the future -- for time series this is rarely desirable: it would introduce a look-ahead bias.

x <- co2 plot(x, ylab="co2") k <- 12 lines( filter(x, rep(1/k,k), side=1), col='red')

Actually, one should distinguish between "smoothing" with a Moving Average (to find the value at a point, one uses the whole sample, including what happend after that point) and "filtering" with a Moving Average (to find the smoothed value at a point, one only uses the information up to that point).

When we perform a usual regression, we are interested in the shape of our data in the whole interval. On the contrary, quite often, when we study time series, we are interested in what happens at the end of the interval -- and try to forecast beyond.

In the first case, we use smoothing: to compute the Moving Average at time t, we average over an interval centered on t. The drawback is that we cannot do that at the two ends of the interval: there will be missing values at the begining and the end.

In the second case, as we do want values at the end of the interval, we do not average on an interval centered on t, but on the values up to time t. The drawback is that this introduces a lag: we have the same values as before, but t/2 units later.

The "side" argument of the filter() function lest you choose between smoothing and filtering.

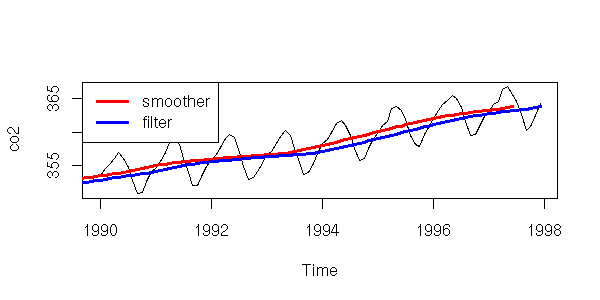

x <- co2

plot(window(x, 1990, max(time(x))), ylab="co2")

k <- 12

lines( filter(x, rep(1/k,k)),

col='red', lwd=3)

lines( filter(x, rep(1/k,k), sides=1),

col='blue', lwd=3)

legend(par('usr')[1], par('usr')[4], xjust=0,

c('smoother', 'filter'),

lwd=3, lty=1,

col=c('red','blue'))

Filters are especially used in real-time systems: we want the best estimation of some quantity using all the data collected so far, and we want to update this estimate when new data comes in.

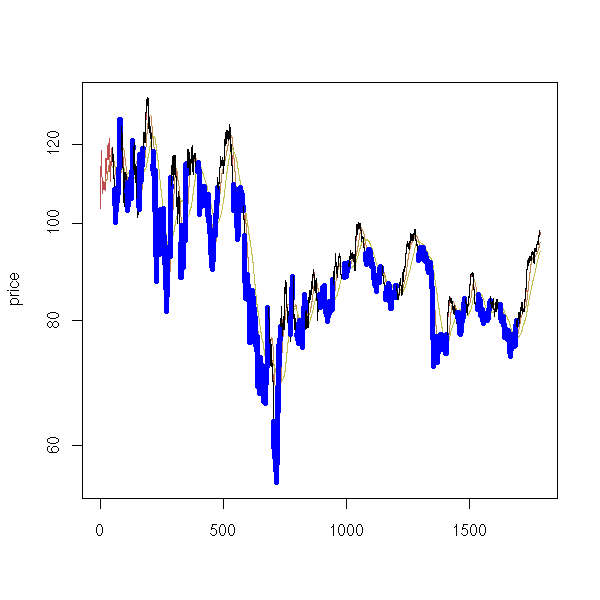

Here is a classical investment strategy (I do not claim it works): take a price time series, compute a 20-day moving average and a 50-day one; when the two curves intersect, buy or sell (finance people call the difference between those two moving averages an "oscillator" -- it is a time series that should wander around zero, that should revert to zero -- you can try to design you own oscillator: the strategy will be "buy when the oscillator is low, sell when it is high").

library(fBasics) # RMetrics

x <- yahooImport("s=IBM&a=11&b=1&c=1999&d=0&q=31&f=2000&z=IBM&x=.csv")

x <- as.numeric(as.character(x@data$Close))

x20 <- filter(x, rep(1/20,20), sides=1)

x50 <- filter(x, rep(1/50,50), sides=1)

matplot(cbind(x,x20,x50), type="l", lty=1, ylab="price", log="y")

segments(1:length(x), x[-length(x)], 1:length(x), x[-1], lwd=ifelse(x20>x50,1,5)[-1], col=ifelse(x20>x50,"black","blue")[-1])

Exercise: do the same with an exponential moving average.

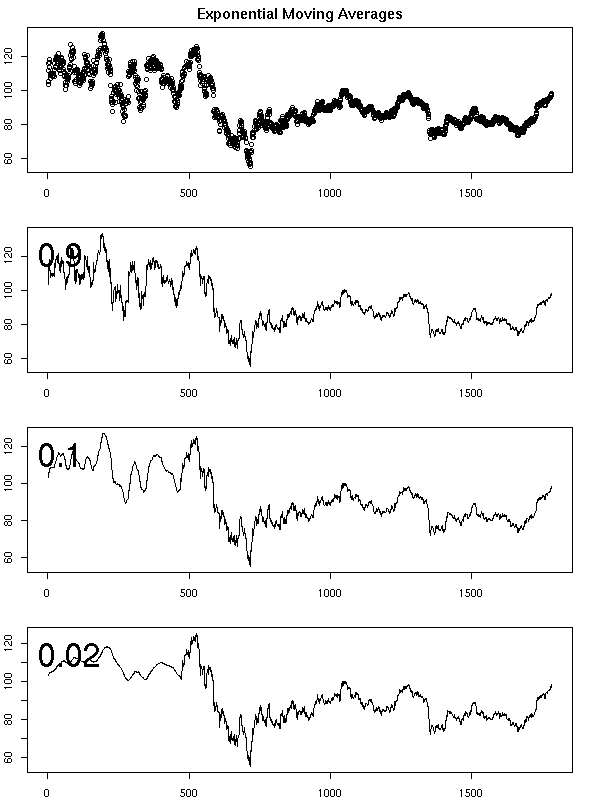

The moving average has a slight problem: it uses a window with sharp edges; an observation is either in the window or not. As a result, when large observations enter or leave the window, there is large jump in the moving average.

This is another moving average: instead of taking the N latest values, equally-weighted, we take all the preceding values and give a higher weight to the latest values.

exponential.moving.average <-

function (x, a) {

m <- x

for (i in 2:n) {

# Definition

# Exercise: use the "filter" function instead,

# with its "recursive" argument (that should be

# much, much faster)

m[i] <- a * x[i] + (1-a)*m[i-1]

}

m <- ts(m, start=start(x), frequency=frequency(x))

m

}

plot.exponential.moving.average <-

function (x, a=.9, ...) {

plot(exponential.moving.average(x,a), ...)

par(usr=c(0,1,0,1))

text(.02,.9, a, adj=c(0,1), cex=3)

}

op <- par(mfrow=c(4,1), mar=c(2,2,2,2)+.1)

plot(x, main="Exponential Moving Averages")

plot.exponential.moving.average(x, main="", ylab="")

plot.exponential.moving.average(x,.1, main="", ylab="")

plot.exponential.moving.average(x,.02, main="", ylab="")

par(op)

r <- x - exponential.moving.average(x,.02) op <- par(mfrow=c(4,1), mar=c(2,4,2,2)+.1) plot(r, main="Residuals of an exponential moving average") acf(r, main="") pacf(r, main="") spectrum(r, main="") abline(v=1:10,lty=3) par(op)

As with all moving average filters, there is a lag.

Actually, there is already a function to do this (it is a special case of the Holt-Winters filter -- we shall present the general case in a few moments):

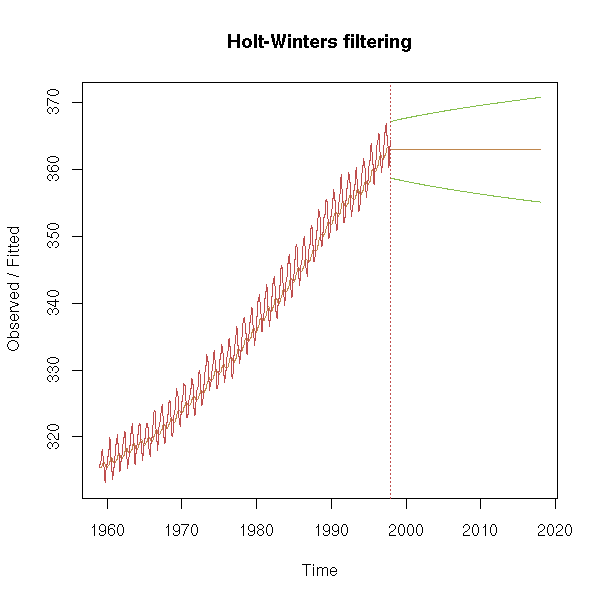

x <- co2 m <- HoltWinters(x, alpha=.1, beta=0, gamma=0) p <- predict(m, n.ahead=240, prediction.interval=T) plot(m, predicted.values=p)

y <- x20 > x50 op <- par(mfrow=c(5,1), mar=c(0,0,0,0), oma=.1+c(0,0,0,0)) plot(y, type="l", axes=FALSE); box() plot(filter(y, 50/100, "recursive", sides=1), axes=FALSE); box() plot(filter(y, 90/100, "recursive", sides=1), axes=FALSE); box() plot(filter(y, 95/100, "recursive", sides=1), axes=FALSE); box() plot(filter(y, 99/100, "recursive", sides=1), axes=FALSE); box() par(op)

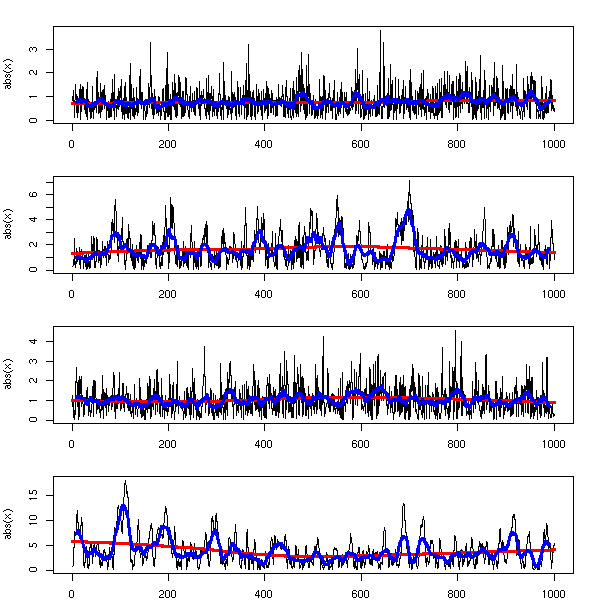

The "runing" function in the "gtools" package, the "rollFun" function in the "rollFun" in the "fMultivar" package (in RMetrics) and the "rollapply" function in the "zoo" package compute a statistic (mean, standard deviation, median, quantiles, etc.) on a moving window.

library(zoo)

op <- par(mfrow=c(3,1), mar=c(0,4,0,0), oma=.1+c(0,0,0,0))

plot(rollapply(zoo(x), 10, median, align="left"),

axes = FALSE, ylab = "median")

lines(zoo(x), col="grey")

box()

plot(rollapply(zoo(x), 50, sd, align="left"),

axes = FALSE, ylab = "sd")

box()

library(robustbase)

plot(sqrt(rollapply(zoo(x), 50, Sn, align="left")),

axes = FALSE, ylab = "Sn")

box()

par(op)

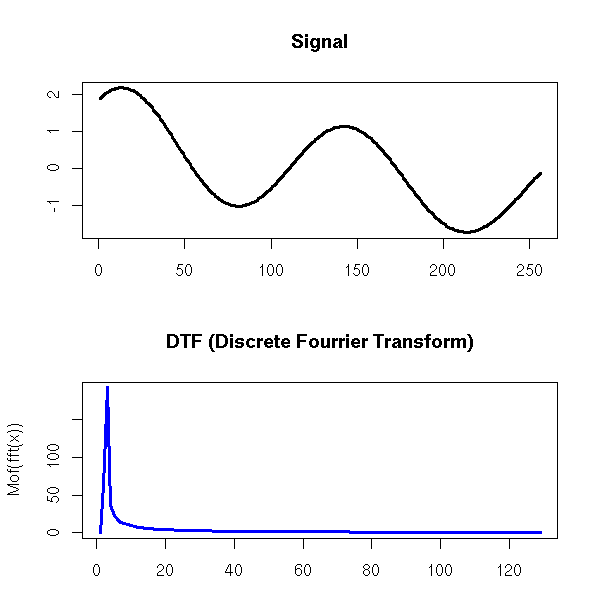

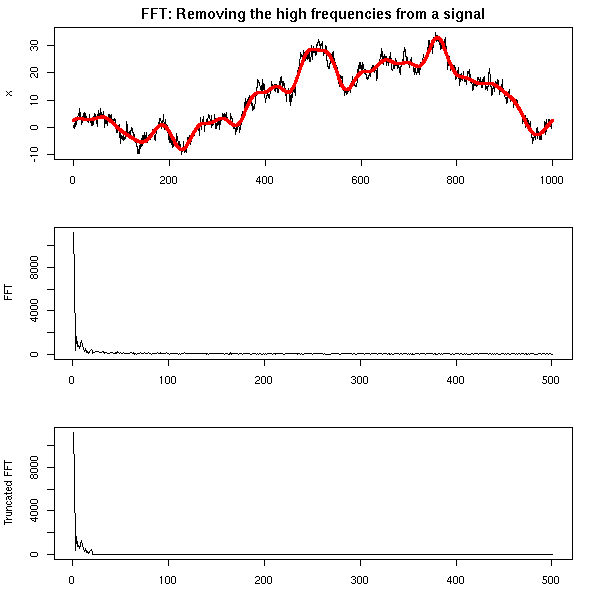

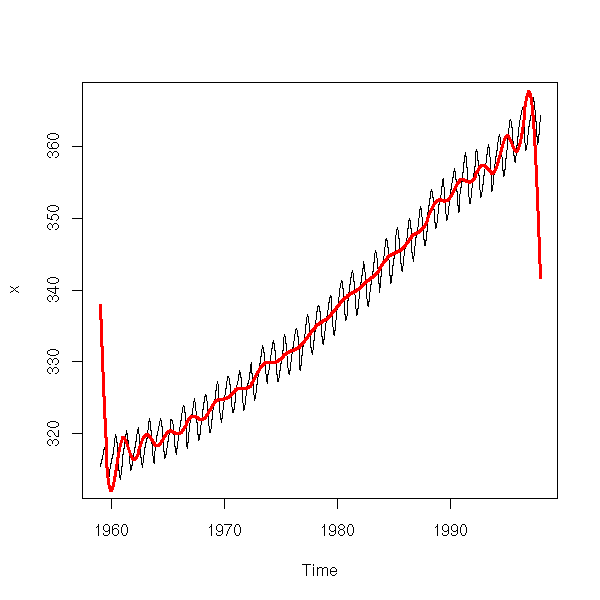

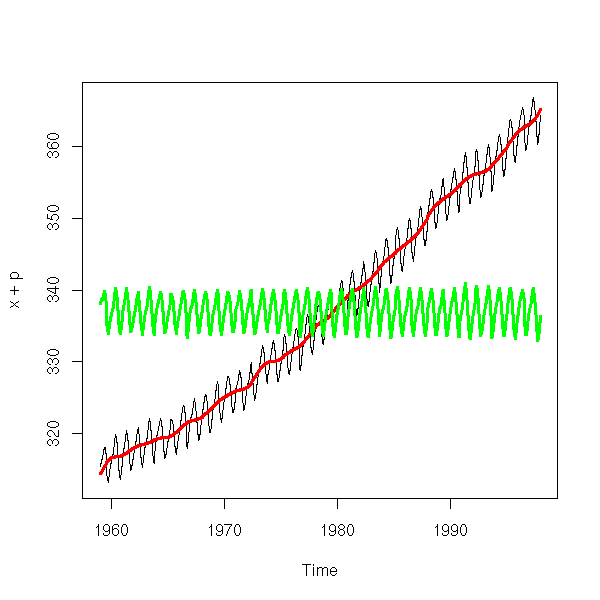

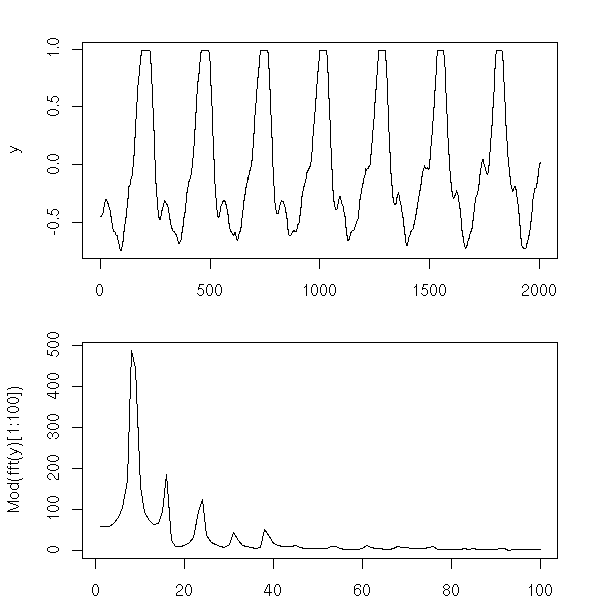

One can also find the trend of a time series by performing a Fourier transform and removing the high frequencies -- indeed, the moving averages we considered earlier are low-pass filters.

Exercise left to the reader

If a time series has a trend, if this trend is a polynomial of degree n, then you can transform this series into a trend-less one by differentiating it (using the discrete derivative) n times.

TODO: an example

To go back to the initial time series, you just have to integrate n times ("discrete integration" is just a complicated word for "cumulated sums" -- but, of course, you have to worry about the integration constants).

TODO: Explain the relations/confusions between trend, stationarity, integration, derivation.

TODO: Take a couple of (real-world) time series and apply them all the methods above, in order to compare them

The "stl" function performs a "Seasonal Decomposition of a Time Series by Loess".

In case you have forgotten, the "loess" function performs a local regression, i.e., for each value of the predictive variable, we take the neighbouring observations and perform a linear regression with them; the loess curve is the "envelope" of those regression lines.

op <- par(mfrow=c(3,1), mar=c(3,4,0,1), oma=c(0,0,2,0))

r <- stl(co2, s.window="periodic")$time.series

plot(co2)

lines(r[,2], col='blue')

lines(r[,2]+r[,1], col='red')

plot(r[,3])

acf(r[,3], main="residuals")

par(op)

mtext("STL(co2)", line=3, font=2, cex=1.2)

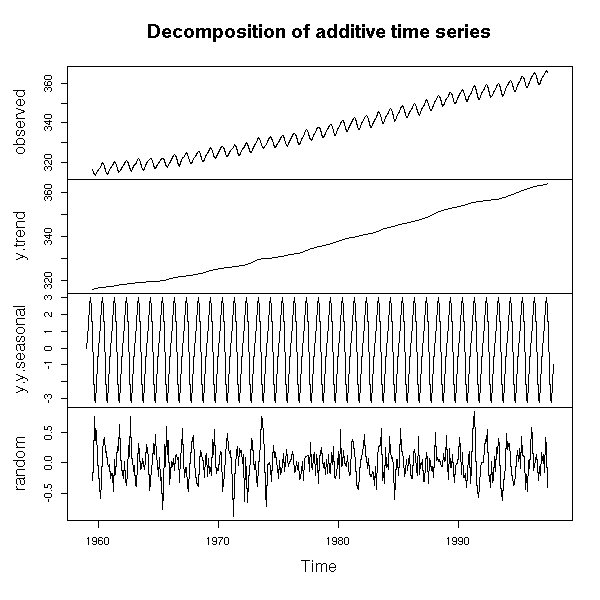

The "decompose" function has a similar purpose.

r <- decompose(co2) plot(r)

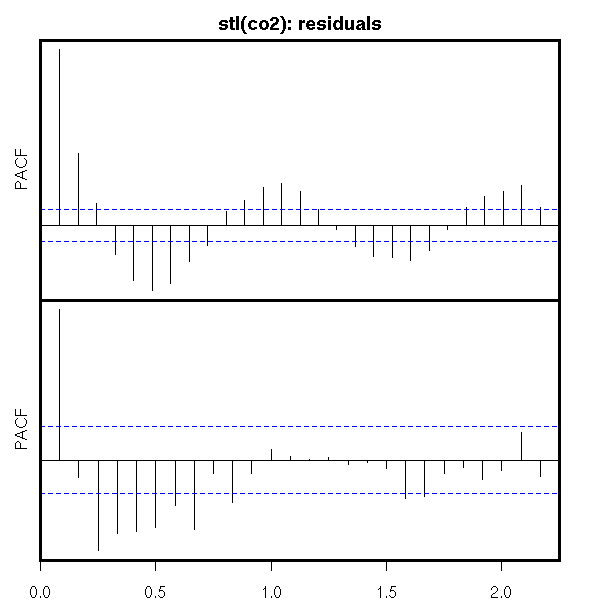

op <- par(mfrow=c(2,1), mar=c(0,2,0,2), oma=c(2,0,2,0))

acf(r$random, na.action=na.pass, axes=F, ylab="")

box(lwd=3)

mtext("PACF", side=2, line=.5)

pacf(r$random, na.action=na.pass, axes=F, ylab="")

box(lwd=3)

axis(1)

mtext("PACF", side=2, line=.5)

par(op)

mtext("stl(co2): residuals", line=2.5, font=2, cex=1.2)

This is a generalization of exponential filtering (which assumes there is no seasonal component). It has the same advantages: it is not very sensible to (not too drastic) structural changes.

For exponential filtering, we had used

a(t) = alpha * Y(t) + (1-alpha) * a(t-1).

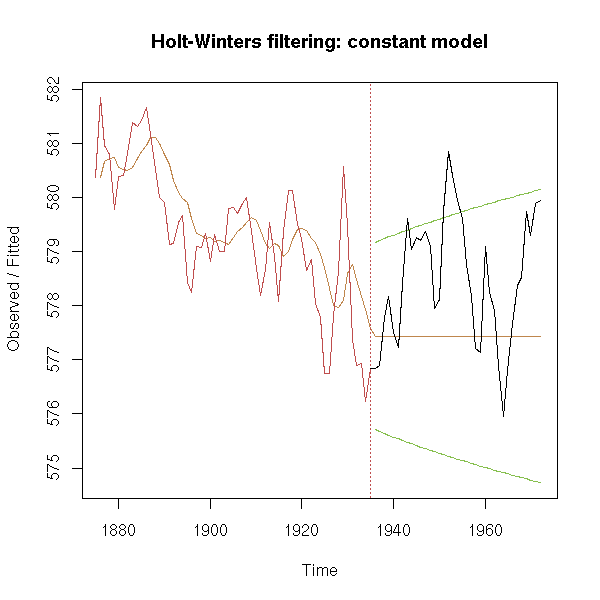

This is a good estimator of the future values of the time series if it is of the form Y(t) = Y_0 + noise, i.e., if the series is "a constant plus noise" (or even if it is just "locally constant").

This assumed that the series was locallly constant. Here is an example.

data(LakeHuron)

x <- LakeHuron

before <- window(x, end=1935)

after <- window(x, start=1935)

a <- .2

b <- 0

g <- 0

model <- HoltWinters(

before,

alpha=a, beta=b, gamma=g)

forecast <- predict(

model,

n.ahead=37,

prediction.interval=T)

plot(model, predicted.values=forecast,

main="Holt-Winters filtering: constant model")

lines(after)

We can add a trend: we model the data as a line, from the preceding values, giving more weight to the most recent values.

data(LakeHuron)

x <- LakeHuron

before <- window(x, end=1935)

after <- window(x, start=1935)

a <- .2

b <- .2

g <- 0

model <- HoltWinters(

before,

alpha=a, beta=b, gamma=g)

forecast <- predict(

model,

n.ahead=37,

prediction.interval=T)

plot(model, predicted.values=forecast,

main="Holt-Winters filtering: trend model")

lines(after)

Depending on the choice of beta, the forecasts can be very different...

data(LakeHuron)

x <- LakeHuron

op <- par(mfrow=c(2,2),

mar=c(0,0,0,0),

oma=c(1,1,3,1))

before <- window(x, end=1935)

after <- window(x, start=1935)

a <- .2

b <- .5

g <- 0

for (b in c(.02, .04, .1, .5)) {

model <- HoltWinters(

before,

alpha=a, beta=b, gamma=g)

forecast <- predict(

model,

n.ahead=37,

prediction.interval=T)

plot(model,

predicted.values=forecast,

axes=F, xlab='', ylab='', main='')

box()

text( (4*par('usr')[1]+par('usr')[2])/5,

(par('usr')[3]+5*par('usr')[4])/6,

paste("beta =",b),

cex=2, col='blue' )

lines(after)

}

par(op)

mtext("Holt-Winters filtering: different values for beta",

line=-1.5, font=2, cex=1.2)

You can also add a seosonal component (and additive or a multiplicative one).

TODO: Example TODO: A multiplicative example

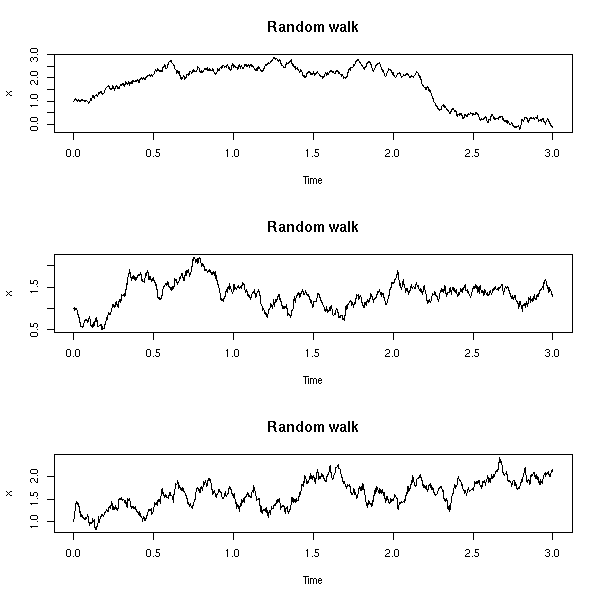

Before presenting general models whose interpretation may not be that straightforward (ARIMA, SARIMA), let us stop a moment to consider structural models, that are special cases of AR, MA, ARMA, ARIMA, SARIMA.

A random walk, with noise (a special case of ARIMA(0,1,1)):

X(t) = mu(t) + noise1

mu(t+1) = mu(t) + noise2

n <- 200

plot(ts(cumsum(rnorm(n)) + rnorm(n)),

main="Noisy random walk",

ylab="")

A random walk is simply "integrated noise" (as this is a discrete integration, we use the "cumsum" function). One can add some noise to a random walk: this is called a local level model.

We can also change the variance of those noises.

op <- par(mfrow=c(2,1),

mar=c(3,4,0,2)+.1,

oma=c(0,0,3,0))

plot(ts(cumsum(rnorm(n, sd=1))+rnorm(n,sd=.1)),

ylab="")

plot(ts(cumsum(rnorm(n, sd=.1))+rnorm(n,sd=1)),

ylab="")

par(op)

mtext("Noisy random walk", line=2, font=2, cex=1.2)

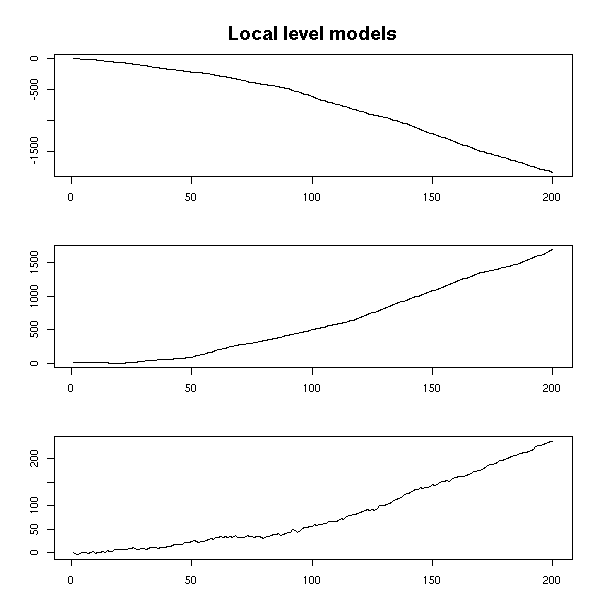

A noisy random walk, integrated, with added noise (ARIMA(0,2,2), aka local trend model):

X(t) = mu(t) + noise1

mu(t+1) = mu(t) + nu(t) + noise2 (level)

nu(t+1) = nu(t) + noise3 (slope)

n <- 200

plot(ts( cumsum( cumsum(rnorm(n))+rnorm(n) ) +

rnorm(n) ),

main = "Local trend model",

ylab="")

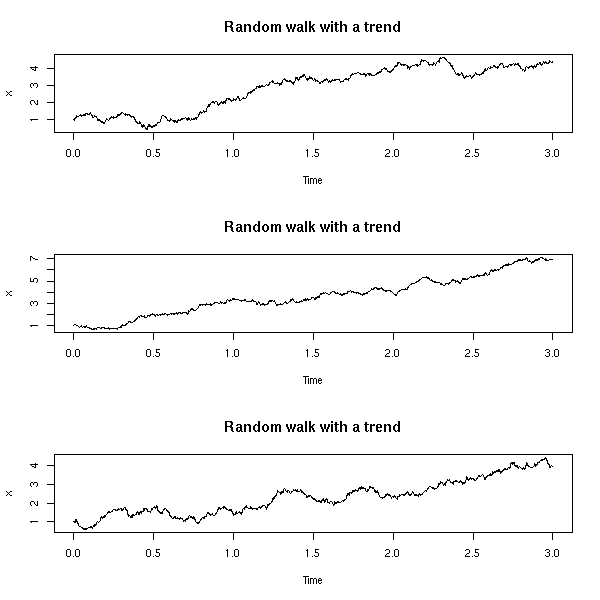

Here again, we can play and change the noise variances.

n <- 200

op <- par(mfrow=c(3,1),

mar=c(3,4,2,2)+.1,

oma=c(0,0,2,0))

plot(ts( cumsum( cumsum(rnorm(n,sd=1))+rnorm(n,sd=1) )

+ rnorm(n,sd=.1) ),

ylab="")

plot(ts( cumsum( cumsum(rnorm(n,sd=1))+rnorm(n,sd=.1) )

+ rnorm(n,sd=1) ),

ylab="")

plot(ts( cumsum( cumsum(rnorm(n,sd=.1))+rnorm(n,sd=1) )

+ rnorm(n,sd=1) ),

ylab="")

par(op)

mtext("Local level models", line=2, font=2, cex=1.2)

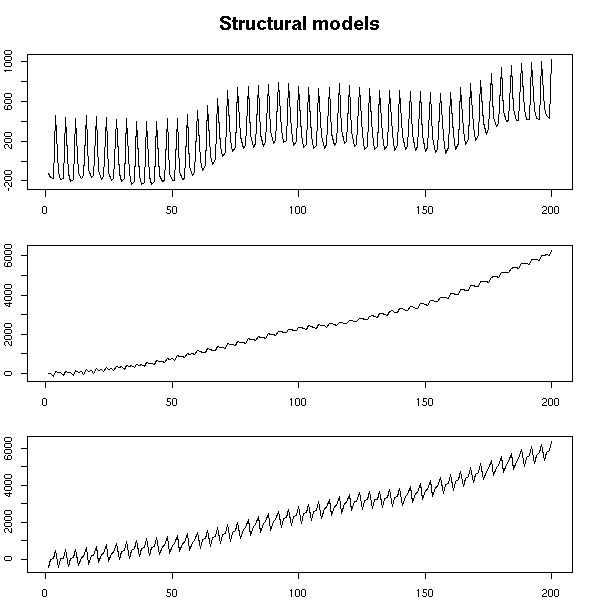

A noisy random walk, integrated, with added noise, to which we add a variable seasonal component.

X(t) = mu(t) + gamma(t) + noise1

mu(t+1) = mu(t) + nu(t) + noise2 (level)

nu(t+1) = nu(t) + noise2 (slope)

gamma(t+1) = -(gamma(t) + gamma(t-1) + gamma(t-2)) + noise4 (seasonal component)

structural.model <- function (

n=200,

sd1=1, sd2=1, sd3=1, sd4=1, sd5=200

) {

sd1 <- 1

sd2 <- 2

sd3 <- 3

sd4 <- 4

mu <- rep(rnorm(1),n)

nu <- rep(rnorm(1),n)

g <- rep(rnorm(1,sd=sd5),n)

x <- mu + g + rnorm(1,sd=sd1)

for (i in 2:n) {

if (i>3) {

g[i] <- -(g[i-1]+g[i-2]+g[i-3]) + rnorm(1,sd=sd4)

} else {

g[i] <- rnorm(1,sd=sd5)

}

nu[i] <- nu[i-1] + rnorm(1,sd=sd3)

mu[i] <- mu[i-1] + nu[i-1] + rnorm(1,sd=sd2)

x[i] <- mu[i] + g[i] + rnorm(1,sd=sd1)

}

ts(x)

}

n <- 200

op <- par(mfrow=c(3,1),

mar=c(2,2,2,2)+.1,

oma=c(0,0,2,0))

plot(structural.model(n))

plot(structural.model(n))

plot(structural.model(n))

par(op)

mtext("Structural models", line=2.5, font=2, cex=1.2)

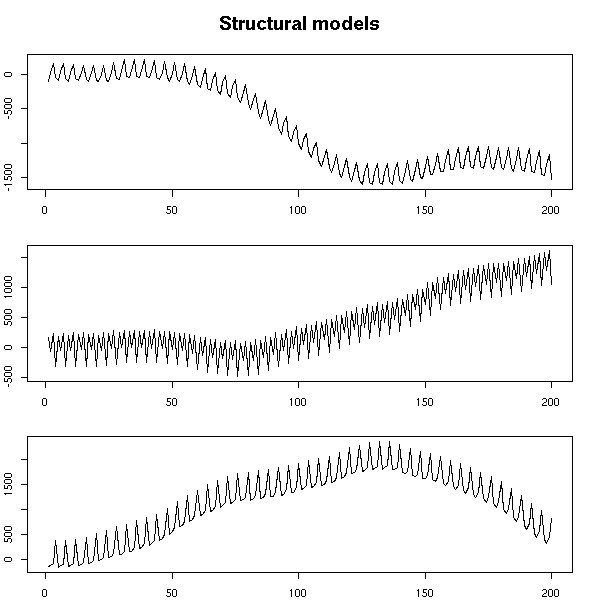

Here again, we can change the noise variance. If the seasonal component is not noisy, it is constant (but arbitrary).

n <- 200

op <- par(mfrow=c(3,1),

mar=c(2,2,2,2)+.1,

oma=c(0,0,2,0))

plot(structural.model(n,sd4=0))

plot(structural.model(n,sd4=0))

plot(structural.model(n,sd4=0))

par(op)

mtext("Structural models", line=2.5, font=2, cex=1.2)

You can model a time series along those models with the "StructTS" function.

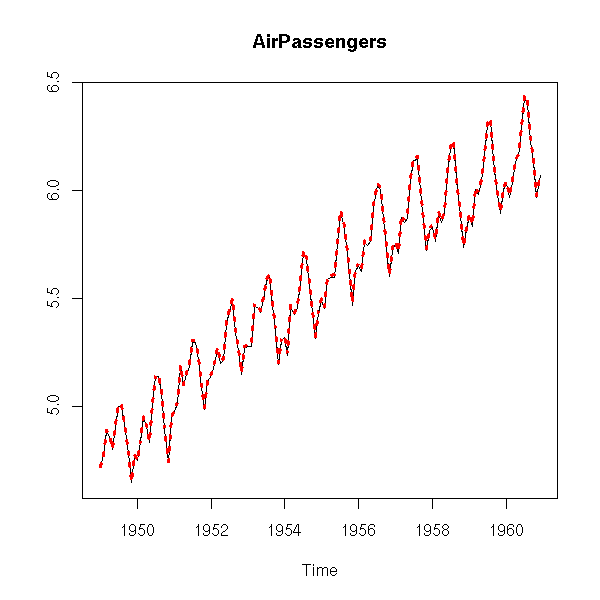

data(AirPassengers) plot(AirPassengers)

plot(log(AirPassengers))

x <- log(AirPassengers) r <- StructTS(x) plot(x, main="AirPassengers", ylab="") f <- apply(fitted(r), 1, sum) f <- ts(f, frequency=frequency(x), start=start(x)) lines(f, col='red', lty=3, lwd=3)

Here, the slope seems constant.

> r

Call:

StructTS(x = x)

Variances:

level slope seas epsilon

0.0007718 0.0000000 0.0013969 0.0000000

> summary(r$fitted[,"slope"])

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.000000 0.009101 0.010210 0.009420 0.010560 0.011040

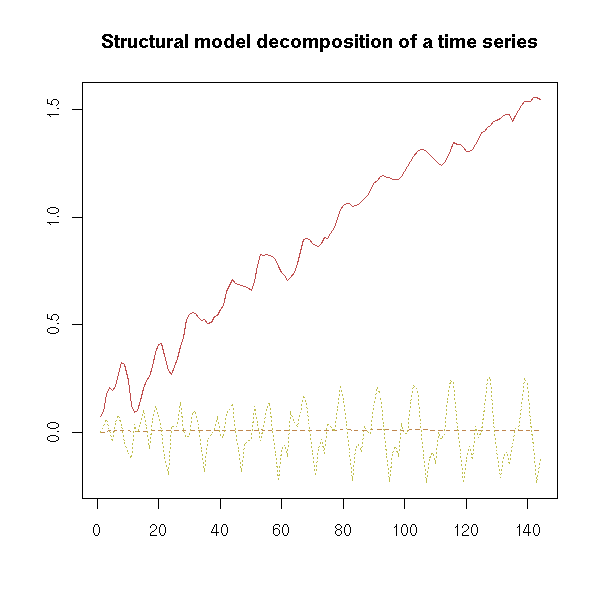

matplot(

(StructTS(x-min(x)))$fitted,

type = 'l',

ylab = "",

main = "Structural model decomposition of a time series"

)

You can also try to forecast future values (but it might not be that reliable).

l <- 1956

x <- log(AirPassengers)

x1 <- window(x, end=l)

x2 <- window(x, start=l)

r <- StructTS(x1)

plot(x)

f <- apply(fitted(r), 1, sum)

f <- ts(f, frequency=frequency(x), start=start(x))

lines(f, col='red')

p <- predict(r, n.ahead=100)

lines(p$pred, col='red')

lines(p$pred + qnorm(.025) * p$se,

col='red', lty=2)

lines(p$pred + qnorm(.975) * p$se,

col='red', lty=2)

title(main="Forecasting with a structural model (StructTS)")

TODO: put this at the end of the introduction?

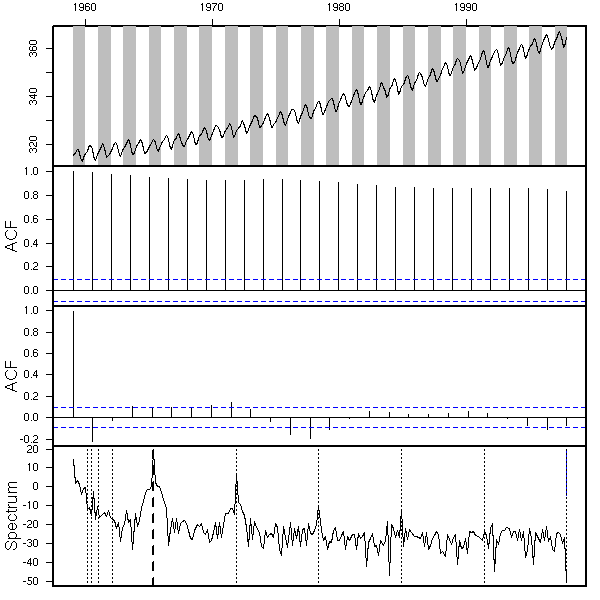

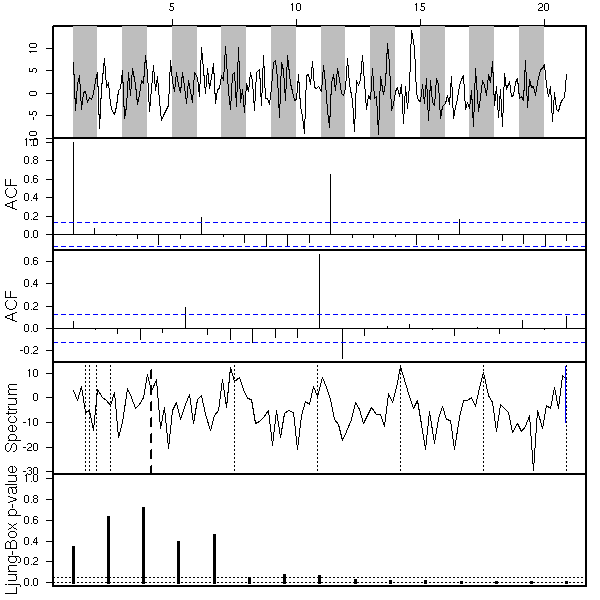

# A function to look at a time series

eda.ts <- function (x, bands=FALSE) {

op <- par(no.readonly = TRUE)

par(mar=c(0,0,0,0), oma=c(1,4,2,1))

# Compute the Ljung-Box p-values

# (we only display them if needed, i.e.,

# if we have any reason of

# thinking it is white noise).

p.min <- .05

k <- 15

p <- rep(NA, k)

for (i in 1:k) {

p[i] <- Box.test(

x, i, type = "Ljung-Box"

)$p.value

}

if( max(p)>p.min ) {

par(mfrow=c(5,1))

} else {

par(mfrow=c(4,1))

}

if(!is.ts(x))

x <- ts(x)

plot(x, axes=FALSE);

axis(2); axis(3); box(lwd=2)

if(bands) {

a <- time(x)

i1 <- floor(min(a))

i2 <- ceiling(max(a))

y1 <- par('usr')[3]

y2 <- par('usr')[4]

if( par("ylog") ){

y1 <- 10^y1

y2 <- 10^y2

}

for (i in seq(from=i1, to=i2-1, by=2)) {

polygon( c(i,i+1,i+1,i), c(y1,y1,y2,y2),

col='grey', border=NA )

}

lines(x)

}

acf(x, axes=FALSE)

axis(2, las=2)

box(lwd=2)

mtext("ACF", side=2, line=2.5)

pacf(x, axes=FALSE)

axis(2, las=2)

box(lwd=2)

mtext("ACF", side=2, line=2.5)

spectrum(x, col=par('fg'), log="dB",

main="", axes=FALSE )

axis(2, las=2)

box(lwd=2)

mtext("Spectrum", side=2, line=2.5)

abline(v=1, lty=2, lwd=2)

abline(v=2:10, lty=3)

abline(v=1/2:5, lty=3)

if( max(p)>p.min ) {

main <-

plot(p, type='h', ylim=c(0,1),

lwd=3, main="", axes=F)

axis(2, las=2)

box(lwd=2)

mtext("Ljung-Box p-value", side=2, line=2.5)

abline(h=c(0,.05),lty=3)

}

par(op)

}

data(co2)

eda.ts(co2, bands=T)

TODO: Put this section somewhere above

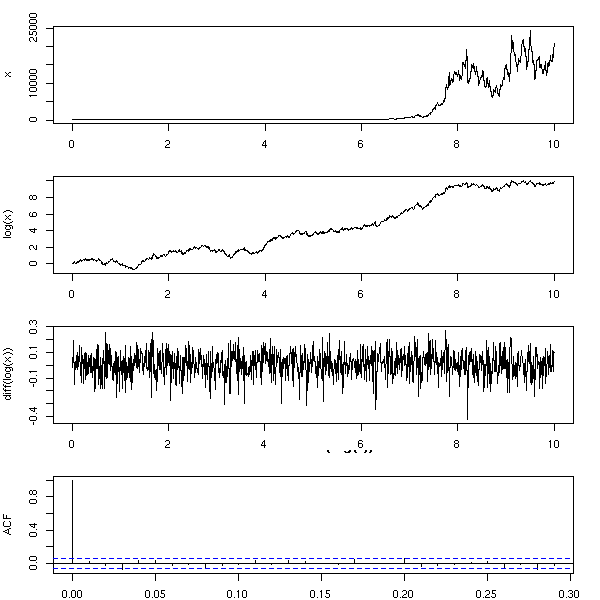

As for the regression, we first transform the data so that ot looks "nicer" (as usual, plot the histogram, density estimation, qqplot, etc., try a Box-Cox transformation). For instance, if you remark that the larger the value, the larger the variations, and if it is reasonable to think that the model could be "something deterministic plus noise", you can take the logarithm.

More generally, the aim of time series analysis is actually to transform the data so that it looks gaussian; the inverse of that transformation is then a model describing how the time series may have been produced from white noise.

Examples

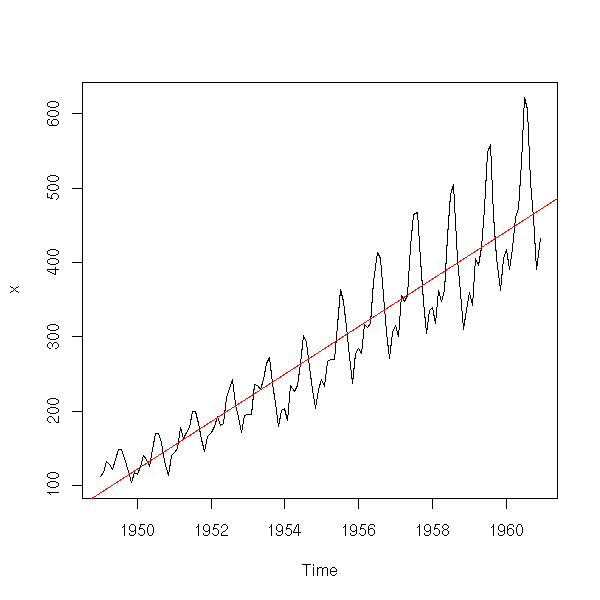

data(AirPassengers) x <- AirPassengers plot(x)

plot(x) abline(lm(x~time(x)), col='red')

The residuals or the first derivative suggest that the amplitude of the variations increase.

plot(lm(x~time(x))$res)

plot(diff(x))

Let us try with the logarithm.

x <- log(x) plot(x) abline(lm(x~time(x)),col='red')

plot(lm(x~time(x))$res)

plot(diff(x))

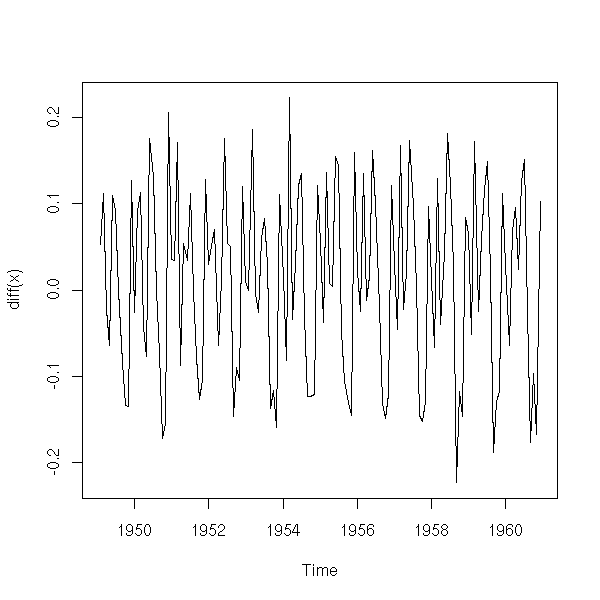

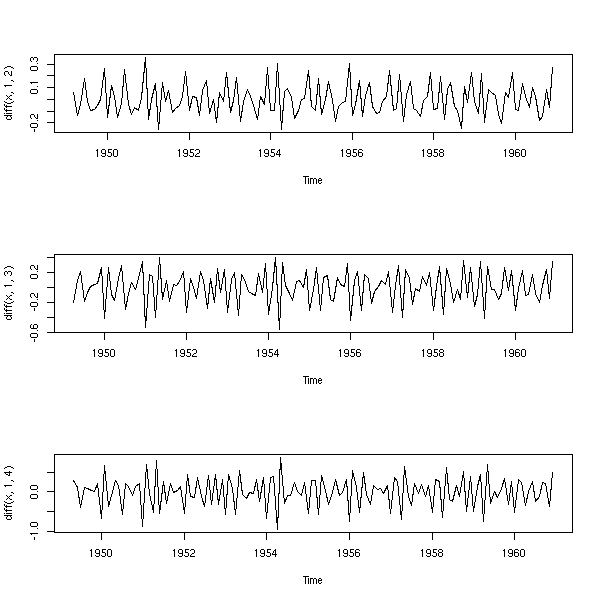

We could also have a look at the next derivatives.

op <- par(mfrow=c(3,1)) plot(diff(x,1,2)) plot(diff(x,1,3)) plot(diff(x,1,4)) par(op)

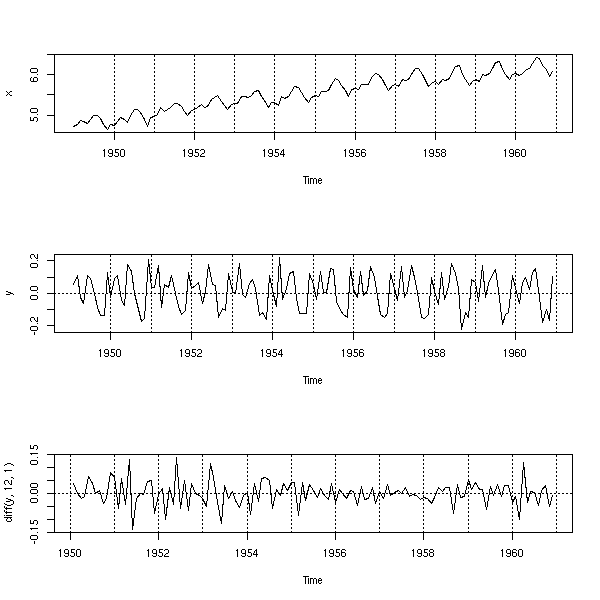

There seems to be a 12-month seasonal component.

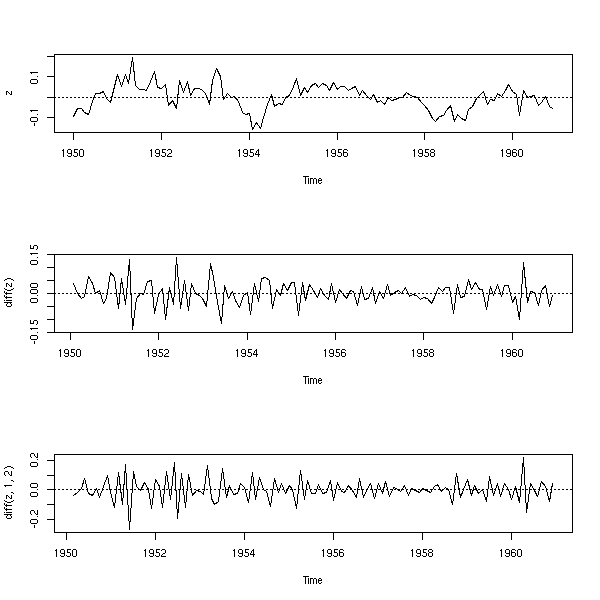

op <- par(mfrow=c(3,1)) plot(x) abline(h=0, v=1950:1962, lty=3) y <- diff(x) plot(y) abline(h=0, v=1950:1962, lty=3) plot(diff(y, 12,1)) abline(h=0, v=1950:1962, lty=3) par(op)

You can also use a regression to get rid of the up-trend.

op <- par(mfrow=c(3,1)) plot(x) abline(lm(x~time(x)), col='red', lty=2) abline(h=0, v=1950:1962, lty=3) y <- x - predict(lm(x~time(x))) plot(y) abline(h=0, v=1950:1962, lty=3) plot(diff(y, 12,1)) abline(h=0, v=1950:1962, lty=3) par(op)

Let us look at the residuals and differentiate.

z <- diff(y,12,1) op <- par(mfrow=c(3,1)) plot(z) abline(h=0,lty=3) plot(diff(z)) abline(h=0,lty=3) plot(diff(z,1,2)) abline(h=0,lty=3) par(op)

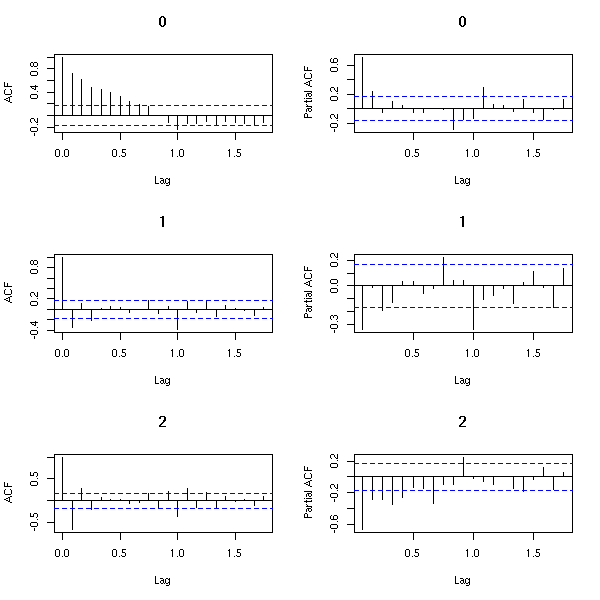

How many times should we differentiate?

k <- 3

op <- par(mfrow=c(k,2))

zz <- z

for(i in 1:k) {

acf(zz, main=i-1)

pacf(zz, main=i-1)

zz <- diff(zz)

}

par(op)

TODO: What is this?

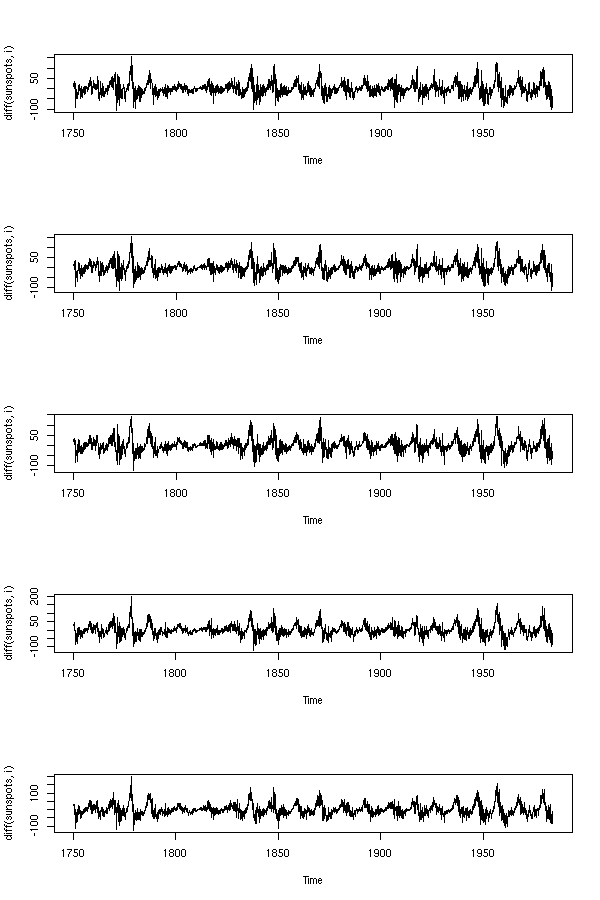

data(sunspots)

op <- par(mfrow=c(5,1))

for (i in 10+1:5) {

plot(diff(sunspots,i))

}

par(op)

The main difference between time series and the series of iid random variables we have been playing with up to now is the lack of independance. Correlation is one means of measuring that lack of independance (if the variables are gaussian, it is even an accurate means).

The AutoCorrelation Function (ACF) is the correlation between a term and the i-th preceding term

ACF(i) = Cor( X(t), X(t-i) )

If indeed this does not depend on the position t, the time series is said to be weakly stationary -- it is said to be strongly stationary if for all t, h, the joint distributions of (X(t),X(t+1),...,X(t+n)) and (X(t+h),X(t+h+1),...,X(t+h+n)) are the same.

In the following plot, most (1/20) of the values of the ACT should be between the dashed lines -- otherwise, there might be something else than gaussian noise in your data.

Strictly speaking, one should distinguish between the theoretical ACF, computed from a model, without any data and the Sample ACF (SACF), computed from a sample. Here, we compute the SACF of a realization of a model.

op <- par(mfrow=c(2,1), mar=c(2,4,3,2)+.1)

x <- ts(rnorm(200))

plot(x, main="gaussian iidrv",

xlab="", ylab="")

acf(x, main="")

par(op)

A real example:

op <- par(mfrow=c(2,1), mar=c(2,4,3,2)+.1) data(BJsales) plot(BJsales, xlab="", ylab="", main="BJsales") acf(BJsales, main="") par(op)

f <- 24 x <- seq(0,10, by=1/f) y <- sin(2*pi*x) y <- ts(y, start=0, frequency=f) op <- par(mfrow=c(4,1), mar=c(2,4,2,2)+.1) plot(y, xlab="", ylab="") acf(y, main="") pacf(y, main="") spectrum(y, main="", xlab="") par(op)

f <- 24 x <- seq(0,10, by=1/f) y <- x + sin(2*pi*x) + rnorm(10*f) y <- ts(y, start=0, frequency=f) op <- par(mfrow=c(4,1), mar=c(2,4,2,2)+.1) plot(y, xlab="", ylab="") acf(y, main="") pacf(y, main="") spectrum(y, main="", xlab="") par(op)

The ACF plot is called an "autocorrelogram". In the case where the observations are not evenly spaced, one can plot a variogram instead: (y(t_i)-y(t_j))^2 as a function of t_i-t_j.

TODO: plot with irregularly-spaced data TODO: smooth this plot.

Often, these are (regular) time series with missing values, In this case, for a given value of k=t_i-t_j (on the horizontal axis), we have several values. You can replace them by their mean.

TODO: example Take a time series, remove some of its values, compare the correlogram and the variogram.

(There is also an analogue of the variogram for discrete-valued time series: the lorelogram, based on the LOR -- Logarithm of Odds Ratio.)

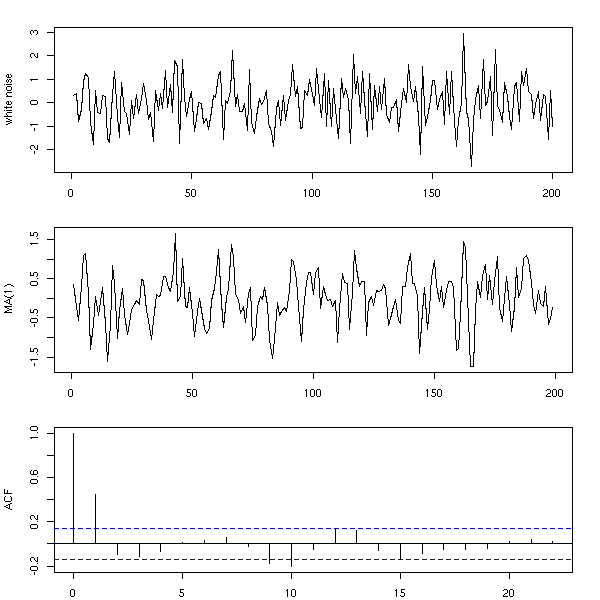

Here is a simple way of building a time series from a white noise: just perform a Moving Average (MA) of this noise.

n <- 200 x <- rnorm(n) y <- ( x[2:n] + x[2:n-1] ) / 2 op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="white noise") plot(ts(y), xlab="", ylab="MA(1)") acf(y, main="") par(op)

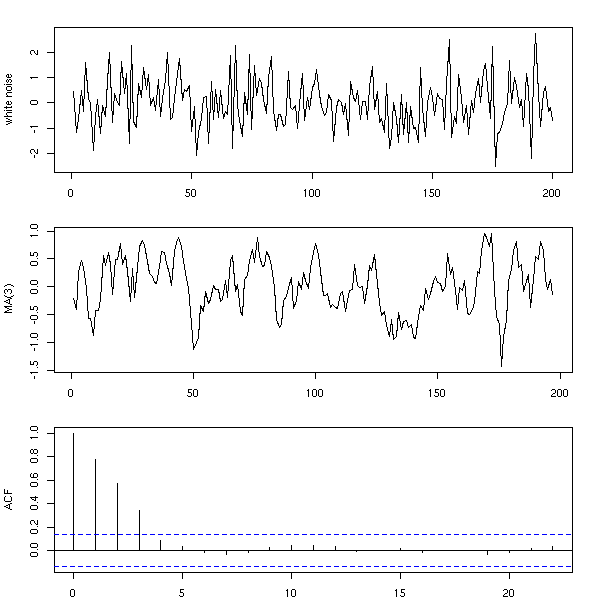

n <- 200 x <- rnorm(n) y <- ( x[1:(n-3)] + x[2:(n-2)] + x[3:(n-1)] + x[4:n] )/4 op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="white noise") plot(ts(y), xlab="", ylab="MA(3)") acf(y, main="") par(op)

You can also compute the moving average with different coefficients.

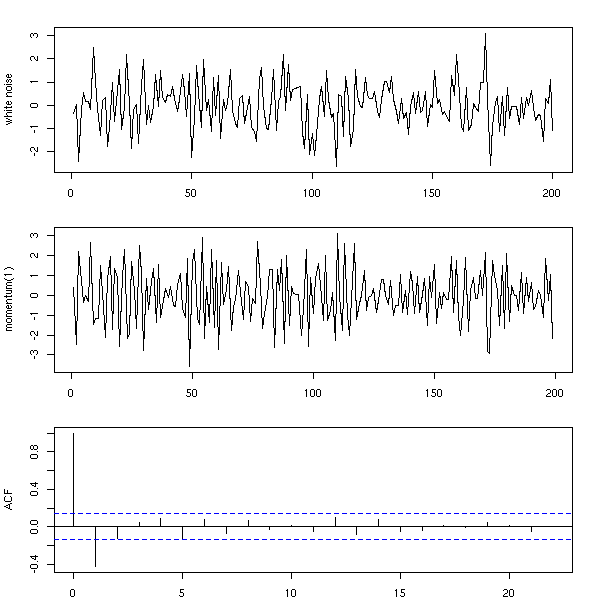

n <- 200 x <- rnorm(n) y <- x[2:n] - x[1:(n-1)] op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="white noise") plot(ts(y), xlab="", ylab="momentum(1)") acf(y, main="") par(op)

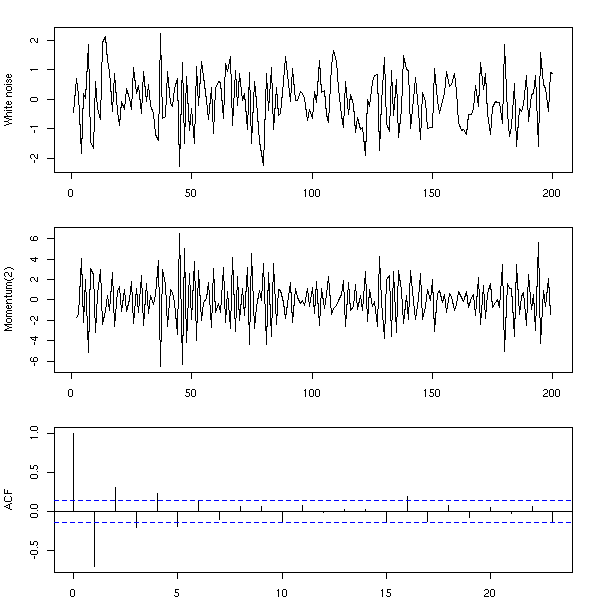

n <- 200 x <- rnorm(n) y <- x[3:n] - 2 * x[2:(n-1)] + x[1:(n-2)] op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="white noise") plot(ts(y), xlab="", ylab="Momentum(2)") acf(y, main="") par(op)

Instead of computing the moving average by hand, you can use the "filter" function.

n <- 200 x <- rnorm(n) y <- filter(x, c(1,-2,1)) op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="White noise") plot(ts(y), xlab="", ylab="Momentum(2)") acf(y, na.action=na.pass, main="") par(op)

TODO: the "side=1" argument.

Another means of building a time series is to compute each term by adding noise to the preceding term: this is called a random walk.

For instance,

n <- 200

x <- rep(0,n)

for (i in 2:n) {

x[i] <- x[i-1] + rnorm(1)

}This can be written, more simply, with the "cumsum" function.

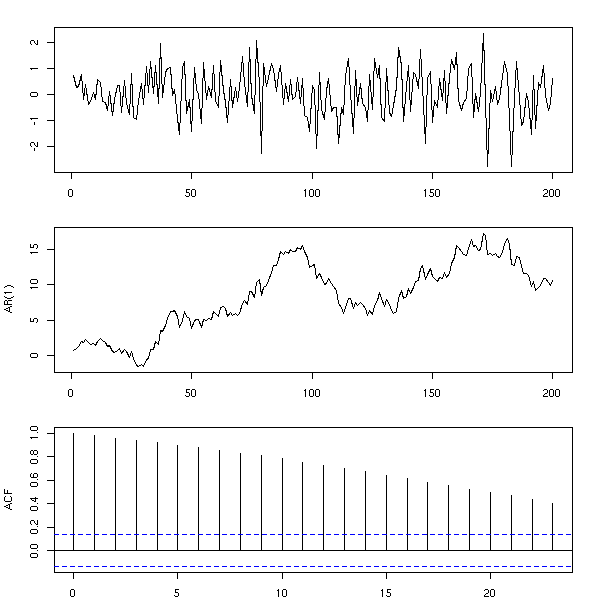

n <- 200 x <- rnorm(n) y <- cumsum(x) op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="") plot(ts(y), xlab="", ylab="AR(1)") acf(y, main="") par(op)

More generally, one can consider

X(n+1) = a X(n) + noise.

This is called an auto-regressive model, or AR(1), because one can estimate the coefficients by performing a regression of x against lag(x,1).

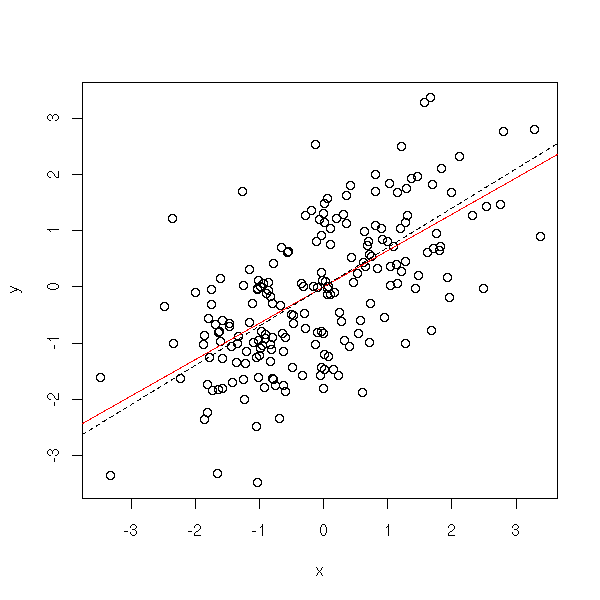

n <- 200

a <- .7

x <- rep(0,n)

for (i in 2:n) {

x[i] <- a*x[i-1] + rnorm(1)

}

y <- x[-1]

x <- x[-n]

r <- lm( y ~ x -1)

plot(y~x)

abline(r, col='red')

abline(0, .7, lty=2)

More generally, an AR(q) process is a process in which each term is a linear combination of the q preceding terms and a white noise (with fixed coefficients).

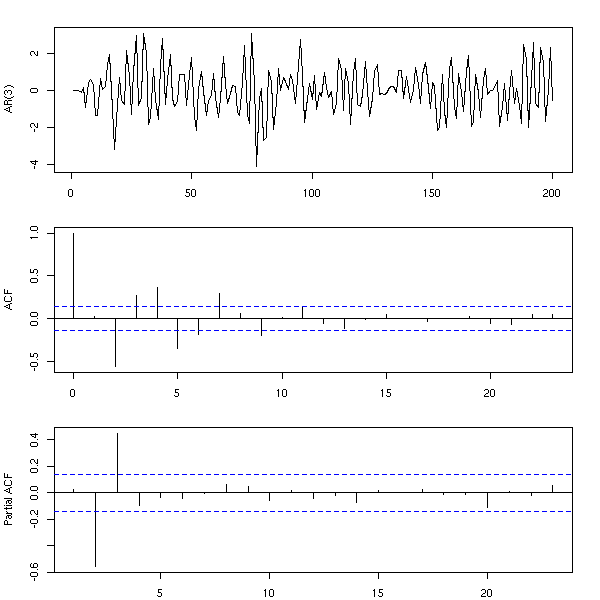

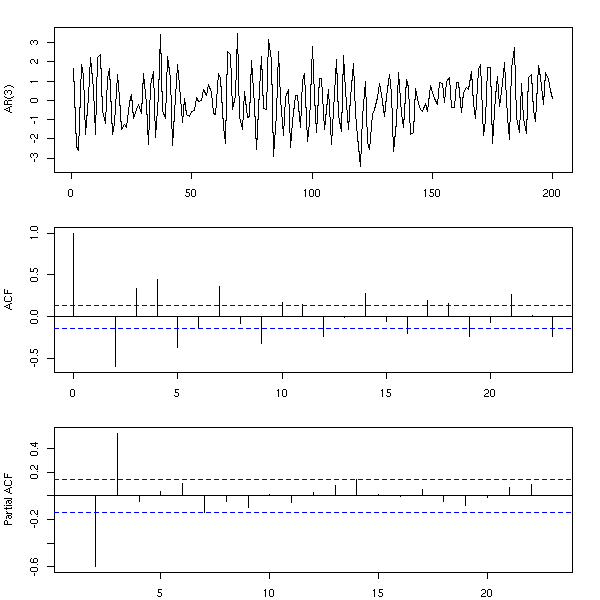

n <- 200

x <- rep(0,n)

for (i in 4:n) {

x[i] <- .3*x[i-1] -.7*x[i-2] + .5*x[i-3] + rnorm(1)

}

op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1)

plot(ts(x), xlab="", ylab="AR(3)")

acf(x, main="", xlab="")

pacf(x, main="", xlab="")

par(op)

You can also simulate those models with the "arima.sim" function.

n <- 200 x <- arima.sim(list(ar=c(.3,-.7,.5)), n) op <- par(mfrow=c(3,1), mar=c(2,4,2,2)+.1) plot(ts(x), xlab="", ylab="AR(3)") acf(x, xlab="", main="") pacf(x, xlab="", main="") par(op)

The partial AutoCorrelation Function (PACF) provides an estimation of the coefficients of an AR(infinity) model: we have already seen it on the previous examples. It can be easily computed from the autocorrelation function with the "Yule-Walker" equations.

To compute the auto-correlation function of an AR(p) process whose coefficients are known,

(1 - a1 B - a2 B^2 - ... - ap B^p) Y = Z

we just have to compute the first autocorrelations r1, r2, ..., rp, and then use the Yule-Walker equations:

r(j) = a1 r(j-1) + a2 r(j-2) + ... + ap r(j-p).

You can also use them in the other direction to compute the coefficients of an AR process from its autocorrelations.

A time series is said to be weakly stationary if the expectation of X(t) does not depend on t and if the covariance of X(t) and X(s) only depends on abs(t-s).

A time series is said to be stationary if all the X(t) have the same distribution and all the joint distribution of (X(t),X(s)) (for a given value of abs(s-t)) are the same. Thus, "weakly stationary" means "stationary up to the second order.

For instance, if your time series has a trend, i.e., if the expectation of X(t) is not constant, the series is not stationary.

n <- 200

x <- seq(0,2,length=n)

trend <- ts(sin(x))

plot(trend,

ylim=c(-.5,1.5),

lty=2, lwd=3, col='red',

ylab='')

r <- arima.sim(

list(ar = c(0.5,-.3), ma = c(.7,.1)),

n,

sd=.1

)

lines(trend+r)

Other example: a random walk is not stationary, because the variance of X(t) increases with t -- but the expectation of X(t) remains zero.

n <- 200

k <- 10

x <- 1:n

r <- matrix(nr=n,nc=k)

for (i in 1:k) {

r[,i] <- cumsum(rnorm(n))

}

matplot(x, r,

type = 'l',

lty = 1,

col = par('fg'),

main = "A random walk is not stationnary")

abline(h=0,lty=3)

Ergodicity and stationarity are two close but different notions.

Given a stochastic process X(n), (n integer), to compute the mean of X(1), we can use one of the following methods: either take several realizations of this process, each providing a value for X(1), and average those values; or take a single realization of this process and average X(1), X(2), X(3), etc.. The result we want is the first, but if we are lucky (if the process is ergodic), both will coincide.

Intuitively, a process is ergodic if, to get information that would require several realizations of the process, you can instead consider a single longer realization.

The practical interest of ergodic processes is that usually, when we study time series, we have a single realization of this time series. With an ergodic hypothesis, we can say something about it -- without it, we are helpless.

TODO: understand and explain the differences. For instance, a stationnary process need not be ergodic.

In an autoregressive (AR) process, it is reasonable to ask that past observations have less influence than more recent ones. That is why we ask, in the AR(1) model,

Y(t+1) = a * Y(t) + Z(t) (where Z is a white noise)

that abs(a)<1. This can also be written:

Y(t+1) - a Y(t) = Z(t)

or

phi(B) Y = Z (where phi(u) = 1 - a u

and B is the Backwards, operator,

aka shift, delay or lag operator)and we ask that all the roots of phi have a modulus greater than 1.

More generally, an AR process

phi(B) Y = Z where phi(u) = 1 - a_1 u - a_2 u^2 - ... - a_p u^p

is stationary if the modulus of all the roots of phi are greater that 1.

You can check that these stationary AR process are MA(infinity) processes (this is another meaning of the Yule--Walker equations).

TODO: understand and correct this.

Symetrically, for a Moving Average (MA) process, defined by

Y = psi(B) Z where psi(u) = 1 + b_1 u + b_2 u^3 + ... + b_q u^q

We shall also ask that modulus of the roots of psi be greater than 1. The process is then said to be invertible. Without this hypothesis, the autocorrelation function does not uniquely define the coefficients of the Moving Average.

For instance, for an MA(1) process,

Y(t+1) = Z(t+1) + a Z(t),

you can replace a by 1/a without changing the autocorrelation function.

n <- 200

ma <- 2

mai <- 1/ma

op <- par(mfrow=c(4,1), mar=c(2,4,1,2)+.1)

x <- arima.sim(list(ma=ma),n)

plot(x, xlab="", ylab="")

acf(x, xlab="", main="")

lines(0:n,

ARMAacf(ma=ma, lag.max=n),

lty=2, lwd=3, col='red')

x <- arima.sim(list(ma=mai),n)

plot(x, xlab="", ylab="")

acf(x, main="", xlab="")

lines(0:n,

ARMAacf(ma=mai, lag.max=n),

lty=2, lwd=3, col='red')

par(op)

TODO: an example with a higher degree polynomial (I naively thought I would just have to invert the roots of the polynomial, but apparently it is more complicated...)

sym.poly <- function (z,k) {

# Sum of the products of k

# distinct elements of the vector z

if (k==0) {

r <- 1

} else if (k==1) {

r <- sum(z)

} else {

r <- 0

for (i in 1:length(z)) {

r <- r + z[i]*sym.poly(z[-i],k-1)

}

r <- r/k # Each term appeared k times

}

r

}

sym.poly( c(1,2,3), 1 ) # 6

sym.poly( c(1,2,3), 2 ) # 11

sym.poly( c(1,2,3), 3 ) # 6

roots.to.poly <- function (z) {

n <- length(z)

p <- rep(1,n)

for (k in 1:n) {

p[n-k+1] <- (-1)^k * sym.poly(z,k)

}

p <- c(p,1)

p

}

roots.to.poly(c(1,2)) # 2 -3 1

round(

Re(polyroot( roots.to.poly(c(1,2,3)) )),

digits=1

)

# After this interlude, we can finally

# construct an MA process and one of

# its inverses

n <- 200

k <- 3

ma <- runif(k,-1,1)

# The roots

z <- polyroot(c(1,-ma))

# The inverse of the roots

zi <- 1/z

# The polynomial

p <- roots.to.poly(zi)

# The result should be real, but because

# of rounding errors, it is not.

p <- Re(p)

# We want the constant term to be 1.

p <- p/p[1]

mai <- -p[-1]

op <- par(mfrow=c(4,1), mar=c(2,4,1,2)+.1)

x <- arima.sim(list(ma=ma),n)

plot(x, xlab="")

acf(x, main="", xlab="")

lines(0:n, ARMAacf(ma=ma, lag.max=n),

lty=2, lwd=3, col='red')

x <- arima.sim(list(ma=mai),n)

plot(x, xlab="")

acf(x, main="", xlab="")

lines(0:n, ARMAacf(ma=mai, lag.max=n),

lty=2, lwd=3, col='red')

par(op)

The MA(p) processes have another interesting feature: they are AR(infinity) processes.

TODO: on an example, plot the roots of those polynomials.

TODO: To check if the series we are studying has unit roots.

library(tseries) ?adf.test ?pp.tests

Of course, one can mix up MA and AR models, to get the so-called ARMA models. In the following formulas, z is a white noise and x the series we are interested in.

MA(p): x(i) = a1 z(i-1) + a2 z(i-2) + ... + ap z(i-p) AR(q): x(i) - b1 x(i-1) - b2 x(i-2) - ... - bq x(i-q) = z(i) ARMA(p,q): x(i) - b1 x(i-1) - ... - bq x(i-q) = a1 z(i-1) + ... + ap z(i-p)

Remark: AR(q) processes are also MA(infinity) processes, we could replace ARMA processes by MA(infinity) processes, but we prefer having fewer coefficients to estimate.

Remark: Wold's theorem states that any stationary process can be written as the sum of an MA(infinity) process and a deterministic one.

TODO: I have not defined what a deterministic process was.

Remark: if we let B be the shift operator, so that the derivation operator be 1-B, an ARMA process can be written as

phi(B) X(t) = theta(B) Z(t)

where theta(B) = 1 + a1 B + a2 B^2 + ... + ap B^p

phi(B) = 1 - b1 B - b2 B^2 - ... - bq B^q

Z is a white noiseARMA processes give a good approximation to most stationary processes. As a result, you can use the estimated ARMA coefficients as a statistical summary of a stationary process, exactly as the mean and the quantiles for univariate statistical series. They might be useful to forecast future values, but they provide little information as the the underlying mechanisms that produced the time series.

An ARMA(p,q) process

phi(B) Y = theta(B) Z

in which phi and theta have a root in common can be written, more simply, as an ARMA(p-1,q-1).

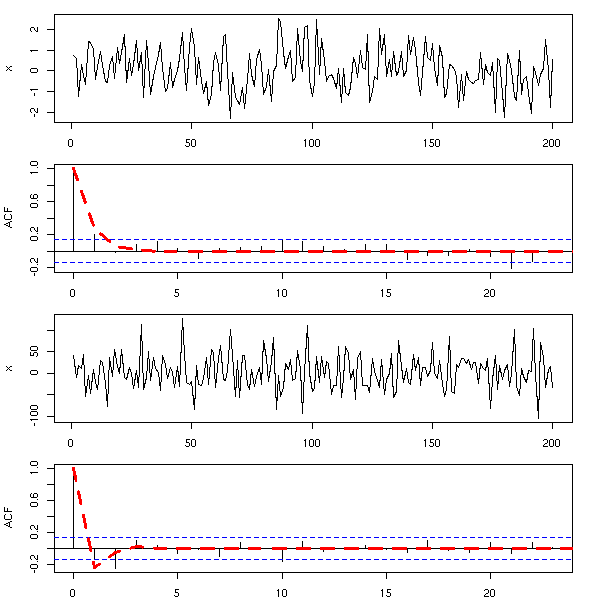

To model a time series as an ARMA model, it has to be stationnary. To get a stationary series (in short, to get rid of the trend), you can try to differentiate it.

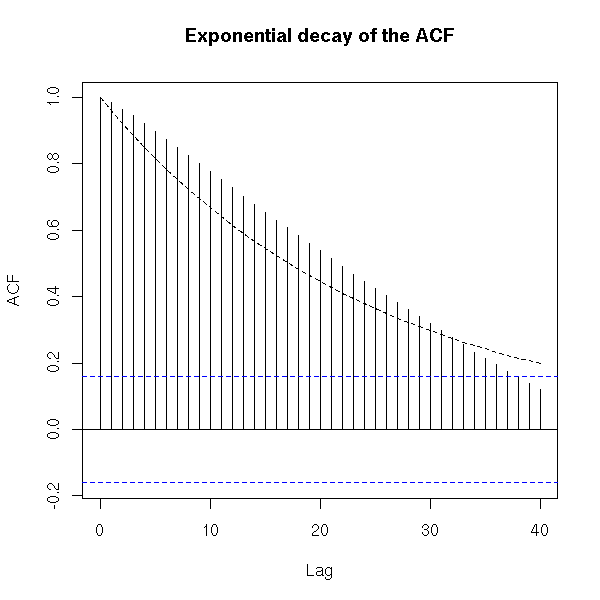

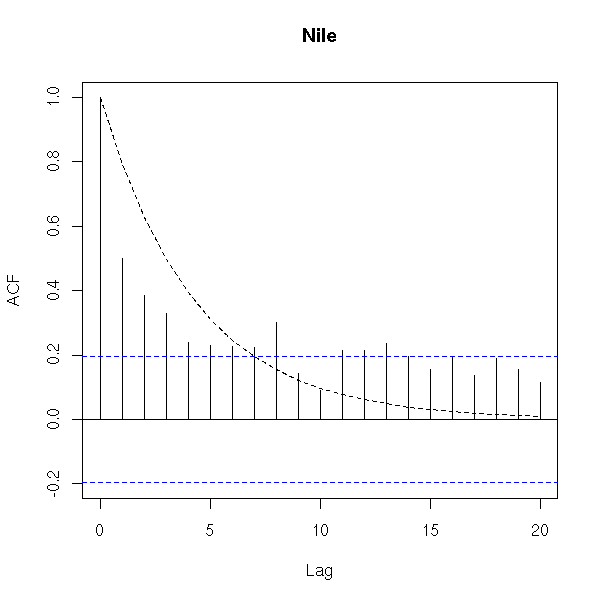

The fact that the series is not stationary is usually obvious on the plot. You can also see it on the ACF: if the series is stationary, the ACF should rapidly (usually exponentially) decay to zero.

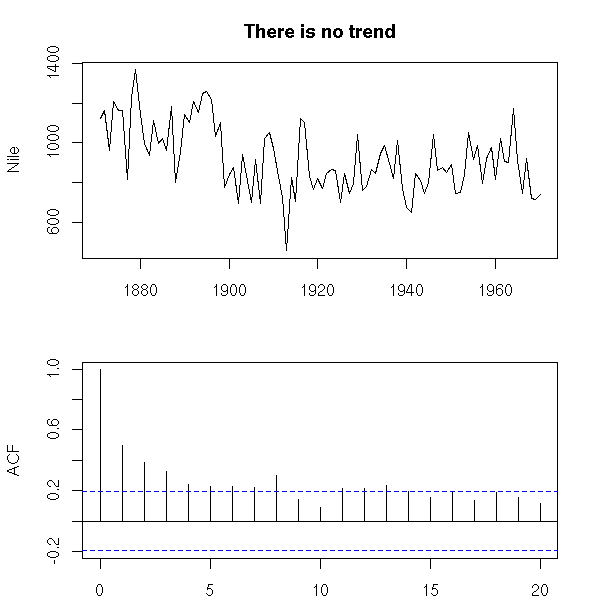

data(Nile) op <- par(mfrow=c(2,1), mar=c(2,4,3,2)+.1) plot(Nile, main="There is no trend", xlab="") acf(Nile, main="", xlab="") par(op)

data(BJsales)

op <- par(mfrow=c(3,1), mar=c(2,4,3,2)+.1)

plot(BJsales, xlab="",

main="The trend disappears if we differentiate")

acf(BJsales, xlab="", main="")

acf(diff(BJsales), xlab="", main="",

ylab="ACF(diff(BJsales)")

par(op)

n <- 2000

x <- arima.sim(

model = list(

ar = c(.3,.6),

ma = c(.8,-.5,.2),

order = c(2,1,3)),

n

)

x <- ts(x)

op <- par(mfrow=c(3,1), mar=c(2,4,3,2)+.1)

plot(x, main="It suffices to differentiate once",

xlab="", ylab="")

acf(x, xlab="", main="")

acf(diff(x), xlab="", main="",

ylab="ACF(diff(x))")

par(op)

n <- 10000

x <- arima.sim(

model = list(

ar = c(.3,.6),

ma = c(.8,-.5,.2),

order = c(2,2,3)

),

n

)

x <- ts(x)

op <- par(mfrow=c(4,1), mar=c(2,4,3,2)+.1)

plot(x, main="One has to differentiate twice",

xlab="", ylab="")

acf(x, main="", xlab="")

acf(diff(x), main="", xlab="",

ylab="ACF(diff(x))")

acf(diff(x,differences=2), main="", xlab="",

ylab="ACF(diff(diff(x)))")

par(op)

To check more precisely if the ACF decreases exponentially, one could perform a regression (but it might be overkill).

acf.exp <- function (x, lag.max=NULL, lag.max.reg=lag.max, ...) {

a <- acf(x, lag.max=lag.max.reg, plot=F)

b <- acf(x, lag.max=lag.max, ...)

r <- lm( log(a$acf) ~ a$lag -1)

lines( exp( b$lag * r$coef[1] ) ~ b$lag, lty=2 )

}

data(BJsales)

acf.exp(BJsales,

main="Exponential decay of the ACF")

acf.exp(BJsales,

lag.max=40,

main="Exponential decay of the ACF")

data(Nile) acf.exp(Nile, lag.max.reg=10, main="Nile")

Differentiating can also help you get rid of the seasonal component, if you differentiate with a lag: e.g., take the difference between the value today and the same value one year ago.

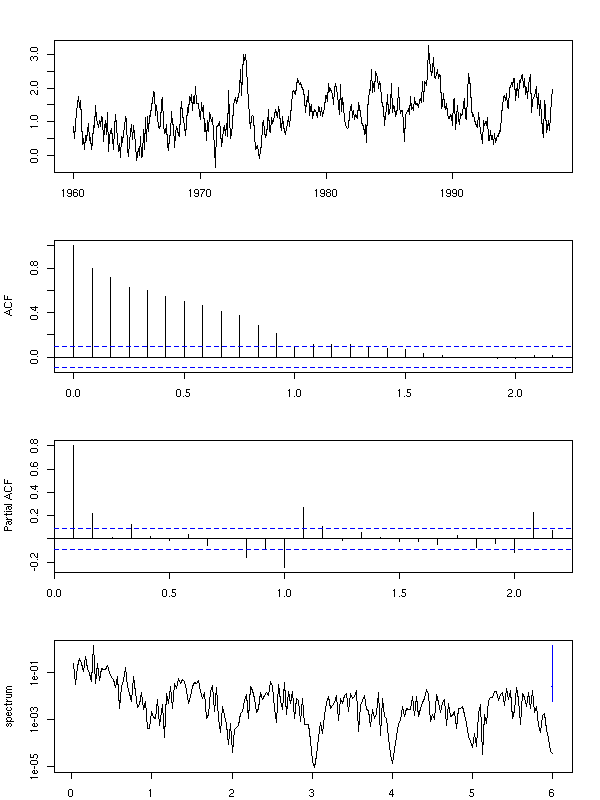

x <- diff(co2, lag=12)

op <- par(mfrow=c(4,1), mar=c(2,4,3,2)+.1)

plot(x, ylab="", xlab="")

acf(x, xlab="", main="")

pacf(x, xlab="", main="")

spectrum(x, xlab="", main="",

col=par('fg'))

par(op)

y <- diff(x)

op <- par(mfrow=c(4,1), mar=c(2,4,3,2)+.1)

plot(y, xlab="", ylab="")

acf(y, xlab="", main="")

pacf(y, xlab="", main="")

spectrum(y, col=par('fg'),

xlab="", main="")

par(op)

ARIMA processes are just integrated ARMA processes. In other words, a process is ARIMA of order d if its d-th derivative is ARMA. The model can be written

phi(B) (1-B)^d X(t) = theta(B) Z(t)

where B is the shift operator, Z a white noise, phi the polynomial defining the AR part, theta the polynomial defining the MA part of the process.

ARIMA processes are not stationary processes. We have already seen it with the random walk, which is an integrated ARMA(0,0) process, i.e., an ARIMA process of order 1: the variance of X(t) increases with t. This is the very reason why we differentiate: to get a stationary process.

TODO: Give an example of ARIMA(0,1,0) process, show that it is not stationary. Recall the tests to check if it is stationary. Quick and dirty stationarity test: cut the data into two parts, compute Cor(X(t),X(t-1)) on each, compare.

To infer the order of an ARIMA process, you can differentiate it until its ACF rapidly decreases.

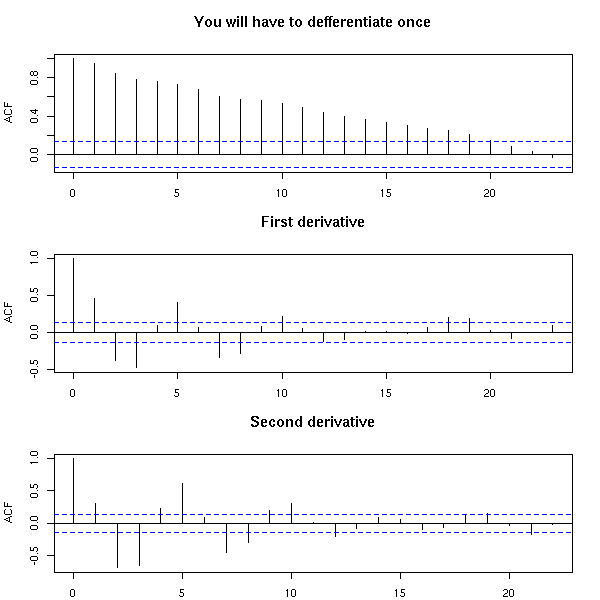

n <- 200

x <- arima.sim(

list(

order=c(2,1,2),

ar=c(.5,-.8),

ma=c(.9,.6)

),

n

)

op <- par(mfrow=c(3,1), mar=c(2,4,4,2)+.1)

acf(x, main="You will have to defferentiate once")

acf(diff(x), main="First derivative")

acf(diff(x, differences=2), main="Second derivative")

par(op)

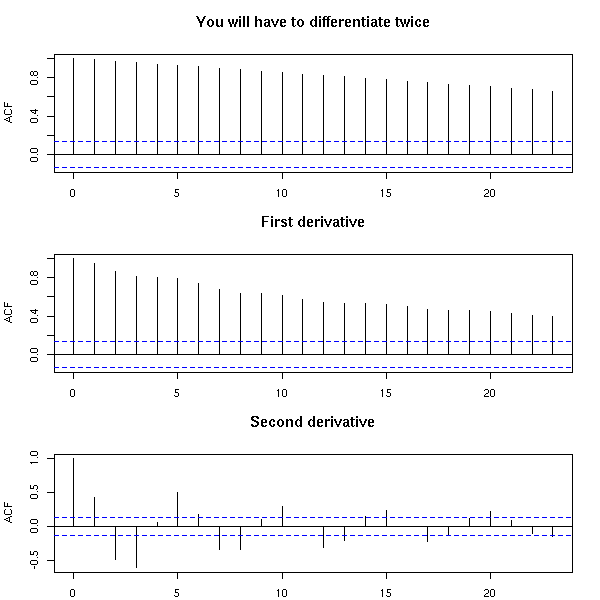

n <- 200

x <- arima.sim(

list(

order=c(2,2,2),

ar=c(.5,-.8),

ma=c(.9,.6)

),

n

)

op <- par(mfrow=c(3,1), mar=c(2,4,4,2)+.1)

acf(x, main="You will have to differentiate twice")

acf(diff(x), main="First derivative")

acf(diff(x, differences=2), main="Second derivative")

par(op)

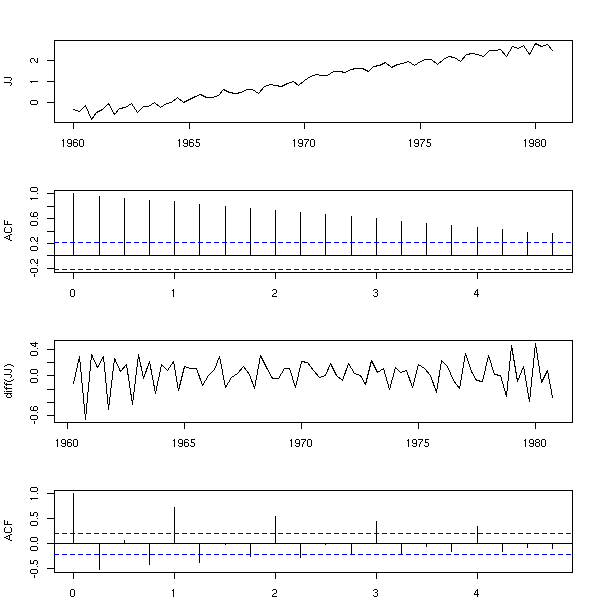

Here is a concrete example.

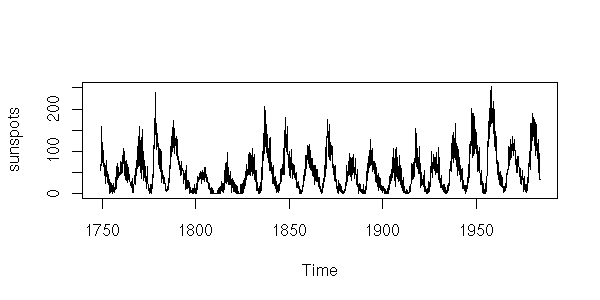

data(sunspot)

op <- par(mfrow=c(4,1), mar=c(2,4,3,2)+.1)

plot(sunspot.month, xlab="", ylab="sunspot")

acf(sunspot.month, xlab="", main="")

plot(diff(sunspot.month),

xlab="", ylab="diff(sunspot)")

acf(diff(sunspot.month), xlab="", main="")

par(op)

Same here (but actually, the differentiation discards the affine trend).

data(JohnsonJohnson) x <- log(JohnsonJohnson) op <- par(mfrow=c(4,1), mar=c(2,4,3,2)+.1) plot(x, xlab="", ylab="JJ") acf(x, main="") plot(diff(x), ylab="diff(JJ)") acf(diff(x), main="") par(op)

In the following examples, you might want to differentiate twice. But beware, it might not always be a good idea: if the ACF decreases exponentially, you can stop differentiating.

data(BJsales) x <- BJsales op <- par(mfrow=c(6,1), mar=c(2,4,0,2)+.1) plot(x) acf(x) plot(diff(x)) acf(diff(x)) plot(diff(x, difference=2)) acf(diff(x, difference=2)) par(op)

data(austres) x <- austres op <- par(mfrow=c(6,1), mar=c(2,4,0,2)+.1) plot(x) acf(x) plot(diff(x)) acf(diff(x)) plot(diff(x, difference=2)) acf(diff(x, difference=2)) par(op)

# In the preceding example, there was a linear trend: # let ut remove it. data(austres) x <- lm(austres ~ time(austres))$res op <- par(mfrow=c(6,1), mar=c(2,4,0,2)+.1) plot(x) acf(x) plot(diff(x)) acf(diff(x)) plot(diff(x, difference=2)) acf(diff(x, difference=2)) par(op)

These are Seasonnal ARIMA processes (the integration, the MA or the AR parts can be seasonal). They are often denoted:

(p,d,q) \times (P,D,Q) _s

and they are described by the model:

phi(B) Phi(B^s) (1-B)^d (1-B^s)^D X(t) = theta(B) Theta(B^s) Z(t)

where s is the period

theta(B) = 1 + a1 B + a2 B^2 + ... + ap B^p is the MA polynomial

phi(B) = 1 - b1 B - b2 B^2 - ... - bq B^q is the AR polynomial

Theta(B^s) = 1 + A1 B^s + A2 B^2s + ... + AP B^(P*s) is the seasonal MA polynomial

Phi(B^s) = 1 - B1 B^s - B2 B^2s - ... - BQ B^(Q*s) is the seasonal AR polynomial

Z is a white noiseThere is no function to simulate SARIMA processes -- but we can model them.

my.sarima.sim <- function (

n = 20,

period = 12,

model,

seasonal

) {

x <- arima.sim( model, n*period )

x <- x[1:(n*period)]

for (i in 1:period) {

xx <- arima.sim( seasonal, n )

xx <- xx[1:n]

x[i + period * 0:(n-1)] <-

x[i + period * 0:(n-1)] + xx

}

x <- ts(x, frequency=period)

x

}

op <- par(mfrow=c(3,1))

x <- my.sarima.sim(

20,

12,

list(ar=.6, ma=.3, order=c(1,0,1)),

list(ar=c(.5), ma=c(1,2), order=c(1,0,2))

)

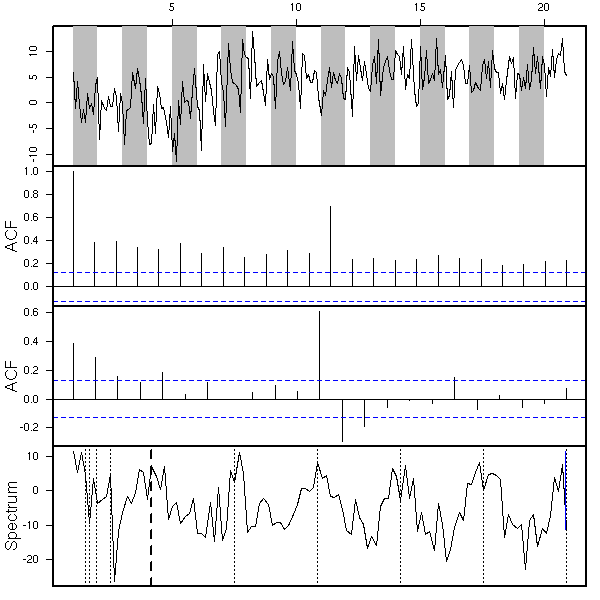

eda.ts(x, bands=T)

x <- my.sarima.sim( 20, 12, list(ar=c(.5,-.3), ma=c(-.8,.5,-.3), order=c(2,1,3)), list(ar=c(.5), ma=c(1,2), order=c(1,0,2)) ) eda.ts(x, bands=T)

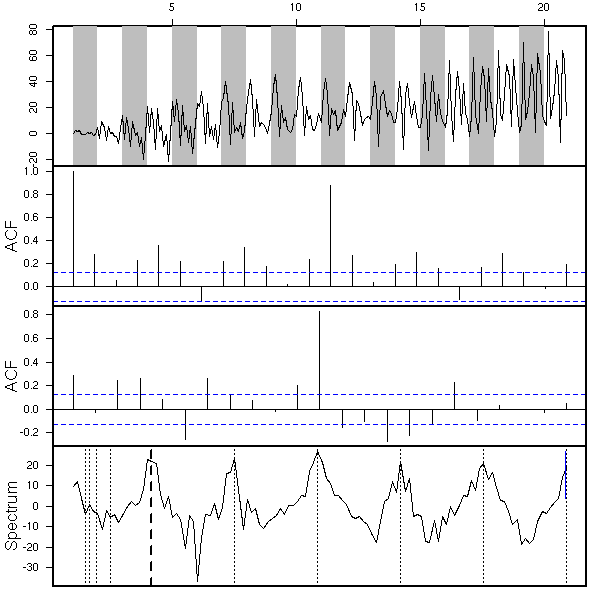

x <- my.sarima.sim( 20, 12, list(ar=c(.5,-.3), ma=c(-.8,.5,-.3), order=c(2,1,3)), list(ar=c(.5), ma=c(1,2), order=c(1,1,2)) ) eda.ts(x, bands=T)

TODO

The co2 example (somewhere above) could well be modeled as an SARIMA model.

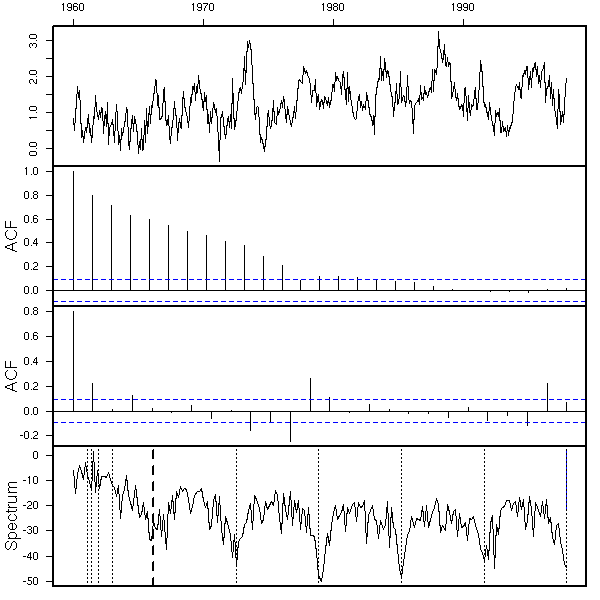

x <- co2 eda.ts(x)

First, we see that there is a trend: we differentiate once to get rid of it.

eda.ts(diff(x))

There is also a periodic component: we differentiate, with a 12-month lag, to get rid of it.

eda.ts(diff(diff(x),lag=12))

But wait! We have differentiated twice. Couldn't we get rid of both the periodic component and the trend by differentiating just once, with the 12-month lag?

eda.ts(diff(x,lag=12))

Well, perhaps. We hesitate between

SARIMA(?,1,?)(?,1,?)

and

SARIMA(?,0,?)(?,1,?).

If we look at the ACF and the PACF:

SARIMA(1,1,1)(2,1,1) SARIMA(1,1,2)(2,1,1) SARIMA(2,0,0)(1,1,0) SARIMA(2,0,0)(1,1,1)

Let us compute the coefficients of those models:

r1 <- arima(co2,

order=c(1,1,1),

list(order=c(2,1,1), period=12)

)

r2 <- arima(co2,

order=c(1,1,2),

list(order=c(2,1,1), period=12)

)

r3 <- arima(co2,

order=c(2,0,0),

list(order=c(1,1,0), period=12)

)

r4 <- arima(co2,

order=c(2,0,0),

list(order=c(1,1,1), period=12)

)This yields:

> r1

Call:

arima(x = co2, order = c(1, 1, 1), seasonal = list(order = c(2, 1, 1), period = 12))

Coefficients:

ar1 ma1 sar1 sar2 sma1

0.2595 -0.5902 0.0113 -0.0869 -0.8369

s.e. 0.1390 0.1186 0.0558 0.0539 0.0332

sigma^2 estimated as 0.08163: log likelihood = -83.6, aic = 179.2

> r2

Call:

arima(x = co2, order = c(1, 1, 2), seasonal = list(order = c(2, 1, 1), period = 12))

Coefficients:

ar1 ma1 ma2 sar1 sar2 sma1

0.5935 -0.929 0.1412 0.0141 -0.0870 -0.8398

s.e. 0.2325 0.237 0.1084 0.0557 0.0538 0.0328

sigma^2 estimated as 0.08132: log likelihood = -82.85, aic = 179.7

> r3

Call:

arima(x = co2, order = c(2, 0, 0), seasonal = list(order = c(1, 1, 0), period = 12))

Coefficients:

ar1 ar2 sar1

0.6801 0.3087 -0.4469

s.e. 0.0446 0.0446 0.0432

sigma^2 estimated as 0.1120: log likelihood = -150.65, aic = 309.3For r4, it was even:

Error in arima(co2, order = c(2, 0, 1), list(order = c(1, 1, 1), period = 12)) :

non-stationary AR part from CSSThe AIC of a3 is appallingly high (we want as low a value as possible): we really need to differentiate twice.

Let us look at the p-values:

> round(pnorm(-abs(r1$coef), sd=sqrt(diag(r1$var.coef))),5)

ar1 ma1 sar1 sar2 sma1

0.03094 0.00000 0.42007 0.05341 0.00000

> round(pnorm(-abs(r1$coef), sd=sqrt(diag(r1$var.coef))),5)

ar1 ma1 ma2 sar1 sar2 sma1

0.00535 0.00004 0.09635 0.39989 0.05275 0.00000This suggests an SARIMA(1,1,1)(0,1,1) model.

r3 <- arima( co2,

order=c(1,1,1),

list(order=c(0,1,1), period=12)

)This yields:

> r3

Call:

arima(x = co2, order = c(1, 1, 1), seasonal = list(order = c(0, 1, 1), period = 12))

Coefficients:

ar1 ma1 sma1

0.2399 -0.5710 -0.8516

s.e. 0.1430 0.1237 0.0256

sigma^2 estimated as 0.0822: log likelihood = -85.03, aic = 178.07

> round(pnorm(-abs(r3$coef), sd=sqrt(diag(r3$var.coef))),5)

ar1 ma1 sma1

0.04676 0.00000 0.00000We now look at the residuals:

r3 <- arima(

co2,

order = c(1, 1, 1),

seasonal = list(

order = c(0, 1, 1),

period = 12

)

)

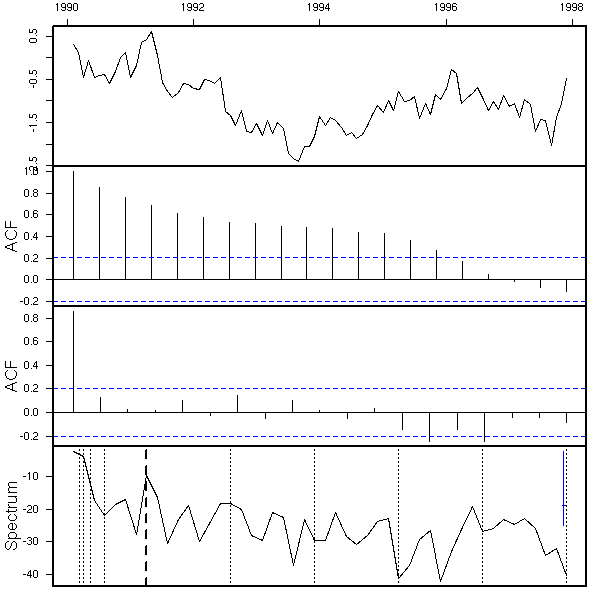

eda.ts(r3$res)

Good, we can now try to use this mode to predict future values. To get an idea of the quality of those forecasts, we can use the first part of the data to estimate the model coefficients and compute the predictions and the second part to assess the quality of the predictions -- but beware, this is biased, because we chose the model by using all the data, including the data from the test sample.

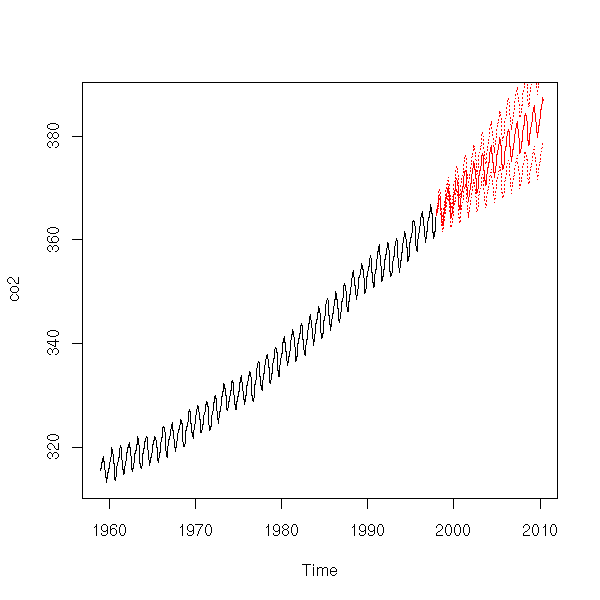

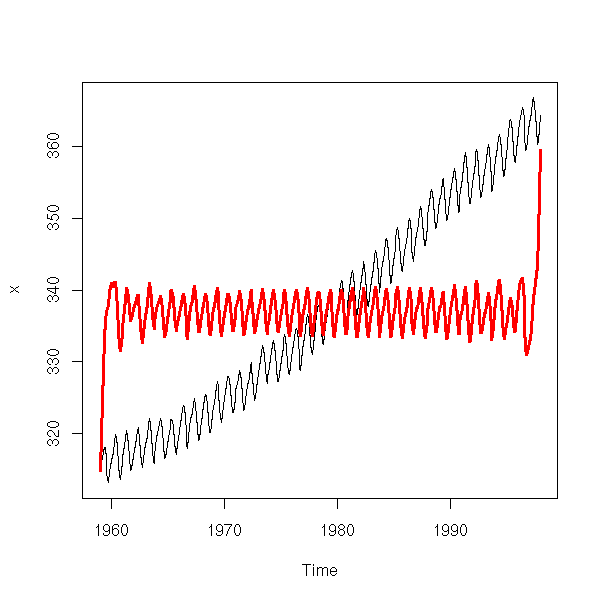

x1 <- window(co2, end = 1990)

r <- arima(

x1,

order = c(1, 1, 1),

seasonal = list(

order = c(0, 1, 1),

period = 12

)

)

plot(co2)

p <- predict(r, n.ahead=100)

lines(p$pred, col='red')

lines(p$pred+qnorm(.025)*p$se, col='red', lty=3)

lines(p$pred+qnorm(.975)*p$se, col='red', lty=3)

# On the contrary, I do not know what to do with # this plots (it looks like integrated noise). eda.ts(co2-p$pred)

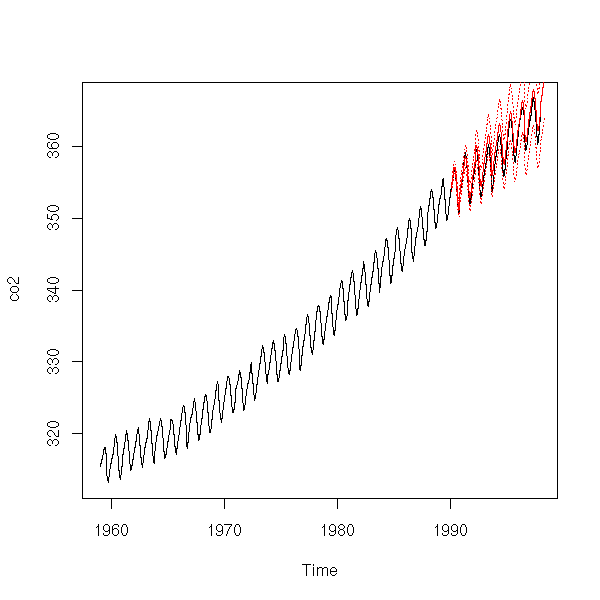

It is not that bad. Here are our forecasts.

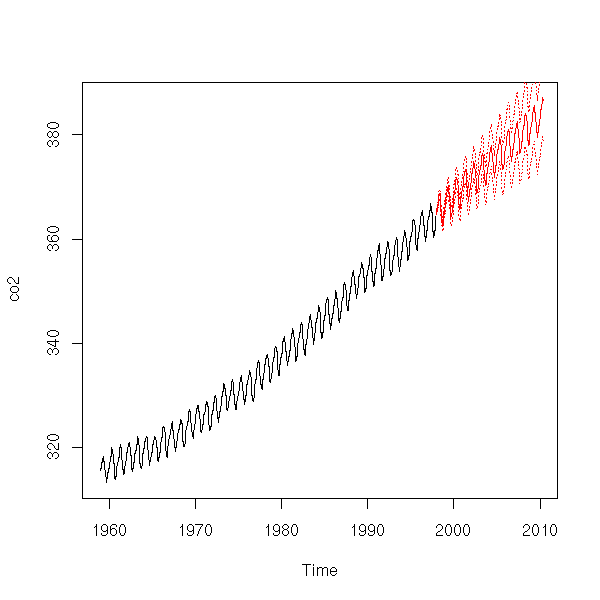

r <- arima(

co2,

order = c(1, 1, 1),

seasonal = list(

order = c(0, 1, 1),

period = 12

)

)

p <- predict(r, n.ahead=150)

plot(co2,

xlim=c(1959,2010),

ylim=range(c(co2,p$pred)))

lines(p$pred, col='red')

lines(p$pred+qnorm(.025)*p$se, col='red', lty=3)

lines(p$pred+qnorm(.975)*p$se, col='red', lty=3)

What we have done is called the Box and Jenkins method. The general case can be a little more complicated: if the residuals do not look like white noise, we have to get back to find another model.

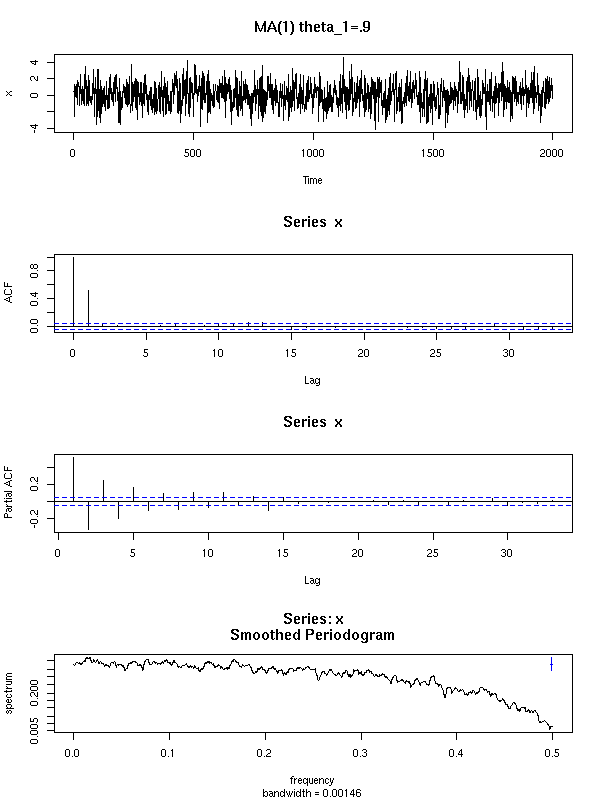

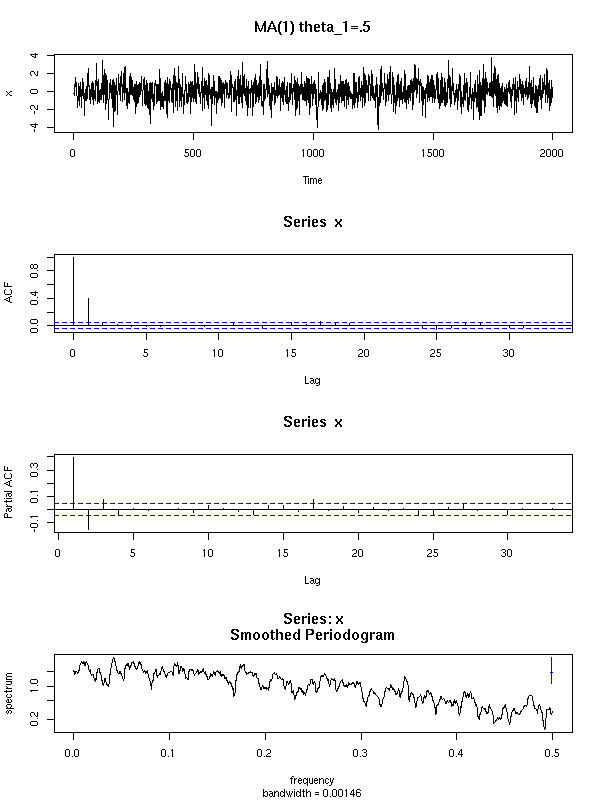

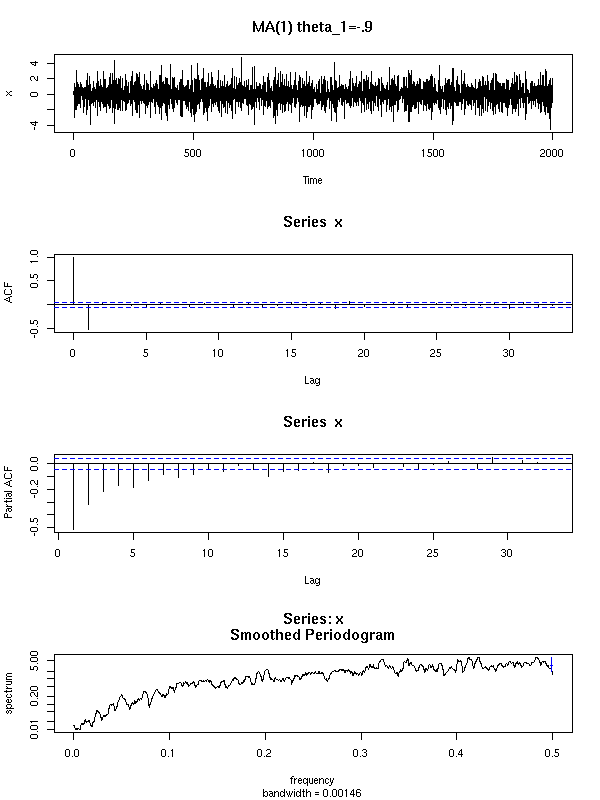

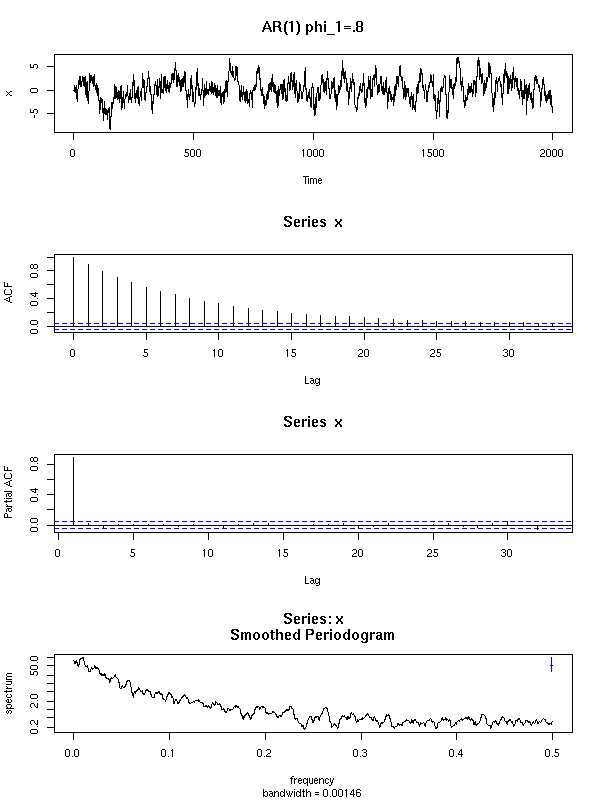

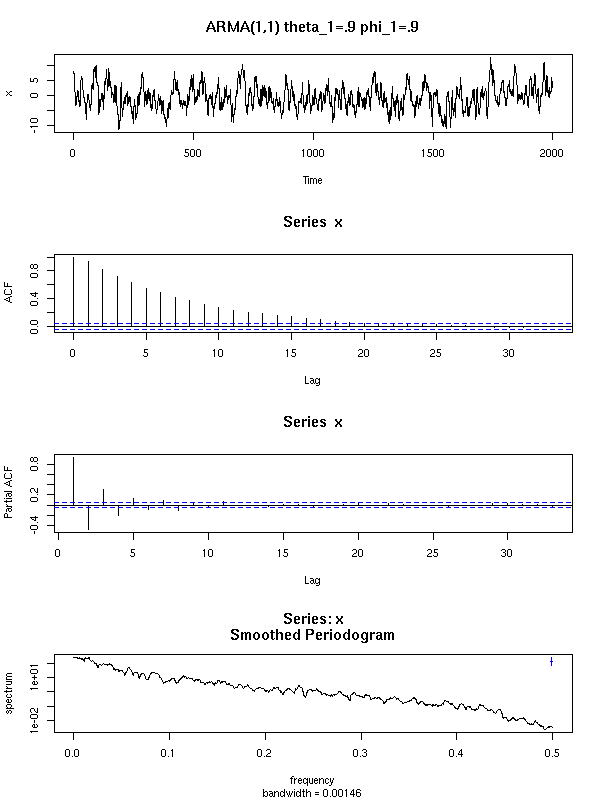

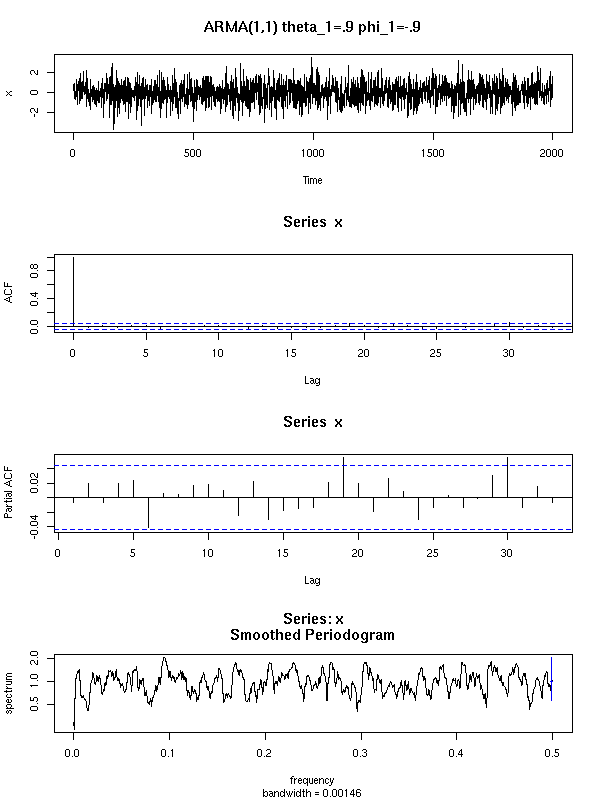

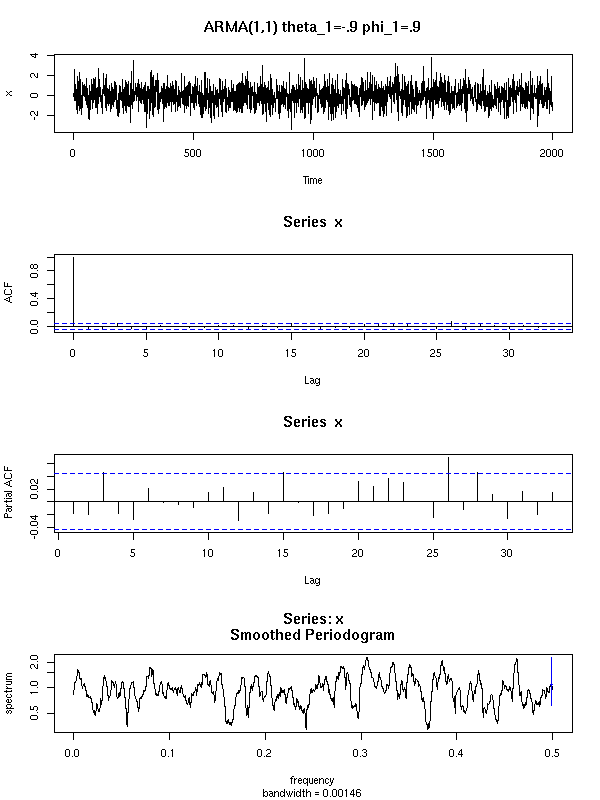

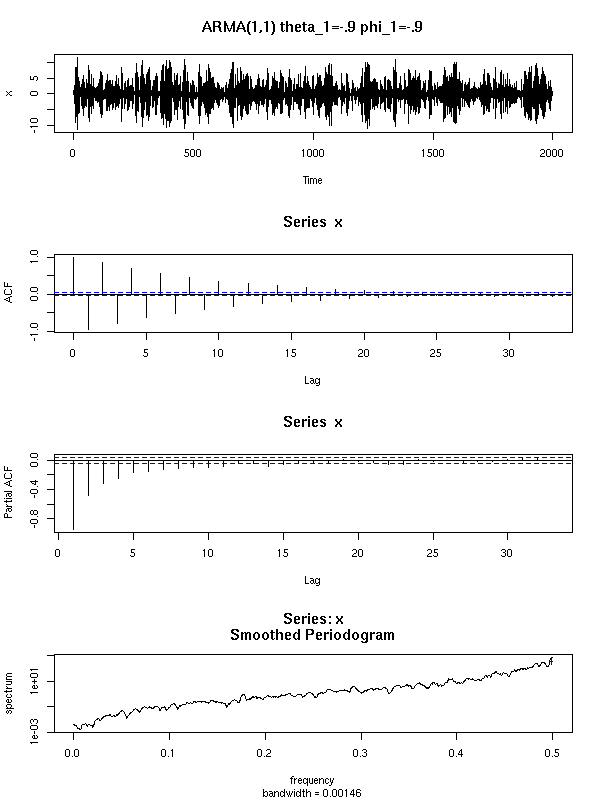

0. Differentiate to get a stationary process. If there is a trend, the process is not stationary. If the ACF decreases slowly, try to differentiate once more. 1. Identify the model: ARMA(1,0): ACF: exponential decrease; PACF: one peak ARMA(2,0): ACF: exponential decrease or waves; PACF: two peaks ARMA(0,1): ACF: one peak; PACF: exponential decrease ARMA(0,2): ACF: two peaks; PACF: exponential decrease or waves ARMA(1,1): ACF&PACF: exponential decrease 2. Compute the coefficients 3. Diagnostics (go to 1 if they do not look like white noise) Computhe the p-values, remove unneeded coefficients (check on the residuals that they are indeed unneeded). 4. Forecasts

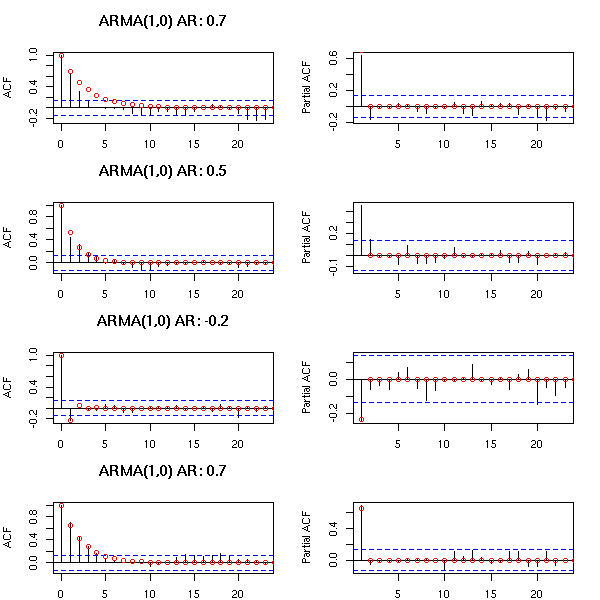

Here are a few examples of ARMA processes (in red: the theoretic ACF and PACF).

The ARMA(1,0) is characterized by the exponential decrease of the ACF and the single peak in the PACF.

op <- par(mfrow=c(4,2), mar=c(2,4,4,2))

n <- 200

for (i in 1:4) {

x <- NULL

while(is.null(x)) {

model <- list(ar=rnorm(1))

try( x <- arima.sim(model, n) )

}

acf(x,

main = paste(

"ARMA(1,0)",

"AR:",

round(model$ar, digits = 1)

))

points(0:50,

ARMAacf(ar=model$ar, lag.max=50),

col='red')

pacf(x, main="")

points(1:50,

ARMAacf(ar=model$ar, lag.max=50, pacf=T),

col='red')

}

par(op)

You can recognize an ARMA(2,0) from the two peaks in the PACF and the exponential decrease (or the waves) in the ACF.

op <- par(mfrow=c(4,2), mar=c(2,4,4,2))

n <- 200

for (i in 1:4) {

x <- NULL

while(is.null(x)) {

model <- list(ar=rnorm(2))

try( x <- arima.sim(model, n) )

}

acf(x,

main=paste("ARMA(2,0)","AR:",

round(model$ar[1],digits=1),

round(model$ar[2],digits=1)

))

points(0:50,

ARMAacf(ar=model$ar, lag.max=50),

col='red')

pacf(x, main="")

points(1:50,

ARMAacf(ar=model$ar, lag.max=50, pacf=T),

col='red')

}

par(op)

You can recognize an ARMA(0,1) process from its unique peak in the ACF and the exponential decrease of the PACF.

op <- par(mfrow=c(4,2), mar=c(2,4,4,2))

n <- 200

for (i in 1:4) {

x <- NULL

while(is.null(x)) {

model <- list(ma=rnorm(1))

try( x <- arima.sim(model, n) )

}

acf(x,

main = paste(

"ARMA(0,1)",

"MA:",

round(model$ma, digits=1)

))

points(0:50,

ARMAacf(ma=model$ma, lag.max=50),

col='red')

pacf(x, main="")

points(1:50,

ARMAacf(ma=model$ma, lag.max=50, pacf=T),

col='red')

}

par(op)

The ARMA(0,2) model has two peaks in the ACF and if PACF exponentially decreases or exhibits a pattern of waves.

op <- par(mfrow=c(4,2), mar=c(2,4,4,2))

n <- 200

for (i in 1:4) {

x <- NULL

while(is.null(x)) {

model <- list(ma=rnorm(2))

try( x <- arima.sim(model, n) )

}

acf(x, main=paste("ARMA(0,2)","MA:",

round(model$ma[1],digits=1),

round(model$ma[2],digits=1)

))

points(0:50,

ARMAacf(ma=model$ma, lag.max=50),

col='red')

pacf(x, main="")

points(1:50,

ARMAacf(ma=model$ma, lag.max=50, pacf=T),

col='red')

}

par(op)

For the ARMA(1,1), both the ACF and the PACF exponentially decrease.

op <- par(mfrow=c(4,2), mar=c(2,4,4,2))

n <- 200

for (i in 1:4) {

x <- NULL

while(is.null(x)) {

model <- list(ma=rnorm(1),ar=rnorm(1))

try( x <- arima.sim(model, n) )

}

acf(x, main=paste("ARMA(1,1)",

"AR:", round(model$ar,digits=1),

"AR:", round(model$ma,digits=1)

))

points(0:50,

ARMAacf(ar=model$ar, ma=model$ma, lag.max=50),

col='red')

pacf(x, main="")

points(1:50,

ARMAacf(ar=model$ar, ma=model$ma, lag.max=50, pacf=T),

col='red')

}

par(op)

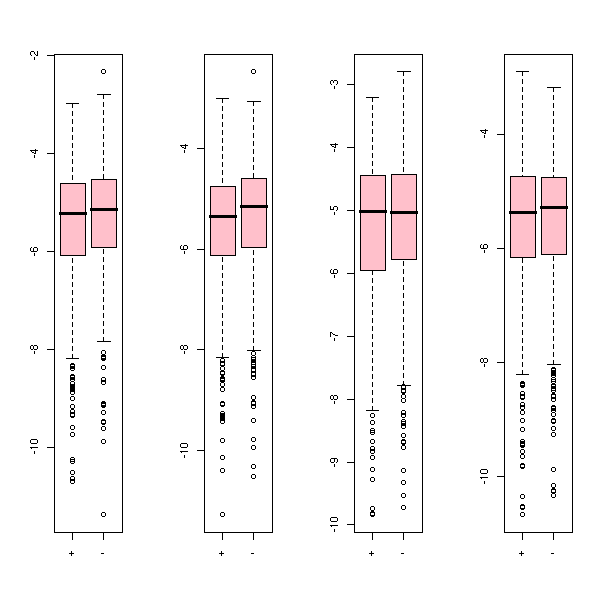

Instead of the stepwise procedure presented, we can proceed in a more violent way, by looking at the AIC of all thereasonably complex models and retaining those whose AIC is the lowest. As this amounts to performing innumarable tests, we do not only take the single model with the lowest AIC, but several -- we shall prefer a simple model whose residuals look like white noise. You can remorselessly discard models whose AIC (or BIC: the AIC may be meaningful for nested model, but the BIC has a more general validity) is 100 units more that the lowest one, you should have remorse if you discard those 6 to 20 units from the lowest, and you should not discard those less than 6 units away.

a <- array(NA, dim=c(2,2,2,2,2,2))

for (p in 0:2) {

for (d in 0:2) {

for (q in 0:2) {

for (P in 0:2) {

for (D in 0:2) {

for (Q in 0:2) {

r <- list(aic=NA)

try(

r <- arima( co2,

order=c(p,d,q),

list(order=c(P,D,Q), period=12)

)

)

a[p,d,q,P,D,Q] <- r$aic

cat(r$aic); cat("\n")

}

}

}

}

}

}

# When I wrote this, I dod not know the "which.min" function.

argmin.vector <- function (v) {

(1:length(v)) [ v == min(v) ]

}

x <- sample(1:10)

x

argmin.vector(x)

x <- sample(1:5, 20, replace=T)

x

argmin.vector(x)

x <- array(x, dim=c(5,2,2))

index.from.vector <- function (i,d) {

res <- NULL

n <- prod(d)

i <- i-1

for (k in length(d):1) {

n <- n/d[k]

res <- c( i %/% n, res )

i <- i %% n

}

res+1

}

index.from.vector(7, c(2,2,2))

index.from.vector(29, c(5,3,2))

argmin <- function (a) {

a <- as.array(a)

d <- dim(a)

a <- as.vector(a)

res <- matrix(nr=0, nc=length(d))

for (i in (1:length(a))[ a == min(a) ]) {

j <- index.from.vector(i,d)

res <- rbind(res, j)

}

res

}