Tests and confidence intervals

Partial residual plots, added variable plots

Some plots to explore a regression

Overfit

Underfit

Influential points

Influential clusters

Non gaussian residuals

Heteroskedasticity

Correlated errors

Unidentifiability

Missing values

Extrapolation

Miscellaneous

The curse of dimension

Wide problems

In this chapter, we list some of the problems that may occur in a regression and explain how to spot them -- graphically. Often, you can solve the problem by transforming the variables (so that the outliers and influential observations disappear, so that the residuals look normal, so that the residuals have the same variance -- quite often, you can do all this at the same time), by altering the model (for a simpler or more complex one) or by using another regression (GLS to account for heteroskedasticity and correlated residuals, robust regression to account for remaining influencial observations).

Overfit: choose a simpler model

Underfit: curvilinear regression, non-linear regression, local regression

Influential points: transform the data, robust regression, weighted

least squares, remove the points

Influential clusters: transform the data, mixtures

Non-gaussian residuals: transform the data, robust regression, normalp

Heteroskedasticity: gls

correlated residuals: gls

Unidentifiability: shrinkage methods

Missing values: discard the observations???

The curse of dimension (GAM,...)

Combining regressions (BMA,...)

After this bird's eye view of several regression techniques, let us come back to linear regression.

The "summary" function gave us the results of a Student T test on the regression coefficients -- that answered the question "is this coefficient significantly different from zero?".

> x <- rnorm(100)

> y <- 1 + 2*x + .3*rnorm(100)

> summary(lm(y~x))

...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.08440 0.03224 33.64 <2e-16 ***

x 2.04051 0.03027 67.42 <2e-16 ***In the same example, if we have a prior idea on the value of the coefficient, we can test this value: here, let us test if the intercept is 1.

(When you write a formula to describe a model, some operators are interpreted in a different way (especially * and ^): to be sure that they will be understood as arithmetic operations on the variables, surround them with I(...). Here, it is not needed.)

> x <- rnorm(100)

> y <- 1 + 2*x + .3*rnorm(100)

> summary(lm(I(y-1)~x))

Call:

lm(formula = I(y - 1) ~ x)

Residuals:

Min 1Q Median 3Q Max

-0.84692 -0.24891 0.02781 0.20486 0.60522

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.01294 0.02856 -0.453 0.651

x 1.96405 0.02851 68.898 <2e-16 ***

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.2855 on 98 degrees of freedom

Multiple R-Squared: 0.9798, Adjusted R-squared: 0.9796

F-statistic: 4747 on 1 and 98 DF, p-value: < 2.2e-16Under the hypothesis that this coefficient is 1, let us check if the other is zero.

> summary(lm(I(y-1)~0+x))

Call:

lm(formula = I(y - 1) ~ 0 + x)

Residuals:

Min 1Q Median 3Q Max

-0.85962 -0.26189 0.01480 0.19184 0.59227

Coefficients:

Estimate Std. Error t value Pr(>|t|)

x 1.96378 0.02839 69.18 <2e-16 ***

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.2844 on 99 degrees of freedom

Multiple R-Squared: 0.9797, Adjusted R-squared: 0.9795

F-statistic: 4786 on 1 and 99 DF, p-value: < 2.2e-16Other method:

> x <- rnorm(100)

> y <- 1 + 2*x + .3*rnorm(100)

> a <- rep(1,length(x))

> summary(lm(y~offset(a)-1+x))

Call:

lm(formula = y ~ offset(a) - 1 + x)

Residuals:

Min 1Q Median 3Q Max

-0.92812 -0.09901 0.09515 0.28893 0.99363

Coefficients:

Estimate Std. Error t value Pr(>|t|)

x 2.04219 0.03114 65.58 <2e-16 ***

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.3317 on 99 degrees of freedom

Multiple R-Squared: 0.9816, Adjusted R-squared: 0.9815

F-statistic: 5293 on 1 and 99 DF, p-value: < 2.2e-16Let us check if it is equal to 2:

> summary(lm(I(y-1-2*x)~0+x))

Call:

lm(formula = I(y - 1 - 2 * x) ~ 0 + x)

Residuals:

Min 1Q Median 3Q Max

-0.85962 -0.26189 0.01480 0.19184 0.59227

Coefficients:

Estimate Std. Error t value Pr(>|t|)

x -0.03622 0.02839 -1.276 0.205

Residual standard error: 0.2844 on 99 degrees of freedom

Multiple R-Squared: 0.01618, Adjusted R-squared: 0.006244

F-statistic: 1.628 on 1 and 99 DF, p-value: 0.2049Other method:

> summary(lm(y~offset(1+2*x)+0+x))

Call:

lm(formula = y ~ offset(1 + 2 * x) + 0 + x)

Residuals:

Min 1Q Median 3Q Max

-0.92812 -0.09901 0.09515 0.28893 0.99363

Coefficients:

Estimate Std. Error t value Pr(>|t|)

x 0.04219 0.03114 1.355 0.179

Residual standard error: 0.3317 on 99 degrees of freedom

Multiple R-Squared: 0.9816, Adjusted R-squared: 0.9815

F-statistic: 5293 on 1 and 99 DF, p-value: < 2.2e-16More generally, you can use the "offset" function in a linear regression when you know exactly one of the coefficients.

Other method:

x <- rnorm(100) y <- 1 + 2*x + .3*rnorm(100) library(car) linear.hypothesis( lm(y~x), matrix(c(1,0,0,1), 2, 2), c(1,2) )

This checks if

[ 1 0 ] [ first coefficient ] [ 1 ] [ ] * [ ] = [ ] [ 0 1 ] [ second coefficient ] [ 2 ].

This yields:

F-Test SS = 0.04165324 SSE = 9.724817 F = 0.2098763 Df = 2 and 98 p = 0.8110479

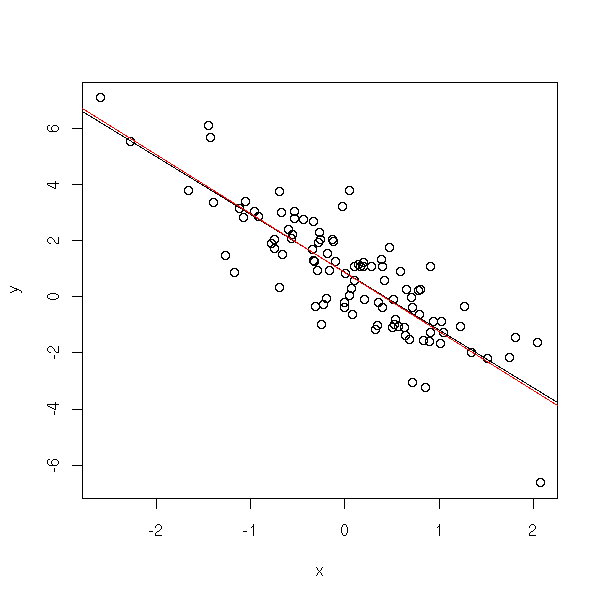

You can compute confidence intervals on the parameters.

> library(MASS)

> n <- 100

> x <- rnorm(n)

> y <- 1 - 2*x + rnorm(n)

> r <- lm(y~x)

> r$coefficients

(Intercept) x

0.9569173 -2.1296830

> confint(r)

2.5 % 97.5 %

(Intercept) 0.7622321 1.151603

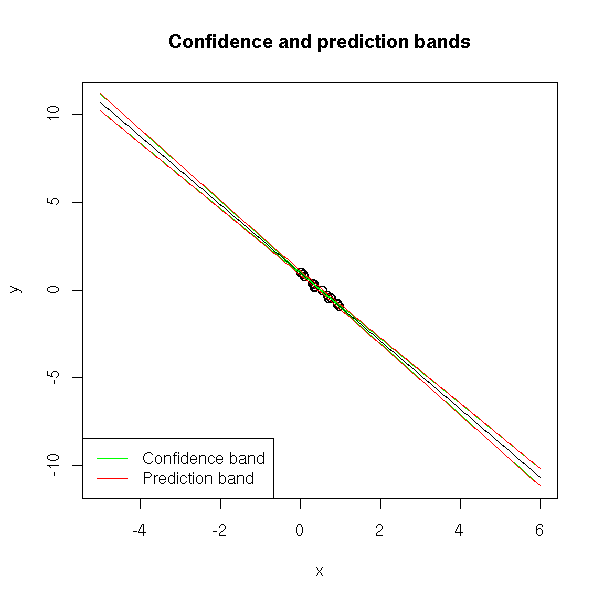

x -2.3023449 -1.957021You can also look for confidence intervals on the predicted values. It can be a confidence interval of aX+b (confidence band) or -- this is different -- of E[Y|X=x] (prediction band).

x <- runif(20)

y <- 1-2*x+.1*rnorm(20)

res <- lm(y~x)

plot(y~x)

new <- data.frame( x=seq(0,1,length=21) )

p <- predict(res, new)

points( p ~ new$x, type='l' )

p <- predict(res, new, interval='confidence')

points( p[,2] ~ new$x, type='l', col="green" )

points( p[,3] ~ new$x, type='l', col="green" )

p <- predict(res, new, interval='prediction')

points( p[,2] ~ new$x, type='l', col="red" )

points( p[,3] ~ new$x, type='l', col="red" )

title(main="Confidence and prediction bands")

legend( par("usr")[1], par("usr")[3], yjust=0,

c("Confidence band", "Prediction band"),

lwd=1, lty=1, col=c("green", "red") )

TODO: stress the difference between the two...

Away from the values of the sample, the intervals grow.

plot(y~x, xlim=c(-1,2), ylim=c(-3,3))

new <- data.frame( x=seq(-2,3,length=200) )

p <- predict(res, new)

points( p ~ new$x, type='l' )

p <- predict(res, new, interval='confidence')

points( p[,2] ~ new$x, type='l', col="green" )

points( p[,3] ~ new$x, type='l', col="green" )

p <- predict(res, new, interval='prediction')

points( p[,2] ~ new$x, type='l', col="red" )

points( p[,3] ~ new$x, type='l', col="red" )

title(main="Confidence and prediction bands")

legend( par("usr")[1], par("usr")[3], yjust=0,

c("Confidence band", "Prediction band"),

lwd=1, lty=1, col=c("green", "red") )

plot(y~x, xlim=c(-5,6), ylim=c(-11,11))

new <- data.frame( x=seq(-5,6,length=200) )

p <- predict(res, new)

points( p ~ new$x, type='l' )

p <- predict(res, new, interval='confidence')

points( p[,2] ~ new$x, type='l', col="green" )

points( p[,3] ~ new$x, type='l', col="green" )

p <- predict(res, new, interval='prediction')

points( p[,2] ~ new$x, type='l', col="red" )

points( p[,3] ~ new$x, type='l', col="red" )

title(main="Confidence and prediction bands")

legend( par("usr")[1], par("usr")[3], yjust=0,

c("Confidence band", "Prediction band"),

lwd=1, lty=1, col=c("green", "red") )

Here are other ways of representing the confidence and prediction intervals.

N <- 100 n <- 20 x <- runif(N, min=-1, max=1) y <- 1 - 2*x + rnorm(N, sd=abs(x)) res <- lm(y~x) plot(y~x) x0 <- seq(-1,1,length=n) new <- data.frame( x=x0 ) p <- predict(res, new) points( p ~ x0, type='l' ) p <- predict(res, new, interval='prediction') segments( x0, p[,2], x0, p[,3], col='red') p <- predict(res, new, interval='confidence') segments( x0, p[,2], x0, p[,3], col='green', lwd=3 )

mySegments <- function(a,b,c,d,...) {

u <- par('usr')

e <- (u[2]-u[1])/100

segments(a,b,c,d,...)

segments(a+e,b,a-e,b,...)

segments(c+e,d,c-e,d,...)

}

plot(y~x)

p <- predict(res, new)

points( p ~ x0, type='l' )

p <- predict(res, new, interval='prediction')

mySegments( x0, p[,2], x0, p[,3], col='red')

p <- predict(res, new, interval='confidence')

mySegments( x0, p[,2], x0, p[,3], col='green', lwd=3 )

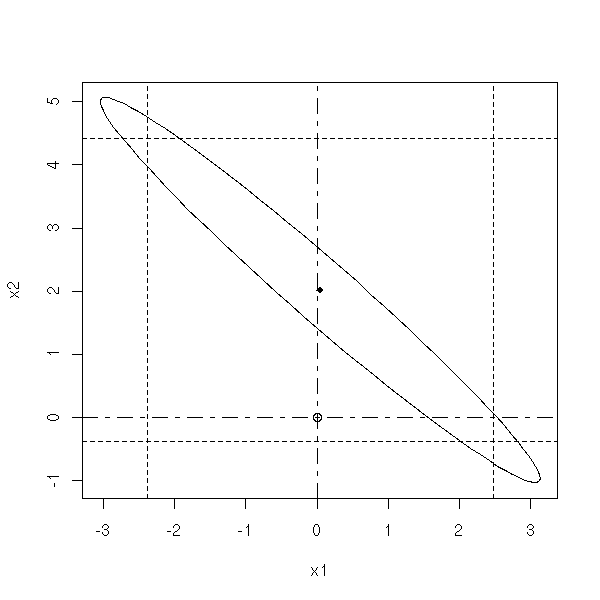

You can want a confidence interval for a single, isolated, parameter -- you get an interval -- or for several parameters at a time -- you get an ellipsoid, called the confidence region. It brings more information than the 1-variable confidence intervals (you cannot combine those intervals). Thus you might want to plot these ellipses.

The ellipse you get is skewed, because it comes from the correlation matrix of the two coefficients: simply diagonalize it in an orthonormal basis and the eigen vectors will give you the axes.

library(ellipse)

my.confidence.region <- function (g, a=2, b=3) {

e <- ellipse(g,c(a,b))

plot(e,

type="l",

xlim=c( min(c(0,e[,1])), max(c(0,e[,1])) ),

ylim=c( min(c(0,e[,2])), max(c(0,e[,2])) ),

)

x <- g$coef[a]

y <- g$coef[b]

points(x,y,pch=18)

cf <- summary(g)$coefficients

ia <- cf[a,2]*qt(.975,g$df.residual)

ib <- cf[b,2]*qt(.975,g$df.residual)

abline(v=c(x+ia,x-ia),lty=2)

abline(h=c(y+ib,y-ib),lty=2)

points(0,0)

abline(v=0,lty="F848")

abline(h=0,lty="F848")

}

n <- 20

x1 <- rnorm(n)

x2 <- rnorm(n)

x3 <- rnorm(n)

y <- x1+x2+x3+rnorm(n)

g <- lm(y~x1+x2+x3)

my.confidence.region(g)

n <- 20 x <- rnorm(n) x1 <- x+.2*rnorm(n) x2 <- x+.2*rnorm(n) y <- x1+x2+rnorm(n) g <- lm(y~x1+x2) my.confidence.region(g)

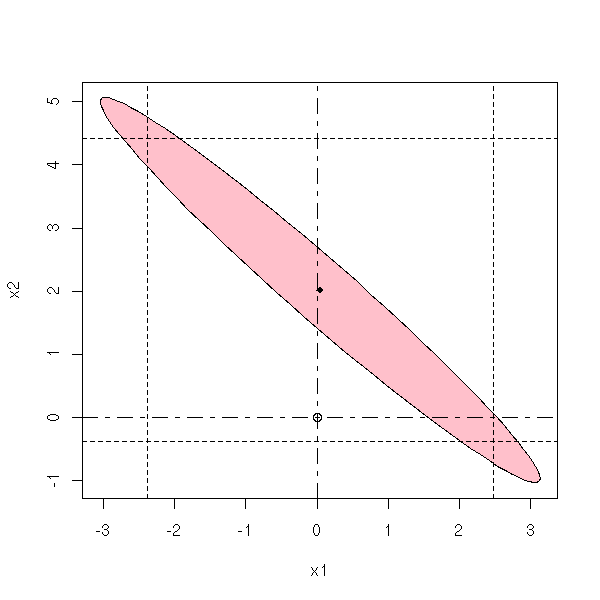

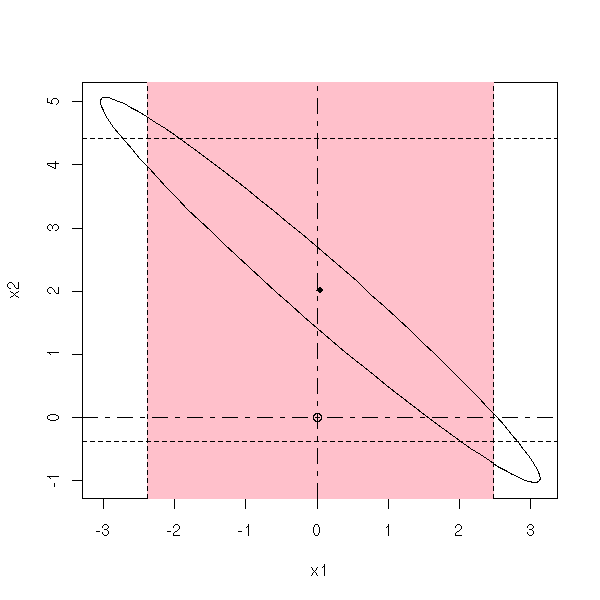

In the three following plots, the probability that the actual values of the parameters be in the pink area is the same.

my.confidence.region <- function (g, a=2, b=3, which=0, col='pink') {

e <- ellipse(g,c(a,b))

x <- g$coef[a]

y <- g$coef[b]

cf <- summary(g)$coefficients

ia <- cf[a,2]*qt(.975,g$df.residual)

ib <- cf[b,2]*qt(.975,g$df.residual)

xmin <- min(c(0,e[,1]))

xmax <- max(c(0,e[,1]))

ymin <- min(c(0,e[,2]))

ymax <- max(c(0,e[,2]))

plot(e,

type="l",

xlim=c(xmin,xmax),

ylim=c(ymin,ymax),

)

if(which==1){ polygon(e,col=col) }

else if(which==2){ rect(x-ia,par('usr')[3],x+ia,par('usr')[4],col=col,border=col) }

else if(which==3){ rect(par('usr')[1],y-ib,par('usr')[2],y+ib,col=col,border=col) }

lines(e)

points(x,y,pch=18)

abline(v=c(x+ia,x-ia),lty=2)

abline(h=c(y+ib,y-ib),lty=2)

points(0,0)

abline(v=0,lty="F848")

abline(h=0,lty="F848")

}

my.confidence.region(g, which=1)

my.confidence.region(g, which=2)

my.confidence.region(g, which=3)

When you perform a multiple regression, you try to retain as few predictive variables as possible, while retaining all those that are relevant. To choose or discard variables, you might be tempted to perform a lot of statistical tests.

This is a bad idea.

Indeed, for each testm you have a certain risk of making a mistake -- and those risks pile up.

However, this is usually what we do -- we rarely have the choice. You can either start with a model with no variable at all, then add the "best" predictive variable (say, the one with the higher correlation) and progressively add other variables (say, the ones that provide the biggest increase in R^2) and stop when you reach a certain criterion (say, when F^2 reaches a certain value); or start with a saturated model, containing all the variables an successively remove the variables that provide the smallest decrease in R^2 and stop when F^2 reaches a value fixed in advance.

TODO: Tukey, etc. (in the Anova chapter?)

When you read regression or anova (analysis of variance) results, you often face a table "full of sums of squares".

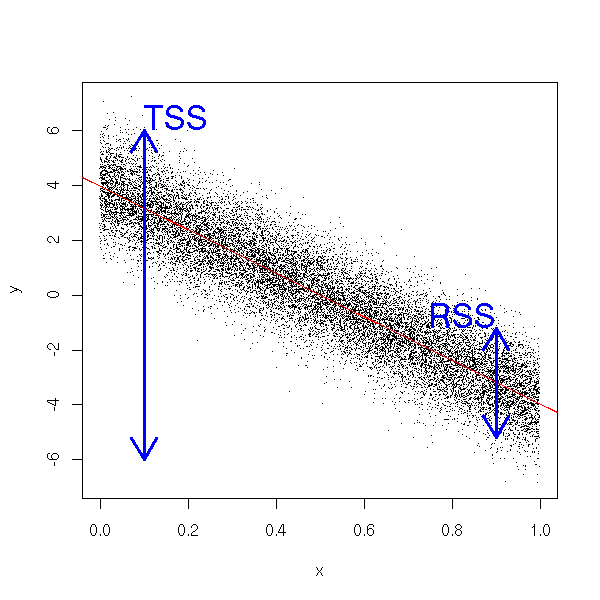

RSS (Residual Sum of Squares): this is the quantity you try to minimize in a regression. More precisely, let X be the predictive variable, Y the variable to predict and hat(Yi) the predicted velue, we set

hat Yi = b0 + b1 Xi

and we try to find the values of b0 and b1 that minimize

RSS = Sum( (Yi - hat Yi)^2 ).

TSS (Total sum of squares): The is the sun of squares minimized when you look for the mean of Y

TSS = Sum( (Yi - bar Y)^2 )

ESS (Explained Sum of Squares): This is the difference between the preceding two sums of squares. It can also be written as a sum of squares.

ESS = Sum( ( hat Yi - bar Y )^2 )

R-square: "determination coefficient" or "percentage of variance of Y explained by X". The closer to one, the better the regression explains the variations of Y.

R^2 = ESS/TSS.

You can give a graphical interpretation of the determination coefficient. The data are clustered in a band of height RSS around the regression line, while the height of the plot is TSS. We then have R^2=1-RSS/TSS.

n <- 20000 x <- runif(n) y <- 4 - 8*x + rnorm(n) plot(y~x, pch='.') abline(lm(y~x), col='red') arrows( .1, -6, .1, 6, code=3, lwd=3, col='blue' ) arrows( .9, -3.2-2, .9, -3.2+2, code=3, lwd=3, col='blue' ) text( .1, 6, "TSS", adj=c(0,0), cex=2, col='blue' ) text( .9, -3.2+2, "RSS", adj=c(1,0), cex=2, col='blue' )

We have seen three way of printing the results of a regression: with the "print", "summary" and "anova" functions. The last line of the "anova" function compares our model with the null model (i.e., with the model with no explanatory variables at all, y ~ 1).

> x <- rnorm(100)

> y <- 1 + 2*x + .3*rnorm(100)

> summary(lm(y~x))

Call:

lm(formula = y ~ x)

Residuals:

Min 1Q Median 3Q Max

-0.84692 -0.24891 0.02781 0.20486 0.60522

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.98706 0.02856 34.56 <2e-16 ***

x 1.96405 0.02851 68.90 <2e-16 ***

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.2855 on 98 degrees of freedom

Multiple R-Squared: 0.9798, Adjusted R-squared: 0.9796

F-statistic: 4747 on 1 and 98 DF, p-value: < 2.2e-16The result of the "anova" function explains where these fugures come from: you have the sum of squares, their "mean" (just divide by the "number of degrees of freedom"), their quotient (F-value) and the probability that this quotient be as high if the slope of the line is zero (i.e., the p-value of the test of H0: "the slope is zero" against H1: "the slope is not zero").

> anova(lm(y~x))

Analysis of Variance Table

Response: y

Df Sum Sq Mean Sq F value Pr(>F)

x 1 386.97 386.97 4747 < 2.2e-16 ***

Residuals 98 7.99 0.08

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1More generally, the "anova" function performs a test that compares embedded models (here, a model with an intercept and a slope, and a model with an intercept and no slope).

In a multiple regression, you strive to retain as few variables as possible. In this process, you want to compare models: e.g., compare a model with a lot of variable and a model with fewer variables.

The "anova" function performs that kind of comparison (it does not answer the question "is this model better?" but "are these models significantly different?" -- if they are not significantly different, you will reject the more complicated one).

data(trees) r1 <- lm(Volume ~ Girth, data=trees) r2 <- lm(Volume ~ Girth + Height, data=trees) anova(r1,r2)

The result

Analysis of Variance Table Model 1: Volume ~ Girth Model 2: Volume ~ Girth + Height Res.Df RSS Df Sum of Sq F Pr(>F) 1 29 524.30 2 28 421.92 1 102.38 6.7943 0.01449 * --- Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

tells us that the two models are significantly different with a risk of error under 2%.

Here are a few other examples.

x1 <- rnorm(100) x2 <- rnorm(100) x3 <- rnorm(100) b <- .1* rnorm(100) y <- 1 + x1 + x2 + x3 + b r1 <- lm( y ~ x1 ) r2 <- lm( y ~ x1 + x2 + x3 ) anova(r1,r2) # p-value = 2e-16 y <- 1 + x1 r1 <- lm( y ~ x1 ) r2 <- lm( y ~ x1 + x2 + x3 ) anova(r1,r2) # p-value = 0.25 y <- 1 + x1 + .02*x2 - .02*x3 + b r1 <- lm( y ~ x1 ) r2 <- lm( y ~ x1 + x2 + x3 ) anova(r1,r2) # p-value = 0.10

You can compare more that two model (but always nested models: each model is included in the next).

y <- 1 + x1 + x2 + x3 + b r1 <- lm( y ~ x1 ) r2 <- lm( y ~ x1 + x2 ) r3 <- lm( y ~ x1 + x2 + x3 ) anova(r1,r2,r3) # p-values = 2e-16 (both)

If, in the comparison of two models, you get a very high p-value, i.e., if the two models are not significantly different, you will reject the more complex and retain the simplest.

You can present the computations performed in a regression as an anova table. Furthermore, the idea behind the computations is the same: express the variance of Y as the sum of a variance of a variable affine in X and a residual variance, and minimize this residual variance.

x <- runif(10) y <- 1 + x + .2*rnorm(10) anova(lm(y~x))

Here, the anova tells us that, indeed, Y depend on X, with a risk of error under 1%.

Analysis of Variance Table

Response: y

Df Sum Sq Mean Sq F value Pr(>F)

x 1 0.85633 0.85633 20.258 0.002000 **

Residuals 8 0.33817 0.04227It still works with several predictive variables.

x <- runif(10) y <- runif(10) z <- 1 + x - y + .2*rnorm(10) anova(lm(z~x+y))

The analysis of variance table tells us that z depends on x and y, with a risk of error under 1%.

Analysis of Variance Table

Response: z

Df Sum Sq Mean Sq F value Pr(>F)

x 1 2.33719 2.33719 45.294 0.0002699 ***

y 1 0.73721 0.73721 14.287 0.0068940 **

Residuals 7 0.36120 0.05160Counterintuitive and frightening as it may be, you might notice that the result depends on the order of the parameters...

> anova(lm(z~y+x))

Analysis of Variance Table

Response: z

Df Sum Sq Mean Sq F value Pr(>F)

y 1 2.42444 2.42444 46.985 0.000241 ***

x 1 0.64996 0.64996 12.596 0.009355 **

Residuals 7 0.36120 0.05160In some cases, you can even get contradictory results: depending on the order of the predictive variables, you can find that z sometimes depends on x, sometimes not.

> x <- runif(10)

> y <- runif(10)

> z <- 1 + x + 5*y + .2*rnorm(10)

> anova(lm(z~x+y))

Analysis of Variance Table

Response: z

Df Sum Sq Mean Sq F value Pr(>F)

x 1 0.0104 0.0104 0.1402 0.7192

y 1 7.5763 7.5763 102.1495 1.994e-05 ***

Residuals 7 0.5192 0.0742

> anova(lm(z~y+x))

Analysis of Variance Table

Response: z

Df Sum Sq Mean Sq F value Pr(>F)

y 1 7.1666 7.1666 96.626 2.395e-05 ***

x 1 0.4201 0.4201 5.664 0.04889 *

Residuals 7 0.5192 0.0742

The residuals are the differences between the observed values and the predicted values.

The noise is the difference between the observed values and the actual values: it appears in the model, e.g.

Y = a + b X + noise

Residues and noise are two different things. Even from a statistical point of view, they look different. For instance, their variance is not the same (neither is the shape of their distribution, by the way). The following simulation estimates the variance of the residuals: we get 0.008893307 while the noise variance was 0.01.

a <- 1

b <- -2

s <- .1

n <- 10

N <- 1e6

v <- NULL

for (i in 1:N) {

x <- rnorm(n)

y <- 1-2*x+s*rnorm(n)

v <- append(v, var(lm(y~x)$res))

}

mean(v)You can show that the variance of the residual of the observation i is

sigma^2 * ( 1 - h_i )

where sigma^2 is the noise variance and h_i is the "leverage" of observation i (the i-th diagonal term of t(X)%*%X).

These are the normalized residuals. Their variance is estimated from all the sample.

?rstandard

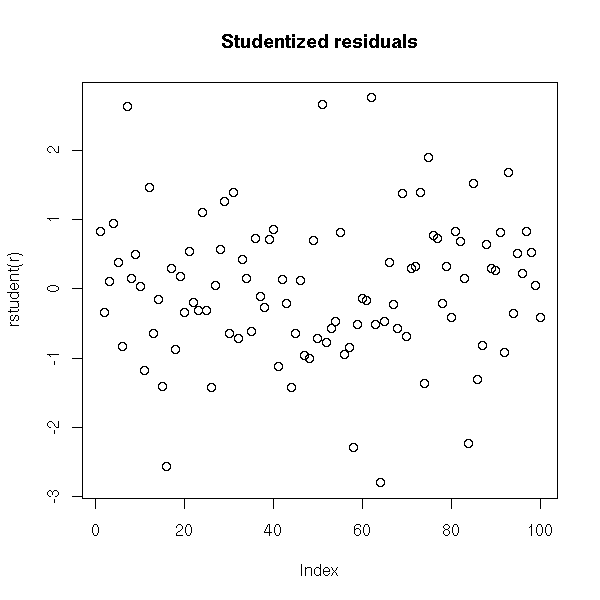

These are still the "normalized" residuals, but this time, we estimate the variance with the sample without the current observation.

?rstudent

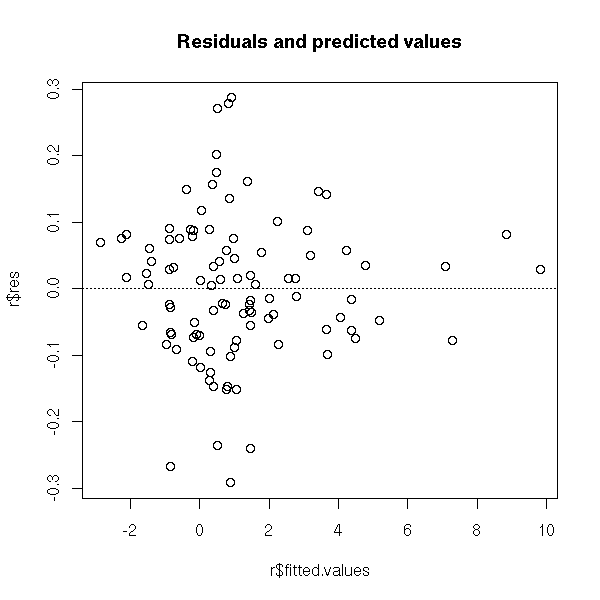

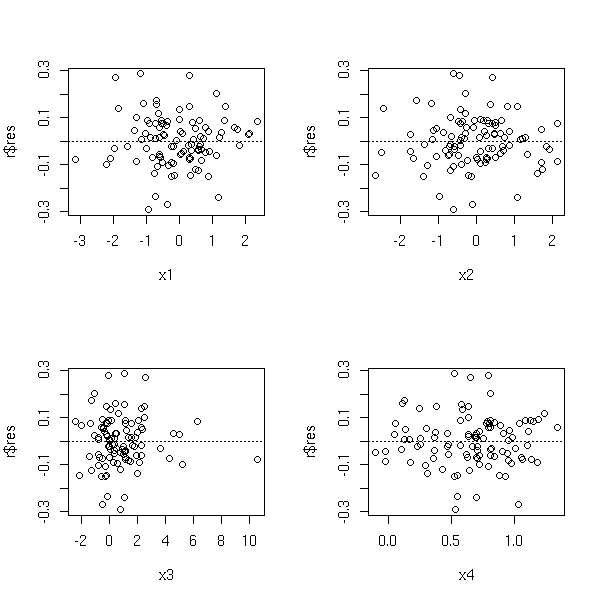

Let us consider the following example.

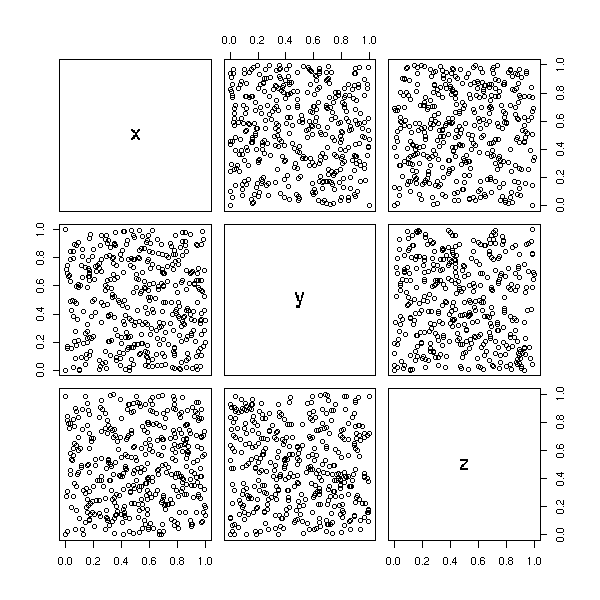

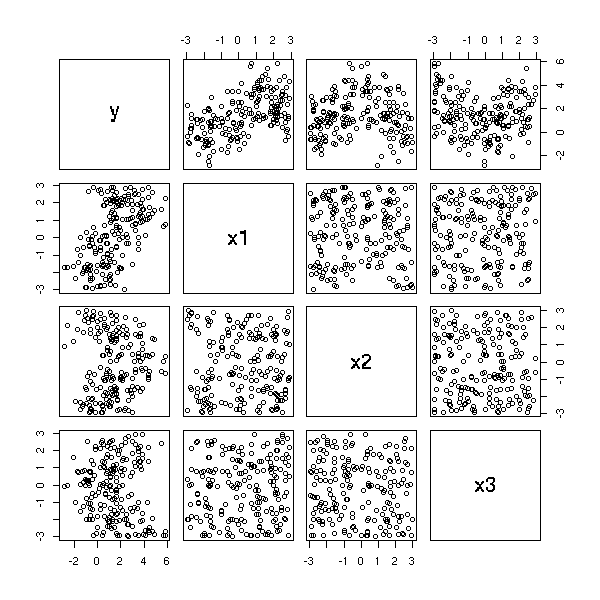

n <- 100 x1 <- rnorm(n) x2 <- rnorm(n) x3 <- x1^2+rnorm(n) x4 <- 1/(1+x2^2)+.2*rnorm(n) y <- 1+x1-x2+x3-x4+.1*rnorm(n) pairs(cbind(x1,x2,x3,x4,y))

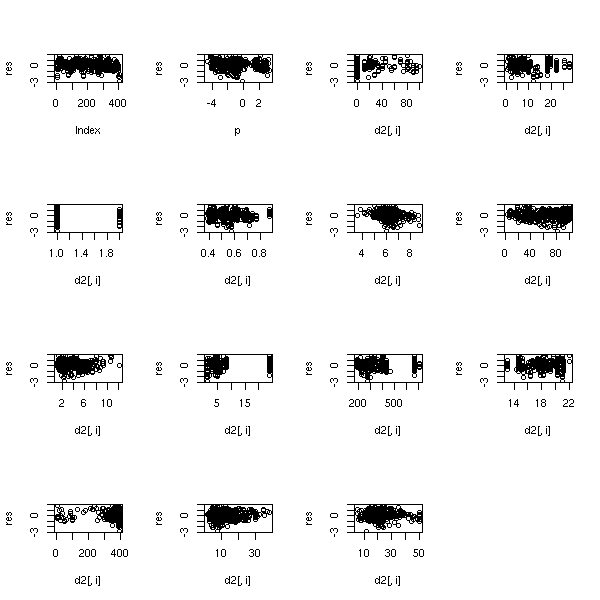

The residuals are full-fledged statistical variables: you can look at them through box-and-whisker plots, histograms, qqplots, etc. You can also plot them as a function of the predicted values, as a function of the various predictive variables or as a function of the observation number.

r <- lm(y~x1+x2+x3+x4) boxplot(r$res, horizontal=T)

hist(r$res)

plot(r$res, main='Residuals')

plot(rstandard(r), main='Standardized residuals')

plot(rstudent(r), main="Studentized residuals")

plot(r$res ~ r$fitted.values,

main="Residuals and predicted values")

abline(h=0, lty=3)

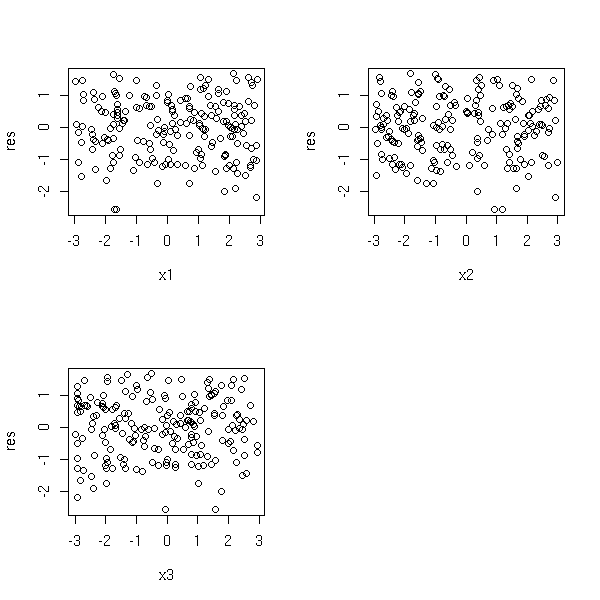

op <- par(mfrow=c(2,2)) plot(r$res ~ x1) abline(h=0, lty=3) plot(r$res ~ x2) abline(h=0, lty=3) plot(r$res ~ x3) abline(h=0, lty=3) plot(r$res ~ x4) abline(h=0, lty=3) par(op)

n <- 100

x1 <- rnorm(n)

x2 <- 1:n

y <- rnorm(1)

for (i in 2:n) {

y <- c(y, y[i-1] + rnorm(1))

}

y <- x1 + y

r <- lm(y~x1+x2) # Or simply: lm(y~x1)

op <- par(mfrow=c(2,1))

plot( r$res ~ x1 )

plot( r$res ~ x2 )

par(op)

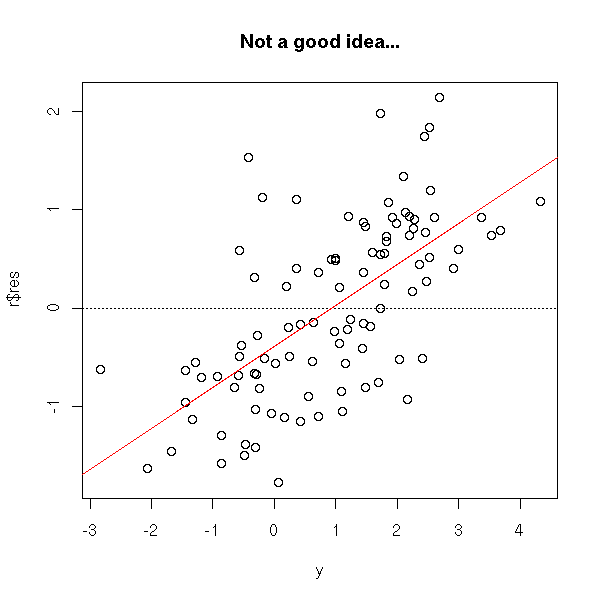

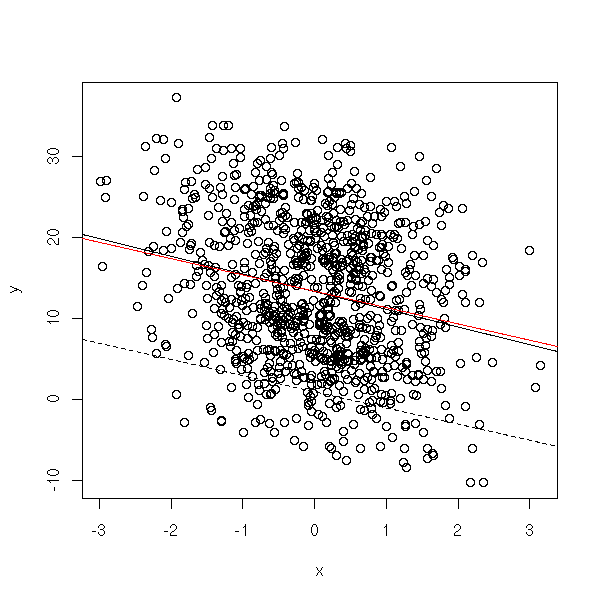

It is usually a bad idea to plot the residuals as a function of the observed values, because the "noise term" in the model appears on both axes, and you (almost) end up plotting this noise as a function of itself...

n <- 100 x <- rnorm(n) y <- 1-x+rnorm(n) r <- lm(y~x) plot(r$res ~ y) abline(h=0, lty=3) abline(lm(r$res~y),col='red') title(main='Not a good idea...')

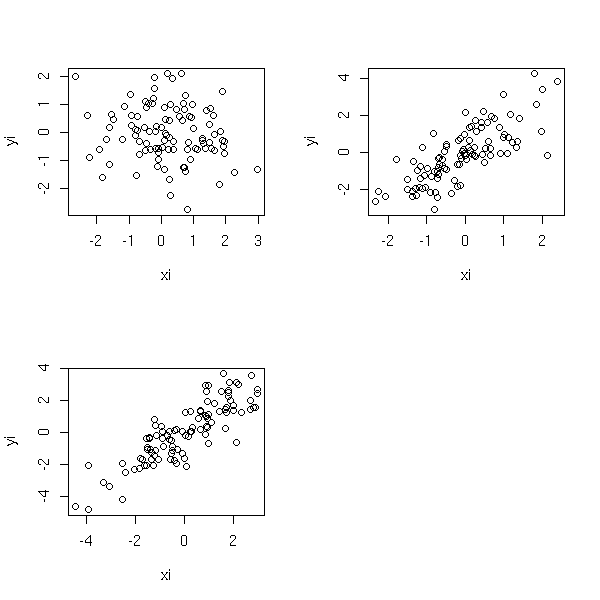

Let us consider a regression situation with two predictive variables X1 and X2 and one variable to predict Y.

You can study the effect of X1 on Y after removing the (linear) effect of X2 on Y: simply regress Y against X2, X1 against X2 and plot the residuals of the former against those of the latter.

Those plots may help you spot influent observations.

partial.regression.plot <- function (y, x, n, ...) {

m <- as.matrix(x[,-n])

y1 <- lm(y ~ m)$res

x1 <- lm(x[,n] ~ m)$res

plot( y1 ~ x1, ... )

abline(lm(y1~x1), col='red')

}

n <- 100

x1 <- rnorm(n)

x2 <- rnorm(n)

x3 <- x1+x2+rnorm(n)

x <- cbind(x1,x2,x3)

y <- x1+x2+x3+rnorm(n)

op <- par(mfrow=c(2,2))

partial.regression.plot(y, x, 1)

partial.regression.plot(y, x, 2)

partial.regression.plot(y, x, 3)

par(op)

There is already an "av.plot" function in the "car" package for this.

library(car) av.plots(lm(y~x1+x2+x3),ask=F)

The "leverage.plots", still in the "car" package, generalizes this idea.

?leverage.plots

It is very similar to partial regression plots: this time, you plot Y, from which you have removed the effects of X2, as a function of X1. It is more efficient than oartial regression to spot non-linearities -- but partial regression is superior when it comes to spotting influent or outlying observations.

my.partial.residual.plot <- function (y, x, i, ...) {

r <- lm(y~x)

xi <- x[,i]

# Y, minus the linear effects of X_j

yi <- r$residuals + r$coefficients[i] * x[,i]

plot( yi ~ xi, ... )

}

n <- 100

x1 <- rnorm(n)

x2 <- rnorm(n)

x3 <- x1+x2+rnorm(n)

x <- cbind(x1,x2,x3)

y <- x1+x2+x3+rnorm(n)

op <- par(mfrow=c(2,2))

my.partial.residual.plot(y, x, 1)

my.partial.residual.plot(y, x, 2)

my.partial.residual.plot(y, x, 3)

par(op)

The "car" or "Design" packages provide functions to plot the partial residuals.

library(car) ?cr.plots ?ceres.plots library(Design) ?plot.lrm.partial ?lrm

The regression might be "too close" to the data, to the point that it becomes irrealistic, that it performs poorly with "out-of-sample" data. The situation is not always as striking and obvious as here. However, if you want to choose, say, a non-linear model (or anyting complex), you must be able to justify it. In particular, compare the number of parameters to estimate with the number of observations...

n <- 10 x <- seq(0,1,length=n) y <- 1-2*x+.3*rnorm(n) plot(spline(x, y, n = 10*n), col = 'red', type='l', lwd=3) points(y~x, pch=16, lwd=3, cex=2) abline(lm(y~x)) title(main='Overfit')

This is mainly common-sense.

In the case of a linear regression, you can compare the determination coefficient (in case of overfit, it is close to 1) and the adjusted determination coefficient (that accounts for overfitting problems).

> summary(lm(y~poly(x,n-1)))

Call:

lm(formula = y ~ poly(x, n - 1))

Residuals:

ALL 10 residuals are 0: no residual degrees of freedom!

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.01196

poly(x, n - 1)1 -1.94091

poly(x, n - 1)2 -0.02303

poly(x, n - 1)3 -0.08663

poly(x, n - 1)4 -0.06938

poly(x, n - 1)5 -0.34501

poly(x, n - 1)6 -0.51048

poly(x, n - 1)7 -0.28479

poly(x, n - 1)8 -0.22273

poly(x, n - 1)9 0.39983

Residual standard error: NaN on 0 degrees of freedom

Multiple R-Squared: 1, Adjusted R-squared: NaN

F-statistic: NaN on 9 and 0 DF, p-value: NA

> summary(lm(y~poly(x,n-2)))

Call:

lm(formula = y ~ poly(x, n - 2))

Residuals:

1 2 3 4 5 6 7 8 9 10

-0.001813 0.016320 -0.065278 0.152316 -0.228473 0.228473 -0.152316 0.065278 -0.016320 0.001813

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.01196 0.12644 0.095 0.940

poly(x, n - 2)1 -1.94091 0.39983 -4.854 0.129

poly(x, n - 2)2 -0.02303 0.39983 -0.058 0.963

poly(x, n - 2)3 -0.08663 0.39983 -0.217 0.864

poly(x, n - 2)4 -0.06938 0.39983 -0.174 0.891

poly(x, n - 2)5 -0.34501 0.39983 -0.863 0.547

poly(x, n - 2)6 -0.51048 0.39983 -1.277 0.423

poly(x, n - 2)7 -0.28479 0.39983 -0.712 0.606

poly(x, n - 2)8 -0.22273 0.39983 -0.557 0.676

Residual standard error: 0.3998 on 1 degrees of freedom

Multiple R-Squared: 0.9641, Adjusted R-squared: 0.6767

F-statistic: 3.355 on 8 and 1 DF, p-value: 0.4If you are reasonable, the determination coefficient and its adjusted version are very close.

> x <- seq(0,1,length=n)

> y <- 1-2*x+.3*rnorm(n)

> summary(lm(y~poly(x,10)))

Call:

lm(formula = y ~ poly(x, 10))

Residuals:

Min 1Q Median 3Q Max

-0.727537 -0.206951 -0.002332 0.177562 0.902353

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.01312 0.02994 -0.438 0.662

poly(x, 10)1 -6.11784 0.29943 -20.431 <2e-16 ***

poly(x, 10)2 -0.11099 0.29943 -0.371 0.712

poly(x, 10)3 -0.04936 0.29943 -0.165 0.869

poly(x, 10)4 -0.28863 0.29943 -0.964 0.338

poly(x, 10)5 -0.15348 0.29943 -0.513 0.610

poly(x, 10)6 0.12146 0.29943 0.406 0.686

poly(x, 10)7 0.05066 0.29943 0.169 0.866

poly(x, 10)8 0.09707 0.29943 0.324 0.747

poly(x, 10)9 0.07554 0.29943 0.252 0.801

poly(x, 10)10 0.42494 0.29943 1.419 0.159

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.2994 on 89 degrees of freedom

Multiple R-Squared: 0.8256, Adjusted R-squared: 0.8059

F-statistic: 42.12 on 10 and 89 DF, p-value: < 2.2e-16

> summary(lm(y~poly(x,1)))

...

Multiple R-Squared: 0.8182, Adjusted R-squared: 0.8164

...

If the sample is too small, you will not be able to estimate much, In such situationm you have to restrict yourself to simple (simplistic) models, such as linear models, becaus the overfitting risk is too high.

TODO Explain what you can do if there are more variables than observations. The naive approach will not work: n <- 100 k <- 500 x <- matrix(rnorm(n*k), nr=n, nc=k) y <- apply(x, 1, sum) lm(y~x) svm

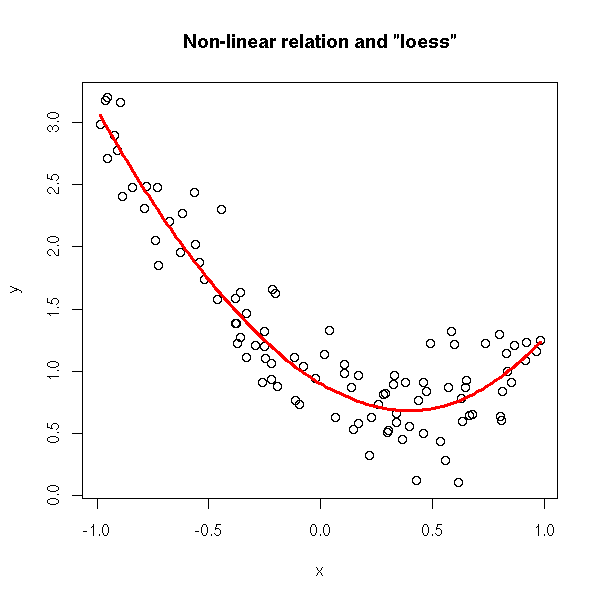

Sometimes, the model is too simplistic.

x <- runif(100, -1, 1) y <- 1-x+x^2+.3*rnorm(100) plot(y~x) abline(lm(y~x), col='red')

On the preceding plot, it is not obvious, but you can spot the problem if you try to see if a polynomial model would not be better,

> summary(lm(y~poly(x,10)))

...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.29896 0.02841 45.725 < 2e-16 ***

poly(x, 10)1 -4.98079 0.28408 -17.533 < 2e-16 ***

poly(x, 10)2 2.53642 0.28408 8.928 5.28e-14 ***

poly(x, 10)3 -0.06738 0.28408 -0.237 0.813

poly(x, 10)4 -0.15583 0.28408 -0.549 0.585

poly(x, 10)5 0.15112 0.28408 0.532 0.596

poly(x, 10)6 0.04512 0.28408 0.159 0.874

poly(x, 10)7 -0.29056 0.28408 -1.023 0.309

poly(x, 10)8 -0.39384 0.28408 -1.386 0.169

poly(x, 10)9 -0.25763 0.28408 -0.907 0.367

poly(x, 10)10 -0.09940 0.28408 -0.350 0.727or by using splines (or any other regularization method) an by looking at the result,

plot(y~x) lines(smooth.spline(x,y), col='red', lwd=2) title(main="Splines can help you spot non-linear relations")

plot(y~x) lines(lowess(x,y), col='red', lwd=2) title(main='Non-linear relations and "lowess"')

plot(y~x) xx <- seq(min(x),max(x),length=100) yy <- predict( loess(y~x), data.frame(x=xx) ) lines(xx,yy, col='red', lwd=3) title(main='Non-linear relation and "loess"')

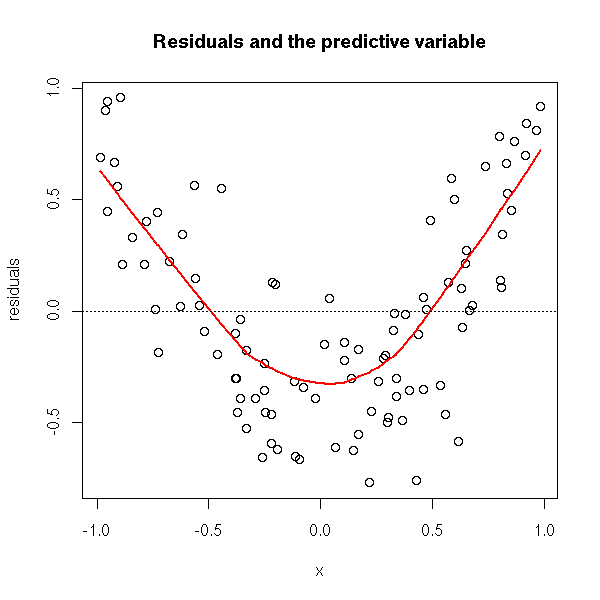

or by looking at the residuals with any regularization method (plot the residuals as a function of the predicted values or as a function of the predictive variables).

r <- lm(y~x)

plot(r$residuals ~ r$fitted.values,

xlab='predicted values', ylab='residuals',

main='Residuals and predicted values')

lines(lowess(r$fitted.values, r$residuals), col='red', lwd=2)

abline(h=0, lty=3)

plot(r$residuals ~ x,

xlab='x', ylab='residuals',

main='Residuals and the predictive variable')

lines(lowess(x, r$residuals), col='red', lwd=2)

abline(h=0, lty=3)

In some (rare) cases, you have several observations for the same value of the predictive variables: you can then perform the following test.

x <- rep(runif(10, -1, 1), 10) y <- 1-x+x^2+.3*rnorm(100) r1 <- lm(y ~ x) r2 <- lm(y ~ factor(x)) anova(r1,r2)

Both models should give the same predictions: here, it is not the case.

Analysis of Variance Table Model 1: y ~ x Model 2: y ~ factor(x) Res.Df RSS Df Sum of Sq F Pr(>F) 1 98 19.5259 2 90 6.9845 8 12.5414 20.201 < 2.2e-16 *** --- Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Let us try with a linear relation.

x <- rep(runif(10, -1, 1), 10) y <- 1-x+.3*rnorm(100) r1 <- lm(y ~ x) r2 <- lm(y ~ factor(x)) anova(r1,r2)

The models are not significantly different.

Analysis of Variance Table Model 1: y ~ x Model 2: y ~ factor(x) Res.Df RSS Df Sum of Sq F Pr(>F) 1 98 9.6533 2 90 8.9801 8 0.6733 0.8435 0.5671

# Non-linearity library(lmtest) ?harvtest ?raintest ?reset

The "strucchange" package detects structural changes (very often with time series, e.g., in econometry). There is a structural change when (for instance) the linear model is correct, but its coefficients change for time to time. If you know where the change occurs, you just split your sample into several chuks and perform a regression on each (to make sure that a change occured, you can test the equality of the coefficients in the chunks).

But usually, you do not know where the changes occur. You can try with moving window to find the most probable date for the structural change (you can take a window with a constant width, or one with a constrant number of observations).

TODO: an example # Structural change library(strucchange) efp(..., type="Rec-CUSUM") efp(..., type="OLS-MOSUM") plot(efp(...)) sctest(efp(...)) TODO: an example Fstat(...) plot(Fstat(...)) sctest(Fstat(...))

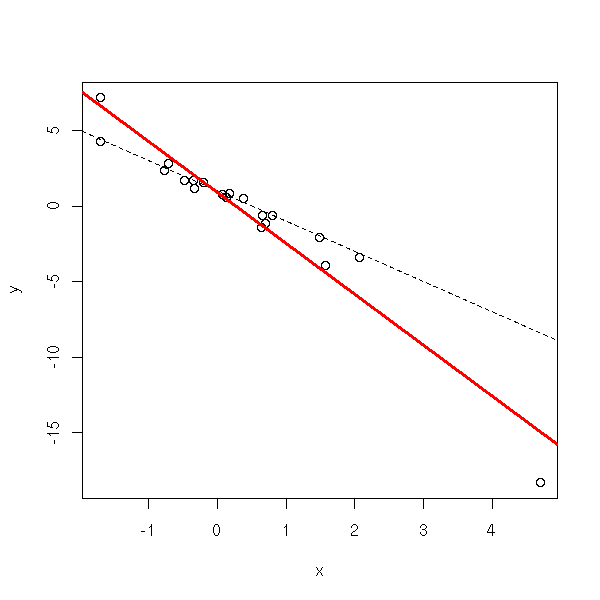

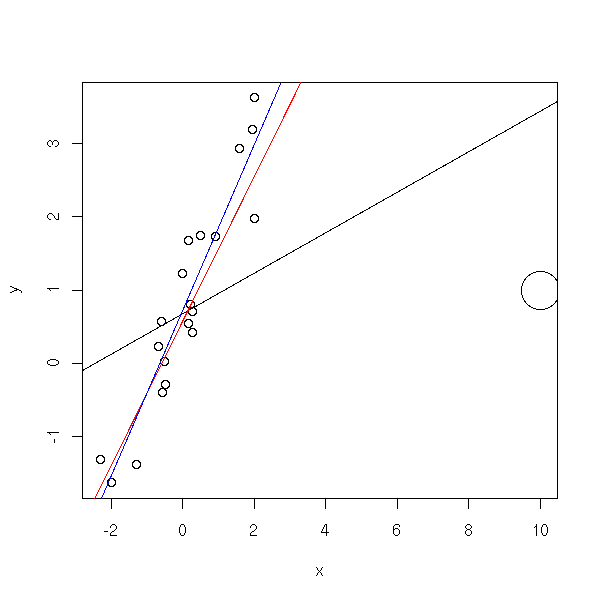

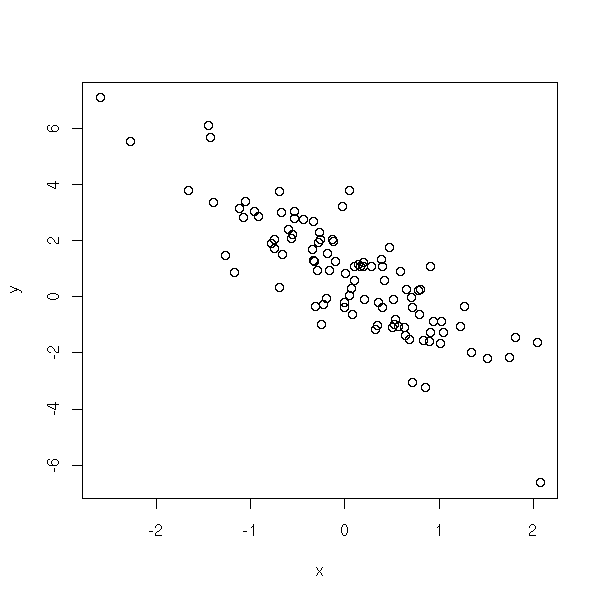

Some points might bear an abnormally high influence on the regression results. SOmetimes, they come from mistakes (they should be identified and corrected), sometimes, they are perfectly normal but extreme. The leverage effect can yield incorrect results.

n <- 20

done.outer <- F

while (!done.outer) {

done <- F

while(!done) {

x <- rnorm(n)

done <- max(x)>4.5

}

y <- 1 - 2*x + x*rnorm(n)

r <- lm(y~x)

done.outer <- max(cooks.distance(r))>5

}

plot(y~x)

abline(1,-2,lty=2)

abline(lm(y~x),col='red',lwd=3)

lm(y~x)$coef

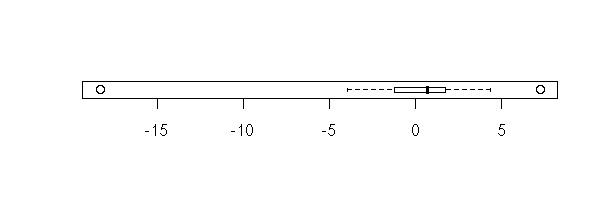

The first thing to do, even before starting the regression, is to look at the variables one at a time.

boxplot(x, horizontal=T)

stripchart(x, method='jitter')

hist(x, col='light blue', probability=T) lines(density(x), col='red', lwd=3)

Same for y.

boxplot(y, horizontal=T)

stripchart(y, method='jitter')

hist(y, col='light blue', probability=T) lines(density(y), col='red', lwd=3)

Here, there is an extreme point. AS there is a single one, we might be tempted to remove it -- if there were several, we would rather try to transform the data.

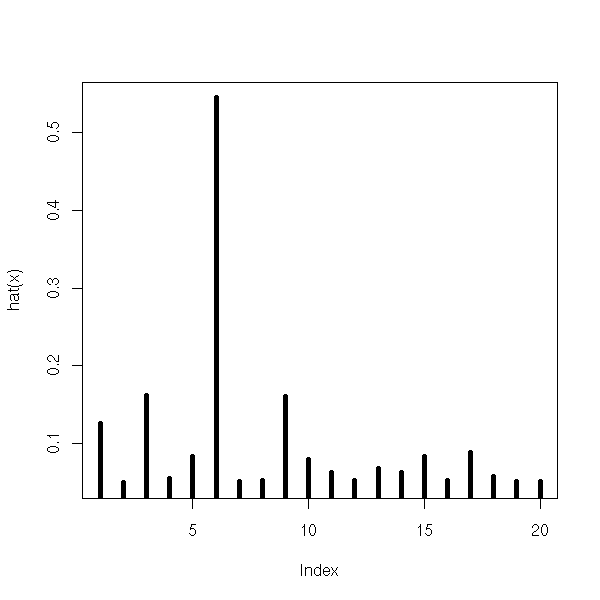

There is a measure of the "extremeness" of a point -- its leverage --: the diagonal elements of the hat matrix

H = X (X' X)^-1 X'

It is called "hat matrix" because

\hat Y = H Y.

Those values tells us how an error on a predictive variable prapagates to the predictions.

The leverages are between 1/n and 1. Under 0.2, it is fine. You will not that this uses the predictive variable X but not the variable to predict Y.

plot(hat(x), type='h', lwd=5)

You can also measure the effect of each observation on the regression: remove the point, compute the regression, the predicted values and compare them with the values predicted from the whole sample:

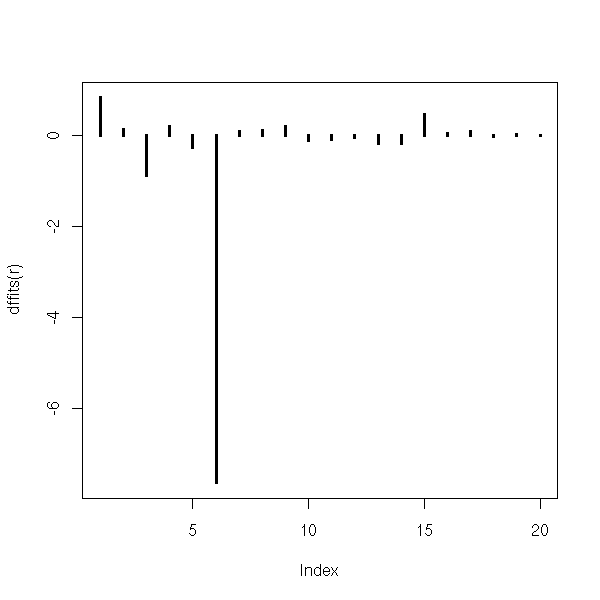

plot(dffits(r),type='h',lwd=3)

You can also compare the coefficients:

plot(dfbetas(r)[,1],type='h',lwd=3)

plot(dfbetas(r)[,2],type='h',lwd=3)

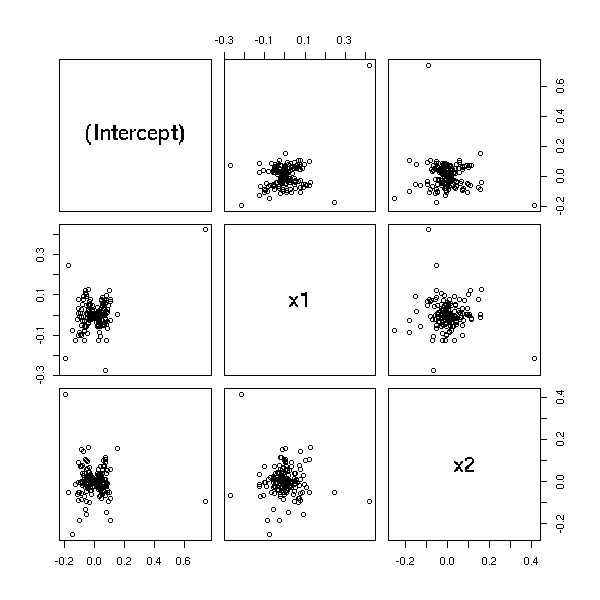

In higher dimensions, you can plot the variation of a coefficient as a function of the variation of other coefficients.

n <- 200 x1 <- rnorm(n) x2 <- rnorm(n) yy <- x1 - x2 + rnorm(n) yy[1] <- 10 r <- lm(yy~x1+x2) pairs(dfbetas(r))

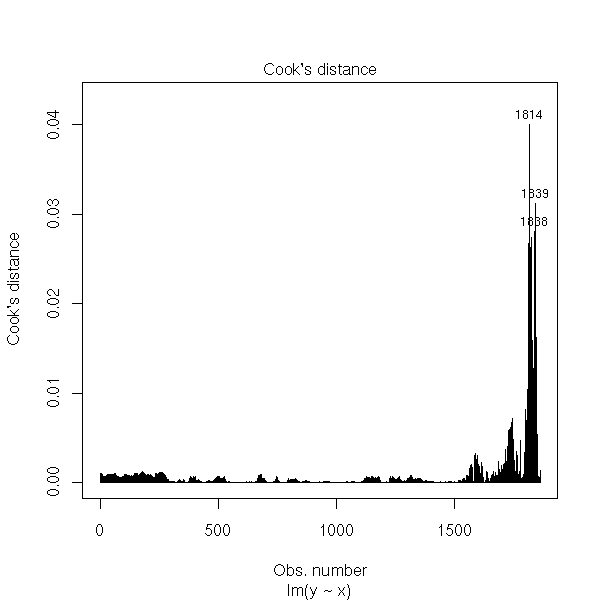

Cook's distance measures the effect of an observation on the regression as a whole. You should start to be cautious when D > 4/n.

cd <- cooks.distance(r) plot(cd,type='h',lwd=3)

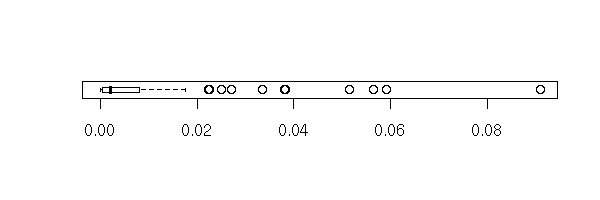

You can also have a look at the box-and-whiskers plot, the scatterplot, the histogram, the density of Cook's distances (for a moment, we put aside our pathological example).

n <- 100 xx <- rnorm(n) yy <- 1 - 2 * x + rnorm(n) rr <- lm(yy~xx) cd <- cooks.distance(rr) plot(cd,type='h',lwd=3)

boxplot(cd, horizontal=T)

stripchart(cd, method='jitter')

hist(cd, probability=T, breaks=20, col='light blue')

plot(density(cd), type='l', col='red', lwd=3)

qqnorm(cd) qqline(cd, col='red')

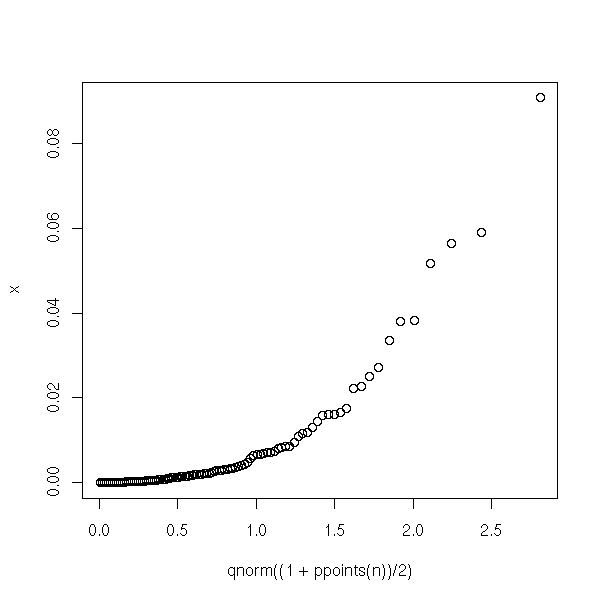

Some suggest to compare the distribution of Cook's distance with a half-gaussian distribution. People often do that for variables whose values are all positive -- but it does not look like a half-gaussian!

half.qqnorm <- function (x) {

n <- length(x)

qqplot(qnorm((1+ppoints(n))/2), x)

}

half.qqnorm(cd)

You can use those values to spot the most important points on the scatterplot pr on a residual plot.

m <- max(cooks.distance(r)) plot(y~x, cex=1+5*cooks.distance(r)/m)

cd <- cooks.distance(r) # rescaled Cook's distance rcd <- (99/4) * cd*(cd+1)^2 rcd[rcd>100] <- 100 plot(r$res~r$fitted.values, cex=1+.05*rcd) abline(h=0,lty=3)

You can also use colors.

m <- max(cd)

plot(r$res,

cex=1+5*cd/m,

col=heat.colors(100)[ceiling(70*cd/m)],

pch=16,

)

points(r$res, cex=1+5*cd/m)

abline(h=0,lty=3)

plot(r$res,

cex=1+.05*rcd,

col=heat.colors(100)[ceiling(rcd)],

pch=16,

)

points(r$res, cex=1+.05*rcd)

abline(h=0,lty=3)

The following example should be more colorful.

n <- 100

x <- rnorm(n)

y <- 1 - 2*x + rnorm(n)

r <- lm(y~x)

cd <- cooks.distance(r)

m <- max(cd)

plot(r$res ~ r$fitted.values,

cex=1+5*cd/m,

col=heat.colors(100)[ceiling(70*cd/m)],

pch=16,

)

points(r$res ~ r$fitted.values, cex=1+5*cd/m)

abline(h=0,lty=3)

It might be prettier on a black background.

op <- par(fg='white', bg='black',

col='white', col.axis='white',

col.lab='white', col.main='white',

col.sub='white')

plot(r$res ~ r$fitted.values,

cex=1+5*cd/m,

col=heat.colors(100)[ceiling(100*cd/m)],

pch=16,

)

abline(h=0,lty=3)

par(op)

You can also have a look at the "lm.influence", "influence.measures", "ls.diag" functions.

TODO: delete the following plot?

# With Cook's distance x <- rnorm(20) y <- 1 + x + rnorm(20) x <- c(x,10) y <- c(y,1) r <- lm(y~x) d <- cooks.distance(r) d <- (99/4)*d*(d+1)^2 + 1 d[d>100] <- 100 d[d<20] <- 20 d <- d/20 plot( y~x, cex=d ) abline(r) abline(coef(line(x,y)), col='red') abline(lm(y[1:20]~x[1:20]),col='blue')

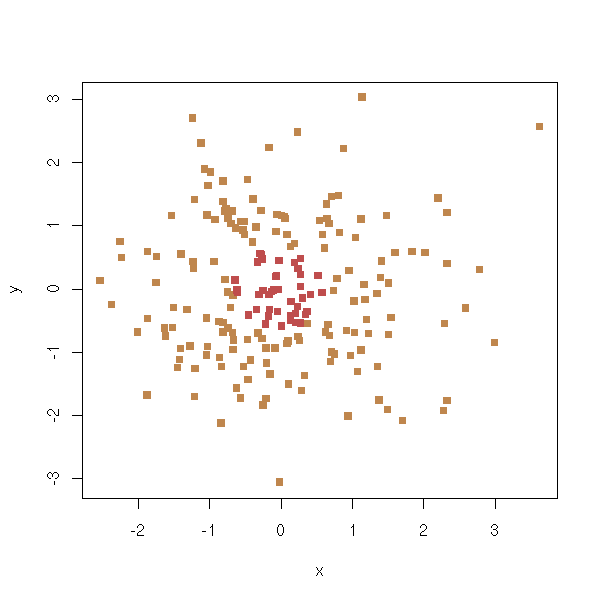

When there is not a single extreme value but several, it is trickier to spot. You can try with a resistant regression, such as a trimmed regression (lts).

Usually, those multiple extreme values do not appear at random (as would an isolated outlier), but have a real meaning -- as such, they should be dealt with. You can try to spot them with unsupervised learning algorithms.

?hclust ?kmeans TODO: give an example

A cluster of extreme values can also be a sign that the model is not appropriate.

TODO: write up (and model) the following example (mixture) n <- 200 s <- .2 x <- runif(n) y1 <- 1 - 2 * x + s*rnorm(n) y2 <- 2 * x - 1 + s*rnorm(n) y <- ifelse( sample(c(T,F),n,replace=T,prob=c(.25,.75)), y1, y2 ) plot(y~x) abline(1,-2,lty=3) abline(-1,2,lty=3)

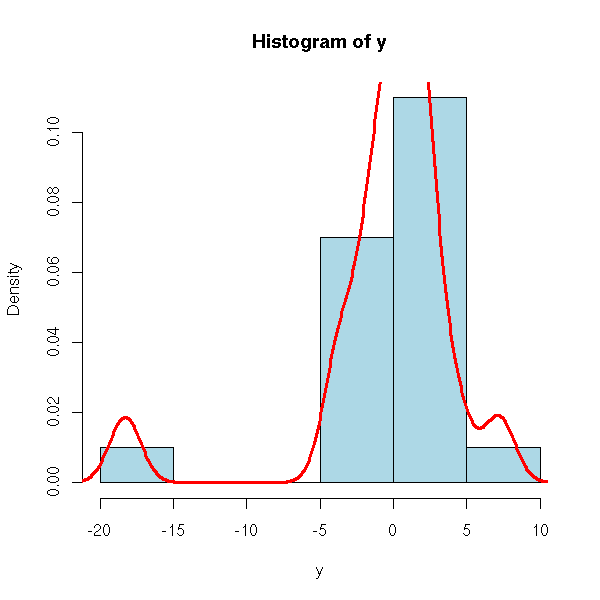

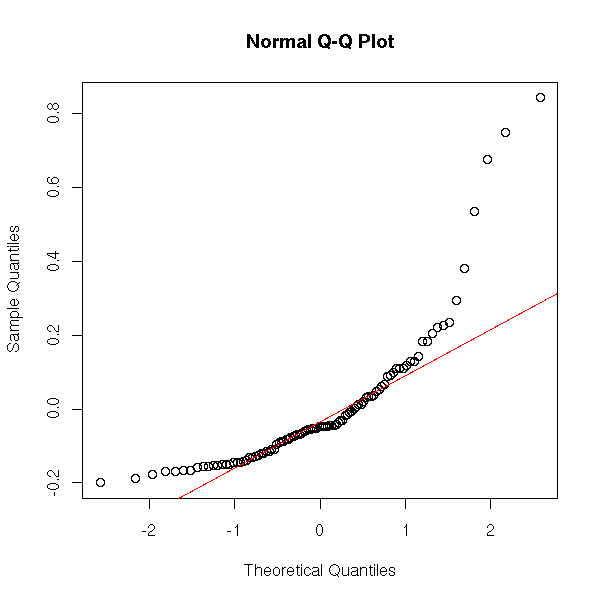

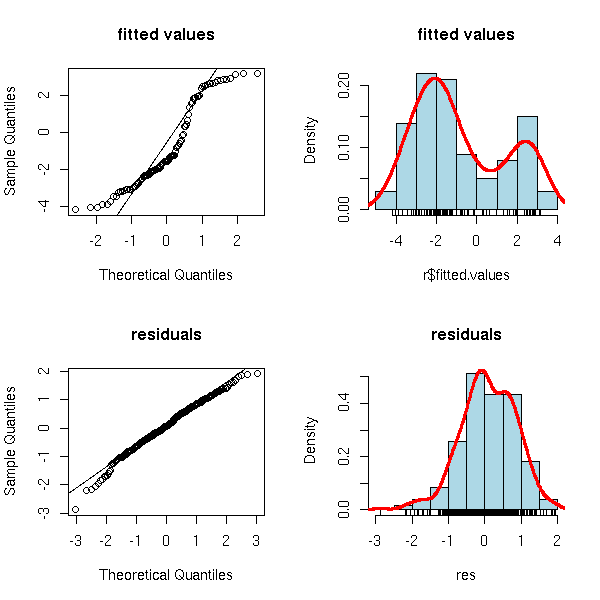

If the residuals are not gaussian, the least squares estimators are not optimal (some robust estimators are better, even if they are biased) and, even worse, all the tests, variance computations, confidence interval computations are wrong.

However, if the residuals are less dispersed that with a gaussian distribution or, to a lesser extent, if the sample is very large, you can forget those problems.

You can spot non-gaussian residuals with histograms, box-and-whiskers plots (boxplots) or quantile-quantile plots.

x <- runif(100) y <- 1 - 2*x + .3*exp(rnorm(100)-1) r <- lm(y~x) boxplot(r$residuals, horizontal=T)

hist(r$residuals, breaks=20, probability=T, col='light blue')

lines(density(r$residuals), col='red', lwd=3)

f <- function(x) {

dnorm(x,

mean=mean(r$residuals),

sd=sd(r$residuals),

)

}

curve(f, add=T, col="red", lwd=3, lty=2)

Do not forget the quantile-quantile plots.

qqnorm(r$residuals) qqline(r$residuals, col='red')

Let us look at what happens with non-gaussian residuals. We shall consider a rather extreme situation: a Cauchy variable with a hole in the middle and a rather small sample.

rcauchy.with.hole <- function (n) {

x <- rcauchy(n)

x[x>0] <- 10+x[x>0]

x[x<0] <- -10+x[x<0]

x

}

n <- 20

x <- rcauchy(n)

y <- 1 - 2*x + .5*rcauchy.with.hole(n)

plot(y~x)

abline(1,-2)

r <- lm(y~x)

abline(r, col='red')

op <- par(mfrow=c(2,2))

hist(r$residuals, breaks=20, probability=T, col='light blue')

lines(density(r$residuals), col='red', lwd=3)

f <- function(x) {

dnorm(x,

mean=mean(r$residuals),

sd=sd(r$residuals),

)

}

curve(f, add=T, col="red", lwd=3, lty=2)

qqnorm(r$residuals)

qqline(r$residuals, col='red')

plot(r$residuals ~ r$fitted.values)

plot(r$residuals ~ x)

par(op)

Let us compute some forecasts.

n <- 10000 xp <- runif(n,-50,50) yp <- predict(r, data.frame(x=xp), interval="prediction") yr <- 1 - 2*xp + .5*rcauchy.with.hole(n) sum( yr < yp[,3] & yr > yp[,2] )/n

We get 0.9546, i.e., we are in the prediction interval in (more than) 5% of the cases -- but the prediction interval is huge: it tells us that we cannot predict much.

Let us try with a smaller sample.

n <- 5 x <- rcauchy(n) y <- 1 - 2*x + .5*rcauchy.with.hole(n) r <- lm(y~x) n <- 10000 xp <- sort(runif(n,-50,50)) yp <- predict(r, data.frame(x=xp), interval="prediction") yr <- 1 - 2*xp + .5*rcauchy.with.hole(n) sum( yr < yp[,3] & yr > yp[,2] )/n

Even worse: 0.9975.

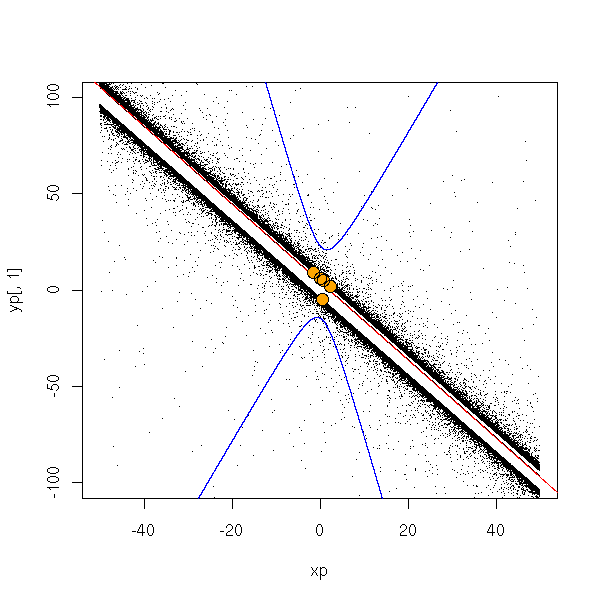

To see what happens, let us plot some of these points.

done <- F

while(!done) {

# A situation where the prediction interval is not too

# large, so that it appears on the plot.

n <- 5

x <- rcauchy(n)

y <- 1 - 2*x + .5*rcauchy.with.hole(n)

r <- lm(y~x)

n <- 100000

xp <- sort(runif(n,-50,50))

yp <- predict(r, data.frame(x=xp), interval="prediction")

done <- ( yp[round(n/2),2] > -75 & yp[round(n/2),3] < 75 )

}

yr <- 1 - 2*xp + .5*rcauchy.with.hole(n)

plot(yp[,1]~xp, type='l',

xlim=c(-50,50), ylim=c(-100,100))

points(yr~xp, pch='.')

lines(xp, yp[,2], col='blue')

lines(xp, yp[,3], col='blue')

abline(r, col='red')

points(y~x, col='orange', pch=16, cex=1.5)

points(y~x, cex=1.5)

done <- F

while(!done) {

# Even worse: the sign of the slope is incorrect

n <- 5

x <- rcauchy(n)

y <- 1 - 2*x + .5*rcauchy.with.hole(n)

r <- lm(y~x)

n <- 100000

xp <- sort(runif(n,-50,50))

yp <- predict(r, data.frame(x=xp), interval="prediction")

print(r$coef[2])

done <- ( yp[round(n/2),2] > -75 &

yp[round(n/2),3] < 75 &

r$coef[2]>0 )

}

yr <- 1 - 2*xp + .5*rcauchy.with.hole(n)

plot(yp[,1]~xp, type='l',

xlim=c(-50,50), ylim=c(-100,100))

points(yr~xp, pch='.')

lines(xp, yp[,2], col='blue')

lines(xp, yp[,3], col='blue')

abline(r, col='red')

points(y~x, col='orange', pch=16, cex=1.5)

points(y~x, cex=1.5)

We see that the prediction interval is huge if there are several outliers. Let us try with smaller values.

n <- 10000 xp <- sort(runif(n,-.1,.1)) yp <- predict(r, data.frame(x=xp), interval="prediction") yr <- 1 - 2*xp + .5*rcauchy.with.hole(n) sum( yr < yp[,3] & yr > yp[,2] )/n

We get 0.9932...

done <- F

while (!done) {

n <- 5

x <- rcauchy(n)

y <- 1 - 2*x + .5*rcauchy.with.hole(n)

r <- lm(y~x)

done <- T

}

n <- 10000

xp <- sort(runif(n,-2,2))

yp <- predict(r, data.frame(x=xp), interval="prediction")

yr <- 1 - 2*xp + .5*rcauchy.with.hole(n)

plot(c(xp,x), c(yp[,1],y), pch='.',

xlim=c(-2,2), ylim=c(-50,50) )

lines(yp[,1]~xp)

abline(r, col='red')

lines(xp, yp[,2], col='blue')

lines(xp, yp[,3], col='blue')

points(yr~xp, pch='.')

points(y~x, col='orange', pch=16)

points(y~x)

done <- F

essais <- 0

while (!done) {

n <- 5

x <- rcauchy(n)

y <- 1 - 2*x + .5*rcauchy.with.hole(n)

r <- lm(y~x)

yp <- predict(r, data.frame(x=2), interval='prediction')

done <- yp[3]<0

essais <- essais+1

}

print(essais) # Around 20 or 30

n <- 10000

xp <- sort(runif(n,-2,2))

yp <- predict(r, data.frame(x=xp), interval="prediction")

yr <- 1 - 2*xp + .5*rcauchy.with.hole(n)

plot(c(xp,x), c(yp[,1],y), pch='.',

xlim=c(-2,2), ylim=c(-50,50) )

lines(yp[,1]~xp)

points(yr~xp, pch='.')

abline(r, col='red')

lines(xp, yp[,2], col='blue')

lines(xp, yp[,3], col='blue')

points(y~x, col='orange', pch=16)

points(y~x)

done <- F

e <- NULL

for (i in 1:100) {

essais <- 0

done <- F

while (!done) {

n <- 5

x <- rcauchy(n)

y <- 1 - 2*x + .5*rcauchy.with.hole(n)

r <- lm(y~x)

yp <- predict(r, data.frame(x=2), interval='prediction')

done <- yp[3]<0

essais <- essais+1

}

e <- append(e,essais)

}

hist(e, probability=T, col='light blue')

lines(density(e), col='red', lwd=3)

abline(v=median(e), lty=2, col='red', lwd=3)

> mean(e) [1] 25.8 > median(e) [1] 19

In short, to have the most incorrect prediction intervals, take large values of x, bit not too large (close to 0, the predictions are correct, away from 0, the prediction intervals are huge).

I wanted to prove, here, on an example, that non gaussian residuals produces confidence intervals too small and thus incorrect results. I was wrong: the confidence intervals are correct but very large, to the point that the forecasts are useless.

Exercice: do the same with other distributions (Cauchy, uniform, etc.), either for the noise or for the variables.

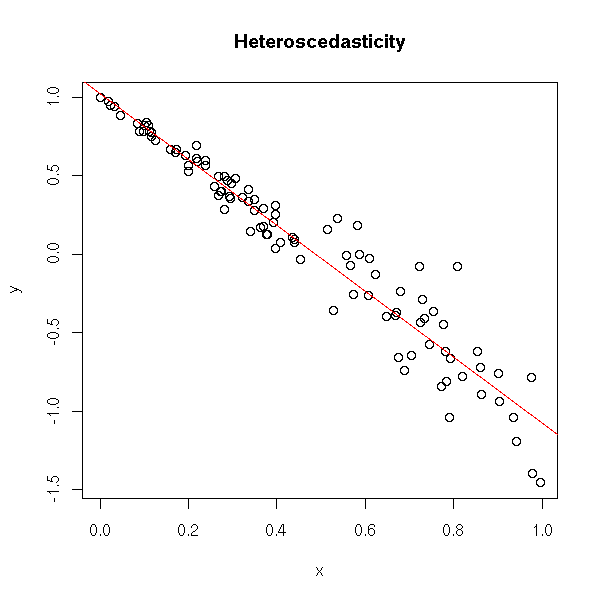

For the least squares estimators to be optimal and for the test results to be correct, we had to assume (among other hypotheses) that the variance of the noise was constant. If it is not, it is said toe be heteroscedastic.

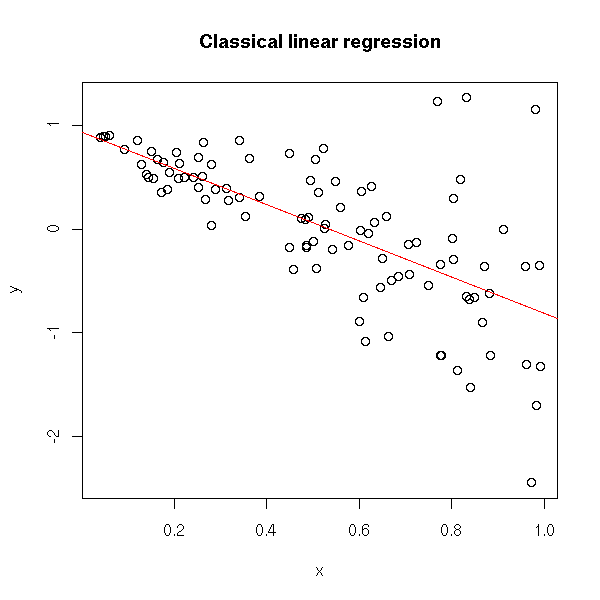

x <- runif(100) y <- 1 - 2*x + .3*x*rnorm(100) plot(y~x) r <- lm(y~x) abline(r, col='red') title(main="Heteroscedasticity")

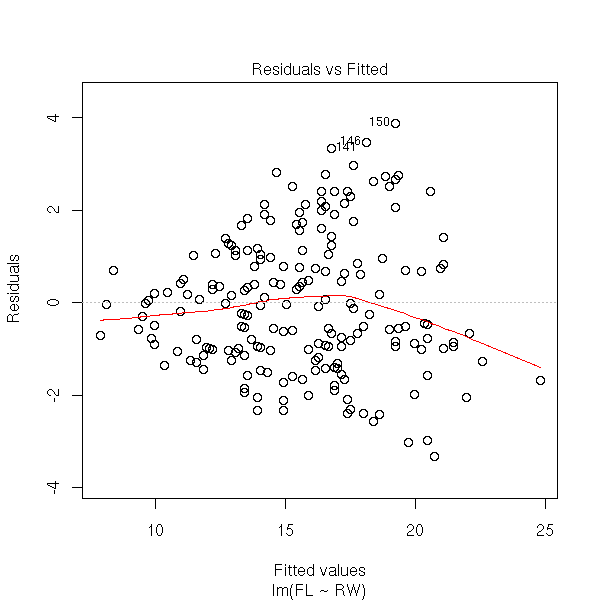

You can spot the problem on the residuals.

plot(r$residuals ~ r$fitted.values)

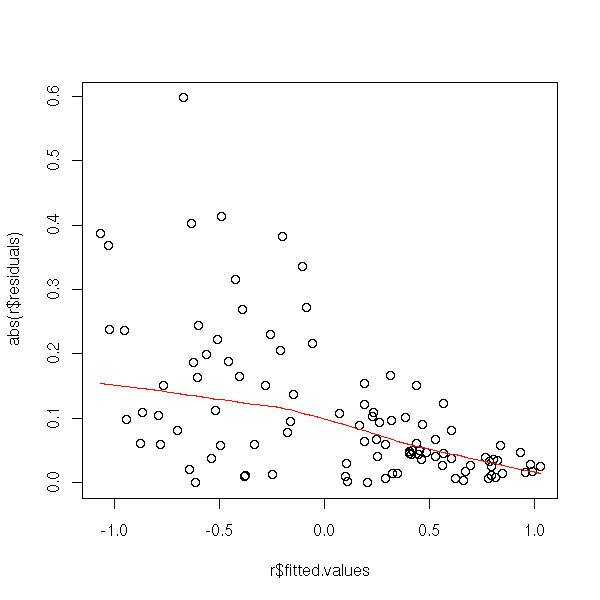

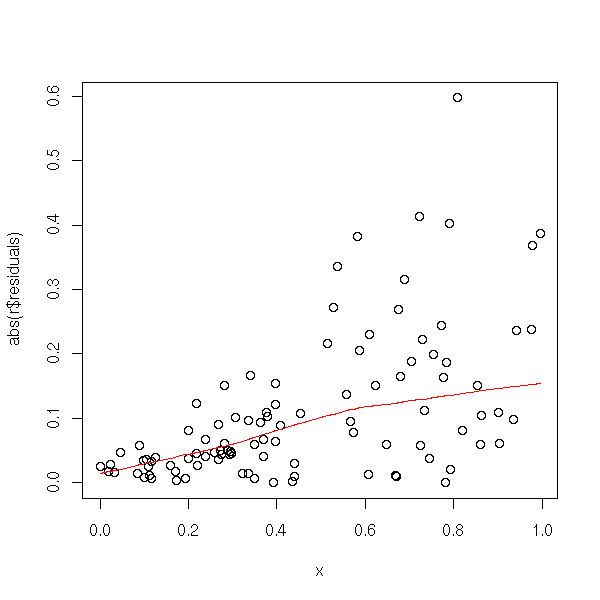

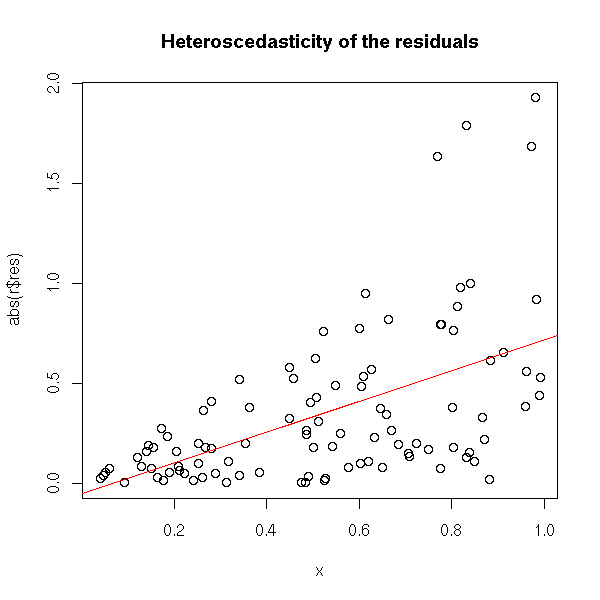

Or, more precisely, on their absolute value, on which you can perform a non-linear regression.

plot(abs(r$residuals) ~ r$fitted.values) lines(lowess(r$fitted.values, abs(r$residuals)), col='red')

plot(abs(r$residuals) ~ x) lines(lowess(x, abs(r$residuals)), col='red')

Here is a concrete example.

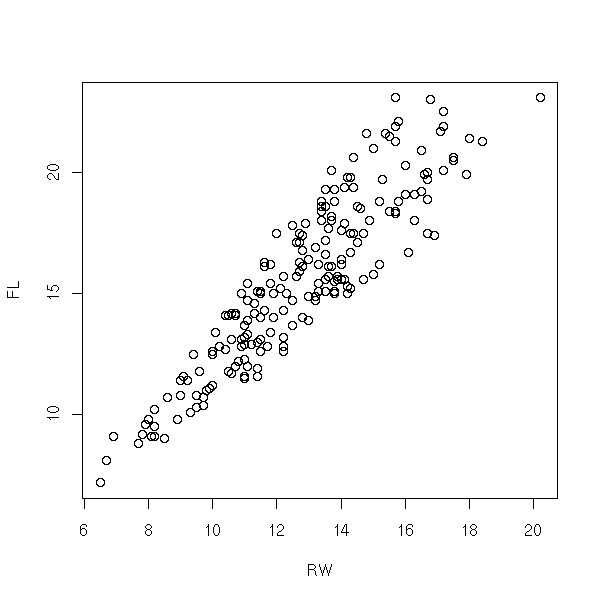

data(crabs) plot(FL~RW, data=crabs)

r <- lm(FL~RW, data=crabs) plot(r, which=1)

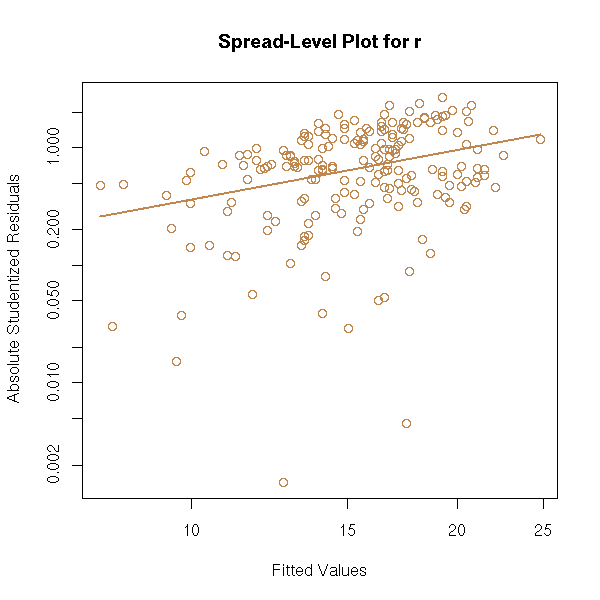

plot(r, which=3, panel = panel.smooth)

The "spread.level.plot" from the "car" package has the same aim: it plots the absolute value of the residuals as a function of the predicted values, on logarithmic scales and suggests a transformation to get rid of heteroscedasticity.

library(car) spread.level.plot(r)

You can also see the problem in a more computational way, by splitting the sample into two parts and performing a test to see if the two parts have the same variance.

n <- length(crabs$RW) m <- ceiling(n/2) o <- order(crabs$RW) r <- lm(FL~RW, data=crabs) x <- r$residuals[o[1:m]] y <- r$residuals[o[(m+1):n]] var.test(y,x) # p-value = 1e-4

Let us see, on an example, what the effects of heteroscedasticity are.

x <- runif(100) y <- 1 - 2*x + .3*x*rnorm(100) r <- lm(y~x) xp <- runif(10000,0,.1) yp <- predict(r, data.frame(x=xp), interval="prediction") yr <- 1 - 2*xp + .3*xp*rnorm(100) sum( yr < yp[,3] & yr > yp[,2] )/n

We get 1: where the variance is small, the confidence intervals are too small.

x <- runif(100) y <- 1 - 2*x + .3*x*rnorm(100) r <- lm(y~x) xp <- runif(10000,.9,1) yp <- predict(r, data.frame(x=xp), interval="prediction") yr <- 1 - 2*xp + .3*xp*rnorm(100) sum( yr < yp[,3] & yr > yp[,2] )/n

We get 0.67: where the variance is higher, the confidence intervals are too small.

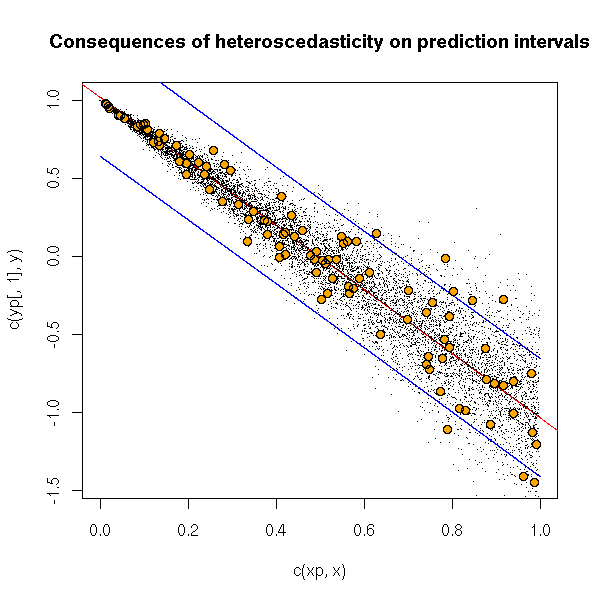

We can see this graphically.

x <- runif(100) y <- 1 - 2*x + .3*x*rnorm(100) r <- lm(y~x) n <- 10000 xp <- sort(runif(n,)) yp <- predict(r, data.frame(x=xp), interval="prediction") yr <- 1 - 2*xp + .3*xp*rnorm(n) plot(c(xp,x), c(yp[,1],y), pch='.') lines(yp[,1]~xp) abline(r, col='red') lines(xp, yp[,2], col='blue') lines(xp, yp[,3], col='blue') points(yr~xp, pch='.') points(y~x, col='orange', pch=16) points(y~x) title(main="Consequences of heteroscedasticity on prediction intervals")

The simplest way to get rid of heteroscedasticity is (when it works) to transform the data. If it is possible, find a transformation of the data that will both have it look gaussian and get rid of heteroscedasticity.

Generalized least squaes allow you to perform a regression with heteroscedastic data, but you have to know how the variance varies.

TODO: put this example later? n <- 100 x <- runif(n) y <- 1 - 2*x + x*rnorm(n) plot(y~x) r <- lm(y~x) abline(r, col='red') title(main="Classical linear regression")

plot(abs(r$res) ~ x) r2 <- lm( abs(r$res) ~ x ) abline(r2, col="red") title(main="Heteroscedasticity of the residuals")

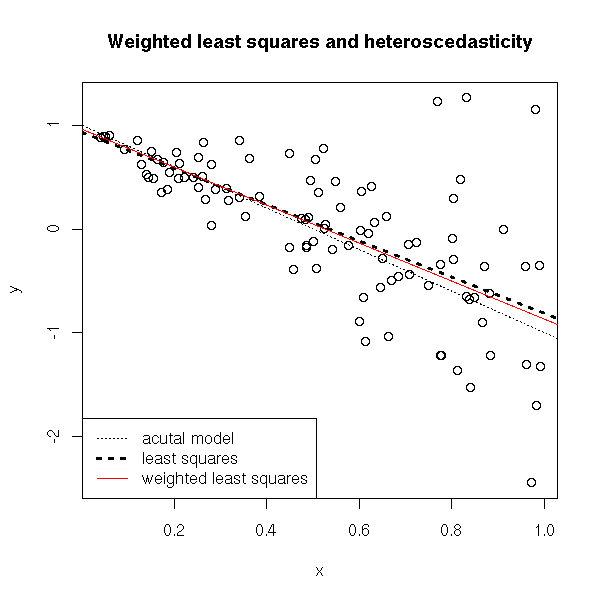

The idea of weighted least squares is to give a lesser weight (i.e., a lesser importance) to observations whose variance is high.

# We assume the the standard deviation of the residuals

# is of the form a*x

a <- lm( I(r$res^2) ~ I(x^2) - 1 )$coefficients

w <- (a*x)^-2

r3 <- lm( y ~ x, weights=w )

plot(y~x)

abline(1,-2, lty=3)

abline(lm(y~x), lty=3, lwd=3)

abline(lm(y~x, weights=w), col='red')

legend( par("usr")[1], par("usr")[3], yjust=0,

c("acutal model", "least squares",

"weighted least squares"),

lwd=c(1,3,1),

lty=c(3,3,1),

col=c(par("fg"), par("fg"), 'red') )

title("Weighted least squares and heteroscedasticity")

On the contrary, the prediction intervals are not very convincing...

TODO: check what follows

# Prediction intervals

N <- 10000

xx <- runif(N,min=0,max=2)

yy <- 1 - 2*xx + xx*rnorm(N)

plot(y~x, xlim=c(0,2), ylim=c(-3,2))

points(yy~xx, pch='.')

abline(1,-2, col='red')

xp <- seq(0,3,length=100)

yp1 <- predict(r, new=data.frame(x=xp), interval='prediction')

lines( xp, yp1[,2], col='red', lwd=3 )

lines( xp, yp1[,3], col='red', lwd=3 )

yp3 <- predict(r3, new=data.frame(x=xp), interval='prediction')

lines( xp, yp3[,2], col='blue', lwd=3 )

lines( xp, yp3[,3], col='blue', lwd=3 )

legend( par("usr")[1], par("usr")[3], yjust=0,

c("least squares", "weighted least squares"),

lwd=3, lty=1,

col=c('red', 'blue') )

title(main="Prediction band")

You can also do that with the "gls" function

?gls ?varConstPower ?varPower r4 <- gls(y~x, weights=varPower(1, form= ~x)) ???

library(help=lmtest) library(help=strucchange) # Heteroscedasticity library(lmtest) ?dwtest ?bgtest ?bptest ?gqtest ?hmctest

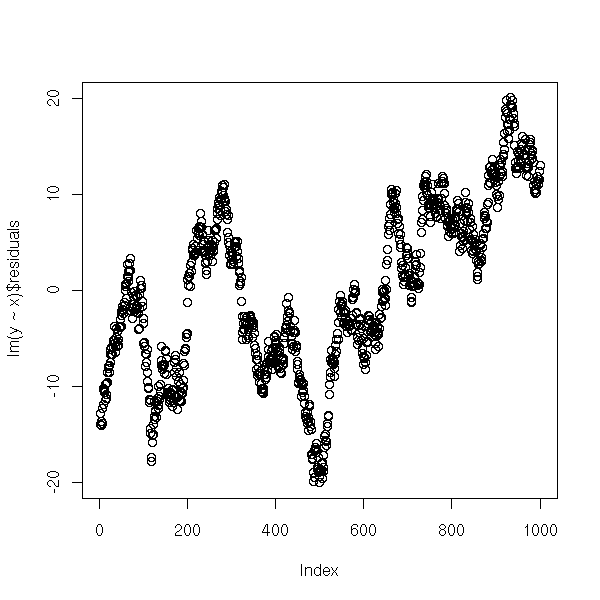

In the case of time series, of geographical data (or more generally, data for which you ave a notion of "proximity" between the observations), the errors of two consecutive observations may be correlated.

In the case of time series, you can see the problem in an autocorrelogram.

my.acf.plot <- function (x, n=10, ...) {

y <- rep(NA,n)

l <- length(x)

for (i in 1:n) {

y[i] <- cor( x[1:(l-i)], x[(i+1):l] )

}

plot(y, type='h', ylim=c(-1,1),...)

}

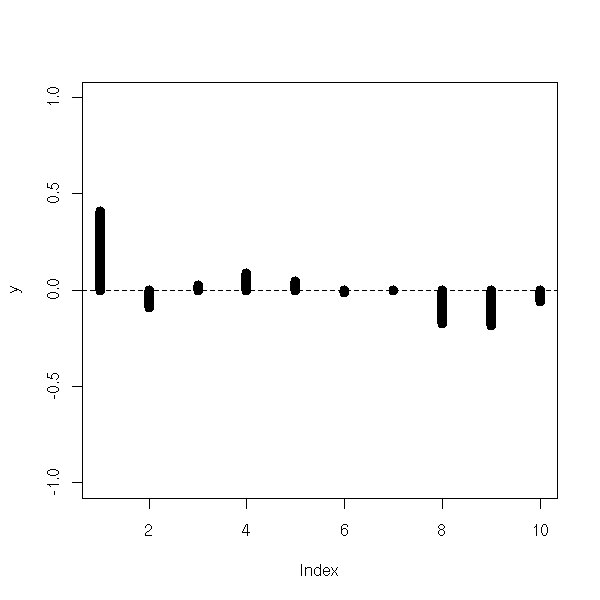

n <- 100

x <- runif(n)

b <- .1*rnorm(n+1)

y <- 1-2*x+b[1:n]

my.acf.plot(lm(y~x)$res, lwd=10)

abline(h=0, lty=2)

z <- 1-2*x+.5*(b[1:n]+b[1+1:n]) my.acf.plot(lm(z~x)$res, lwd=10) abline(h=0, lty=2)

Here is a very autocorrelated example.

n <- 500

x <- runif(n)

b <- rep(NA,n)

b[1] <- 0

for (i in 2:n) {

b[i] <- b[i-1] + .1*rnorm(1)

}

y <- 1-2*x+b[1:n]

my.acf.plot(lm(y~x)$res, n=100)

abline(h=0, lty=2)

title(main='Very autocorrelated example')

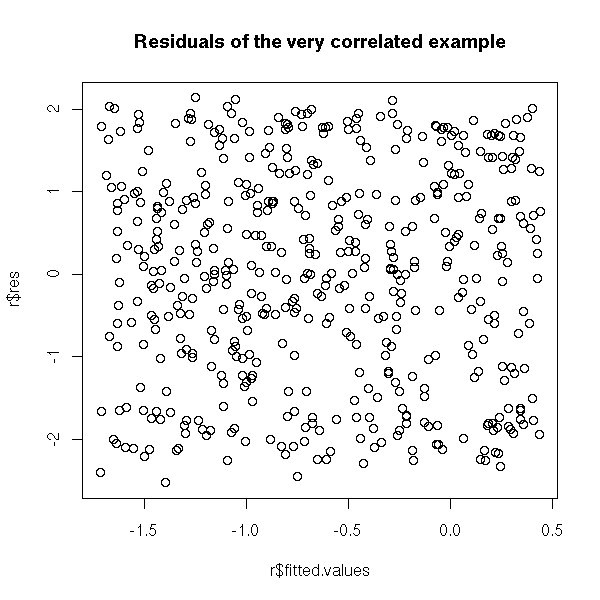

We do not see anything on the plot of the residuals as a function of the predicted values.

r <- lm(y~x) plot(r$res ~ r$fitted.values) title(main="Residuals of the very correlated example")

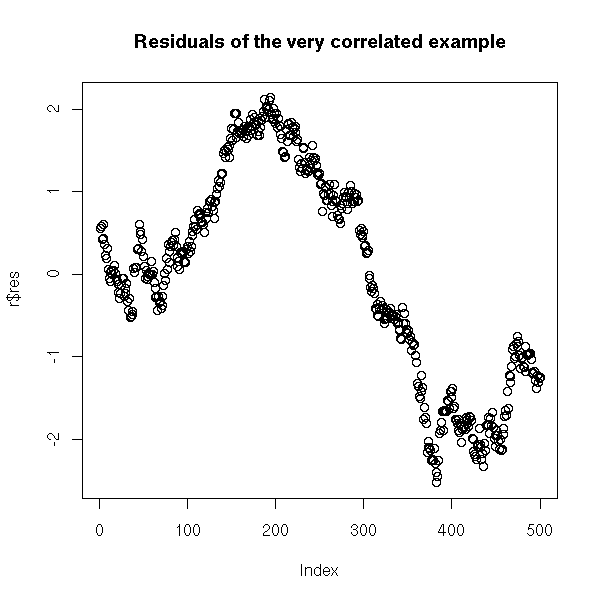

On the contrary, if you plot the residuals as a function of time, it is clearer.

r <- lm(y~x) plot(r$res) title(main="Residuals of the very correlated example")

Another means of spotting the problem is to check if the correlation between x[i] and x[i-1] is significantly non zero.

n <- 100

x <- runif(n)

b <- rep(NA,n)

b[1] <- 0

for (i in 2:n) {

b[i] <- b[i-1] + .1*rnorm(1)

}

y <- 1-2*x+b[1:n]

r <- lm(y~x)$res

cor.test(r[1:(n-1)], r[2:n]) # p-value under 1e-15

n <- 100

x <- runif(n)

b <- .1*rnorm(n+1)

y <- 1-2*x+b[1:n]

r <- lm(y~x)$res

cor.test(r[1:(n-1)], r[2:n]) # p-value = 0.3

y <- 1-2*x+.5*(b[1:n]+b[1+1:n])

cor.test(r[1:(n-1)], r[2:n]) # p-value = 0.3 (again)See also the Durbin--Watson test:

library(car) ?durbin.watson library(lmtest) ?dwtest

and the chapter on time series.

Yet another means of spotting the problem is to plot the consecutive residuals in 2 or 3 dimensions.

n <- 500

x <- runif(n)

b <- rep(NA,n)

b[1] <- 0

for (i in 2:n) {

b[i] <- b[i-1] + .1*rnorm(1)

}

y <- 1-2*x+b[1:n]

r <- lm(y~x)$res

plot( r[1:(n-1)], r[2:n],

xlab='i-th residual',

ylab='(i+1)-th residual' )

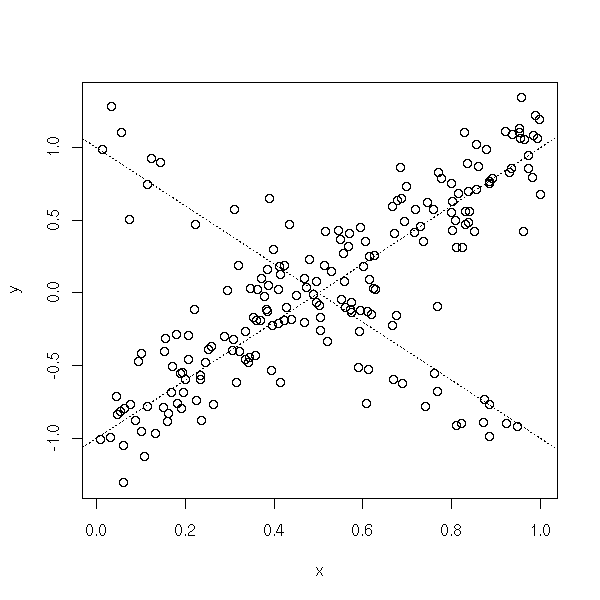

In the following example, we do not see anything with two consecutive terms (well, it looks like a Rorschach test, it is suspicious): we need three.

n <- 500

x <- runif(n)

b <- rep(NA,n)

b[1] <- 0

b[2] <- 0

for (i in 3:n) {

b[i] <- b[i-2] + .1*rnorm(1)

}

y <- 1-2*x+b[1:n]

r <- lm(y~x)$res

plot(data.frame(x=r[3:n-2], y=r[3:n-1], z=r[3:n]))

plot(r)

It is exaclty like that we can see the problems of some old random number generators. In three dimensions, front view, there is nothing visible,

data(randu) plot(randu) # Nothing visible

but if we rotate the figure...

library(xgobi) xgobi(randu)

You can also turn the picture directly in R, by taking a "random" rotation matrix (exercice: write a function to produce such a matrix -- hint: there is one somewhere inthis document).

m <- matrix( c(0.0491788982891203, -0.998585856299176, 0.0201921658647648,

0.983046639705112, 0.0448184901961194, -0.177793720645666,

-0.176637312387723, -0.028593540105802, -0.983860594462783),

nr=3, nc=3)

plot( t( m %*% t(randu) )[,1:2] )

Here is a real example.

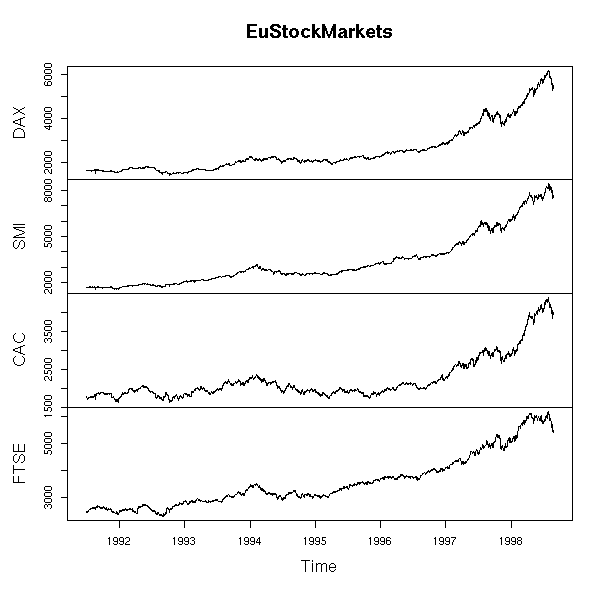

data(EuStockMarkets) plot(EuStockMarkets)

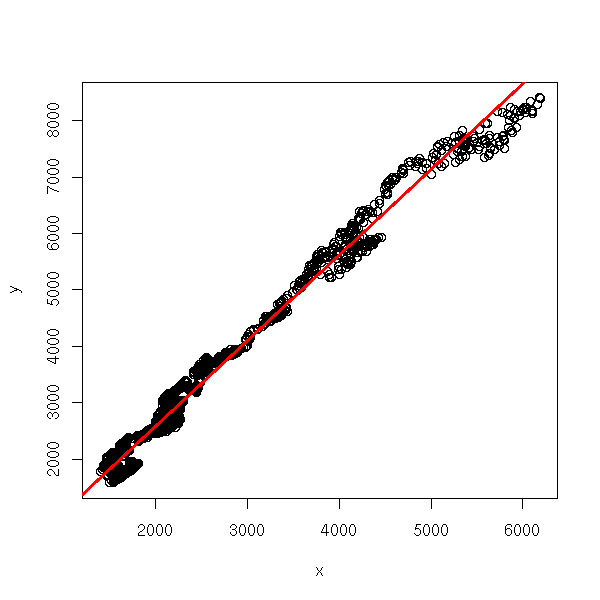

x <- EuStockMarkets[,1] y <- EuStockMarkets[,2] r <- lm(y~x) plot(y~x) abline(r, col='red', lwd=3)

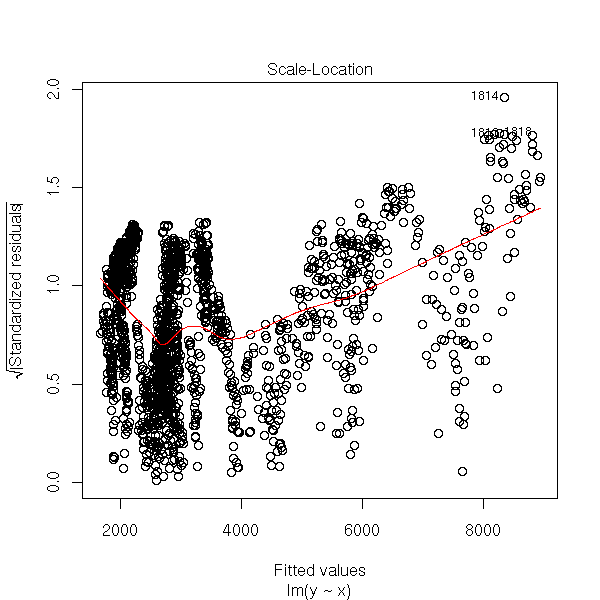

plot(r, which=1)

plot(r, which=3)

plot(r, which=4)

r <- r$res hist(r, probability=T, col='light blue') lines(density(r), col='red', lwd=3)

plot(r)

acf(r)

pacf(r)

r <- as.vector(r) x <- r[1:(length(r)-1)] y <- r[2:length(r)] plot(x,y, xlab='x[i]', ylab='x[i+1]')

In such a situation, you can use generalized least squares. The AR1 model assumes that two successive errors are correlated:

e_{i+1} = r * e_i + f_iWhere r is the "AR1 coefficient" and the f_i are independant.

n <- 100

x <- rnorm(n)

e <- vector()

e <- append(e, rnorm(1))

for (i in 2:n) {

e <- append(e, .6 * e[i-1] + rnorm(1) )

}

y <- 1 - 2*x + e

i <- 1:n

plot(y~x)

r <- lm(y~x)$residuals plot(r)

The "gls" function (generalized least squares) is in the "nlme" package.

library(nlme) g <- gls(y~x, correlation = corAR1(form= ~i))

Here is the result.

> summary(g)

Generalized least squares fit by REML

Model: y ~ x

Data: NULL

AIC BIC logLik

298.4369 308.7767 -145.2184

Correlation Structure: AR(1)

Formula: ~i

Parameter estimate(s):

Phi

0.3459834

Coefficients:

Value Std.Error t-value p-value

(Intercept) 1.234593 0.15510022 7.959971 <.0001

x -1.892171 0.09440561 -20.042992 <.0001

Correlation:

(Intr)

x 0.04

Standardized residuals:

Min Q1 Med Q3 Max

-2.14818684 -0.75053384 0.02200128 0.57222518 2.45362824

Residual standard error: 1.085987

Degrees of freedom: 100 total; 98 residualWe can look at the confidence interval on the autocorrelation coefficient.

> intervals(g)

Approximate 95% confidence intervals

Coefficients:

lower est. upper

(Intercept) 0.926802 1.234593 1.542385

x -2.079516 -1.892171 -1.704826

Correlation structure:

lower est. upper

Phi 0.1477999 0.3459834 0.5174543

Residual standard error:

lower est. upper

0.926446 1.085987 1.273003Let us compare with a naive regression.

library(nlme) plot(y~x) abline(lm(y~x)) abline(gls(y~x, correlation = corAR1(form= ~i)), col='red')

In this example, there is no remarkable effect. In the following, the situation is more drastic.

n <- 1000

x <- rnorm(n)

e <- vector()

e <- append(e, rnorm(1))

for (i in 2:n) {

e <- append(e, 1 * e[i-1] + rnorm(1) )

}

y <- 1 - 2*x + e

i <- 1:n

plot(lm(y~x)$residuals)

plot(y~x) abline(lm(y~x)) abline(gls(y~x, correlation = corAR1(form= ~i)), col='red') abline(1,-2, lty=2)

We shall come back on this when we tackle time series.

For spatial data, it is more complicated.

TODO: a reference???

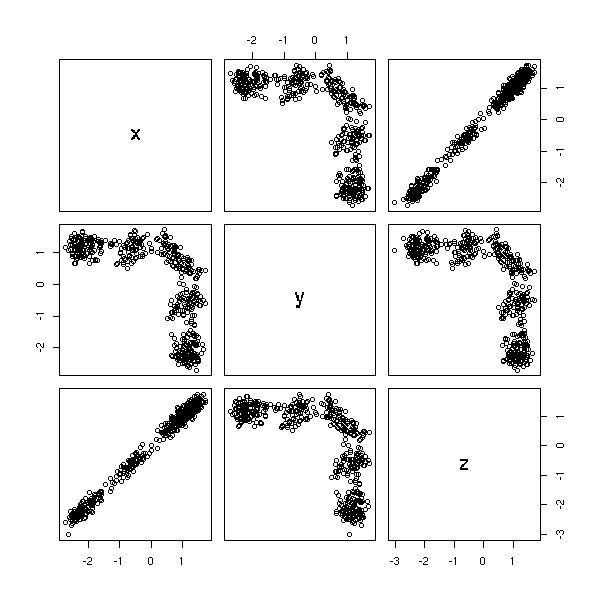

The correlation coefficient between two variables tells you if they are correlated. But you can also have relations between more than two variables, such as X3 = X1 + X2. To detect those, you can perform a regression of Xk agains the other Xi's and check the R^2: if it is high (>1e-1), Xk can be expressed from the other Xi.

n <- 100 x <- rnorm(n) x1 <- x+rnorm(n) x2 <- x+rnorm(n) x3 <- rnorm(n) y <- x+x3 summary(lm(x1~x2+x3))$r.squared # 1e-1 summary(lm(x2~x1+x3))$r.squared # 1e-1 summary(lm(x3~x1+x2))$r.squared # 1e-3

Other example, with three dependant variables.

n <- 100 x1 <- rnorm(n) x2 <- rnorm(n) x3 <- x1+x2+rnorm(n) x4 <- rnorm(n) y <- x1+x2+x3+x4+rnorm(n) summary(lm(x1~x2+x3+x4))$r.squared # 0.5 summary(lm(x2~x1+x3+x4))$r.squared # 0.4 summary(lm(x3~x1+x2+x4))$r.squared # 0.7 summary(lm(x4~x1+x2+x3))$r.squared # 3e-3

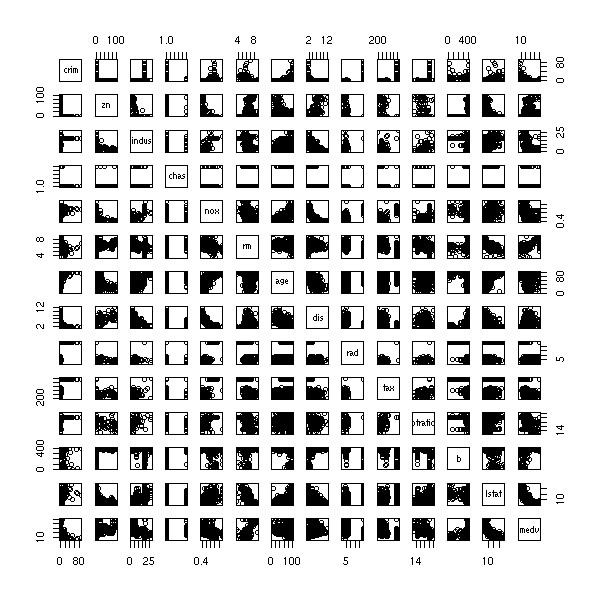

Real example (with the adjusted determination coefficient):

check.multicolinearity <- function (M) {

a <- NULL

n <- dim(M)[2]

for (i in 1:n) {

m <- as.matrix(M[, 1:n!=i])

y <- M[,i]

a <- append(a, summary(lm(y~m))$adj.r.squared)

}

names(a) <- names(M)

print(round(a,digits=2))

invisible(a)

}

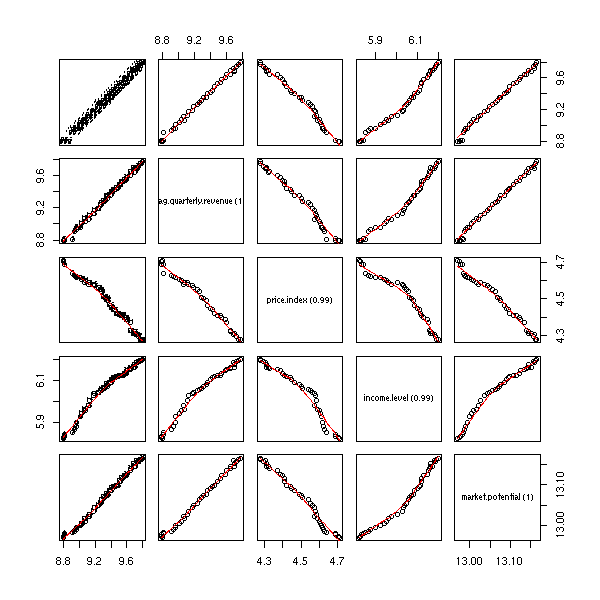

data(freeny)

names(freeny) <- paste(

names(freeny),

" (",

round(check.multicolinearity(freeny), digits=2),

")",

sep='')

pairs(freeny,

upper.panel=panel.smooth,

lower.panel=panel.smooth)

In such a situation, the fact that a certain coefficient be statistically different from zero depends on the presence of other variables. With all the variables, the first variables does not play a significant role, but the second does.

> summary(lm(freeny.y ~ freeny.x))

...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -10.4726 6.0217 -1.739 0.0911 .

freeny.xlag quarterly revenue 0.1239 0.1424 0.870 0.3904

freeny.xprice index -0.7542 0.1607 -4.693 4.28e-05 ***

freeny.xincome level 0.7675 0.1339 5.730 1.93e-06 ***

freeny.xmarket potential 1.3306 0.5093 2.613 0.0133 *

...

Multiple R-Squared: 0.9981, Adjusted R-squared: 0.9978On the contrary, if you only retain the first two variables, it is the opposite.

> summary(lm(freeny.y ~ freeny.x[,1:2]))

...

Estimate Std. Error t value Pr(>|t|)

(Intercept) 2.18577 1.47236 1.485 0.146

freeny.x[, 1:2]lag quarterly revenue 0.89122 0.07412 12.024 3.63e-14 ***

freeny.x[, 1:2]price index -0.25592 0.17534 -1.460 0.153

...

Multiple R-Squared: 0.9958, Adjusted R-squared: 0.9956Furthermore, the estimation of the coefficients anf their standard deviation is worrying: in a multilinearity situation, you cannot be sure of the sign of the coefficients.

n <- 100 v <- .1 x <- rnorm(n) x1 <- x + v*rnorm(n) x2 <- x + v*rnorm(n) x3 <- x + v*rnorm(n) y <- x1+x2-x3 + rnorm(n)

Let us check that the variables are linearly dependant.

> summary(lm(x1~x2+x3))$r.squared [1] 0.986512 > summary(lm(x2~x1+x3))$r.squared [1] 0.98811 > summary(lm(x3~x1+x2))$r.squared [1] 0.9862133

Let us look at the most relevant ones.

> summary(lm(y~x1+x2+x3))

Call:

lm(formula = y ~ x1 + x2 + x3)

Residuals:

Min 1Q Median 3Q Max

-3.0902 -0.7658 0.0793 0.6995 2.6456

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.06361 0.11368 0.560 0.5771

x1 1.47317 0.94653 1.556 0.1229

x2 1.18874 0.98481 1.207 0.2304

x3 -1.70372 0.94366 -1.805 0.0741 .

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 1.135 on 96 degrees of freedom

Multiple R-Squared: 0.4757, Adjusted R-squared: 0.4593

F-statistic: 29.03 on 3 and 96 DF, p-value: 1.912e-13It is the third.

> lm(y~x3)$coef (Intercept) x3 0.06970513 0.98313878

Its coefficient was negative, but if we remove the other variables, it becomes positive.

Instead of looking at the determination coefficient (percentage of explained variance) R^2, you can look at the "Variance Inflation Factors" (VIF),

1

V_j = -----------

1 - R_j^2

> vif(lm(y~x1+x2+x3))

x1 x2 x3

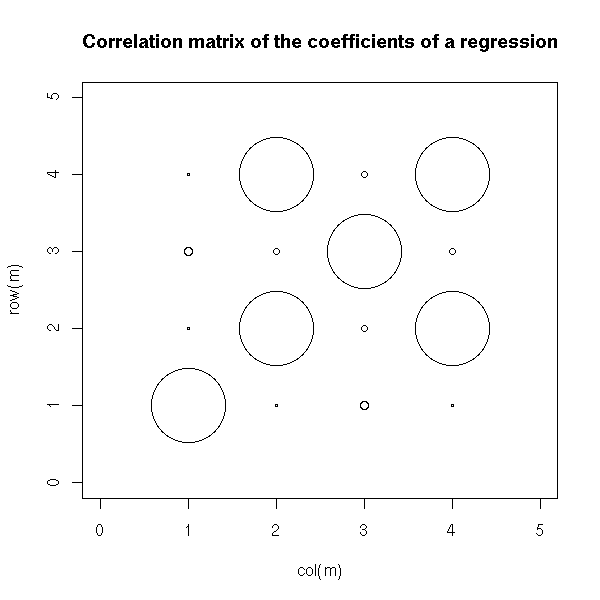

48.31913 41.13990 52.10746Instead of looking at the R^2, you can look at the correlation matrix between the estimated coefficients.

n <- 100 v <- .1 x <- rnorm(n) x1 <- x + v*rnorm(n) x2 <- rnorm(n) x3 <- x + v*rnorm(n) y <- x1+x2-x3 + rnorm(n) summary(lm(y~x1+x2+x3), correlation=T)$correlation

We get

(Intercept) x1 x2 x3

(Intercept) 1.0000000000 -0.02036269 0.0001812560 0.02264558

x1 -0.0203626936 1.00000000 -0.1582002900 -0.98992751

x2 0.0001812560 -0.15820029 1.0000000000 0.14729488

x3 0.0226455841 -0.98992751 0.1472948846 1.00000000We can see that X1 and X3 are dependant. We can also see it graphically.

n <- 100

v <- .1

x <- rnorm(n)

x1 <- x + v*rnorm(n)

x2 <- rnorm(n)

x3 <- x + v*rnorm(n)

y <- x1+x2-x3 + rnorm(n)

m <- summary(lm(y~x1+x2+x3), correlation=T)$correlation

plot(col(m), row(m), cex=10*abs(m),

xlim=c(0, dim(m)[2]+1),

ylim=c(0, dim(m)[1]+1),

main="Correlation matrix of the coefficients of a regression")

TODO: A graphical representation of this correlation matrix (transform it into a distance matrix, perform an MDS, plot the points, add their MST -- or simply plot the MST, without the MDS).

Here is yet another way of spotting the problem: compute the ratio of the largest eigen value and the smallest. Under 100, it is fine, over 1000, it is worrying. This is called the "contitionning index".

m <- model.matrix(y~x1+x2+x3) d <- eigen( t(m) %*% m, symmetric=T )$values d[1]/d[4] # 230

To solve the problem, you can remove the "superfluous" variables -- but you might run into interpretation problems. You can also ask for more data (multicolinearity are more frequent when you have many variables and few observations). You can also use regression techniques adapted to multicolinearity, such as "ridge regression" or SVM (see somewhere below).

We have already run into this problem with polynomial regression: to get rid of multicolinearity, we had orthonormalized the predictive variables.

> y <- cars$dist

> x <- cars$speed

> m <- cbind(x, x^2, x^3, x^4, x^5)

> cor(m)

x

x 1.0000000 0.9794765 0.9389237 0.8943823 0.8515996

0.9794765 1.0000000 0.9884061 0.9635754 0.9341101

0.9389237 0.9884061 1.0000000 0.9927622 0.9764132

0.8943823 0.9635754 0.9927622 1.0000000 0.9951765

0.8515996 0.9341101 0.9764132 0.9951765 1.0000000

> m <- poly(x,5)

> cor(m)

1 2 3 4 5

1 1.000000e+00 6.409668e-17 -1.242089e-17 -3.333244e-17 7.935005e-18

2 6.409668e-17 1.000000e+00 -4.468268e-17 -2.024748e-17 2.172470e-17

3 -1.242089e-17 -4.468268e-17 1.000000e+00 -6.583818e-17 -1.897354e-18

4 -3.333244e-17 -2.024748e-17 -6.583818e-17 1.000000e+00 -4.903304e-17

5 7.935005e-18 2.172470e-17 -1.897354e-18 -4.903304e-17 1.000000e+00TODO: Give the example of mixed models

Adding a subject-dependant intercept is equivalent to imposing a certain correlation structure.

Important variables may be missing, that can change the results of the regression and their interpretation.

In the following example, we have three variables.

n <- 100

x <- runif(n)

z <- ifelse(x>.5,1,0)

y <- 2*z -x + .1*rnorm(n)

plot( y~x, col=c('red','blue')[1+z] )

If we take into account the three variables, there is a negative correlation between x and y.

> summary(lm( y~x+z ))

Call:

lm(formula = y ~ x + z)

Residuals:

Min 1Q Median 3Q Max

-0.271243 -0.065745 0.002929 0.068085 0.215251

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.01876 0.02404 0.78 0.437

x -1.05823 0.07126 -14.85 <2e-16 ***

z 2.05321 0.03853 53.28 <2e-16 ***

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.1008 on 97 degrees of freedom

Multiple R-Squared: 0.9847, Adjusted R-squared: 0.9844

F-statistic: 3125 on 2 and 97 DF, p-value: < 2.2e-16On the contrary, if we do not have z, the correlation becomes positive.

> summary(lm( y~x ))

Call:

lm(formula = y ~ x)

Residuals:

Min 1Q Median 3Q Max

-1.05952 -0.38289 -0.01774 0.50598 1.05198

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.5689 0.1169 -4.865 4.37e-06 ***

x 2.1774 0.2041 10.669 < 2e-16 ***

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 0.5517 on 98 degrees of freedom

Multiple R-Squared: 0.5374, Adjusted R-squared: 0.5327

F-statistic: 113.8 on 1 and 98 DF, p-value: < 2.2e-16To avoid this problem, you should include all the variables that are likely to be important (you select them from a prior knowledge of the domain studied, i.e., with non-statistical methods).

To confirm a given effect, you can also try other models (if they all confirm the effect, and its direction, it is a good omen) or gather more data, either from the same experiment, or from a comparable but slightly different one.

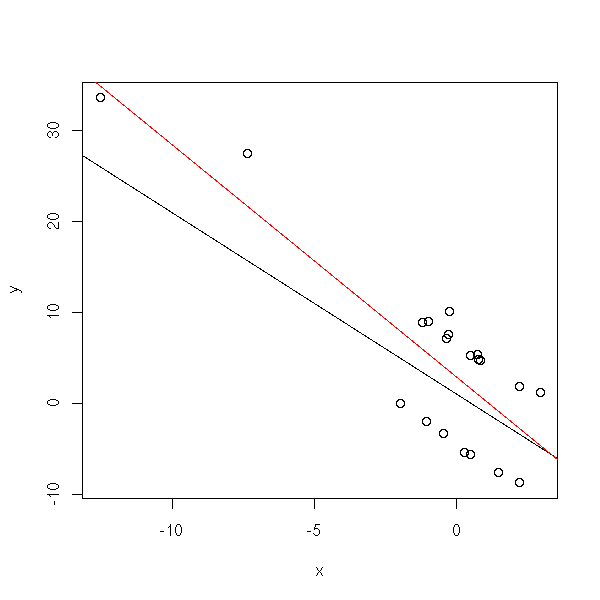

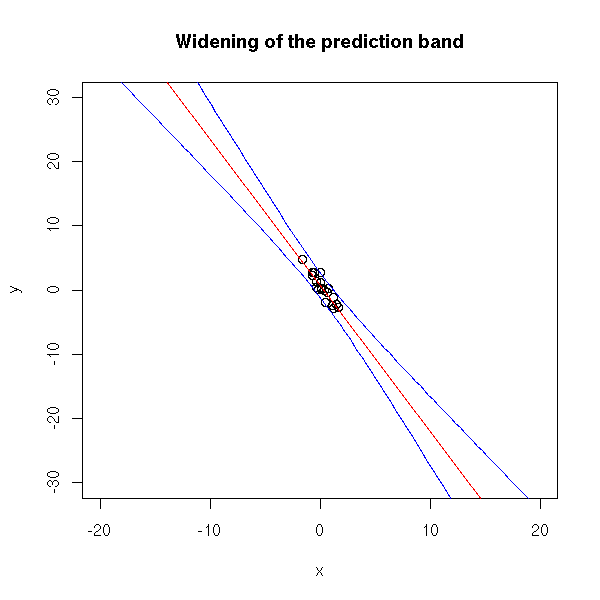

You often want to extrapolate from your data, i.e., infer what happens at larger scale (say, you have data with X in [0,1] and you would like conclusions for X in [0,10]). Several problems occur. The first is that the prediction intervals increase when you get away from the sample values.

n <- 20 x <- rnorm(n) y <- 1 - 2*x - .1*x^2 + rnorm(n) #summary(lm(y~poly(x,10))) plot(y~x, xlim=c(-20,20), ylim=c(-30,30)) r <- lm(y~x) abline(r, col='red') xx <- seq(-20,20,length=100) p <- predict(r, data.frame(x=xx), interval='prediction') lines(xx,p[,2],col='blue') lines(xx,p[,3],col='blue') title(main="Widening of the prediction band")

Furthermore, if the relation looks linear on a small scale, it might be completely different on a larger scale.

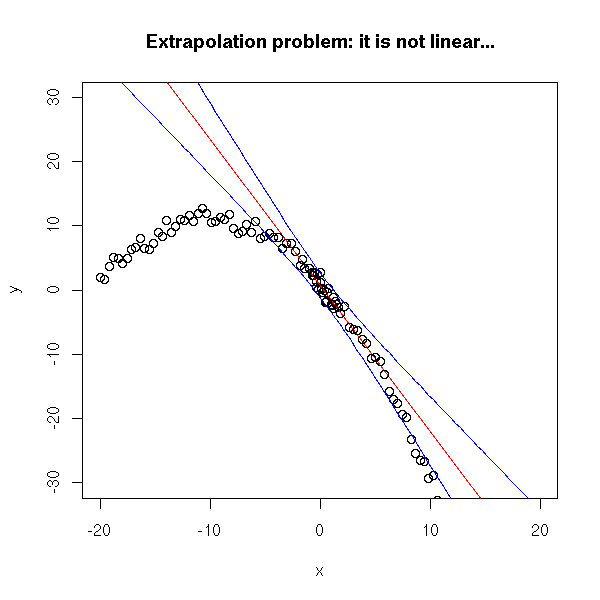

plot(y~x, xlim=c(-20,20), ylim=c(-30,30)) r <- lm(y~x) abline(r, col='red') xx <- seq(-20,20,length=100) yy <- 1 - 2*xx - .1*xx^2 + rnorm(n) p <- predict(r, data.frame(x=xx), interval='prediction') points(yy~xx) lines(xx,p[,2],col='blue') lines(xx,p[,3],col='blue') title(main="Extrapolation problem: it is not linear...")

data(cars) y <- cars$dist x <- cars$speed o <- x<quantile(x,.25) x1 <- x[o] y1 <- y[o] r <- lm(y1~x1) xx <- seq(min(x),max(x),length=100) p <- predict(r, data.frame(x1=xx), interval='prediction') plot(y~x) abline(r, col='red') lines(xx,p[,2],col='blue') lines(xx,p[,3],col='blue')

If you give the same data to different persons (for instance, students, in an exam), each will have a different model with different forecasts. The forecasts and the corresponding confidence intervals are usually incompatible: the prediction intervals are always too small...

There can be measurement errors on the predictive variable: this yields to biased estimations of the parameters (towards 0).

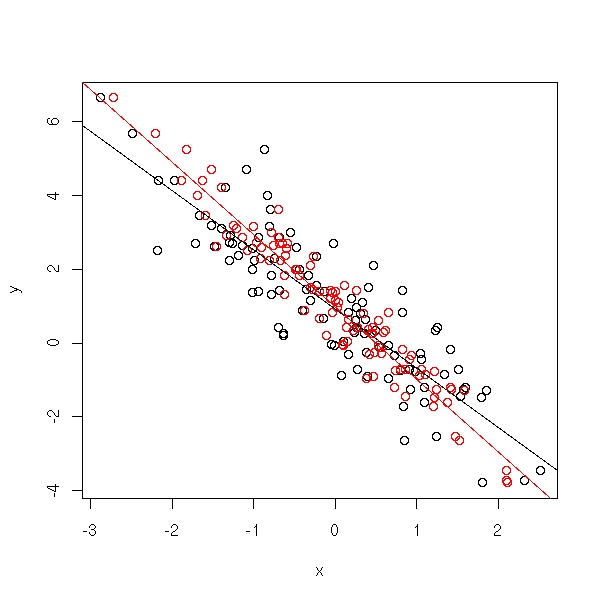

n <- 100 e <- .5 x0 <- rnorm(n) x <- x0 + e*rnorm(n) y <- 1 - 2*x0 + e*rnorm(n) plot(y~x) points(y~x0, col='red') abline(lm(y~x)) abline(lm(y~x0),col='red')

On the other hand, there is no problem about the predictions, because the measurement errors will always be present and are accounted for.

TODO: proofread this. In particular, mention the dangers of variable selection. There are actually two different subjects here: - the curse of dimension - combining models

The curse of dimension

TODO: put this part after the other regressions (logistic, Poisson, ordinal, multilogistic).

TODO: write this part.

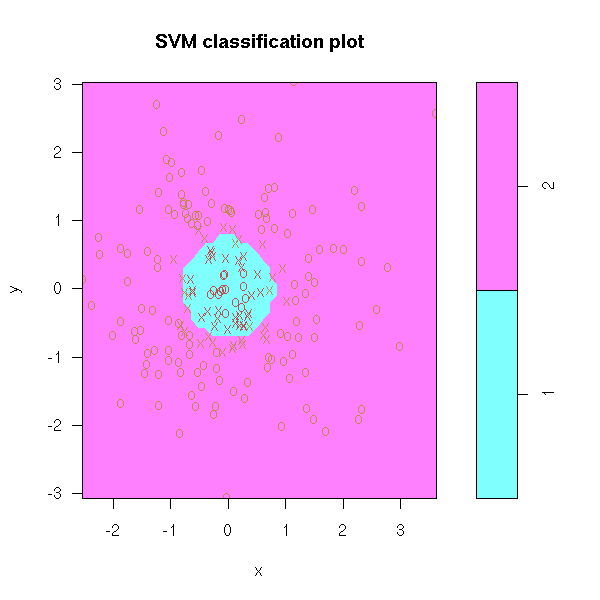

TODO: I mainly mention non-linear models in high dimensions. But what can one do when there are more variables than observations? See: svm (support vector machines).

We have already mentionned the "curse of dimension": what can we do when we have more variables than observations? What can we do when we want a non-linear regression with a very large number of variables: In both cases, if we try to play the game as in two dimensions, we end up with too many parameters to estimate with respect to the number of available observations. Either we cannot gat any estimation, or the estimations are almost random (somewhere else in this document, we call this "overfit").

We can turn around the problem by choosing simpler models, models with fewer variables -- simpler, but not too simple: the model has to be sufficiently complex to describe the data.

The following regression methods lie between linear regression (relevant when there are too few observations to allow anything else, or when the data is too noisy) and multiimensional non-linear regression (unuseable, because there are too many parameters to estimate). The allow us to thwart the curse of dimension. More precisely, we shall mention variable selection, principal component regression, ridge regression, lasso, partial least squares, Generalized Additive Models (GAMs) and tree-based algorith,s (CART, MARS).

TODO: give the structure of this chapter

Putative structure of this chapter:

Variables selection GAM, ACE?, AVAS? Trees: CART, MARS Bootstrap: bagging, boosting (But there is already a chapter about the bootstrap -- the previous chapter...)

Remark: Not all the methods we shall mention seem to be implemented in R.

When facing real data with a very large number of variables, we shall first reduce the dimension, for instance, by selecting the "most relevant" variables and discarding the others.

But how do we do that?

TODO (This is a good question...)

Here are a few ideas (beware: not all thes ideas are good).

Compute a PCA and "round" the components to the "nearest" variables. Reverse the problem and look at the variables: are some of them "close", in some sense (e.g., define a notion of distance between the variables and perform a distance analysis). Then, retain a single variables from each cluster.

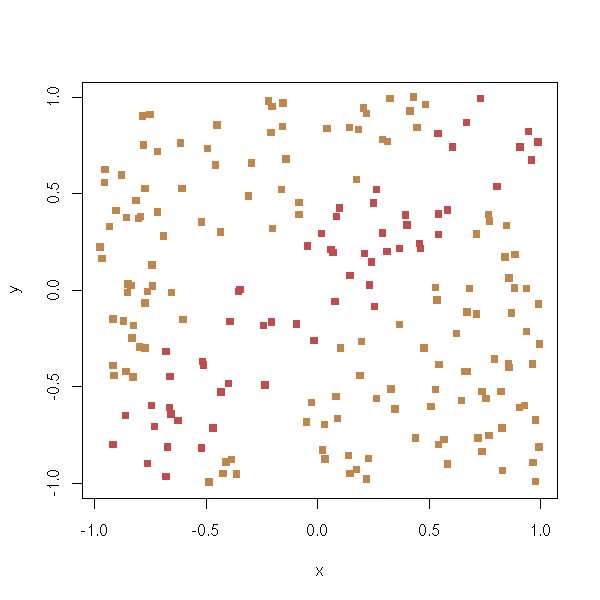

TO SORT Variables selection to predict a qualitative variables 1. Perform ANOVAs and only retain important variables (say, p-value>0.05) n <- 100 k <- 5 x <- matrix(rnorm(n*k),nc=k) y <- x[,1] + x[,2] - sqrt(abs(x[,3]*x[,4])) y <- y-median(y) y <- factor(y>0) pairs(x, col=as.numeric(y)+1)

for (i in 1:k) {

f <- summary(lm(x[,i]~y))$fstatistic

f <- pf(f[1],f[2],f[3], lower.tail=F)

print(paste(i, "; p =", round(f, digits=3)))

}

2. With the retained variables, compute the correlations and their

p-values (the p-value of a correlation if the p-value of the test

of H0: "correlation=0".

TODO: gove an example with colinear variables.

cor(x)

round(cor(x),digits=3)

m <- cor(x)

for (i in 1:k) {

for (j in 1:k) {

m[i,j] <- cor.test(x[,i],x[,j])$p.value

}

}

m

round(m,digits=3)

m<.05

Exercise: write a "print" method for correlation matrices

that adds stars besides the correlations significantly different

from zero.

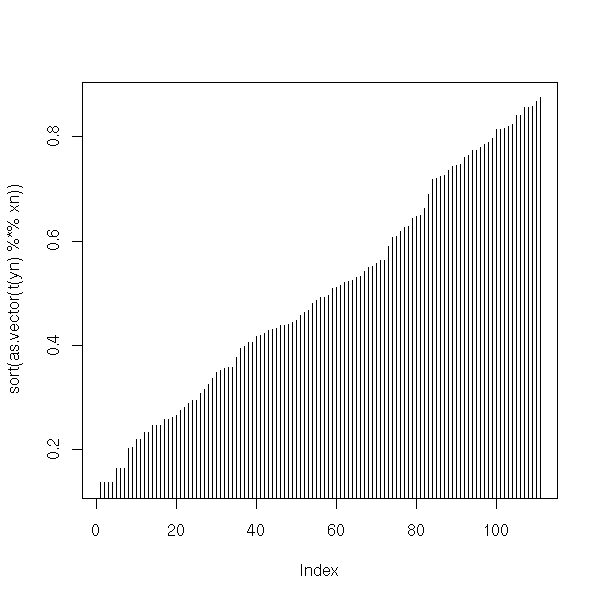

When there are too many perdictive variables in a regression (with respect to the number of observations), the first thing that comes to the mind, is to remode the "spurious" or "redundant" variables. Here are several ways of doing so.

We can start with a model containing all the variables, discard the variable that brings the least to the regression (the one whose p-value is the largest) and go on, until we have removed all the variables whose p-value is over a pre-specified threshold. We remove the variables one at a time, because each time we remove one, the p-value of the others change. (We have already mentionned this phenomenon when we presented polynomial regression: the predictive variables need not be orthogonal.)

We can also do the opposite: start with an empty model and add the variables one after the other, starting with the one with the smallest p-value.

Finally, we can combine both methods: First add the variables, then try to remove them, try do add some more, etc.. (this may happen: we might decide to add variable A, then variable B, then variable C, then remove variable B, then add variable D, etc. -- as the predictive variables are not orthogonal, the fact that a variable is present or not in the model depends on the other variables). We stop when the criteria tell us to stop, or when we get tired.

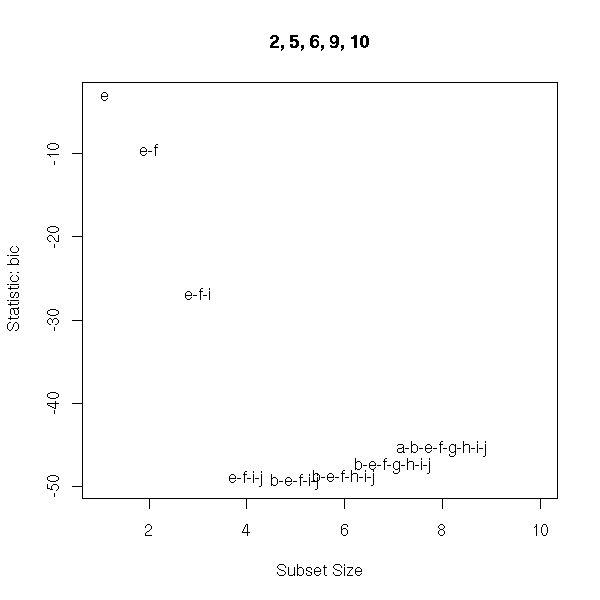

In the preceding discussion, we have used the p-value to decide if we were to keep or discard a variable: use can choose another criterion, say, the R^2 or a penalized log-likelihood, such as the AIC (Akaike Information Criterion),

AIC = -2log(vraissemblance) + 2 p,

or the BIC (Bayesian Information Criterion),

BIC = -2log(vraissemblance) + p ln n.

Let us consider an example.

library(nlme) # For the "BIC" function (there may be another one elsewhere) n <- 20 m <- 15 d <- as.data.frame(matrix(rnorm(n*m),nr=n,nc=m)) i <- sample(1:m, 3) d <- data.frame(y=apply(d[,i],1,sum)+rnorm(n), d) r <- lm(y~., data=d) AIC(r) BIC(r) summary(r)

It yields:

Call:

lm(formula = y ~ ., data = d)

Residuals:

1 2 3 4 5 6 7 8

-0.715513 0.063803 0.233524 1.063999 -0.001007 -0.421912 0.712749 -1.188755

9 10 11 12 13 14 15 16

-1.686568 -0.907378 0.293071 -0.506539 0.644674 2.046780 0.236374 -0.110205

17 18 19 20

0.256414 0.397595 0.052581 -0.463687

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.03322 0.86408 -0.038 0.971

V1 0.76079 1.23426 0.616 0.571

V2 0.60744 0.52034 1.167 0.308

V3 -0.18183 1.09441 -0.166 0.876

V4 0.49537 0.68360 0.725 0.509

V5 0.54538 1.72066 0.317 0.767

V6 -0.16841 0.89624 -0.188 0.860

V7 0.51331 1.25093 0.410 0.703

V8 0.25457 2.05536 0.124 0.907

V9 0.34990 0.82277 0.425 0.693

V10 0.72410 1.26269 0.573 0.597

V11 0.69057 1.84400 0.374 0.727

V12 0.64329 1.15298 0.558 0.607

V13 0.07364 0.79430 0.093 0.931

V14 -0.06518 0.53887 -0.121 0.910

V15 0.92515 1.18697 0.779 0.479

Residual standard error: 1.798 on 4 degrees of freedom

Multiple R-Squared: 0.7795, Adjusted R-squared: -0.04715

F-statistic: 0.943 on 15 and 4 DF, p-value: 0.5902The "gls" function gives you directly the AIC and the BIC.

> r <- gls(y~., data=d)

> summary(r)

Generalized least squares fit by REML

Model: y ~ .

Data: d

AIC BIC logLik

86.43615 76.00316 -26.21808

...These quantities are important when you compare models with a different number of parameters: the log-likelihood will always increase if you add more variables, falling in the "overfit" trap. On the contrary, the AIC and the BIC have a corrective term to avoid this trap.

library(nlme)

n <- 20

m <- 15

d <- as.data.frame(matrix(rnorm(n*m),nr=n,nc=m))

# i <- sample(1:m, 3)

i <- 1:3

d <- data.frame(y=apply(d[,i],1,sum)+rnorm(n), d)

k <- m

res <- matrix(nr=k, nc=5)

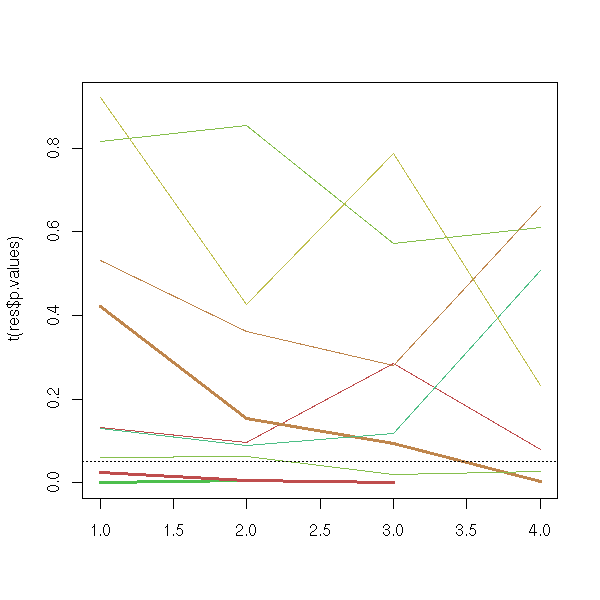

for (j in 1:k) {

r <- lm(d$y ~ as.matrix(d[,2:(j+1)]))

res[j,] <- c( logLik(r), AIC(r), BIC(r),

summary(r)$r.squared,

summary(r)$adj.r.squared )

}

colnames(res) <- c('logLik', 'AIC', 'BIC',

"R squared", "adjusted R squared")

res <- t( t(res) - apply(res,2,mean) )

res <- t( t(res) / apply(res,2,sd) )

matplot(0:(k-1), res,

type = 'l',

col = c(par('fg'),'blue','green', 'orange', 'red'),

lty = 1,

xlab = "Number of variables")

legend(par('usr')[2], par('usr')[3],

xjust = 1, yjust = 0,

c('log-vraissemblance', 'AIC', 'BIC',

"R^2", "adjusted R^2" ),

lwd = 1, lty = 1,

col = c(par('fg'), 'blue', 'green', "orange", "red") )

abline(v=3, lty=3)

(In some cases, in the previous simulation, the AIC and the BIC have a local minimum for three variables and a global minimim for a dozen variables.)

There are other criteria, such as the adjusted R-squared or Mallow's Cp.

> library(wle)

> r <- mle.cp(y~., data=d)

> summary(r)

Call:

mle.cp(formula = y ~ ., data = d)

Mallows Cp:

(Intercept) V1 V2 V3 V4 V5 V6 V7 V8 V9 V10 V11 V12 V13 V14 V15 cp

[1,] 0 0 0 0 0 0 0 1 0 0 1 0 0 0 1 0 -5.237

[2,] 0 1 0 0 0 0 0 1 0 0 1 0 0 0 1 0 -4.911

[3,] 0 0 1 0 0 0 0 1 0 0 1 0 0 0 1 0 -4.514

[4,] 0 0 0 0 0 0 0 1 0 0 1 0 1 0 1 0 -4.481

[5,] 0 0 0 0 0 0 1 1 0 0 1 0 0 0 1 0 -4.078

[6,] 0 1 1 0 0 0 0 1 0 0 1 0 0 0 1 0 -3.854

[7,] 0 0 0 1 0 0 0 1 0 0 1 0 0 0 1 0 -3.829

[8,] 0 1 0 0 0 0 1 1 0 0 1 0 0 0 1 0 -3.826

[9,] 1 0 0 0 0 0 0 1 0 0 1 0 0 0 1 0 -3.361

[10,] 0 0 0 0 0 0 1 1 0 0 1 0 1 0 1 0 -3.335

[11,] 0 0 0 0 1 0 0 1 0 0 1 0 1 0 1 0 -3.287

[12,] 0 0 0 0 0 0 0 1 1 0 1 0 0 0 1 0 -3.272