Introduction to statistical tests: TODO: REWRITE THIS SECTION

The Zoo of statistical tests: Parametric Tests

The Zoo of Statistical Tests: discrete variables and the Chi^2 test

The Zoo of Statistical Tests: non-parametric tests

Estimators

TO SORT

TODO: give the structure of this chapter

motivation of statistical tests

notion of estimator, bias, MSE

...

MLE (should be in a section of its own)

Bayesian methods (in a section of their own)

(TODO)

TODO: list the more important tests (Student and Chi^2)

Student T test: compare a mean with a given number

compare the mean in two samples

There are generalizations for more than two samples

(analysis of variance) and for non-gaussian samples

(Wilcoxon).

One can devise similar tests to compare the variance of

a sample with a given number or to compare the variance

of two samples.

Chi^2 test: to compare the distribution of a qualitative

variable with predetermined values, to compare the

distribution of a qualitative variable in two

samples. One can also use it to check if two qualitative

variables are independant. However, it is only an

approximation, valid for large samples (more than 100

observations, more that 10 observations per class).

TODO: check if we can do without the Chi^2 test:

- binary variable: bimom.test

- multinomial test: ???

- Independance Chi^2: fisher.test

- two variables: fisher.test

We want to answer a question of the kind "Does tobacco increase the risk of cancer?", "Does the proximity of a nuclear waste reprocessing plant increase the risk of leukemia?", "Is the mean of the population from which this sample was drawn zero, given that the sample mean is 0.02?"

Let us detail the problem "Have those two samples the same mean?" (it is a simplification of the problem "Do those two samples come from the same population?").

Let us consider a first population, on which is defined a statistical variable (with a gaussian distribution), from which we get a sample. We do the same for a second population, with the same population mean.

We can then consider the statistical variable

sample mean in the first sample - sample mean in the second sample

and find its distribution.

If we measure a certain value of this difference, we can compute the probability of obtaining a difference at least as large.

If

P( difference > observed difference ) < alpha,

(for a given value of alpha, say 0.05), we reject the hypothesis "the two means are equal", with a risk equal to alpha.

But beware, this result is not certain at all. There can be two kinds of error: either wrongly clain that they are different (this happens with a probability alpha) or wrongly claim that the two means are equal.

Beware again, those tests are only valid under certain conditions (gaussian variables, same variance, etc.).

If we really wish to be rigorous, we do not consider a single hypothesis, but two: for instamce "the means are equal" and "the means are different"; or "the means are equal" and "the first mean is larger than the second". We would use the second formulation if we can a priori reject the fact that the first mean is lower than the second -- but this has to come from information independant from the samples at hand.

The statistical tests will never tell "the hypothesis is true": they will merely reject or fail to reject the hypothesis stating "there is nothing significant". (This is very similar to the development of science as explained by K. Popper: we never prove that something is true, we merely continuously try to prove it wrong and fail to do so.)

Let us consider two hypotheses: the null hypothesis H0, "there is no noticeable effect" (for instance, "tobacco does not increas the risk of cancer", the proximity of a waste recycling plant does not increas the risk of leukemia) and the alternative hypothesis H1, "there is a noticeable effect" (e.g., "tobacco increases the risk of cancer"). The alternative hypothesis can be symetric ("tobacco increases of decreases the risk of cancer") or not ("tobacco increases the risk of cancer"). To choose an asymetric hypothesis means that we reject, a priori, half of the hypothesis: it can be a prejudice, so you should think carefully before choosing an asymetric alternative hypothesis.

H0 is sometimes called the "conservative hypothesis", because it is the hypothesis we keep if the results of the test are not conclusive.

To wrongly reject the null hypothesis (i.e., to wrongly conclude "there is an effet" or "there is a noticeable difference").

For instance, if the variable X follows a gaussian distribution, we expect to get values "in the middle" of the bell-shaped curve. If we get extreme values, we shall reject, sometimes wrongly, the null hypothesis (that the mean is actually zero). The type I error corresponds to the red part in the following plot.

colorie <- function (x, y1, y2, N=1000, ...) {

for (t in (0:N)/N) {

lines(x, t*y1+(1-t)*y2, ...)

}

}

# No, there is already a function to do this

colorie <- function (x, y1, y2, ...) {

polygon( c(x, x[length(x):1]), c(y1, y2[length(y2):1]), ... )

}

x <- seq(-6,6, length=100)

y <- dnorm(x)

plot(y~x, type='l')

i = x<qnorm(.025)

colorie(x[i],y[i],rep(0,sum(i)) ,col='red')

i = x>qnorm(.975)

colorie(x[i],y[i],rep(0,sum(i)) ,col='red')

lines(y~x)

title(main="Type I error")

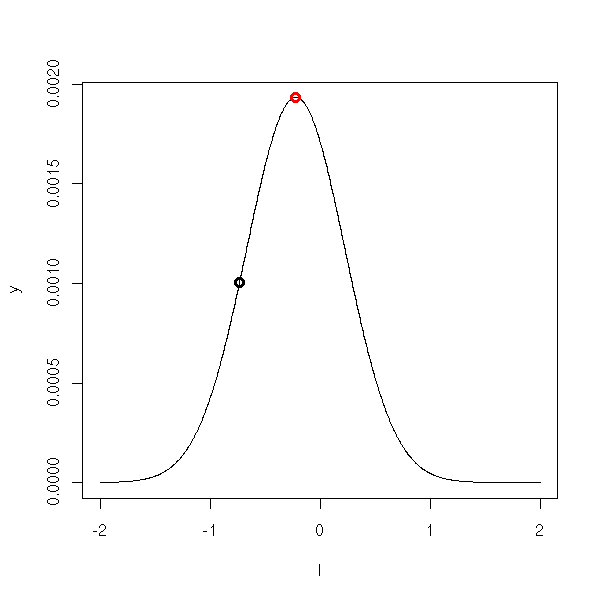

Probability (if the null hypothesis is true) to get a result at least as extreme. It is the probability of making a type I error.

Wrongly accepting the null hypothesis (i.e., wrongly concluding "there is no statistically significant effect" or "there is no difference").

In all rigour, it is not an error, because one never says "H0 is true" but "we do not reject H0 (yet)". It is not an error, but a missed opportunity.

The type II error is the area of the red part in the following plot. The middle bell-shaped curve is the distribution predicted by the null hypothesis, the other one is the actual distribution of the population.

x <- seq(-6,6, length=1000) y <- dnorm(x) plot(y~x, type='l') y2 <- dnorm(x-.5) lines(y2~x) i <- x>qnorm(.025) & x<qnorm(.975) colorie(x[i],y2[i],rep(0,sum(i)), col='red') segments( qnorm(.025),0,qnorm(.025),dnorm(qnorm(.025)), col='red' ) segments( qnorm(.975),0,qnorm(.975),dnorm(qnorm(.975)), col='red' ) lines(y~x) lines(y2~x) title(main="High risk of type II error")

If the two curves are sufficiently far appart (i.e., if the difference of means is more significant), the risk is much lower.

x <- seq(-6,6, length=1000) y <- dnorm(x) plot(y~x, type='l') y2 <- dnorm(x-3.5) lines(y2~x) i <- x>qnorm(.025) & x<qnorm(.975) colorie(x[i],y2[i],rep(0,sum(i)), col='red') segments( qnorm(.025),0,qnorm(.025),dnorm(qnorm(.025)), col='red' ) segments( qnorm(.975),0,qnorm(.975),dnorm(qnorm(.975)), col='red' ) lines(y~x) lines(y2~x) title(main="Lower risk of type II error")

You can plot the probability of type II error against the mean difference (contrary to the type I error, the risk of type II error is not a constant but depends on the actual value of the parameter: this is why this risk is often considered as difficult to compute and people tend to fail to mention it in elementary courses).

delta <- seq(-1.5, 1.5, length=500)

p <- NULL

for (d in delta) {

p <- append(p,

power.t.test(delta=abs(d), sd=1, sig.level=0.05, n=20,

type='one.sample')$power)

}

plot(1-p~delta, type='l',

xlab='mean difference', ylab="propability of a type II error",

main="type II error in a Student T test")

abline(h=0,lty=3)

abline(h=0.05,lty=3)

abline(v=0,lty=3)

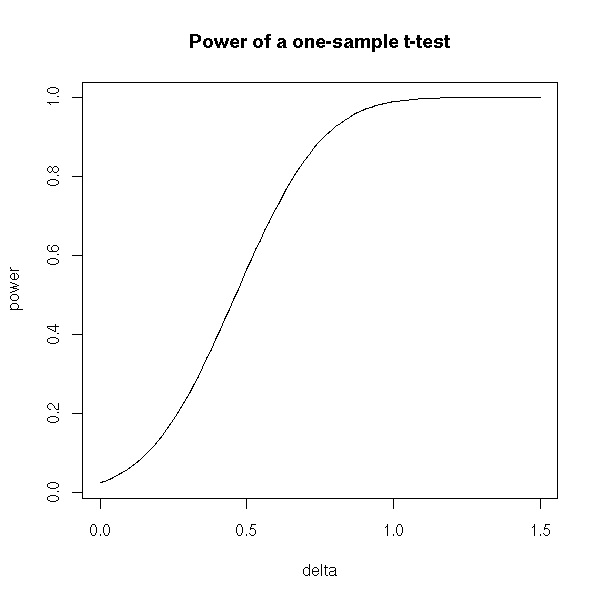

The power of a test is

1 - P( type II error ).

Usually, the power is not a number but a function. The null hypothesis is often of the form H0: "mu = mu0" and the alternative hypothesis of the form H1: "mu different from mu0". The power will depend on the actual value of mu: if mu is close to mu0, the type II error probability is hygh and the power is low; on the contrary, if mu and mu0 are very different. the probability of type II error is lower and the power higher.

delta <- seq(0, 1.5, length=100)

p <- NULL

for (d in delta) {

p <- append(p,

power.t.test(delta=d, sd=1, sig.level=0.05, n=20,

type='one.sample')$power)

}

plot(p~delta, type='l',

ylab='power', main='Power of a one-sample t-test')

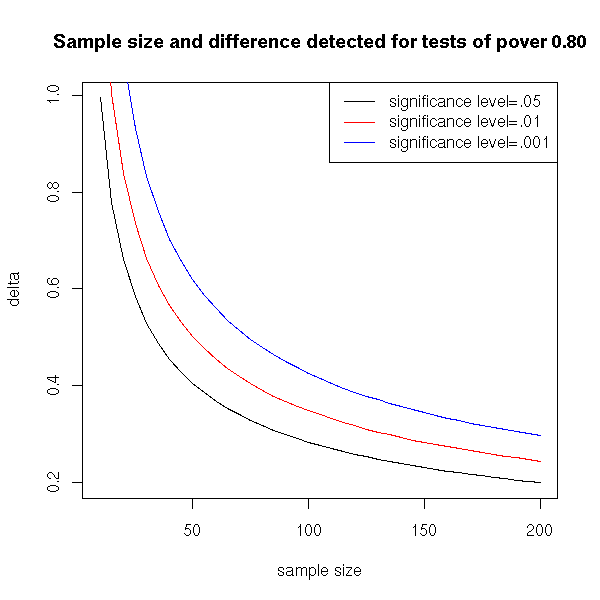

The power plays an important role when you design an experiment. Let us imagine that we want to know if the mean mu of a certain variable on a certain population equals mu0. We want to be able to detect a difference at least epsilon in at least 80% of the cases, with a type I error risk inferior to 5%: what should be the sample size?

In other words, we want that the power of the test H0: << mu = mu0 >> against H1: << abs(mu - mu0) > epsilon >> with a confidence level alpha=0.05 be at least 0.80 ("tradition" suggests a power equal to 0.80, and a confidence level 0.05).

The "power.t.test" performs those computations (for Student's T test).

> power.t.test(delta=.1, sd=1, sig.level=.05, power=.80, type='one.sample')

One-sample t test power calculation

n = 786.8109

delta = 0.1

sd = 1

sig.level = 0.05

power = 0.8

alternative = two.sidedYou can ask the question in any direction. For instance, the experiment has already been carried out, with a sample of size n: what is the minimal difference in mean we can expect to spot if we want the power of the test to be 0.08?

> power.t.test(n=100, sd=1, sig.level=.05, power=.80, type='one.sample')

One-sample t test power calculation

n = 100

delta = 0.2829125

sd = 1

sig.level = 0.05

power = 0.8

alternative = two.sidedHere is a plot of this minimum noticeable difference against the sample size.

N <- seq(10,200, by=5)

delta <- NULL

for (n in N) {

delta <- append(delta,

power.t.test(n=n, sd=1, sig.level=.05,

power=.80, type='one.sample')$delta

)

}

plot(delta~N, type='l', xlab='sample size')

delta <- NULL

for (n in N) {

delta <- append(delta,

power.t.test(n=n, sd=1, sig.level=.01,

power=.80, type='one.sample')$delta

)

}

lines(delta~N, col='red')

delta <- NULL

for (n in N) {

delta <- append(delta,

power.t.test(n=n, sd=1, sig.level=.001,

power=.80, type='one.sample')$delta

)

}

lines(delta~N, col='blue')

legend(par('usr')[2], par('usr')[4], xjust=1,

c('significance level=.05', 'significance level=.01', 'significance level=.001'),

col=c(par('fg'), 'red', 'blue'),

lwd=1, lty=1)

title(main='Sample size and difference detected for tests of pover 0.80')

You might want to read:

http://www.stat.uiowa.edu/~rlenth/Power/2badHabits.pdf http://www.stat.uiowa.edu/techrep/tr303.pdf

A hypothesis is said to be simple if it entails a complete knowledge of the distribution of the random variables. For instance, if we are looking for the mean of a gaussian variable of variance 1 (we already know the variance), the hypothesis H0: "the mean is zero" is simple. On the contrary, if we are looking for the mean of a gaussian variable (we know the variable is gaussian, but we do not know its variance -- we are not interested in it), this hypothesis is not simple.

Here is another example: we have a sample that comes either from a population 1 (described by a random variable of mean 3 and variance 2), or from a population 2 (described by a random variable of mean 1 and variance 1). In this case, both hypotheses H0: "the sample comes from population 1" and H1: "the sample comes from population 2" are simple.

A non-simple hypothesis.

Quite often, the alternative assumptions (H1) are composite: if H1 is true, we know that the distribution has a certain form, with a parameter that has not a certain value -- but we do not know its value.

Let us consider a random variable, whose distribution is not completely known: for instance, we know it is a gaussian distribution of variance 1, but the mean is unknown.

If we estimate this mean as a single number, we are sure to be wrong: the actual mean might be close to our proposal, but there is no reason it should be exactly this one, up to the umpteenth decimal. Instead, we can give a confidence interval -- note the use of an indefinite article: there are many such intervals (you can shift it to the left or to the right -- and doing so will change its width).

TODO: check that I give an example with several intervals.

Here are two interpretations of this notion of "confidence interval".

(1) It is an interval in which we have a 95% probability of finding the sample mean.

(2) More naively, it is an interval that has a 95% probability of containing the population mean (i.e., the actual mean).

Actually, these two interpretations are equivalent. As I was very dubious, let us check it on a simulation (here, I draw the population mean at random, and this prior distribution does not play any role).

TODO: say that it is bayesian...

# Sample size

n <- 100

# Number of points to draw the curve

N <- 1000

v <- vector()

for (i in 1:N) {

m <- runif(1)

x <- m+rnorm(n)

int <- t.test(x)$conf.int

v <- append(v, int[1]<m & m<int[2])

}

sum(v)/NWe get 0.95.

From this, we get two interpretations of the p-value: first, as the probability pf getting results at least as extre,e if H0 is true (this is the definition); second, it is the probability of being the in "the" confidence interval.

TODO: explain a bit more. I said that "the" confidence interval was not uniquely defined, and thus, that we should not use a definite article...

The p-value is NOT the probability that H0 be true. To convince yourself of this, consider gaussian random variable, of variance 1 and unknown mean. From a sample, we test H0: "the mean is 0" against H1: "the mean is not 0". We will get a certain p-value.

> n <- 10 # Sample size > m <- rnorm(1) # Unknown mean > x <- m + rnorm(n) # Sample > t.test(x)$p.value # p-value [1] 0.2574325

But what is the probability that the mean be exactly zero? In our simulation, this probability is zero: the mean is almost surely non-zero.

> m [1] 0.7263967

We would like to set the power of a test, in the same way we choose the level of confidence. But there is a problem: if H1 is not a simple hypothesis (i.e., if it is not a single hypothesis, as "mu=0" but a set of hypotheses, as "mu!=0", "mu>0" or "mu>1"), the power is not a number but a function, that depends on the simple hypotheses contained in H1.

A test is UMP (Uniformly Most Powerful) for a given confidence level alpha if, for any simple hypothesis in H1, it has the largest power among the tests with confidence level alpha.

Most of the time, statistical tests assume that the random variables studied are gaussian (and even, when there are several, that they have the same variance). Non-parametric tests do not make such assumptions -- xwe say that they are more "robust" -- but, as a counter part, they are less powerful.

Here are a few examples of parametric and non-parametric tests.

TODO: is it the right place for this list?

Aim Parametric tests Non-parametric tests ---------------------------------------------------------------------------- compare two means Student's T test Wilcoxon's U test compare more than Anova (analysis of Kruskal--Wallis test two means variance) Compare two Fisher's F test Ansari-Bradley or variances Mood test Comparing more than Bartlett test Fligner test

A (parametric) test is robust if its results are still valid when its assumptions are no longer satisfied (especially if the random variables studied are no longer gaussian).

A statistic (mean, median, variance, trimmed mean, etc.) is resistant if it does not depend much on extreme values. For instance, the mean is not resistant, while the median is: a single extreme value can drastically change the mean, while it will not change the median.

> x <- rnorm(10) > mean(x) [1] -0.08964153 > mean(c(x,10^10)) # We add an extreme value [1] 909090909 > median(x) [1] -0.2234618 > median(c(x,10^10)) # We add an extreme value [1] -0.1949206

One can sometimes spot outliers with the Pearsons residuals:

sample density / density according to the model - 1

TODO: Example with circular data.

TODO

- Should you want them, there are statistical tests for the presence of outliers - Outlier detection benchmark: use classical examples, such as library(robustbase) ?hkb

One should not try to automatically detect and remove the outliers: the first problem is that this is a multi-stage procedure (first the outlier detection and removal, then the rest of the analysis), whose performance should be assessed (the rest of the analysis will not perform as well as you think it should, because the data has been tampered with); the second problem is that the outlier removal is a black-and-white decision, either we keep the observation, or we remove it completely -- if we are unsure whether the observation is an actual outlier, we are sure to make mistakes.

Methods that remain efficient even in presence of outliers ("robust methods") do exist: use them!

The breaking point is the proportion of observations you can tamper with without being able to make the estimator arbitrarily "large".

This is a measure of robustness of an estimator.

For instance, the breaking point of the mean is zero: by changing a single observation, you can make the mean arbitrary large.

On the other hand, the breaking point of the median is 50%: if you change a single observation, the median will change, but its value vull be bounded by the rest of the cloud of points -- to make the median arbitrarily large, you would have to move (say) the top half of the data points.

The trimmed mean has a breaking point somewhere inbetween.

Location (mean): trimmed mean, median Dispersion (standard deviation): IQR, MAD, Sn, L-moments, MCD rlm, cov.rob library(rrcov) library(robustbase) (includes roblm)

1. We can look for a parameter of the distribution describing the population. If possible, we shall take an unbiased one, with the lowest variance possible. (However, we shall see later that certain biased estimators may be more interesting, because their mean square error is lower -- this is the case for ridge regression.)

2. Instead of a single value of this parameter, we can be looking for an interval containing it: we are then building a confidence interval.

3. We can also want to know if this parameter has (or not) a predefined value: we will then perform a test.

A p-value close to zero can mean two very different things: either the null hypothesis is very wrong, or ot is just slightly wrong but our sample is large enough to spot it. In the latter case, the difference between reality and the hypothesis is statistically significant, but for all practical purposes, it is negligible.

TODO: this problem with p-values is a starting point for the development of bayesian methods. http://www.stat.washington.edu/www/research/online/1994/bic.ps

Among all the possible tests, we want one for which the risks of type I and type II errors are as low as possible. Among the tests that can not be improved (i.e., we cannot modify them to get one with the same type I error risk and a lower type II error risk, and conversely), there is no means of choosing THE best. Indeed, we can plot those tests in the (type I error risk)x(type II error risk) plane: we get a curve. However, decision theory allows everyone to choose, among those tests, the one that becomes best one's taste taste for risk. One crude way of preceeding is to choose an upper bound on the type I error risk (alpha) and minimize the type II error risk.

For more details, read Simon's French book, "Decision Theory: an introduction to the mathematics of rationality", Ellis Horwood series in mathematics and its applications, Halsted Press, 1988.

Most R functions that perform (classical) tests are in the "stats" package (it is already loaded).

library(help="stats")

The list of functions is huge: look for those containing the "test" string.

> apropos(".test")

[1] "ansari.test" "bartlett.test" "binom.test"

[4] "Box.test" "chisq.test" "cor.test"

[7] "fisher.test" "fligner.test" "friedman.test"

[10] "kruskal.test" "ks.test" "mantelhaen.test"

[13] "mcnemar.test" "mood.test" "oneway.test"

[16] "pairwise.prop.test" "pairwise.t.test" "pairwise.wilcox.test"

[19] "power.anova.test" "power.prop.test" "power.t.test"

[22] "PP.test" "prop.test" "prop.trend.test"

[25] "quade.test" "shapiro.test" "t.test"

[28] "var.test" "wilcox.test"

One would expect those functions to yield a result as "Null hypothesis rejected" or "Null hypothesis not rejected" -- bad luck. The user has to know how to interpret the results, with a critical eye.

The result is mainly a number, the p-value. It is the probability to get a result at least as extreme. If it is close to one, we do not reject the hypothesis, i.e., the test did not find anything statistically significant; if it close to zero, we can reject the null hypothesis. More precisely, before performing the test, we choose a confidence level alpha (often 0.05; for human health, you will be more conservative an choose 0.01 or even less; if you want results with little data, if you do not mind that those results are not reliable, you can take 0.10): if p<alpha, you reject the null hypothesis, if p>alpha, you do not reject it.

Of course, you have to choose the confidence level alpha BEFORE performing the test (otherwise, you will choose it so that it produces the results you want)...

For instance,

> x <- rnorm(200)

> t.test(x)

One Sample t-test

data: x

t = 3.1091, df = 199, p-value = 0.002152

alternative hypothesis: true mean is not equal to 0

95 percent confidence interval:

0.07855896 0.35102887

sample estimates:

mean of x

0.2147939If we reject the null hypothesis (here: "the mean is zero"), we will be wrong with a probability 0.002152, i.e., in 2 cases out of 1,000.

We can check that with simulations: if the null hypothesis H0 is true, in 95% of the cases, we have p>0.05. Nore precisely, if H0 is true, the p-value is unifromly distributed in [0,1].

p <- c()

for (i in 1:1000) {

x <- rnorm(200)

p <- append(p, t.test(x)$p.value)

}

hist(p, col='light blue')

p <- sort(p) p[950] p[50] x <- 1:1000 plot(p ~ x, main="p-value of a Student T test when H0 is true")

That was the type I error risk (wrongly rejecting the null hypothesis). Let us now focus on the type II error risk. This time, the population mean is non-zero. The risk will depend on the actual value of this mean -- but we do not know this value. If the mean is close to zero, the risk is high (we need a lot of data to spot small differences), if it is farther, the risk is lower.

# Sample size

n <- 10

# Number of simulations

# (sufficiently large to get a good approximation

# of the probability)

m <- 1000

# Number of points to draw the curve

k <- 50

# Maximum value for the actual mean

M <- 2

r <- vector()

for (j in M*(0:k)/k) {

res <- 0

for (i in 1:m) {

x <- rnorm(10, mean=j)

if( t.test(x)$p.value > .05 ){

res <- res + 1

}

}

r <- append(r, res/m)

}

rr <- M*(0:k)/k

plot(r~rr, type="l",

xlab='difference in mean',

ylab="type II error probability")

# Comparison with the curve produced by "power.t.test"

x <- seq(0,2,length=200)

y <- NULL

for (m in x) {

y <- append(y, 1-power.t.test(delta=m, sd=1, n=10, sig.level=.05,

type='one.sample')$power)

}

lines(x,y,col='red')

# Theoretical curve

# (This is a Z test, not too different)

r2 <- function (p,q,conf,x) {

p(q(1-conf/2)-x) - p(q(conf/2)-x)

}

f <- function(x) {

p <- function (t) { pnorm(t, sd=1/sqrt(10)) }

q <- function (t) { qnorm(t, sd=1/sqrt(10)) }

r2(p,q,.05,x)

}

curve( f(x) , add=T, col="blue" )

# Theoretical curve (T test)

f <- function(x) {

p <- function (t) { pt(sqrt(10)*t, 10) } # Is this correct?

q <- function (t) { qt(t, 10)/sqrt(10) }

r2(p,q,.05,x)

}

curve( f(x) , add=T, col="green" )

legend(par('usr')[2], par('usr')[4], xjust=1,

c('simulation', 'power.t.test', '"exact" value, Z test',

'excat value'),

col=c(par('fg'),'red','blue','green'),

lwd=1,lty=1)

title(main="Type II error risk in a Student T test")

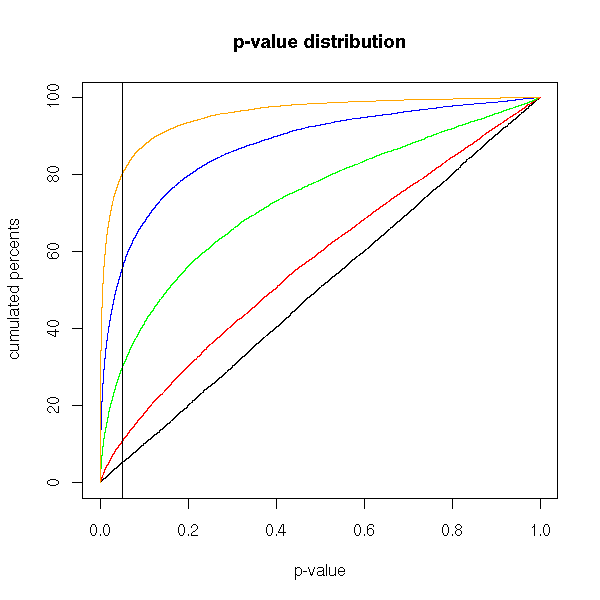

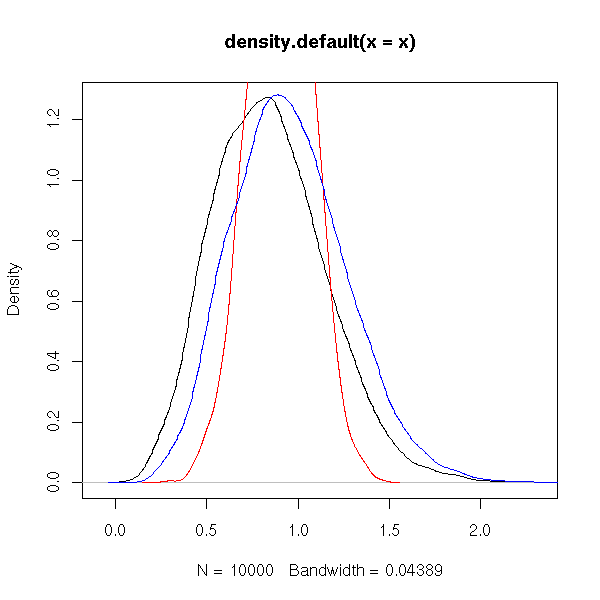

We saw earlier that, if the null hypothesis is true, the p-values are uniformly distributed in [0,1]. Here is their distribution if H0 is false, for different values of the population mean.

N <- 10000

x <- 100*(1:N)/N

plot( x~I(x/100), type='n', ylab="cumulated percents", xlab="p-value" )

do.it <- function (m, col) {

p <- c()

for (i in 1:N) {

x <- m+rnorm(200)

p <- append(p, t.test(x)$p.value)

}

p <- sort(p)

x <- 100*(1:N)/N

lines(x ~ p, type='l', col=col)

}

do.it(0, par('fg'))

do.it(.05, 'red')

do.it(.1, 'green')

do.it(.15, 'blue')

do.it(.2, 'orange')

abline(v=.05)

title(main='p-value distribution')

legend(par('usr')[2],par('usr')[3],xjust=1,yjust=1,

c('m=0', 'm=0.05', 'm=.01', 'm=.015', 'm=.02'),

col=c(par('fg'), 'red', 'green', 'blue', 'orange'),

lty=1,lwd=1)

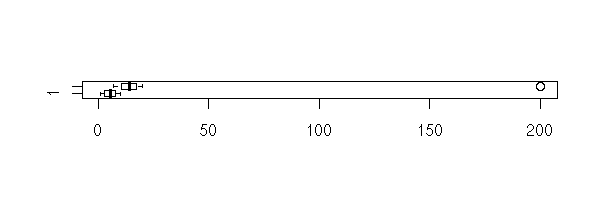

The manual gives this striking example, that explains why R does not give a clear result "Null hypothesis rejected" or "Null hypothesis not rejected". If we look at the data (with the eyes, on a plot -- always plot the data) we can state with little risk of error that the means are very different. However, the p-value is very high and suggests not to reject the null hypothesis that the means are equal. One problem is the size of the confidence interval: it is extremely large. Thus you should carefully look at the data. (On this example, there was one more problem: to perform a Student T test, the data have to be gaussian, to have the same variance, to be independant -- this is far from being the case).

TODO: somewhere in this document, state how to correctly perform a test. 0. Choose a confidence level, a test 1. Plot the data 2. Check the assumptions. Change the test if needed. 3. Perform the test. Check the confidence interval. TODO: where is my rant against T-values? x <- 1:10 y <- c(7:20, 200) boxplot(x,y, horizontal=T)

boxplot(x,y, log="x", horizontal=T)

> t.test(x, y)

Welch Two Sample t-test

data: x and y

t = -1.6329, df = 14.165, p-value = 0.1245

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-47.242900 6.376233

sample estimates:

mean of x mean of y

5.50000 25.93333Of course, if we remove the outlier, 200, the result conforms with our intuition.

> t.test(1:10,y=c(7:20))

Welch Two Sample t-test

data: 1:10 and c(7:20)

t = -5.4349, df = 21.982, p-value = 1.855e-05

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-11.052802 -4.947198

sample estimates:

mean of x mean of y

5.5 13.5Similarly, if we use a non parametric test -- what we should have done from the very beginning.

> wilcox.test(x,y)

Wilcoxon rank sum test with continuity correction

data: x and y

W = 8, p-value = 0.0002229

alternative hypothesis: true mu is not equal to 0

Warning message:

Cannot compute exact p-value with ties in: wilcox.test.default(x, y)

Most of these tests are only valid for gaussian variables. Furthermore, if there are several samples, they often ask them to be independant and have the same variance.

Here, we want to find the mean of a random variable or, more precisely, to compare it with a predefined value.

?t.test

Assumptions: The variable is gaussian (if it is not, you can use Wilcoxon's U test).

We already gave an example above.

Here is the theory.

The null hypothesis is H0: "the mean is m", the alternative hypothesis is H1: "the mean is not m". We compute

sample mean - m

T = ---------------------------------------

sample standard deviation / sqrt(n)(beware: there are two formulas for the standard deviation: the population standard deviation, with "n" in the denominator, and the sample standard deviation, with "n-1" -- R only uses the latter) (here, n is the sample size) and we reject H0 if

abs( T ) > t_{n-1} ^{-1} ( 1 - alpha/2 )where T_{n-1} is the student T distribution with n-1 degrees of freedom.

(With gaussian independant identically distributed random variables, T indeed follows this distribution -- you can actually define the Student T distribution that way.)

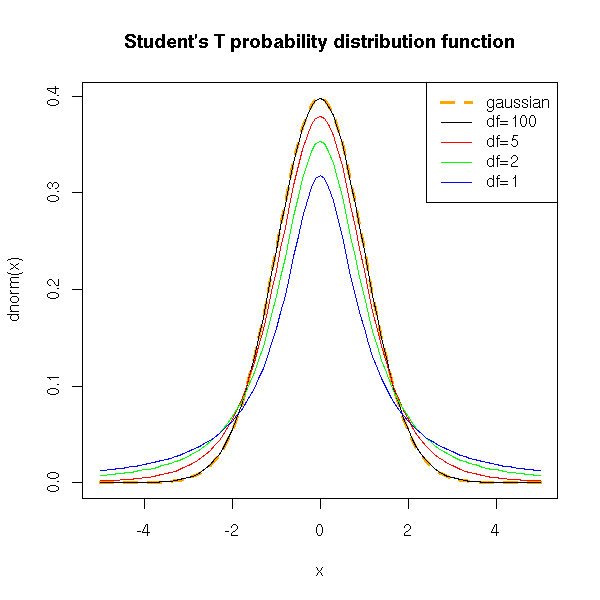

Here are a few Student T probability distribution functions. The larger n, the closer it is from a gaussian distribution.

curve(dnorm(x), from=-5, to=5, add=F, col="orange", lwd=3, lty=2)

curve(dt(x,100), from=-5, to=5, add=T, col=par('fg'))

curve(dt(x,5), from=-5, to=5, add=T, col="red")

curve(dt(x,2), from=-5, to=5, add=T, col="green")

curve(dt(x,1), from=-5, to=5, add=T, col="blue")

legend(par('usr')[2], par('usr')[4], xjust=1,

c('gaussian', 'df=100', 'df=5', 'df=2', 'df=1'),

col=c('orange', par('fg'), 'red', 'green', 'blue'),

lwd=c(3,1,1,1,1),

lty=c(2,1,1,1,1))

title(main="Student's T probability distribution function")

Let us now see how to use this, by hand.

We start with a sample from a gaussian population, whose mean we want to estimate.

> x <- rnorm(200) > m <- mean(x) > m [1] 0.05875323

We now try to find an interval, centered on this sample mean, in which we ahve a 95% probability of finding the actual (population) mean (it is zero).

x <- rnorm(100) n <- length(x) m <- mean(x) m alpha <- .05 m + sd(x)/sqrt(n)*qt(alpha/2, df=n-1, lower.tail=T) m + sd(x)/sqrt(n)*qt(alpha/2, df=n-1, lower.tail=F)

We get [m - 0.19, m + 0.19].

The "t.test" function provides a similar result.

> t.test(x, mu=0, conf.level=0.95)

One Sample t-test

data: x

t = 0.214, df = 99, p-value = 0.831

alternative hypothesis: true mean is not equal to 0

95 percent confidence interval:

-0.1987368 0.2467923

sample estimates:

mean of x

0.02402775You can also experimentally check this with, with a simulation.

> m = c()

> for (i in 1:10000) {

+ m <- append(m, mean(rnorm(100)) )

+ }

> m <- sort(m)

> m[250]

[1] -0.1982188

> m[9750]

[1] 0.1999646The interval is [-0.20, +0.20]: we get approximately the same result as the theoretical computations (this will always be the case with Monte-Carlo methods: the approximation is coarse, the convergence is slow, we need a lot of iterations to get a reliable result).

We can also plot the result of those simulations. We see that the actual mean is sometimes outside the confidence interval.

N <- 50

n <- 5

v <- matrix(c(0,0),nrow=2)

for (i in 1:N) {

x <- rnorm(n)

v <- cbind(v, t.test(x)$conf.int)

}

v <- v[,2:(N+1)]

plot(apply(v,2,mean), ylim=c(min(v),max(v)),

ylab='Confidence interval', xlab='')

abline(0,0)

c <- apply(v,2,min)>0 | apply(v,2,max)<0

segments(1:N,v[1,],1:N,v[2,], col=c(par('fg'),'red')[c+1], lwd=3)

title(main="The population mean need not be in the confidence interval")

Let us perform a simulation to see what happens with non normal data -- a situation in which one should be using the Wilcoxon U test.

Let us first check with a distribution less dispersed that the gaussian one: the uniform distribution.

> N <- 1000

> n <- 3

> v <- vector()

> for (i in 1:N) {

x <- runif(n, min=-1, max=1)

v <- append(v, t.test(x)$p.value)

}

> sum(v>.05)/N

[1] 0.932Now with gaussian data.

> N <- 1000

> n <- 3

> v <- vector()

> for (i in 1:N) {

x <- rnorm(n, sd=1/sqrt(3))

v <- append(v, t.test(x)$p.value)

}

> sum(v>.05)/N

[1] 0.952Thus, the p-value is wrong when the variable is no longer gaussian: when we think we are rejecting the null hypothesis with a 5% risk of type I error, this risk is actually (for a uniform distribution, which is very far from pathological) 7%.

Let us seewhat happens to the confidence interval: we should be in it in 95% of the cases.

For a uniform distribution:

> N <- 1000

> n <- 3

> v <- vector()

> for (i in 1:N) {

x <- runif(n, min=-1, max=1)

r <- t.test(x)$conf.int

v <- append(v, r[1]<0 & r[2]>0)

}

> sum(v)/N

[1] 0.919For a normal distribution:

> N <- 1000

> n <- 100

> v <- vector()

> for (i in 1:N) {

x <- rnorm(n, sd=1/sqrt(3))

r <- t.test(x)$conf.int

v <- append(v, r[1]<0 & r[2]>0)

}

> sum(v)/N

[1] 0.947For the uniform distribution, we are in the confidence interval in 92% of the cases instead of the expected 95%. Actually, when the distribution is not gaussian, the results can be "wrong" in two ways: either the distribution is less dispersed than the gaussian, and the p-value is higher than stated by the test and the confidence interval provided is too small; or the distribution is more dispersed than the gaussian, the p-value will always be high, the confidence interval will be huge, and the test will no longer test anything (it loses its power, it will always state: there is nothing noticeable -- you can say it is blinded by the outliers).

TODO: I will say this later...

However, if the sample is much larger, the error becomes negligible.

> N <- 1000

> n <- 100

> v <- vector()

> for (i in 1:N) {

x <- runif(n, min=-1, max=1)

v <- append(v, t.test(x)$p.value)

}

> sum(v>.05)/N

[1] 0.947

> N <- 1000

> n <- 100

> v <- vector()

> for (i in 1:N) {

x <- runif(n, min=-1, max=1)

r <- t.test(x)$conf.int

v <- append(v, r[1]<0 & r[2]>0)

}

> sum(v)/N

[1] 0.945Let us now check the power of the test.

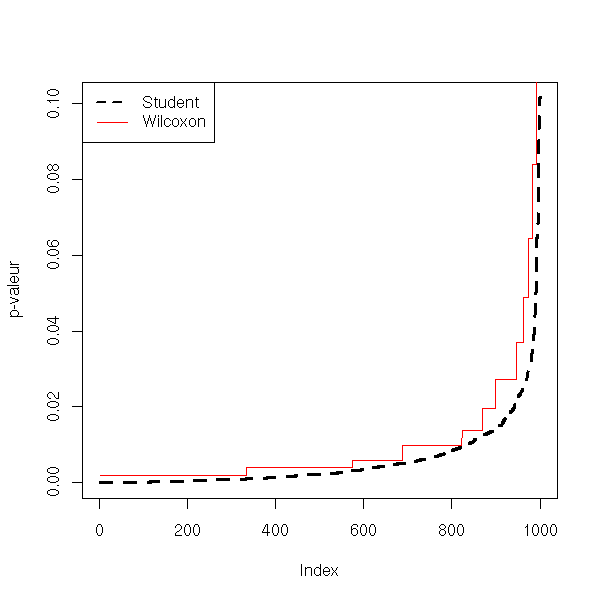

N <- 1000

n <- 10

delta <- .8

v <- vector()

w <- vector()

for (i in 1:N) {

x <- delta+runif(n, min=-1, max=1)

v <- append(v, t.test(x)$p.value)

w <- append(w, wilcox.test(x)$p.value)

}

plot(sort(v), type='l', lwd=3, lty=2, ylab="p-valeur")

lines(sort(w), col='red')

legend(par('usr')[1], par('usr')[4], xjust=0,

c('Student', 'Wilcoxon'),

lwd=c(2,1),

lty=c(2,1),

col=c(par("fg"), 'red'))

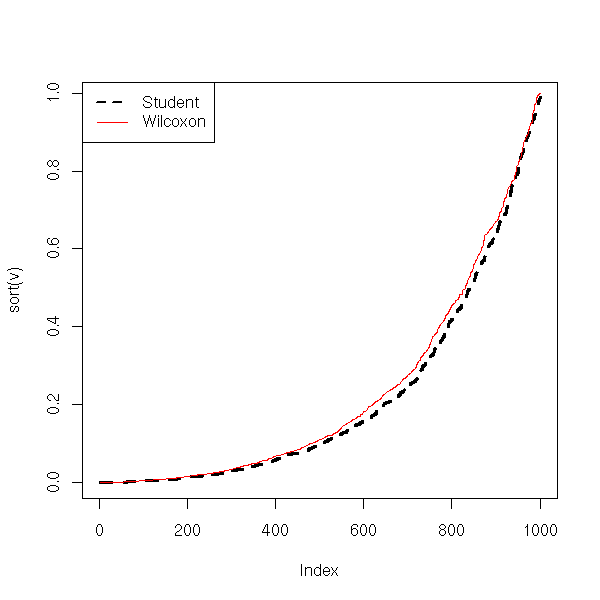

N <- 1000

n <- 100

delta <- .1

v <- vector()

w <- vector()

for (i in 1:N) {

x <- delta+runif(n, min=-1, max=1)

v <- append(v, t.test(x)$p.value)

w <- append(w, wilcox.test(x)$p.value)

}

plot(sort(v), type='l', lwd=3, lty=2)

lines(sort(w), col='red')

legend(par('usr')[1], par('usr')[4], xjust=0,

c('Student', 'Wilcoxon'),

lwd=c(2,1),

lty=c(2,1),

col=c(par("fg"), 'red'))

N <- 1000

n <- 100

delta <- .8

v <- vector()

w <- vector()

for (i in 1:N) {

x <- delta+runif(n, min=-1, max=1)

v <- append(v, t.test(x)$p.value)

w <- append(w, wilcox.test(x)$p.value)

}

plot(sort(v), type='l', lwd=3, lty=2)

lines(sort(w), col='red')

legend(par('usr')[1], par('usr')[4], xjust=0,

c('Student', 'Wilcoxon'),

lwd=c(2,1),

lty=c(2,1),

col=c(par("fg"), 'red'))

From this, Student's T test seems robust: if the data are less dispersed than with a gaussian distribution, the p-value and the confidence interval are underestimated, but not too much. This effect disappeard if the sample is large enough.

But wait! Let us now see waht happens with a distribution more dispersed than the gaussian -- e.g., the Cauchy distribution.

> N <- 1000

> n <- 3

> v <- vector()

> for (i in 1:N) {

+ x <- rcauchy(n)

+ v <- append(v, t.test(x)$p.value)

+ }

> sum(v>.05)/N

[1] 0.974

> N <- 1000

> n <- 3

> v <- vector()

> for (i in 1:N) {

+ x <- rcauchy(n)

+ r <- t.test(x)$conf.int

+ v <- append(v, r[1]<0 & r[2]>0)

+ }

> sum(v)/N

[1] 0.988The given confidence interval is too large: we are not in it in 95% of the cases, but much more. Things do not improve with an even larger sample.

> N <- 1000

> n <- 100

> v <- vector()

> for (i in 1:N) {

+ x <- rcauchy(n)

+ v <- append(v, t.test(x)$p.value)

+ }

> sum(v>.05)/N

[1] 0.982

> N <- 1000

> n <- 100

> v <- vector()

> for (i in 1:N) {

+ x <- rcauchy(n)

+ r <- t.test(x)$conf.int

+ v <- append(v, r[1]<0 & r[2]>0)

+ }

> sum(v)/N

[1] 0.986Let us now check the test power: this is disastrous...

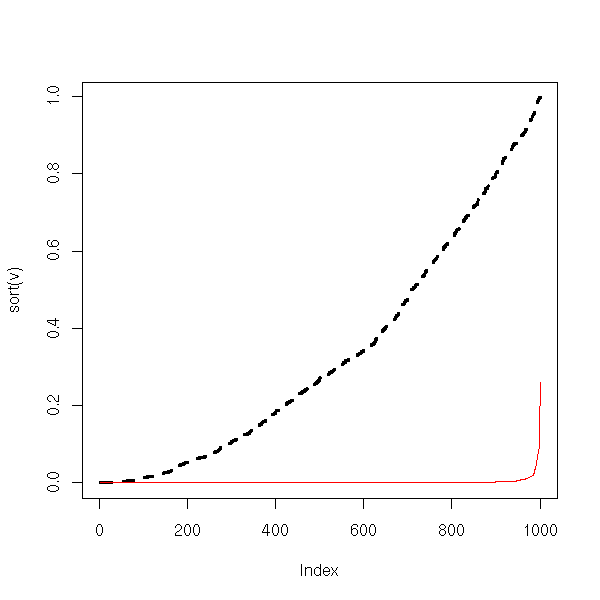

N <- 1000

n <- 10

delta <- 1

v <- vector()

w <- vector()

for (i in 1:N) {

x <- delta+rcauchy(n)

v <- append(v, t.test(x)$p.value)

w <- append(w, wilcox.test(x)$p.value)

}

plot(sort(v), type='l', lwd=3, lty=2)

lines(sort(w), col='red')

N <- 1000

n <- 100

delta <- 1

v <- vector()

w <- vector()

for (i in 1:N) {

x <- delta+rcauchy(n)

v <- append(v, t.test(x)$p.value)

w <- append(w, wilcox.test(x)$p.value)

}

plot(sort(v), type='l', lwd=3, lty=2)

lines(sort(w), col='red')

From this, we conclude that if the data are more dispersed than with a gaussian distribution, the power of the test decreases and nothing remains. Increasing th sample size does not improve things. In these situations, one should use non-parametric tests (or a parametric test adapted to the distribution at hand, or transform the data so that it looks more normal -- be beware, using the same data to choose the distribution or the trans formation and to perform the test will yield biased p-values).

> N <- 1000

> n <- 100

> v <- vector()

> w <- vector()

> for (i in 1:N) {

+ x <- rcauchy(n)

+ v <- append(v, t.test(x)$p.value)

+ w <- append(w, wilcox.test(x)$p.value)

+ }

> sum(v>.05)/N

[1] 0.976

> sum(w>.05)/N

[1] 0.948

> N <- 1000

> n <- 100

> v <- vector()

> w <- vector()

> for (i in 1:N) {

+ x <- 1+rcauchy(n)

+ v <- append(v, t.test(x)$p.value)

+ w <- append(w, wilcox.test(x)$p.value)

+ }

> sum(v<.05)/N

[1] 0.22

> sum(w<.05)/N

[1] 0.992

It is similar to the Student T test, but this time, we know the exact value of the variance: in this cas, the distribution of the test statistic is nolonger a Student T distribution but a gaussian distribution (often denoted Z).

In the real world, when we do not know the mean, we do not know the variance either -- thus, this test has little practical utility.

For large samples, Student's T distribution is well approximated by a gaussian distribution, so we can perform a Z test instead of a T test (that would be relevant if we we computing everything ourselves, but as the computer is there...)

We now consider two samples and we would like to know if they come from the same population. More simply, we would like to know if they have the same mean.

Here, we assume that the data are normal (do a qqplot or a Shapiro test), have the same variance (use an F test). If those conditions are not satisfied, you can use a Wilcoxon test.

We also assume that the variables are independant (this is not always the case: for instance, you might want to compare the length of the left and right humerus on a bunch of human skeletons; to get rid of the dependance problem, you can consider the length difference for each individual and compare it with zero.

For two samples of size n (it also works for samples of different sizes, but the formula is more complicated), one can show that the statistic

t = difference between the means / sqrt( (sum of the variances)/n )

follows a Student distribution with 2n-n degrees of freedom.

We shall reject the null hypothesis "the means are equal" if

abs(t) > abs( t(alpha, 2n-2) )

We could perform the test by hand, as above, but there is already a function to do so.

?t.test

> x <- rnorm(100)

> y <- rnorm(100)

> t.test(x,y)

Welch Two Sample t-test

data: x and y

t = -1.3393, df = 197.725, p-value = 0.182

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-0.46324980 0.08851724

sample estimates:

mean of x mean of y

-0.03608115 0.15128514

> t.test(x, y, alternative="greater")

Welch Two Sample t-test

data: x and y

t = -1.3393, df = 197.725, p-value = 0.909

alternative hypothesis: true difference in means is greater than 0

95 percent confidence interval:

-0.4185611 Inf

sample estimates:

mean of x mean of y

-0.03608115 0.15128514Here is the example from the manual: the study of the efficiency of a soporific drug.

> ?sleep

> data(sleep)

> plot(extra ~ group, data = sleep)

> t.test(extra ~ group, data = sleep)

Welch Two Sample t-test

data: extra by group

t = -1.8608, df = 17.776, p-value = 0.0794

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-3.3654832 0.2054832

sample estimates:

mean in group 1 mean in group 2

0.75 2.33Here, as the p-value is greater than 5%, we would conclude that the drug's effects are not noticeable in this sample. However, if you work for the drug's manufacturer, you would prefer a different conclusion. You can get it if you change the alternative hypothsis, that becomes H1: "the drug increases the time of sleep". (If you are honest, you choose the null and alternative hypotheses before performing the experiment, and this choice must be backed by prior data or knowledge: remarking that in this very experiment the sample mean is greater is not sufficient.)

> t.test(extra ~ group, data = sleep, alternative="less")

Welch Two Sample t-test

data: extra by group

t = -1.8608, df = 17.776, p-value = 0.0397

alternative hypothesis: true difference in means is less than 0

95 percent confidence interval:

-Inf -0.1066185

sample estimates:

mean in group 1 mean in group 2

0.75 2.33TODO: There should be a section "How to lie with statistics"

1. Reusing the same data several times to increase the

statistical significance of an effect. (examples:

choose H1 after looking at the data, use the data to

choose the transformation to apply, etc.)

2. Using a statistical test whose asumptions are not

satisfied (it can either yield more significant results

or less significant results: e.g., if the test assumes

that ******************** (deleted?)

3. Perform the same experiment several times and only

publish the results that go in the direction you

want. (Adverts by tobacco companies claiming that

passive smoking is harmless are done that way.)

4. Misleading plots

Adding a lot of unnecessary details

3D effects

- The most striking is the 3-dimensional piechart,

with the part of interest at the forefront, so that

it be deformed and larger)

- You can also plot a quantity as a sphere (ar any

3-dimensional object) of different scales: for a

quantity twice as large, you can multiply all the

dimensions by 2, thereby getting a volume eight

times as large.If you have more than two samples, you can perform an ANalysis Of VAriance (Anova). If you have two non-gaussain samples, you can perform a Wilcoxon U test. If you have more that two non-gaussian samples, you can turn to non-parametric analysis of variance with the Kruskal--Wallis test.

To compare means with a Student T test, we assume that: the samples are gaussian, independant and have the same variance.

Let us check what happens with gaussian non-equivariant samples.

TODO: check that there is no confusion between the Student T test and Welch's test (var.equal=T for the first, var.equal=F (the default) for the second).

N <- 1000

n <- 10

v <- 100

a <- NULL

b <- NULL

for (i in 1:N) {

x <- rnorm(n)

y <- rnorm(n, 0, v)

a <- append(a, t.test(x,y)$p.value)

b <- append(b, t.test(x/var(x), y/var(y))$p.value)

}

plot(sort(a), type='l', col="green")

points(sort(b), type="l", col="red")

abline(0,1/N)

And for the power:

N <- 1000

n <- 10

v <- 100

a <- NULL

b <- NULL

c <- NULL

d <- NULL

for (i in 1:N) {

x <- rnorm(n)

y <- rnorm(n, 100, v)

a <- append(a, t.test(x,y)$p.value)

b <- append(b, t.test(x/var(x), y/var(y))$p.value)

c <- append(c, t.test(x, y/10000)$p.value)

d <- append(d, wilcox.test(x, y)$p.value)

}

plot(sort(a), type='l', col="green")

points(sort(b), type="l", col="red")

points(sort(c), type="l", col="blue")

points(sort(d), type="l", col="orange")

abline(0,1/N)

legend(par('usr')[1], par('usr')[4],

c('Student Test', 'Renormalized Student Test',

'Student Test renormalized with the sample variances',

"(Non-Parametric) Wilcoxson's U Test"),

col=c('green', 'blue', 'red', 'orange'),

lwd=1,lty=1)

title(main="Student's T test on non-equivariant samples")

With non-equivariant samples, the p-value of the Student test remains correct, but the power dramatically decreases. If the data look gaussian but have different variances, you had better normalize them andto perform a Student T test than perform a non-parametric test.

TODO: What about the Welch test???

There are several ways of comparing two means.

data(sleep)

boxplot(extra ~ group, data=sleep,

horizontal=T,

xlab='extra', ylab='group')

With a Student T test, if the data are gaussian (or a Welch test, if they are gaussian but have different variances).

> t.test(extra ~ group, data=sleep)

Welch Two Sample t-test

data: extra by group

t = -1.8608, df = 17.776, p-value = 0.0794

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-3.3654832 0.2054832

sample estimates:

mean in group 1 mean in group 2

0.75 2.33

> t.test(extra ~ group, data=sleep, var.equal=T)

Two Sample t-test

data: extra by group

t = -1.8608, df = 18, p-value = 0.07919

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-3.3638740 0.2038740

sample estimates:

mean in group 1 mean in group 2

0.75 2.33With a Wilcoxon test, if we do not know that the data are gaussian, or if we suspect they are not (from a Shapiro--Wilk test, for instance).

> wilcox.test(extra ~ group, data=sleep)

Wilcoxon rank sum test with continuity correction

data: extra by group

W = 25.5, p-value = 0.06933

alternative hypothesis: true mu is not equal to 0

Warning message:

Cannot compute exact p-value with ties in: wilcox.test.default(x = c(0.7, -1.6,With an analysis of variance (we will present this later -- with only two samples, it yields exactly the same result as the Student T test).

> anova(lm(extra ~ group, data=sleep))

Analysis of Variance Table

Response: extra

Df Sum Sq Mean Sq F value Pr(>F)

group 1 12.482 12.482 3.4626 0.07919 .

Residuals 18 64.886 3.605

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1With a non parametric analysis of variance, i.e., a Kruskal--Wallis test.

> kruskal.test(extra ~ group, data=sleep)

Kruskal-Wallis rank sum test

data: extra by group

Kruskal-Wallis chi-squared = 3.4378, df = 1, p-value = 0.06372Here are the results

Method p-value Welch test 0.07919 Student T test 0.0794 Wilcoxon 0.06933 Analysis of Variance 0.07919 Kruskal--Wallis Test 0.06372

We are looking for the variance of a sample (whose mean is unknown).

Here is the theory.

The null hypothesis is H0: "the (population) variance is v", the alternative hypothesis is H1: "the variance is not v". We compute the statistic

Chi2 = (n-1) * (sample variance) / v

and je reject the null hypothesis H0 if

Chi2 > Chi2_{n-1} ^{-1} ( 1 - alpha/2 )

or

Chi2 < Chi2_{n-1} ^{-1} ( alpha/2 )where Chi2_{n-1} is the Chi2 distribution with n-1 degrees of freedom.

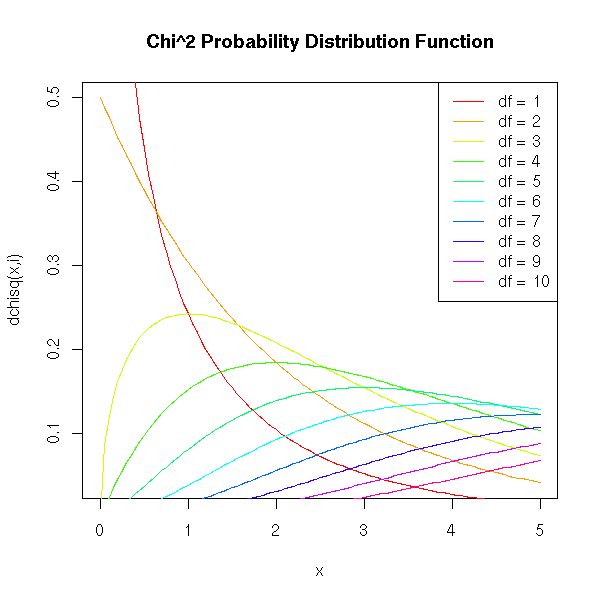

Here is the Chi^2 probability distribution function, with various degrees of freedom.

curve(dchisq(x,2), from=0, to=5, add=F, col="red",

ylab="dchisq(x,i)")

n <- 10

col <- rainbow(n)

for (i in 1:n) {

curve(dchisq(x,i), from=0, to=5, add=T, col=col[i])

}

legend(par('usr')[2], par('usr')[4], xjust=1,

paste('df =',1:n),

lwd=1,

lty=1,

col=col)

title(main="Chi^2 Probability Distribution Function")

We can get a confidence interval for the standard deviation, by hand, as follows.

alpha <- .05 x <- rnorm(200) n <- length(x) v = var(x) sd(x) sqrt( (n-1)*v / qchisq(alpha/2, df=n-1, lower.tail=F) ) sqrt( (n-1)*v / qchisq(alpha/2, df=n-1, lower.tail=T) )

We get [0.91, 1.11].

We can check this with a simulation:

v <- c(0)

for (i in 1:10000) {

v <- append(v, var(rnorm(200)) )

}

v <- sort(v)

sqrt(v[250])

sqrt(v[9750])We get [0.90, 1.10].

With smaller samples, the estimation is less reliable: the confidence interval is larger, [0.68, 1.32].

v <- c(0)

for (i in 1:10000) {

v <- append(v, var(rnorm(20)) )

}

v <- sort(v)

sqrt(v[250])

sqrt(v[9750])I have not found an R function that performs those computations (the "var.test" works with two samples): we can write our own.

chisq.var.test <- function (x, var=1, conf.level=.95,

alternative='two.sided') {

result <- list()

alpha <- 1-conf.level

n <- length(x)

v <- var(x)

result$var <- v

result$sd <- sd(x)

chi2 <- (n-1)*v/var

result$chi2 <- chi2

p <- pchisq(chi2,n-1)

if( alternative == 'less' ) {

stop("Not implemented yet")

} else if (alternative == 'greater') {

stop("Not implemented yet")

} else if (alternative == 'two.sided') {

if(p>.5)

p <- 1-p

p <- 2*p

result$p.value <- p

result$conf.int.var <- c(

(n-1)*v / qchisq(alpha/2, df=n-1, lower.tail=F),

(n-1)*v / qchisq(alpha/2, df=n-1, lower.tail=T),

)

}

result$conf.int.sd <- sqrt( result$conf.int.var )

result

}

x <- rnorm(100)

chisq.var.test(x)

# We can check tha the results are correct by looking at

# the distribution of the p-values: it should be uniform

# in [0,1].

v <- NULL

for (i in 1:1000) {

v <- append(v, chisq.var.test(rnorm(100))$p.value)

}

plot(sort(v))

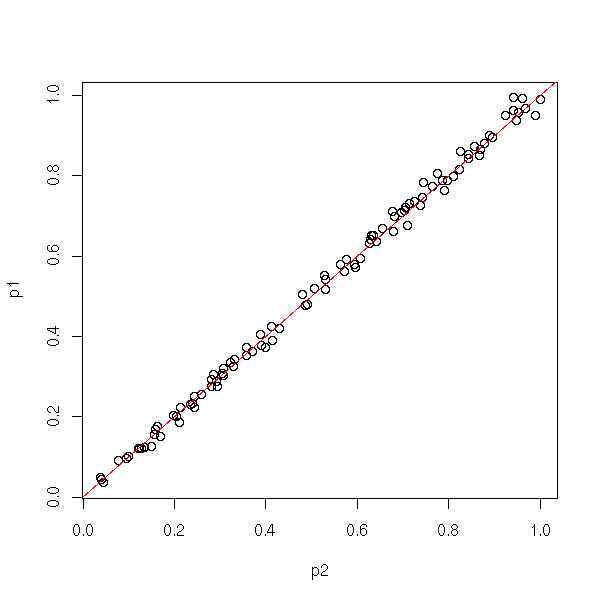

We can also compare the results with those of the "var.test" function, that works woth two samples. Either graphically,

p1 <- NULL

p2 <- NULL

for (i in 1:100) {

x <- rnorm(10)

p1 <- append(p1, chisq.var.test(x)$p.value)

p2 <- append(p2, var.test(x, rnorm(10000))$p.value)

}

plot( p1 ~ p2 )

abline(0,1,col='red')

or with a computation (we shall see later what it means: this is a test on a regression, that tells us that p1=p2 with a p-value equal to 0.325).

> summary(lm(p1-p2~0+p2))

Call:

lm(formula = p1 - p2 ~ 0 + p2)

Residuals:

Min 1Q Median 3Q Max

-0.043113 -0.007930 0.001312 0.009386 0.048491

Coefficients:

Estimate Std. Error t value Pr(>|t|)

p2 -0.002609 0.002638 -0.989 0.325

Residual standard error: 0.01552 on 99 degrees of freedom

Multiple R-Squared: 0.009787, Adjusted R-squared: -0.0002151

F-statistic: 0.9785 on 1 and 99 DF, p-value: 0.325

Here, we want to know if two samples come from populations from the same variance (we are not interested in the mean).

We proceed as for the comparison of means, but instead of considering the difference of means, we consider the quotient of variances.

One will use such a test before a Student T test (to compare the mean in two samples), to check that the equivariance assumption is valid.

Here is the example with which we had illustrated the Student T test: indeed, the variances do not seem too different.

?var.test

> var.test( sleep[,1] [sleep[,2]==1], sleep[,1] [sleep[,2]==2] )

F test to compare two variances

data: sleep[, 1][sleep[, 2] == 1] and sleep[, 1][sleep[, 2] == 2]

F = 0.7983, num df = 9, denom df = 9, p-value = 0.7427

alternative hypothesis: true ratio of variances is not equal to 1

95 percent confidence interval:

0.198297 3.214123

sample estimates:

ratio of variances

0.7983426Here is the theory behind this test.

The null hypothesis is H0: "the two populations have the same variance", the alternative hypothesis is H1: "the two populations do not have the same variance". We compute the statistic

variance of the first sample

F = -------------------------------

variance of the second sample(here, I assume that the two samples have the same size, otherwise the formula becomes more complicated) and we reject H0 if

F < F _{n1-1, n2-2} ^{-1} ( alpha/2 )

or

F > F _{n1-1, n2-2} ^{-1} ( 1 - alpha/2 )where F is Fisher's distribution.

Practically, we compute the quotient of variances with the largest variance in the numerator and we reject the null hypothesis that the variances are equal if

F > F(alpha/2, n1-1, n2-1)

where n1 and n2 are the sample sizes.

Here is an example, where the computations were performed by hand.

> x <- rnorm(100, 0, 1) > y <- rnorm(100, 0, 2) > f <- var(y)/var(x) > f [1] 5.232247 > qf(alpha/2, 99, 99) [1] 0.6728417 > f > qf(alpha/2, 99, 99) [1] TRUE

If we have more than two samples, we can use Bartlett's test. Of we have two non-gaussian samples, we can use Ansari's or Mood's non parametric test. Of there are more that two non-gaussian samples, we can use Fligner's test.

?bartlett.test ?ansari.test ?mood.test ?fligner.test

In a sample of 100 butterflies, we found 45 males and 55 females. Can we conclude that there are, in general, more males than females?

The number of female butterflies in a samples if 100 animals follows a binimial distribution B(100,p) and we want to test the null hypothesis H0: "p=0.5" against the alternative hypothesis H1: "p different from 0.5".

> binom.test(55, 100, .5)

Exact binomial test

data: 55 and 100

number of successes = 55, number of trials = 100, p-value = 0.3682

alternative hypothesis: true probability of success is not equal to 0.5

95 percent confidence interval:

0.4472802 0.6496798

sample estimates:

probability of success

0.55In this example, the difference is not statistically significant.

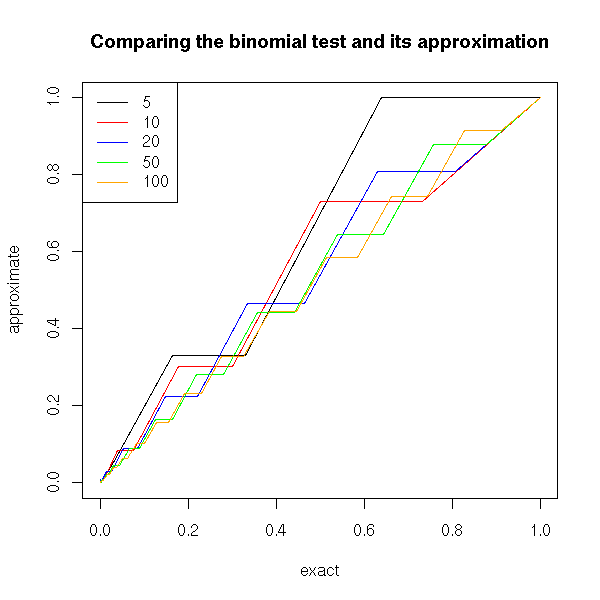

If we were doing the computations by hand, we would not use the binomial test, but an approximation, with the "prop.test". But as the computer performscarries out the computations for us, we need not use it.

p <- .3

col.values <- c(par('fg'),'red', 'blue', 'green', 'orange')

n.values <- c(5,10,20,50,100)

plot(0, type='n', xlim=c(0,1), ylim=c(0,1), xlab='exact', ylab='approximate')

for (i in 1:length(n.values)) {

n <- n.values[i]

x <- NULL

y <- NULL

for (a in 0:n) {

x <- append(x, binom.test(a,n,p)$p.value)

y <- append(y, prop.test(a,n,p)$p.value)

}

o <- order(x)

lines(x[o],y[o], col=col.values[i])

}

legend(par('usr')[1],par('usr')[4],

as.character(n.values),

col=col.values,

lwd=1,lty=1)

title(main="Comparing the binomial test and its approximation")

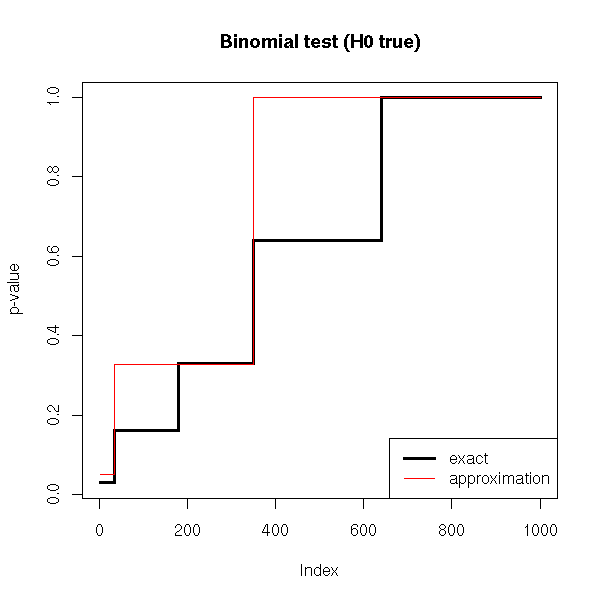

We can also compare the distribution of p-values of these two tests.

p <- .3

n <- 5

N <- 1000

e <- rbinom(N, n, p)

x <- y <- NULL

for (a in e) {

x <- append(x, binom.test(a,n,p)$p.value)

y <- append(y, prop.test(a,n,p)$p.value)

}

x <- sort(x)

y <- sort(y)

plot(x, type='l', lwd=3, ylab='p-value')

lines(y, col='red')

legend(par('usr')[2], par('usr')[3], xjust=1, yjust=0,

c('exact', 'approximation'),

lwd=c(3,1),

lty=1,

col=c(par("fg"),'red'))

title(main="Binomial test (H0 true)")

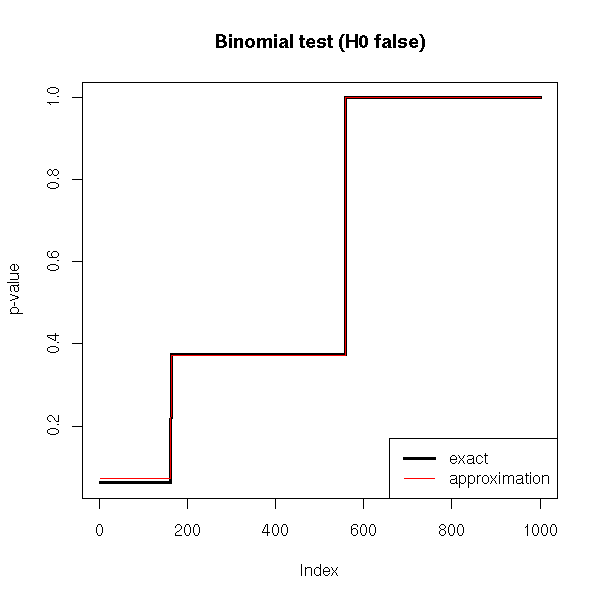

p1 <- .3

p2 <- .5

n <- 5

N <- 1000

e <- rbinom(N, n, p1)

x <- y <- NULL

for (a in e) {

x <- append(x, binom.test(a,n,p2)$p.value)

y <- append(y, prop.test(a,n,p2)$p.value)

}

x <- sort(x)

y <- sort(y)

plot(x, type='l', lwd=3, ylab='p-value')

lines(y, col='red')

legend(par('usr')[2], par('usr')[3], xjust=1, yjust=0,

c('exact', 'approximation'),

lwd=c(3,1),

lty=1,

col=c(par("fg"),'red'))

title(main="Binomial test (H0 false)")

We remark that if H0 is true, the p-value is over-estimated (the test is too conservative, it is less powerful, it does not see anything significant while there is), but the wronger H0, the better the approximation.

TODO: alternative to the binomial test: glm(y~x, family=binomial) # Logistic regression (well, for p=0.5) Question: and for p != 0.5? You can do the same for multilogistic regression

The binomial test is fine, but it does not generalize: it allows you to study a binary variable, nothing more. But sometimes we need a comparable test to study qualitative variables with more than 2 values or to study several qualitative variables. There is no such "exact multinomial test" (you can devise one, but you would have to implement it...): instead, one uses the approximate Chi2 test.

The Chi2 test is a non parametric , non-rigorous (it is an approximation) test to compare distributions of qualitative variables. In spite of that, it is the most important discrete test.

One can show that if (X1, X2, ..., Xr) is a multinomial random variable, then

( X_1 - n p_1 )^2 ( X_r - n p_r )^2

Chi^2 = ------------------- + ... + --------------------

n p_1 n p_rasymptotically follows a Chi^2 distribution with r-1 degrees of freedom. This tis is just an asymptotic result, that is sufficiently true if

n >= 100 (the sample is large enough) n p_i >= 10 (the theoretical frequencies (counts) are not too small)

In particular, we get another mock binomial test.

p <- .3

col.values <- c(par('fg'),'red', 'blue', 'green', 'orange')

n.values <- c(5,10,20,50,100)

plot(0, type='n', xlim=c(0,1), ylim=c(0,1), xlab='exact', ylab='approximate')

for (i in 1:length(n.values)) {

n <- n.values[i]

x <- NULL

y <- NULL

z <- NULL

for (a in 0:n) {

x <- append(x, binom.test(a,n,p)$p.value)

y <- append(y, chisq.test(c(a,n-a),p=c(p,1-p))$p.value)

z <- append(z, prop.test(a,n,p)$p.value)

}

o <- order(x)

lines(x[o],y[o], col=col.values[i])

lines(x[o],z[o], col=col.values[i], lty=3)

}

legend(par('usr')[1],par('usr')[4],

as.character(c(n.values, "prop.test", "chisq.test")),

col=c(col.values, par('fg'), par('fg')),

lwd=1,

lty=c(rep(1,length(n.values)), 1,3)

)

title(main="The binomial test and its approximations")

Or a mock multinomial test: let us check, with a simulation, that the p-values are close.

# A Monte Carlo multinomial test

multinom.test <- function (x, p, N=1000) {

n <- sum(x)

m <- length(x)

chi2 <- sum( (x-n*p)^2/(n*p) )

v <- NULL

for (i in 1:N) {

x <- table(factor(sample(1:m, n, replace=T, prob=p), levels=1:m))

v <- append(v, sum( (x-n*p)^2/(n*p) ))

}

sum(v>=chi2)/N

}

multinom.test( c(25,40,25,25), p=c(.25,.25,.25,.25) ) # 0.13

chisq.test( c(25,40,25,25), p=c(.25,.25,.25,.25) ) # 0.12

N <- 100

m <- 4

n <- 10

p <- c(.25,.25,.1,.4)

x <- NULL

y <- NULL

for (i in 1:N) {

a <- table( factor(sample(1:m, n, replace=T, prob=p), levels=1:m) )

x <- append(x, multinom.test(a,p))

y <- append(y, chisq.test(a,p=p)$p.value)

}

plot(y~x)

abline(0,1,col='red')

title("Monte Carlo Multinomial Test and Chi^2 Test")

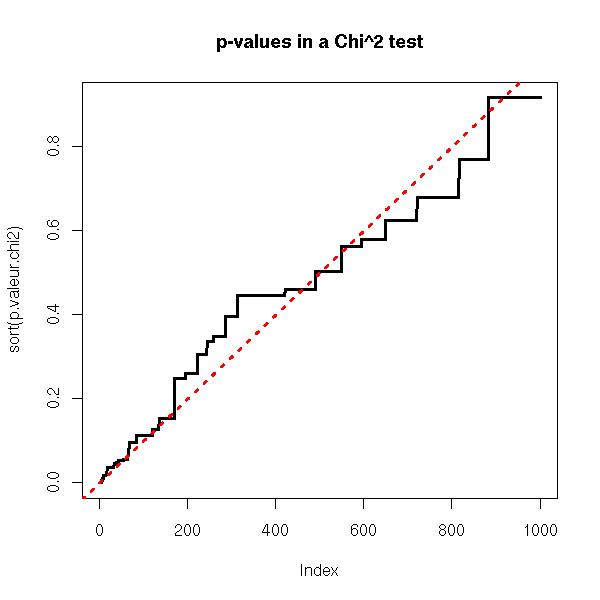

Here is the distribution of the p-values of a Chi^2 test.

# We sample 10 subjects in a 4-class population.

# We repeat the experiment 100 times.

N <- 1000

m <- 4

n <- 10

p <- c(.24,.26,.1,.4)

p.valeur.chi2 <- rep(NA,N)

for (i in 1:N) {

echantillon <- table(factor(sample(1:m, replace=T, prob=p), levels=1:m))

p.valeur.chi2[i] <- chisq.test(echantillon,p=p)$p.value

}

plot( sort(p.valeur.chi2), type='l', lwd=3 )

abline(0, 1/N, lty=3, col='red', lwd=3)

title(main="p-values in a Chi^2 test")

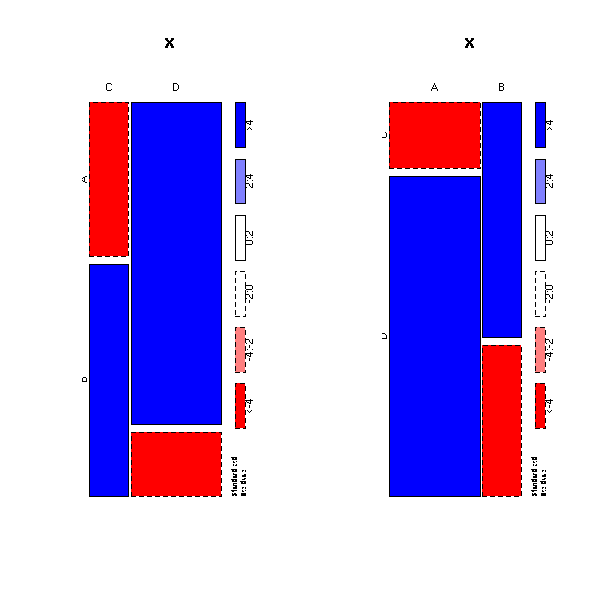

Let us consider the following situation: we measure two qualitative variables each with two values on a sample. In the whole population, the four classes occur with proportions 10%, 20%, 60%, 10%.

A B total

C 10 20 30

D 60 10 70

total 70 30 100We can get a sample as follows.

TODO: this code is ugly...

foo <- function (N) {

population1 <- c(rep('A',10), rep('B',20), rep('A',60), rep('B',10))

population1 <- factor(population1, levels=c('A','B'))

population2 <- c(rep('C',10), rep('C',20), rep('D',60), rep('D',10))

population2 <- factor(population2, levels=c('C','D'))

o <- sample(1:100, N, replace=T)

table( population2[o], population1[o] )

}

a <- foo(1000)

op <- par(mfcol=c(1,2))

plot( a, shade=T )

plot( t(a), shade=T )

par(op)

We would like to know wether these variables are independant or not. To do so, we take a sample (as always, we do not know the population direclty, we only know it through samples -- so we are not supposed to know the proportions I mentionned above); we compute the marginal proportions (i.e., the "total" row and column); the product of this row and column, so as to get the proportions one would observe if the variables were independant; we can then compare those proportions with the observed ones.

> n <- 100

> a <- foo(n)

> a/n

A B

C 0.09 0.15

D 0.65 0.11

> a1 <- apply(a/n,2,sum) # The "total" row

> a1

A B

0.74 0.26

> a2 <- apply(a/n,1,sum) # The "total" column

> a2

C D

0.24 0.76

> b <- a1 %*% t(a2)

> b

C D

[1,] 0.1776 0.5624

[2,] 0.0624 0.1976

> chisq.test(as.vector(a),p=as.vector(b))

Chi-squared test for given probabilities

data: as.vector(a)

X-squared = 591.7683, df = 3, p-value = < 2.2e-16

TODO

I think the syntax is different

chisq.test(rbind(as.vector(a), as.vector(b)))

Is the result the same?

TODO

Remark

One can also use the Chi^2 for a homogeneity test, i.e.,

to check if two samples come from the same population.

It IS an independance Chi^2 (independance between the

variable and the sample number): the syntax is the same.

chisq.test(rbind(as.vector(a), as.vector(b)))

Here, we want to check if two variables, given by a contingency table, are independant. It sounds like the Chi^2 test, but this time, it is an exact test, not an approximation.

Let us take the example we had examined above with the Chi^2 test.

> a

A B

C 9 15

D 65 11

> fisher.test(a)

Fisher's Exact Test for Count Data

data: a

p-value = 1.178e-05

alternative hypothesis: true odds ratio is not equal to 1

95 percent confidence interval:

0.03127954 0.32486526

sample estimates:

odds ratio

0.1048422With a smaller sample, it is less clear.

> fisher.test( foo(10) )

Fisher's Exact Test for Count Data

data: foo(10)

p-value = 1

alternative hypothesis: true odds ratio is not equal to 1

95 percent confidence interval:

0.003660373 39.142615141

sample estimates:

odds ratio

0.3779644

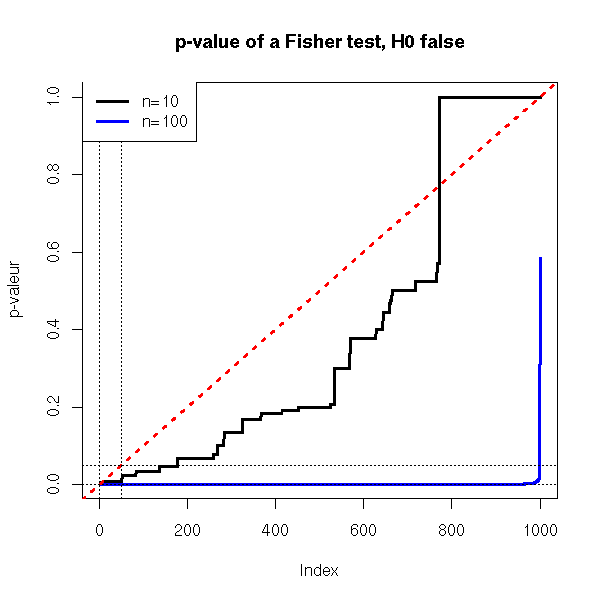

n1 <- 10

n2 <- 100

N <- 1000

x1 <- rep(NA,N)

x2 <- rep(NA,N)

for (i in 1:N) {

x1[i] <- fisher.test(foo(n1))$p.value

x2[i] <- fisher.test(foo(n2))$p.value

}

plot( sort(x1), type='l', lwd=3, ylab='p-valeur')

lines( sort(x2), col='blue', lwd=3 )

abline(0,1/N,col='red',lwd=3,lty=3)

abline(h=c(0,.05),lty=3)

abline(v=c(0,N*.05),lty=3)

title(main="p-value of a Fisher test, H0 false")

legend(par('usr')[1],par('usr')[4],

c("n=10", "n=100"),

col=c(par('fg'), 'blue'),

lwd=3,

lty=1)

foo <- function (N) {

population1 <- c(rep('A',2), rep('B',8), rep('A',18), rep('B',72))

population1 <- factor(population1, levels=c('A','B'))

population2 <- c(rep('C',2), rep('C',8), rep('D',18), rep('D',72))

population2 <- factor(population2, levels=c('C','D'))

o <- sample(1:100, N, replace=T)

table( population2[o], population1[o] )

}

n1 <- 10

n2 <- 100

N <- 1000

x1 <- rep(NA,N)

x2 <- rep(NA,N)

for (i in 1:N) {

x1[i] <- fisher.test(foo(n1))$p.value

x2[i] <- fisher.test(foo(n2))$p.value

}

plot( sort(x1), type='l', lwd=3, ylab='p-valeur', ylim=c(0,1))

lines( sort(x2), col='blue', lwd=3 )

abline(0,1/N,col='red',lwd=3,lty=3)

abline(h=c(0,.05),lty=3)

abline(v=c(0,N*.05),lty=3)

title(main="p-valueof a Fisher test, H0 true")

legend(par('usr')[2], .2, xjust=1, yjust=0,

c("n=10", "n=100"),

col=c(par('fg'), 'blue'),

lwd=3,

lty=1)

It is a nom-parametric test on the median of a random variable -- with no assumption on it.

The idea is simple: we count the number of values that are above the proposed median -- we know that of ot is the actual median, this number follows a binomial distribution, because each value has exactly one chance out of two to be above it.

I have not found a function to perform this test, so I wrote my own.

sign.test <- function (x, mu=0) { # does not handle NA

n <- length(x)

y <- sum(x<mu) # should warn about ties!

p.value <- min(c( pbinom(y,n,.5), pbinom(y,n,.5,lower.tail=F) ))*2

p.value

}To check that it works, let us simply remark that the distribution of the p-values is approximately uniform, as it should be.

sign.test <- function (x, mu=0) {

n <- length(x)

y <- sum(x<mu) # should warn about ties!

p.value <- min(c( pbinom(y,n,.5), pbinom(y,n,.5,lower.tail=F) ))*2

p.value

}

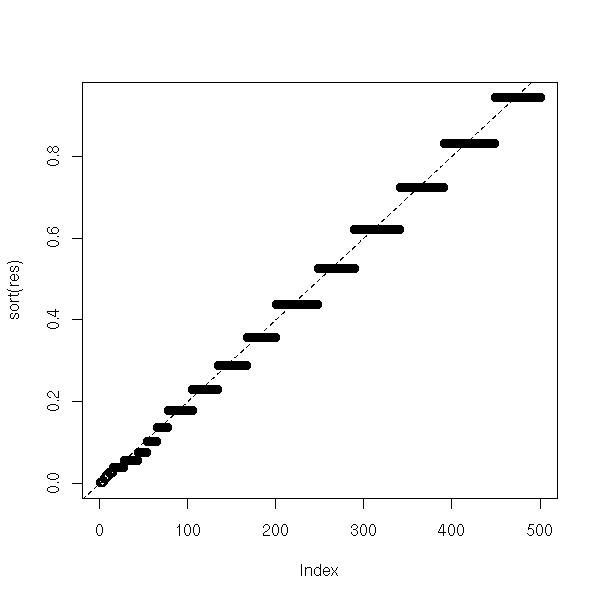

N <- 500

n <- 200

res <- rep(NA,N)

for (i in 1:N) {

res[i] <- sign.test(rlnorm(n),mu=1)

}

plot(sort(res))

abline(0,1/N,lty=2)

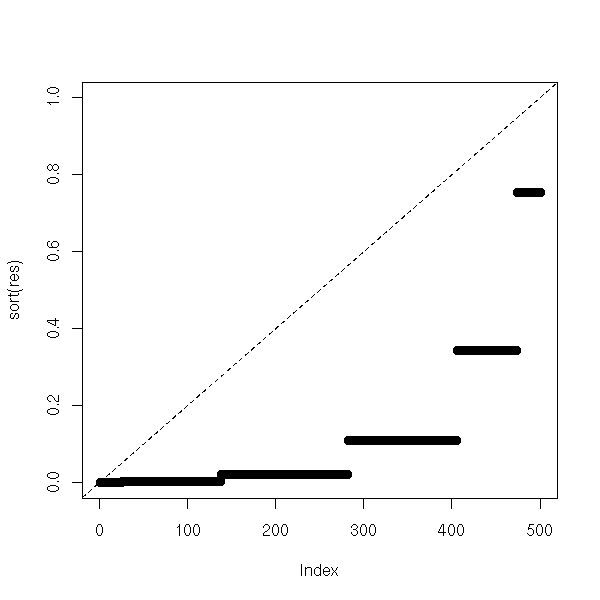

If the proposed median is wront, the p-values will be much lower.

N <- 500

n <- 10

res <- rep(NA,N)

for (i in 1:N) {

res[i] <- sign.test(rlnorm(n),mu=2)

}

plot(sort(res), ylim=c(0,1))

abline(0,1/N,lty=2)

We can now complete our function to have confidence intervals.

sign.test <- function (x, mu=0, alpha=0.05) {

n <- length(x)

y <- sum(x<mu) # should warn about ties!

p.value <- min(c( pbinom(y,n,.5), pbinom(y,n,.5,lower.tail=F) ))*2

x <- sort(x)

q1 <- qbinom(alpha/2,n,.5,lower.tail=T)

q2 <- qbinom(alpha/2,n,.5,lower.tail=F)

ci <- c(x[q1], x[q2])

new.alpha = pbinom(q1,n,.5) + (1-pbinom(q2,n,.5))

list(p.value=p.value, ci=ci, alpha=new.alpha)

}We can check that the confidence intervals actually have the advertised risk (as the binomial distribution is a discrete distribution, this risk will not be exactly 0.05, that is why the function returns the theoretical value).

test.sign.test <- function (n=100, N=500) {

N <- 500

res <- matrix(NA, nr=N, nc=3)

n <- 100

for (i in 1:N) {

r <- sign.test(rlnorm(n))

ci <- r$ci

res[i,] <- c( ci[1]<1 & 1<ci[2], n, r$alpha )

}

c(

1-sum(res[,1], na.rm=T)/sum(!is.na(res[,1])),

res[1,3]

)

}

N <- 10

res <- matrix(NA, nc=3, nr=N, dimnames=list(NULL,

c("Empirical Value", "Theoretical Value", "n")) )

for (i in 1:N) {

n <- sample(1:200, 1)

res[i,] <- round(c( test.sign.test(n), n ), digits=2)

}

resThis yields:

> res

Empirical Value Theoretical Value n

[1,] 0.06 0.05 96

[2,] 0.04 0.05 138

[3,] 0.05 0.05 6

[4,] 0.06 0.05 135

[5,] 0.03 0.05 138

[6,] 0.04 0.05 83

[7,] 0.03 0.05 150

[8,] 0.05 0.05 91

[9,] 0.05 0.05 144

[10,] 0.04 0.05 177

TODO: read and correct (if needed) this part.

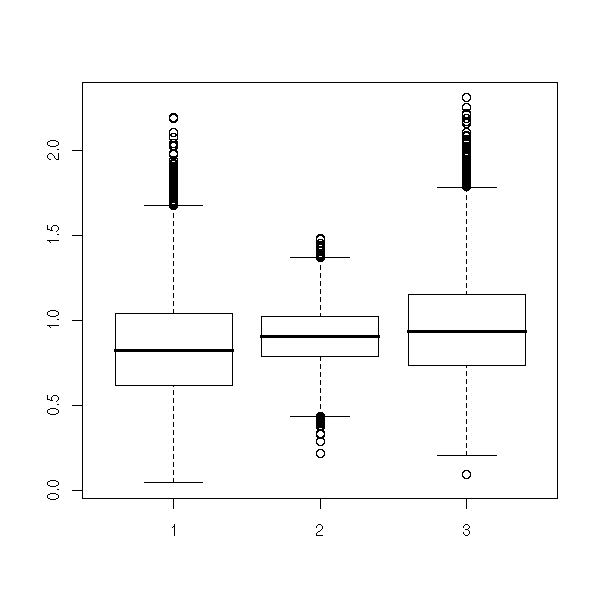

It is a non-parametric test: we do not know (or assume) anything on the distribution of the variables, in particular, we think it is not gaussian (to check ot, look at a quantile-quantile plot or perform a Shapiro--Wilk test) -- otherwise, we would use Student's T test, that is more powerful.

However, there IS an assumption: the variable is symetric (if it is not, consider the sign test). For this reason, we can speak of mean or of median -- for the whole distribution it is the same (but for a sample, that may be asymetric, it is different).

TODO: the following descrition is not that of the Wilcoxon test I know, that assumes the variable is symetric, that considers the variables Xij=(Xi+Xj)/2 and counts the number of those variables that are above the proposed mean.

The recipe is as follows. Take two samples, concatenate them, sort them. Then, look of the two samples are "well-shuffled" or if the elements from one sample are rather at the begining while those of the others are rather at the end.

The null hypothesis is H0: "P(X1_i > X2_i) = 0.5".

We first sort each sample (separately) and compute

U1 = number of pairs (i,j) such that X1_i>X2_j

+ (1/2) * number of pairs (i,j) so that X1_i=X2_j

U2 = number of pairs (i,j) so that X1_j>X2_i

+ (1/2) * number of pairs (i,j) so that X1_i=X2_j

U = min(U1,U2)Here is another method of computing this:

Contatenate the two samples, rank them R1 = sum of the ranks on the first sample R2 = sum of the ranks on the second sample U2 = n1*n2 + n1(n1+1)/2 - R1 U1 = n1*n2 + n2(n2+1)/2 - R2 U = min(U1, U2)

Here is example from the man page (here, we imagine that prior data suggests that x>y, so we choose an asymetric alternative hypothesis).

help.search("wilcoxon")

?wilcox.test

> x <- c(1.83, 0.50, 1.62, 2.48, 1.68, 1.88, 1.55, 3.06, 1.30)

> y <- c(0.878, 0.647, 0.598, 2.05, 1.06, 1.29, 1.06, 3.14, 1.29)

> wilcox.test(x, y, paired = TRUE, alternative = "greater", conf.level=.95, conf.int=T)

Wilcoxon signed rank test

data: x and y

V = 40, p-value = 0.01953

alternative hypothesis: true mu is greater than 0

95 percent confidence interval:

0.175 Inf

sample estimates:

(pseudo)median

0.46Let us check on an example that the test remains valid for non-gaussian distributions.

N <- 1000

n <- 4

v <- vector()

w <- vector()

for (i in 1:N) {

x <- runif(n, min=-10, max=-9) + runif(n, min=9, max=10)

v <- append(v, wilcox.test(x)$p.value)

w <- append(w, t.test(x)$p.value)

}

sum(v>.05)/N

sum(w>.05)/NWe get 1 and 0.93: we make fewer mistakes with Wilcoxons's test, but its power is lower, i.e., we miss many opportunities of rejecting the null hypothesis:

# Probability of rejecting H0, when H0 is false (power)

N <- 1000

n <- 5

v <- vector()

w <- vector()

for (i in 1:N) {

x <- runif(n, min=0, max=1)

v <- append(v, wilcox.test(x)$p.value)

w <- append(w, t.test(x)$p.value)

}

sum(v<.05)/N

sum(w<.05)/NWe get 0 (the power of the test is zero: it never rejects the null hypothesis -- if our sample is very small and we do not know anything about the distribution, we cannot say much) against 0.84.

Let us consider a more ungaussian distribution.

# Probability of rejecting H0, when H0 is false (power)

N <- 1000

n <- 5

v <- vector()

w <- vector()

for (i in 1:N) {

x <- runif(n, min=-10, max=-9) + runif(n, min=9, max=10)

v <- append(v, wilcox.test(x)$p.value)

w <- append(w, t.test(x)$p.value)

}

sum(v<.05)/N

sum(w<.05)/NWe get 0 and 0.05. For n=10, we would get 0.05 in both cases.

Let us now check the confidence interval.

N <- 1000

n <- 3

v <- vector()

w <- vector()

for (i in 1:N) {

x <- runif(n, min=-10, max=-9) + runif(n, min=9, max=10)

r <- wilcox.test(x, conf.int=T)$conf.int

v <- append(v, r[1]<0 & r[2]>0)

r <- t.test(x)$conf.int

w <- append(w, r[1]<0 & r[2]>0)

}

sum(v)/N

sum(w)/NWe get 0.75 and 0.93.

TODO: I do not understand. As the U test is non-parametric, to should give larger confidence intervals and make fewer mistakes. The confidence interval for the Wilcoxon is three times as small as that of Student's T test. ??? However, these simulations show that Student's T test is robust. TODO: read what I just wrote and check the power

The following example tests if the variable is symetric around its mean.

> x <- rnorm(100)^2

> x <- x - mean(x)

> wilcox.test(x)

Wilcoxon signed rank test with continuity correction

data: x

V = 1723, p-value = 0.005855

alternative hypothesis: true mu is not equal to 0Idem for the median.

> x <- x - median(x)

> wilcox.test(x)

Wilcoxon signed rank test with continuity correction

data: x

V = 3360.5, p-value = 0.004092

alternative hypothesis: true mu is not equal to 0If there are more that two samples, you can use the Kruskal--Wallis test.

?kruskal.test

We want to see if two (quantitative random variables) follow the same distribution.

> ks.test( rnorm(100), 1+rnorm(100) )

Two-sample Kolmogorov-Smirnov test

data: rnorm(100) and 1 + rnorm(100)

D = 0.43, p-value = 1.866e-08

alternative hypothesis: two.sided

> ks.test( rnorm(100), rnorm(100) )

Two-sample Kolmogorov-Smirnov test

data: rnorm(100) and rnorm(100)

D = 0.11, p-value = 0.5806

alternative hypothesis: two.sided

> ks.test( rnorm(100), 2*rnorm(100) )

Two-sample Kolmogorov-Smirnov test

data: rnorm(100) and 2 * rnorm(100)

D = 0.19, p-value = 0.0541

alternative hypothesis: two.sidedTODO: state the idea (If my memory is good, we consider the sample cumulative distribution function of both variables and compute the area between them).

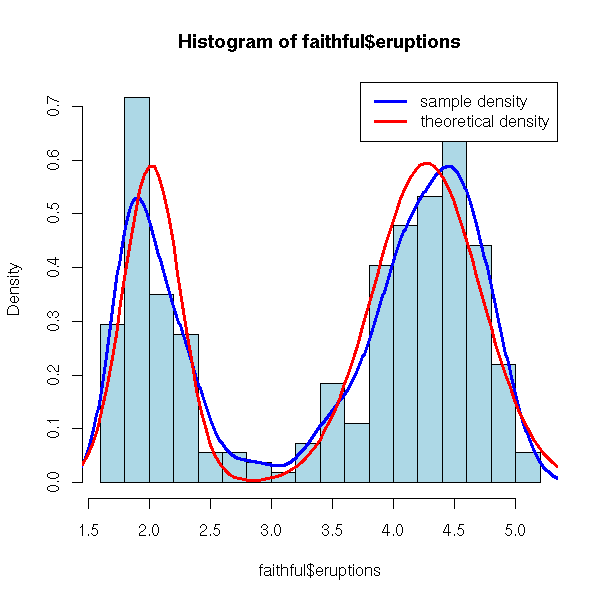

This test check if a random variable is gaussian. That might seem to be a special case of the Kolmogorov--Smirnov test, but actually we compare a random variable with the family of gaussian distributions, without specifying the mean and variance, while the K-S test want a completely specified distribution.

> shapiro.test(rnorm(10))$p.value [1] 0.09510165 > shapiro.test(rnorm(100))$p.value [1] 0.8575329 > shapiro.test(rnorm(1000))$p.value [1] 0.1853919 > shapiro.test(runif(10))$p.value [1] 0.5911485 > shapiro.test(runif(100))$p.value [1] 0.0002096377 > shapiro.test(runif(1000))$p.value [1] 2.385633e-17

It is a good idea to look at the quantile-quantile plot to see what happens, because the data might be non-gaussian in a benign way (either, as here, because the data are less dispersed that gaussian data, either because the deviation from a gaussian is statistically significant but practically negligible -- quite common if the sample is very large).

There is a wealth of other tests, we shall not detail them and merely refer to the manual.

?kruskal.test

?ansari.test

?mood.test

?fligner.test

library(help=ctest)

help.search('test')

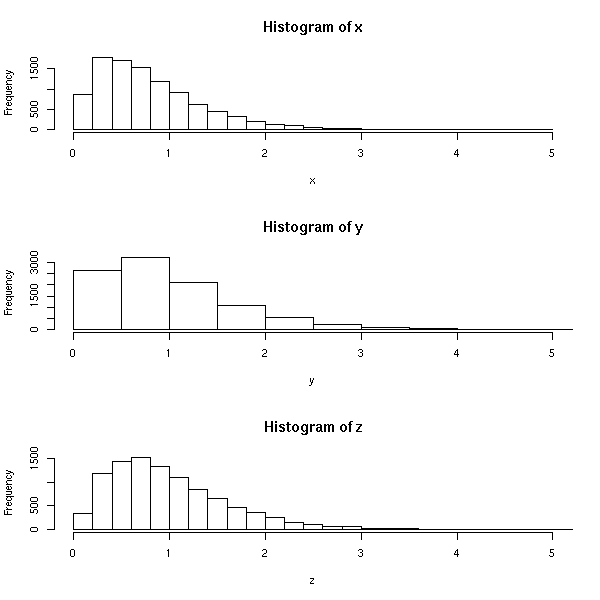

TODO: State the structure of this part -- and reorder it... 1. Generalities about estimators 2. Least Squares estimators. Example: NLS. 3. Maximum Likelihood Estimators (also: REML, Penalized Likelihood) 4. GMM Estimators

Quite often, the data we are studying (the "statistical series") is not the whole population but just a sample of it -- e.g., when you study birds on an island, you will not measure all of them, you will simply catch a few dozen specimens and work on them. You can easily compute the statistical parameters of this sample (mean, standard deviation, etc.), but they are only approximations of those parameters for the whole population: how can we measure the precision of those approximations?

Here are a few concrete examples of this situation.

An industrialist must choose a variety of maize. It will be used as (part of) an animal food and we want the variety that contains the most proteins: we want to know the average protein content of each variety -- not that of the sample at hand, but that of the variety as a whole. We might find that the sample of a variety has a larger protein content that the sample of another: but were to samples sufficiently large, was the difference sifficiently significant to extrapolate the results to the whole population?