Principal Component Analysis (PCA)

Distance-based methods

SOM (Self-Organizing Maps)

Simple Correspondance Analysis (CA)

Multiple Correspondance Analysis

Log-linear model (Poisson Regression)

Discriminant Analysis

Canonical analysis

Kernel methods

Neural networks

Dimension reduction: TODO: Rewrite/Remove this section

TODO: to sort

There are two kind of methods in multidimensional statistics: Factorial Methods, in which we project the data on a vector space, trying to lose as little information as possible; and Classification Methods, that try to cluster the points.

There are three main techniques among the Factorial Methods: Principal Component Analysis (PCA, with several quantitative variables), Correspondance Analysis (CA, two quanlitative variables, represented by a contingency table) and Multiple Correspondance Analysis (MCA, more that two variables, all quantitative).

The following table summarizes this:

Method Quantitative variables Qualitative variables ---------------------------------------------------------- PCA several none MDS several none CA none two MCA none several

There are other, non symetric, methods: one variable plays a special role, is given a different emphasis than the others (usually, we try to predict or explain one variable from the others).

Method Quantitative v. Qualitative v. Variable to predict ---------------------------------------------------------------------------------- regression several several quantitative anova none one quantitative logistic regression several several binary Poisson regression several several counting logistic regression several several ordered (qualitative) discriminant analysis several one CART several several binary ...

Here is a first presentation of PCA. We have a cloud of points in a high-dimensional space, too large for us to see something in it. The PCA will give us a subspace of reasonable dimension so that the projection onto this subspace retains "as much information as possible", i.e., so that the so that the projected cloud of points be as "dispersed" as possible. In other words, it reduces the dimension of the cloud of points, it helps choose a point of view to look at the cloud of points.

The algorithm is the following. We first translate the data so that its center of gravity be at the origin (always a good thing when you plan to use linear algebra). Then, we try to rotate it so that the standard deviation of the first coordinate be as large as possible (and then the second, third, etc.): this is equivalent to diagonalizing the variance-covariance matrix (it is a (positive) real symetric matrix, hence it is diagonalizable in an orthonormal basis), starting with the eigen vectors of largest eigen value. The first axis of this new coordinate system corresponds to an approximation of the cloud of points by a 1-dimensional space; if you want an approximation by a k-dimensional subspace, just take the first k eigen vectors.

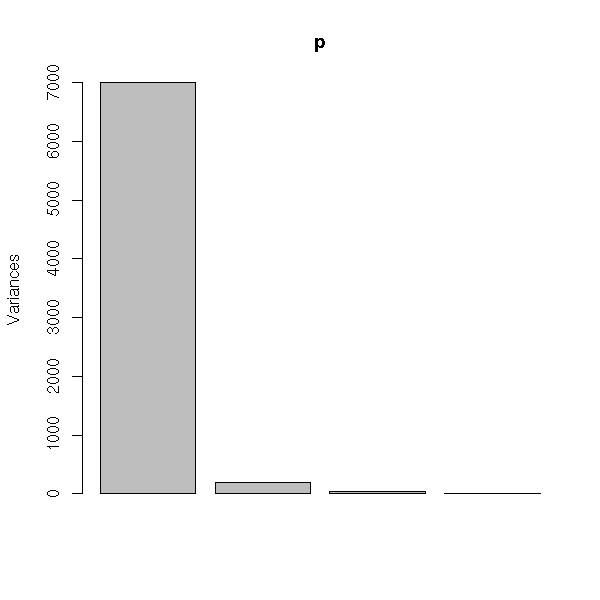

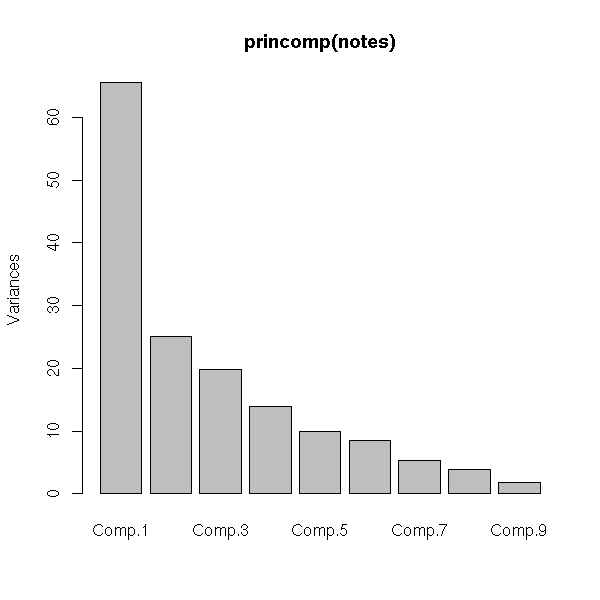

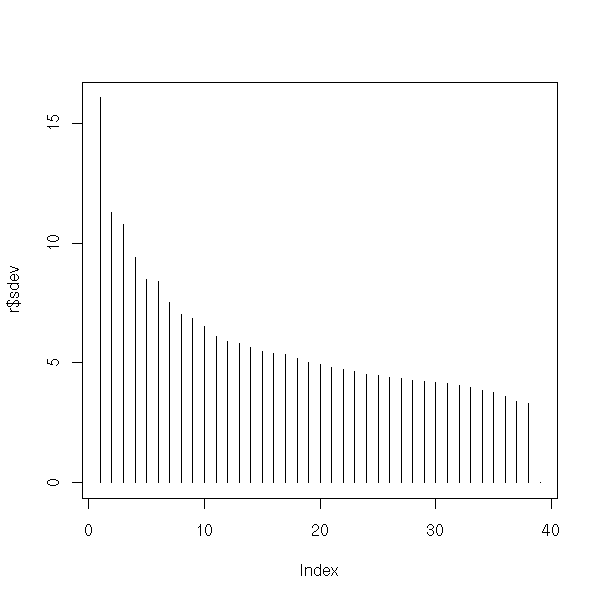

To choose the dimension of that subspace, we can look (graphically) at the eigen values and we stop when they start dropping (if they decrease slowly, we are in a bad situation: with our linear goggles, the data is inherently high-dimensional).

One may also see PCA as an analogue of the least squares method to find a line that goes as "near" the points as possible -- to simplify, let us assume there are just two dimensions. But while the least squares method is asymetric (the two variables play different roles: they are not interchangeable, we try to predict one from the others, we measure the distance parallel to one coordinate axis), the PCA is symetric (the distance is measured orthogonally to the line we are looking for).

Here is yet another way of presenting PCA. We model the data as X = Y + E, where X is the noisy data we have (in an n-dimensional space), Y is the real data (without the noise), in a smaller, k-dimensional space, and E is an error term (the noise). We want to find the k-dimensional space where Y lives.

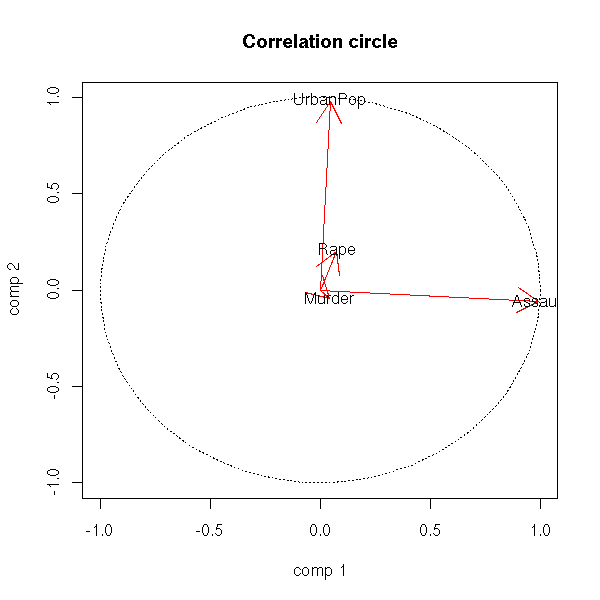

You can also see PCA as a game on a table of numbers (it is not as childish as it seems: it is actually a description of correspondance analysis). Thus, we can switch the role of rows and columns: on the one hand, we try to find similarities or differences between subjects, on the other, similarities or differences between variables. (There are two matrices to diagonalize, A*t(A) and t(A)*A, but but it suffices to diagonalize one: they have the same non-zero eigen values and the eigen vectors of one can easily be obtained from those of the other.) We usually ask that the variables be centered (and, often, normalized). We can plot both the variables on the same graph as the subjects: the variables will lie on the unit sphere; thei projection on the subspace spanned by the first two eigen vectors is the "correlation circle".

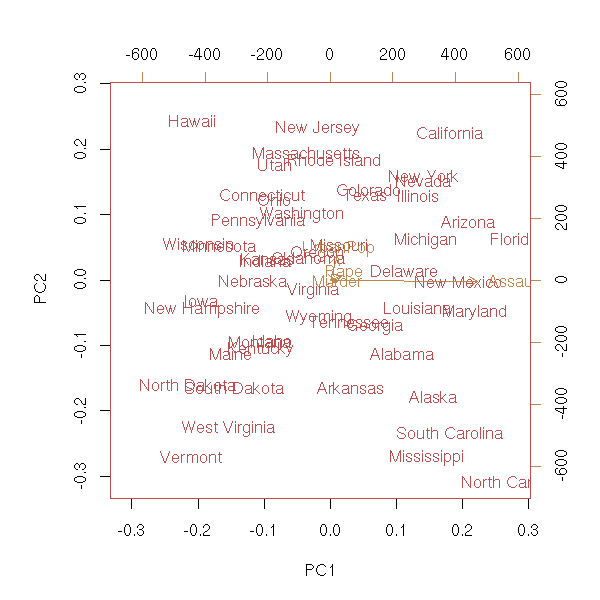

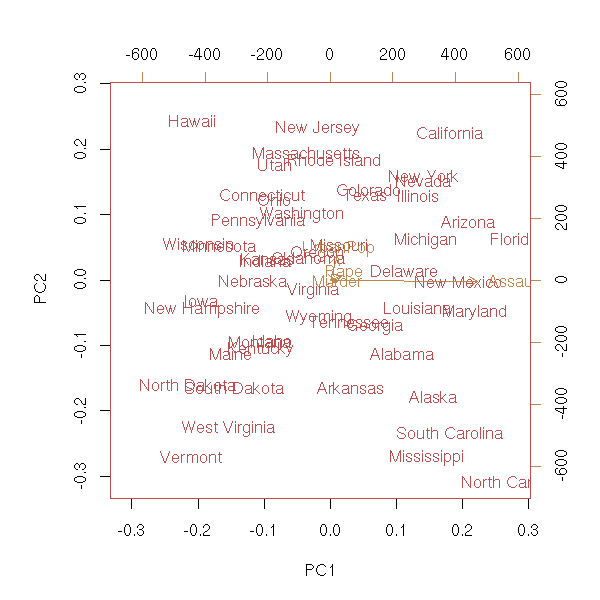

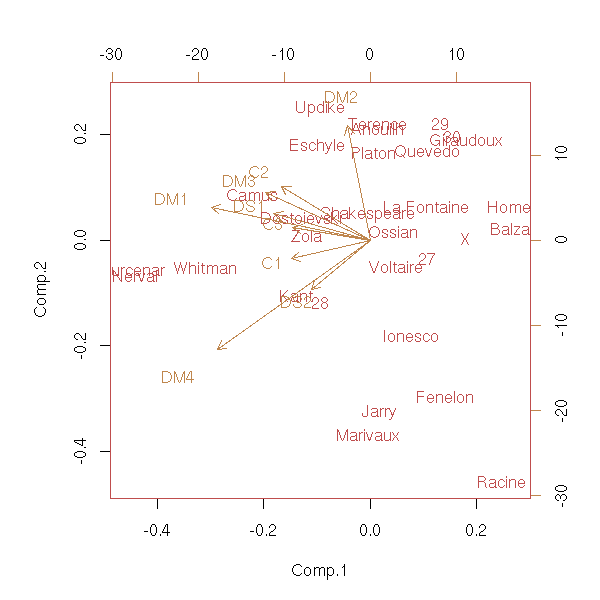

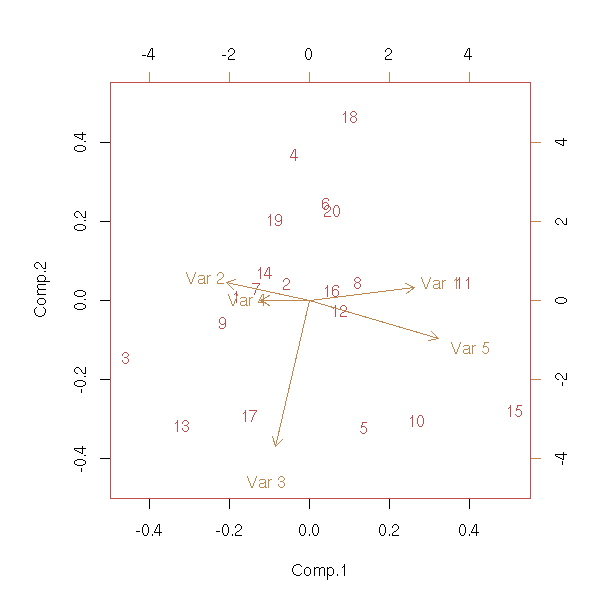

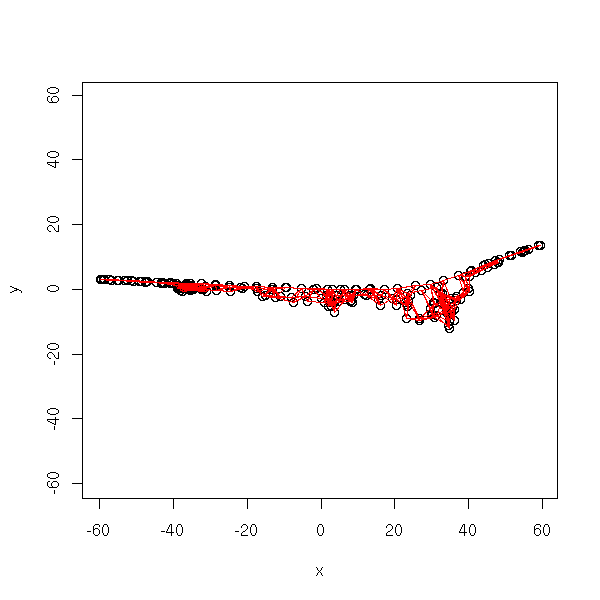

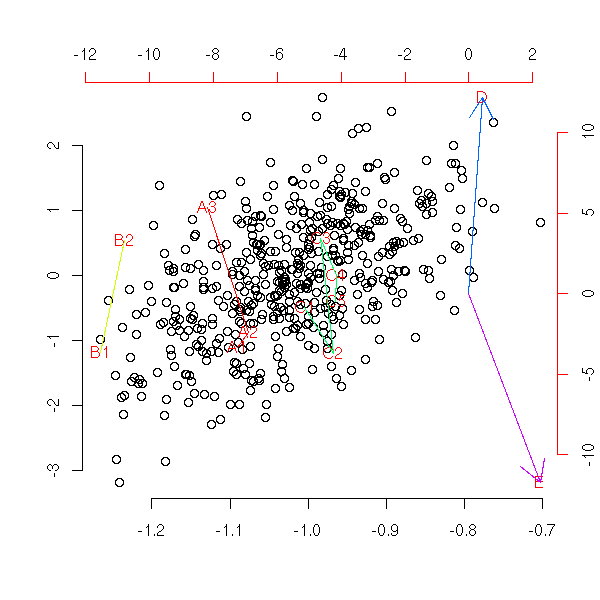

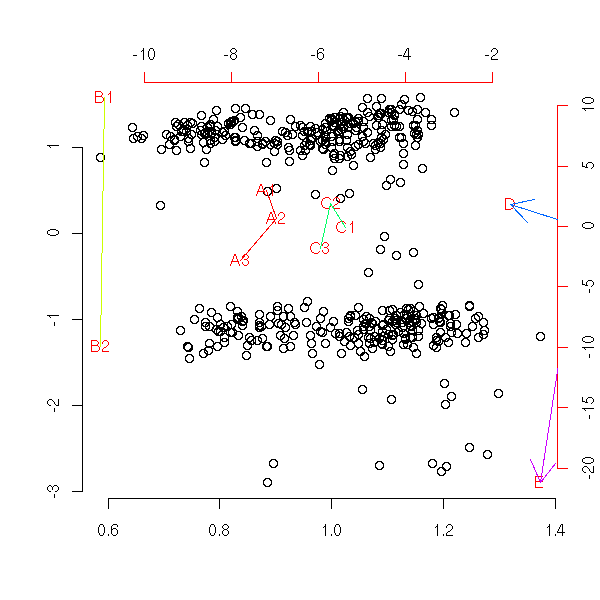

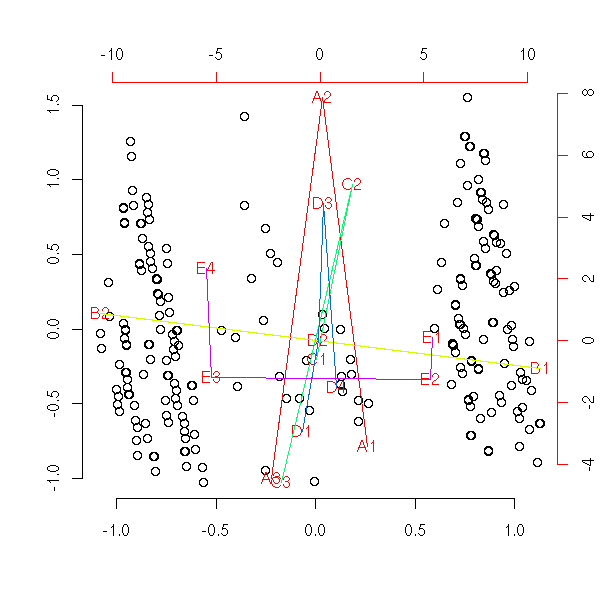

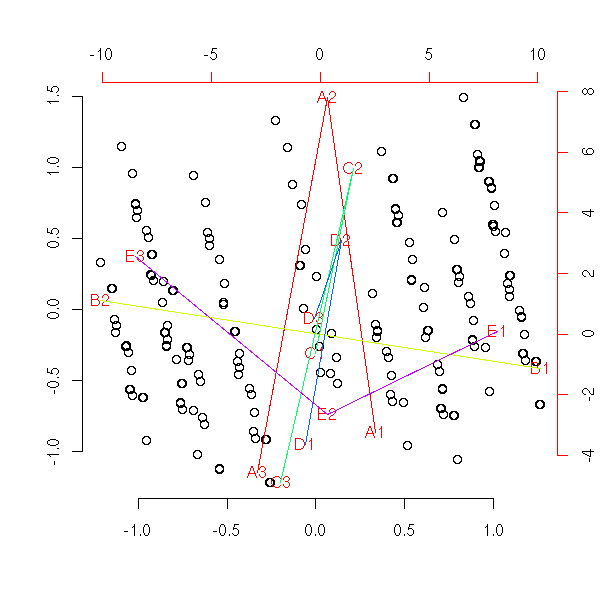

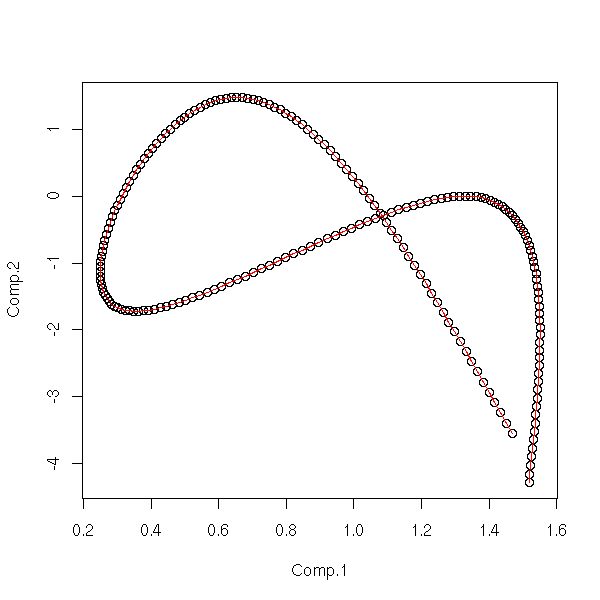

After performing the PCA, we can add new subjects on the plot (test (out-of-sample) subjects; fictitious sujbects, representative of certain classes of subjects): just use the "predict" function. Dually, we can add variables (for explanatory reasons), Of those variables are qualitative, we actually add an "average subject" for each value of this variable. This is often called a "biplot", because both subjects (in black) and variables (in red) appear on the plot.

data(USArrests) p <- prcomp(USArrests) biplot(p)

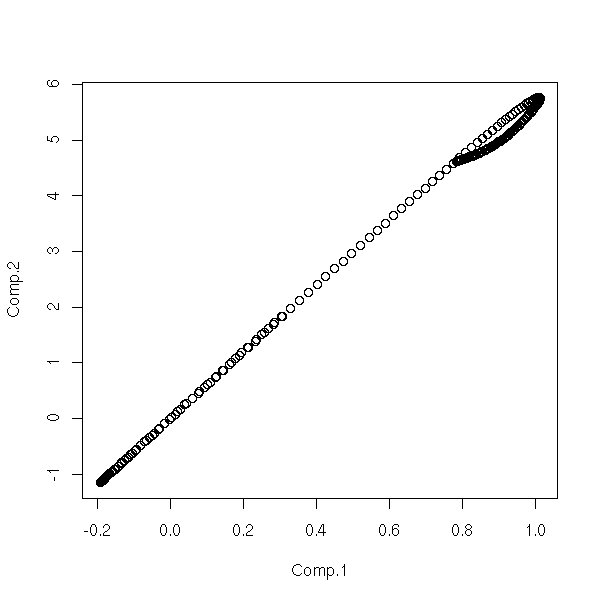

TODO: find a situation where you really want to add variables and/or subjects. n <- 100 # Number of subjects nn <- 10 # Number of out-of-sample subjects k <- 5 # Number of variable kk <- 3 # Number of new variables x <- matrix(rnorm(n*k), nr=n, nc=k) x <- t(rnorm(k) + t(x)) # Initial data y <- matrix(rnorm(n*kk), nr=n, nc=kk) # New variables z <- matrix(rnorm(nn*k), nr=nn, nc=k) # New subjects r <- prcomp(x) # I check that my change-of-base formulas were right all(abs( t(r$center + t(x %*% r$rotation)) - r$x )<1e-8) # Subjects coordinates t(r$center + t(x %*% r$rotation)) # Out-of-sample subject coordinates t(r$center + t(z %*% r$rotation)) # For each new variable, we add an "average" subject # (more precisely: x %*% t(yy[1,]) = orthogonal projection of # y[,1] on the subspace spanned by the columns of x). yy <- t(lm(y~x-1)$coef) t(r$center + t(yy %*% r$rotation))

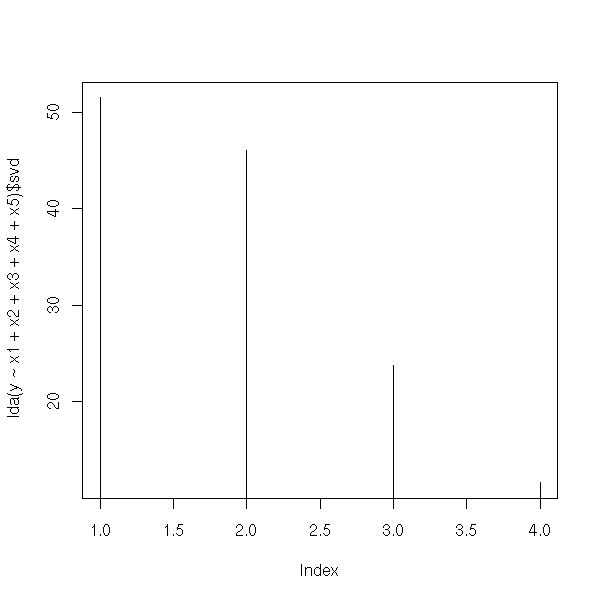

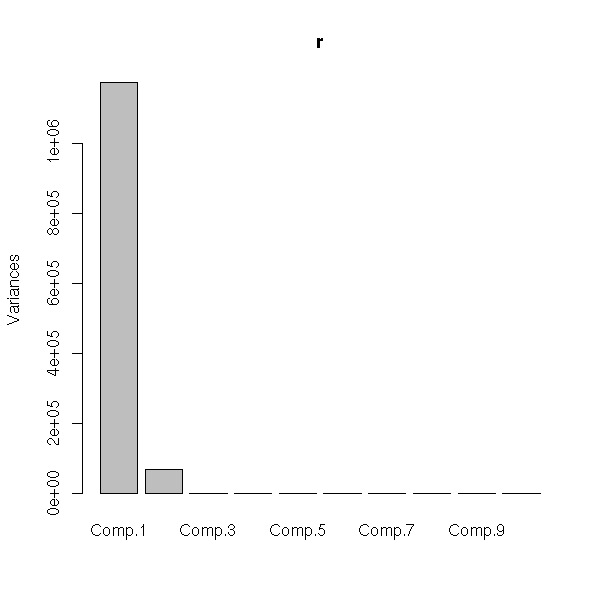

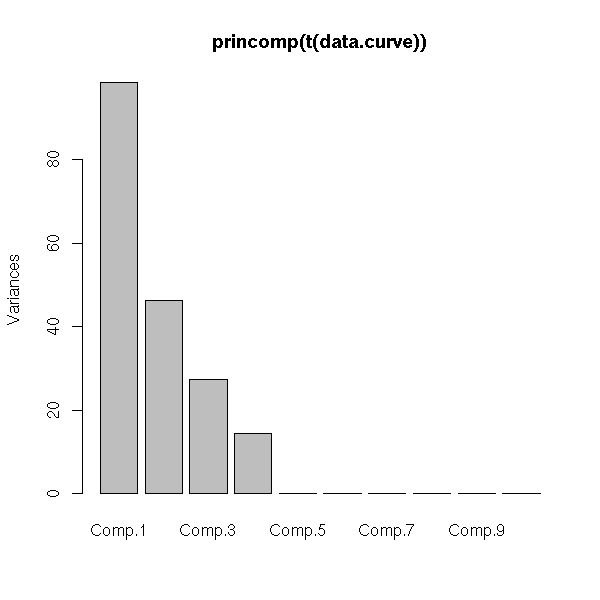

Here are the eigen values. They are plummeting: this means that the PCA is meaningful and that we can retain only the eigen vectors with the highest eigenvalues (here, the first: we could have done a 1-dimensional plot (a histogram)).

plot(p)

There is also a "prcomp" functions, that does the same computations, with a few more limitations (namely, you should have more observations that variables): do not use it.

The vocabulary used with principal components analysis may surprise you: people speak of "loadings" where you would expect "rotation matrix" and "scores" where you think "new coordinates".

In the preceeding plot, the old basis vectors are in red. There is the correlation circle -- those vectors lie on the unit sphere, which we project on the first two eigen vectors (it should not be called a circle but a disc -- the projection of a sphere is a disc, not a circle).

a <- seq(0,2*pi,length=100)

plot( cos(a), sin(a),

type = 'l', lty = 3,

xlab = 'comp 1', ylab = 'comp 2',

main = "Correlation circle")

v <- t(p$rotation)[1:2,]

arrows(0,0, v[1,], v[2,], col='red')

text(v[1,], v[2,],colnames(v))

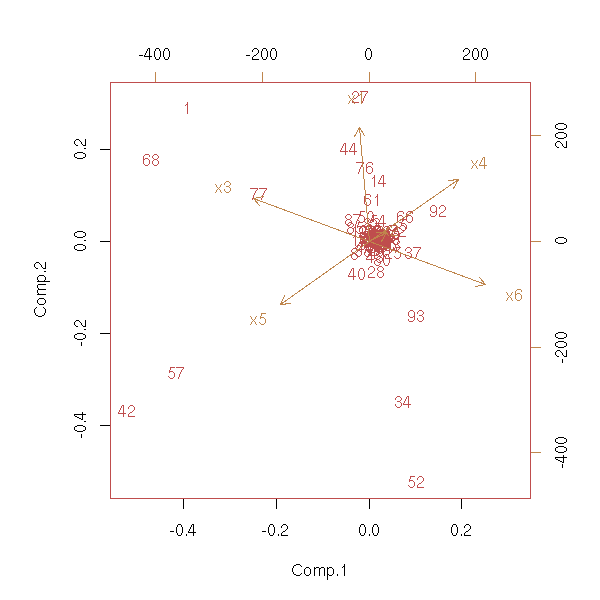

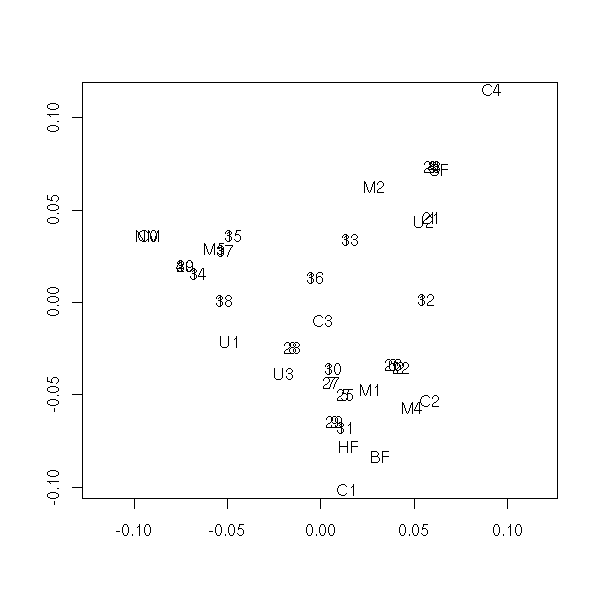

Here is a situation where PCA is not relevant (my pupils's marks, when I was a high school teacher).

# Copy-pasted with the help of the "deparse" command:

# cat( deparse(x), file='foobar')

notes <- matrix( c(15, NA, 7, 15, 11, 7, 7, 8, 11, 11, 13,

6, 14, 19, 9, 8, 6, NA, 7, 14, 11, 13, 16, 10, 18, 7, 7,

NA, 11, NA, NA, 6, 15, 5, 11, 7, 3, NA, 3, 1, 10, 1, 1,

18, 13, 2, 2, 0, 7, 9, 13, NA, 19, 0, 17, 8, 2, 9, 2, 5,

12, 0, 8, 12, 8, 4, 8, 0, 5, 5.5, 1, 12, 4, 13, 5, 11, 6,

0, 7, 8, 11, 9, 9, 9, 14, 8, 5, 8, 5, 5, 12, 6, 16.5,

13.5, 15, 3, 10.5, 1.5, 10.5, 9, 15, 7.5, 12, 13.5, 4.5,

13.5, 13.5, 6, 12, 7.5, 9, 6, 13.5, 13.5, 15, 13.5, 6, NA,

13.5, 4.5, 14, NA, 14, 14, 14, 8, 16, NA, 6, 6, 12, NA, 7,

15, 13, 17, 18, 5, 14, 17, 17, 13, NA, NA, 16, 14, 18, 13,

17, 17, 8, 4, 16, 16, 16, 10, 15, 8, 10, 13, 12, 14, 8,

19, 7, 7, 9, 8, 15, 16, 8, 7, 12, 5, 11, 17, 13, 13, 7,

12, 15, 8, 17, 16, 16, 6, 7, 11, 15, 15, 19, 12, 15, 16,

13, 19, 14, 4, 13, 13, 19, 11, 15, 7, 20, 16, 10, 12, 16,

14, 0, 0, 11, 9, 4, 10, 0, 0, 5, 11, 12, 7, 12, 17, NA, 6,

6, 9, 7, 0, 7, NA, 15, 3, 20, 11, 10, 13, 0, 0, 6, 1, 5,

6, 5, 4, 2, 0, 8, 9, NA, 0, 11, 11, 0, 7, 0, NA, NA, 7, 0,

NA, NA, 6, 9, 6, 4, 5, 4, 3 ), nrow=30)

notes <- data.frame(notes)

# These are not the real names

row.names(notes) <-

c("Anouilh", "Balzac", "Camus", "Dostoievski",

"Eschyle", "Fenelon", "Giraudoux", "Homer",

"Ionesco", "Jarry", "Kant", "La Fontaine", "Marivaux",

"Nerval", "Ossian", "Platon", "Quevedo", "Racine",

"Shakespeare", "Terence", "Updike", "Voltaire",

"Whitman", "X", "Yourcenar", "Zola", "27", "28", "29",

"30")

attr(notes, "names") <- c("C1", "DM1", "C2", "DS1", "DM2",

"C3", "DM3", "DM4", "DS2")

notes <- as.matrix(notes)

notes <- t(t(notes) - apply(notes, 2, mean, na.rm=T))

# Get rid of NAs

notes[ is.na(notes) ] <- 0

# plots

plot(princomp(notes))

biplot(princomp(notes))

Here, we gave the same weight to each mark and each subject; we could have give more weight to some marks, to reflect their nature (test or homework) or the weight of the subjects for the final exam (e.g., A-level): it is the same algorithm, only the scalar product on the space changes.

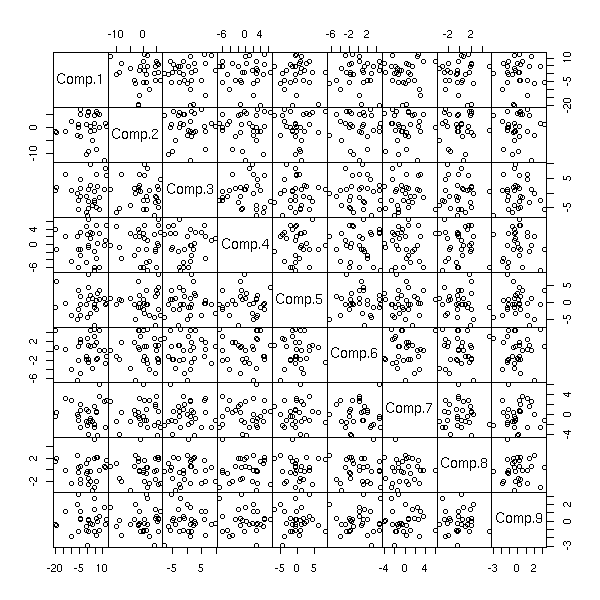

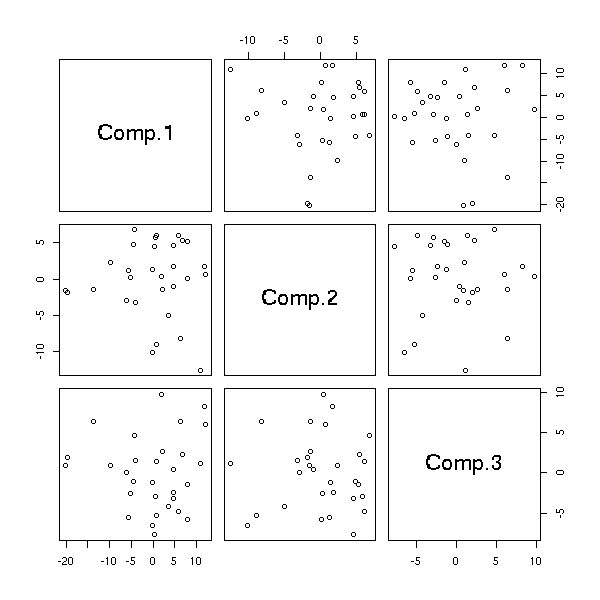

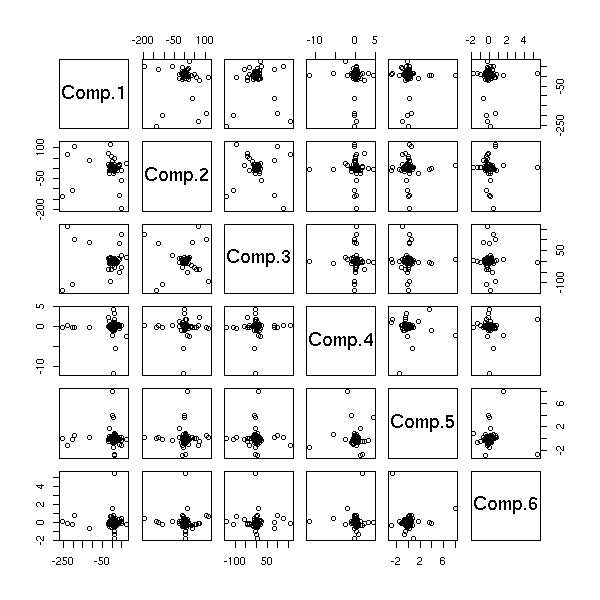

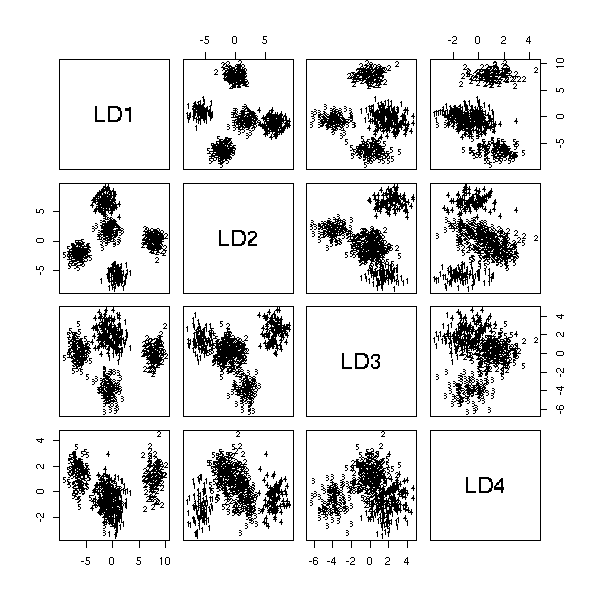

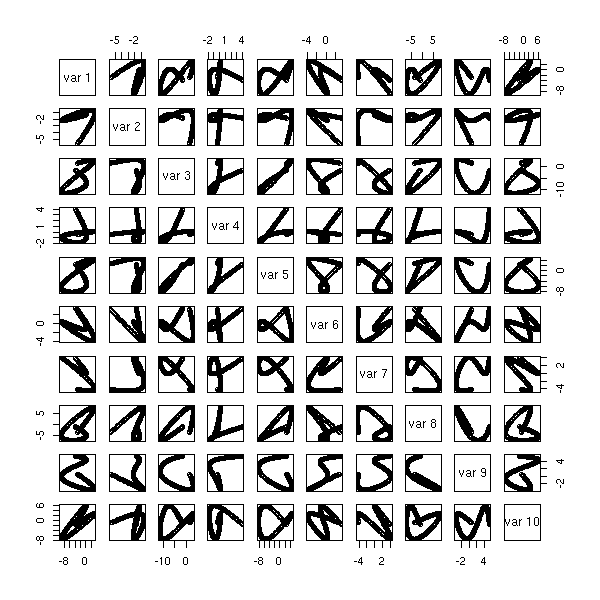

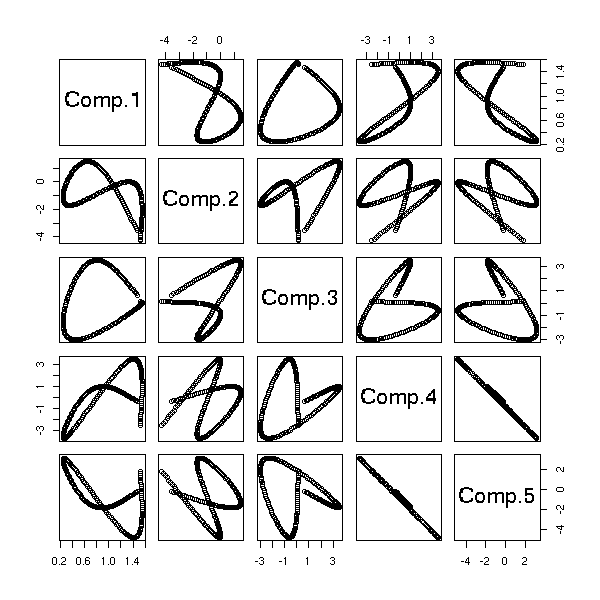

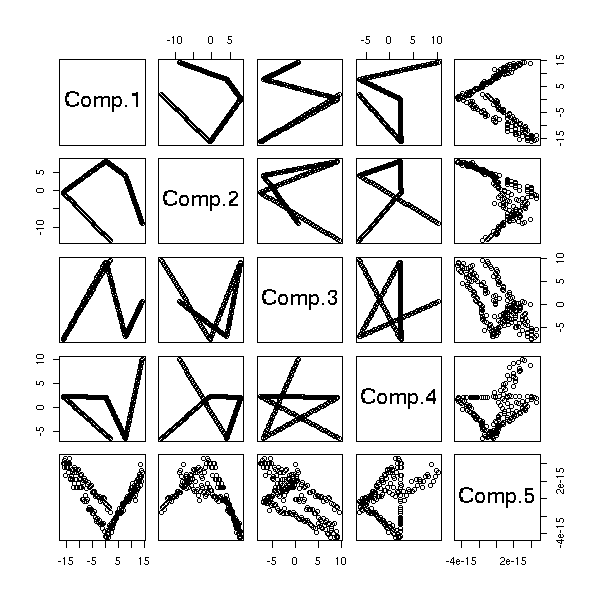

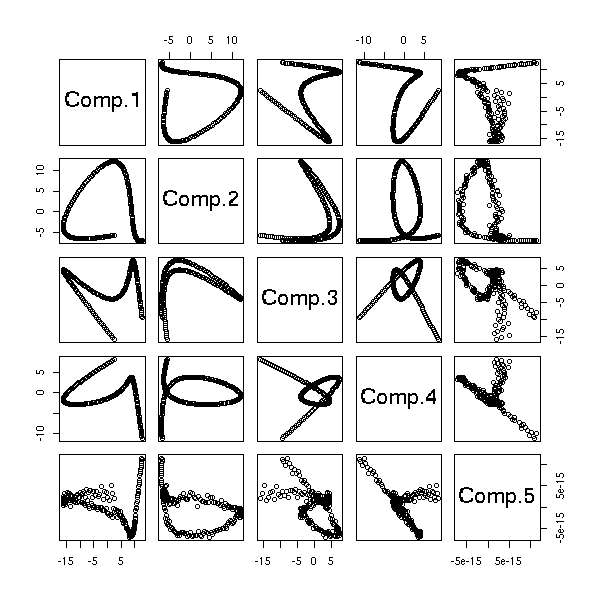

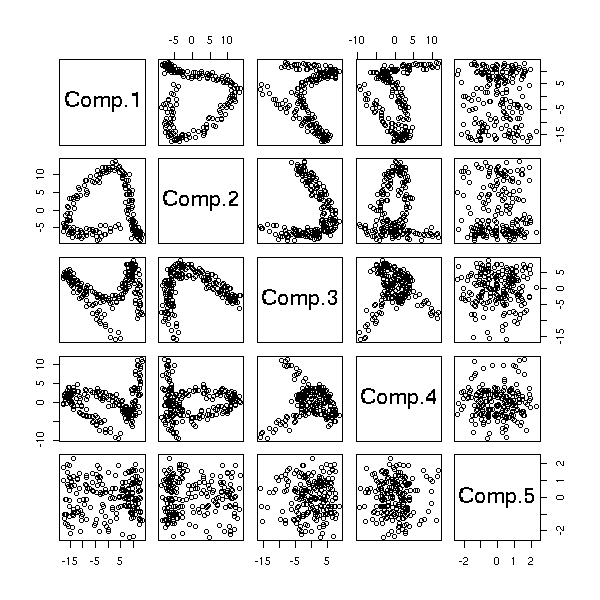

The "biplot" command anly gives the first two dimensions: we can sometimes see more with the "pairs" command.

pairs(princomp(notes)$scores, gap=0)

pairs(princomp(notes)$scores[,1:3])

p <- princomp(notes)

pairs( rbind(p$scores, p$loadings)[,1:3],

col=c(rep(1,p$n.obs),rep(2, length(p$center))),

pch=c(rep(1,p$n.obs),rep(3, length(p$center))),

)

We leave it to the reader to add red arrows (instead of red points) for the variables -- actually, the "pairs" function is not very configurable: the different panels take as arguments the coordinates of the points to draw, while one could want to plot, in the same panel, very different things -- but we do not even have the row and column numbers... The corresponding function in the "lattice" library seems scarcely more configurable.

library(lattice) splom(as.data.frame( princomp(notes)$scores[,1:3] ))

Here is another situation where one could want to use PCA: the classification of texts in a corpus. The rows of the table (the subjects) are the texts, the columns are the words (the vocabulary), the values are the number of occurrences of the words in the text. As the dimension of the space (the number of columns) is rather high (several thousands), it is not very easy to compute the covariance matrix, let alone diagonalize it. Yet, one can perform the PCA, in an approximate way, with neural networks.

http://www.loria.fr/projets/TALN/actes/TALN/articles/TALN02.pdf

Here is another means of tackling the same problem, without PCA but still with geometry in high-dimensional spaces:

http://www.perl.com/lpt/a/2003/02/19/engine.html

To understand what PCA really is, how it works, let us implement it ourselves. Here is one possible implementation.

my.acp <- function (x) {

n <- dim(x)[1]

p <- dim(x)[2]

# Translation, to use linear algebra

centre <- apply(x, 2, mean)

x <- x - matrix(centre, nr=n, nc=p, byrow=T)

# diagonalizations, base changes

e1 <- eigen( t(x) %*% x, symmetric=T )

e2 <- eigen( x %*% t(x), symmetric=T )

variables <- t(e2$vectors) %*% x

subjects <- t(e1$vectors) %*% t(x)

# The vectors we want are the columns of the

# above matrices. To draw them, with the "pairs"

# function, we have to transpose them.

variables <- t(variables)

subjects <- t(subjects)

eigen.values <- e1$values

# Plot

plot( subjects[,1:2],

xlim=c( min(c(subjects[,1],-subjects[,1])),

max(c(subjects[,1],-subjects[,1])) ),

ylim=c( min(c(subjects[,2],-subjects[,2])),

max(c(subjects[,2],-subjects[,2])) ),

xlab='', ylab='', frame.plot=F )

par(new=T)

plot( variables[,1:2], col='red',

xlim=c( min(c(variables[,1],-variables[,1])),

max(c(variables[,1],-variables[,1])) ),

ylim=c( min(c(variables[,2],-variables[,2])),

max(c(variables[,2],-variables[,2])) ),

axes=F, xlab='', ylab='', pch='.')

axis(3, col='red')

axis(4, col='red')

arrows(0,0,variables[,1],variables[,2],col='red')

# Return the data

invisible(list(data=x, centre=centre, subjects=subjects,

variables=variables, eigen.values=eigen.values))

}

n <- 20

p <- 5

x <- matrix( rnorm(p*n), nr=n, nc=p )

my.acp(x)

title(main="ACP by hand")

To check we did not make any error in implementing the algorithm, we can compare the result with that of the "printcomp" command.

biplot(princomp(x))

Exercise: Add the subject names; the variable names; plot the first three dimensions of the PCA (not just the first two), as with the "pairs" command; add new variables to the plot (if it is a quantitative variable, apply the same base change, if it is a qualitative variable, compute an average subject for each value of this variable and perform the base change).

Exercice: Modify the preceding code by replacing the "eigen" function by the "svd" command, that performs a Singular Value Decomposition.

TODO

There are several kind of PCA: centered or not, normalized (based on the correlations matrix) or not (based on the variance-covariance matrix).

prcomp(x, center=TRUE, scale.=FALSE) # default

Let us first consider the centered non-normalized principal component analysis, i.e., that based on the variance-covariance matrix.

TODO: This was written for the "princomp" function, not the "prcomp" one -- actually, the problems disappear.

d <- USArrests[,1:3] # Data dd <- t(t(d)-apply(d, 2, mean)) # Centered data m <- cov(d) # Covariances matrix e <- eigen(m) # Eigen values and eigen vectors p <- princomp( ~ Murder + Assault + UrbanPop, data = USArrests)

The change-of-base matrix is the same in both cases:

> e$vectors

[,1] [,2] [,3]

[1,] -0.04181042 -0.04791741 0.99797586

[2,] -0.99806069 -0.04410079 -0.04393145

[3,] -0.04611661 0.99787727 0.04598061

> unclass(p$loadings)

Comp.1 Comp.2 Comp.3

Murder -0.04181042 -0.04791741 0.99797586

Assault -0.99806069 -0.04410079 -0.04393145

UrbanPop -0.04611661 0.99787727 0.04598061and so are the coordinates:

> p$scores[1:3,]

Comp.1 Comp.2 Comp.3

Alabama -64.99204 -10.660459 2.188264

Alaska -91.34472 -21.676617 -2.651214

Arizona -123.68089 8.979374 -4.437864

> (dd %*% p$loadings) [1:3,]

Comp.1 Comp.2 Comp.3

Alabama -64.99204 -10.660459 2.188264

Alaska -91.34472 -21.676617 -2.651214

Arizona -123.68089 8.979374 -4.437864Let us now look at the normalized principal component analysis: we do not simply center each column of the matrix, we normalize them.

d <- USArrests[,1:3]

dd <- apply(d, 2, function (x) { (x-mean(x))/sd(x) })The variance-covariance matrix of this new matrix is the correlation matrix of the initial matrix.

> cov(dd)

Murder Assault UrbanPop

Murder 1.00000000 0.8018733 0.06957262

Assault 0.80187331 1.0000000 0.25887170

UrbanPop 0.06957262 0.2588717 1.00000000

> cor(d)

Murder Assault UrbanPop

Murder 1.00000000 0.8018733 0.06957262

Assault 0.80187331 1.0000000 0.25887170

UrbanPop 0.06957262 0.2588717 1.00000000Let us go on with the computations:

m <- cov(dd) e <- eigen(m) p <- princomp( ~ Murder + Assault + UrbanPop, data = USArrests, cor=T)

We got the right change-of-base matrix:

> e$vectors

[,1] [,2] [,3]

[1,] 0.6672955 0.30345520 0.6801703

[2,] 0.6970818 0.06713997 -0.7138411

[3,] 0.2622854 -0.95047734 0.1667309

> unclass(p$loadings)

Comp.1 Comp.2 Comp.3

Murder 0.6672955 -0.30345520 0.6801703

Assault 0.6970818 -0.06713997 -0.7138411

UrbanPop 0.2622854 0.95047734 0.1667309But the coordinates are not the same...

> p$scores[1:3,]

Comp.1 Comp.2 Comp.3

Alabama 1.2508055 -0.9341207 0.2015063

Alaska 0.8006592 -1.3941923 -0.6532667

Arizona 1.3542765 0.8368948 -0.8488785

> (dd %*% e$vectors) [1:3,]

[,1] [,2] [,3]

Alabama 1.2382343 0.9247323 0.1994810

Alaska 0.7926121 1.3801799 -0.6467010

Arizona 1.3406654 -0.8284836 -0.8403468However, the error is always the same (up to the sign, which is meaningless):

> p$scores[1:3,] / (dd %*% e$vectors) [1:3,]

Comp.1 Comp.2 Comp.3

Alabama 1.010153 -1.010153 1.010153

Alaska 1.010153 -1.010153 1.010153

Arizona 1.010153 -1.010153 1.010153The difference comes from the fact that there are two definitions of covariance, one in which you divide ny n, another in which you divide by n-1.

> dim(d) [1] 50 3 > sqrt(50/49) [1] 1.010153

TODO: this is not the right place.

Later, with LDA.

for i in `ls *.txt | cat_rand | head -20`

do

perl -n -e 'BEGIN {

foreach ("a".."z") { $a{$_}=0 }

};

y/A-Z/a-z/;

s/[^a-z]//g;

foreach (split("")) { $a{$_}++ }

END {

foreach("a".."z"){print "$a{$_} "}

}' <$i

echo E

done > ling.txt

# Then the same thing in a directory containing French texts

b <- read.table('ling.txt')

names(b) <- c(letters[1:26], 'language')

a <- b[,1:26]

a <- a/apply(a,1,sum)

biplot(princomp(a))

plot(hclust(dist(a)))

kmeans.plot <- function (data, n=2, iter.max=10) {

k <- kmeans(data,n,iter.max)

p <- princomp(data)

u <- p$loadings

# The observations

x <- (t(u) %*% t(data))[1:2,]

x <- t(x)

# The centers

plot(x, col=k$cluster, pch=3, lwd=3)

c <- (t(u) %*% t(k$center))[1:2,]

c <- t(c)

points(c, col = 1:n, pch=7, lwd=3)

# A segment joining each observation to its group center

for (i in 1:n) {

for (j in (1:length(data[,1]))[k$cluster==i]) {

segments( x[j,1], x[j,2], c[i,1], c[i,2], col=i )

}

}

text( x[,1], x[,2], attr(x, "dimnames")[[1]] )

}

kmeans.plot(a,2)

# plot(lda(a,b[,27])) # Bug? # plot(lda(as.matrix(a),b[,27])) # Newer bug?

Actually, computers do not perform Principal Component Analysis as we have just seen, by computing the variance-covariance matrix and diagonalizing it.

TODO

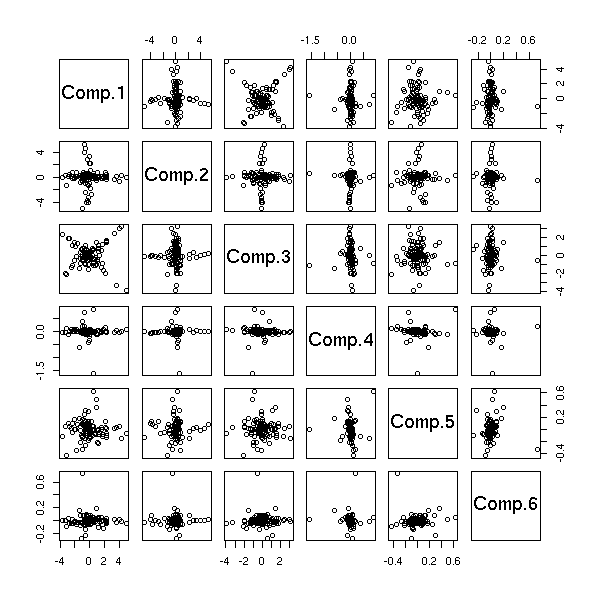

Principal component analysis assumes that the data is gaussian. If it is not, we can replace the values by their rank. But then, the variables follow a uniform distribution; We can transform those uniform data to get a gaussian distribution.

n <- 100

v <- .1

a <- rcauchy(n)

b <- rcauchy(n)

c <- rcauchy(n)

d <- data.frame( x1 = a+b+c+v*rcauchy(n),

x2 = a+b-c+v*rcauchy(n),

x3 = a-b+c+v*rcauchy(n),

x4 = -a+b+c+v*rcauchy(n),

x5 = a-b-c+v*rcauchy(n),

x6 = -a+b-c+v*rcauchy(n) )

biplot(princomp(d))

rank.and.normalize.vector <- function (x) {

x <- (rank(x)-.5)/length(x)

x <- qnorm(x)

}

rank.and.normalize <- function (x) {

if( is.vector(x) )

return( rank.and.normalize.vector(x) )

if( is.data.frame(x) ) {

d <- NULL

for (v in x) {

if( is.null(d) )

d <- data.frame( rank.and.normalize(v) )

else

d <- data.frame(d, rank.and.normalize(v))

}

names(d) <- names(x)

return(d)

}

stop("Data type not handled")

}

biplot(princomp(apply(d,2,rank.and.normalize)))

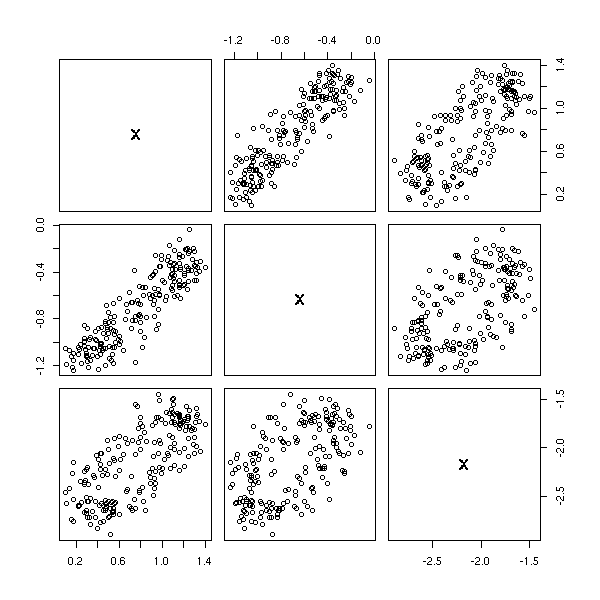

Let us check on the other components of the PCA.

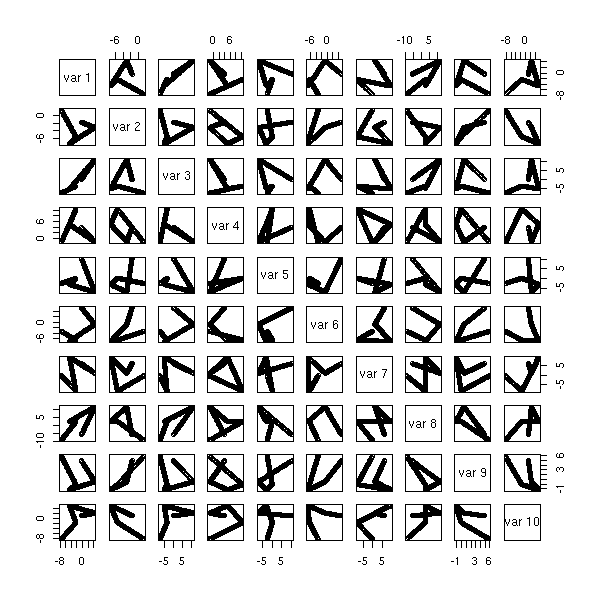

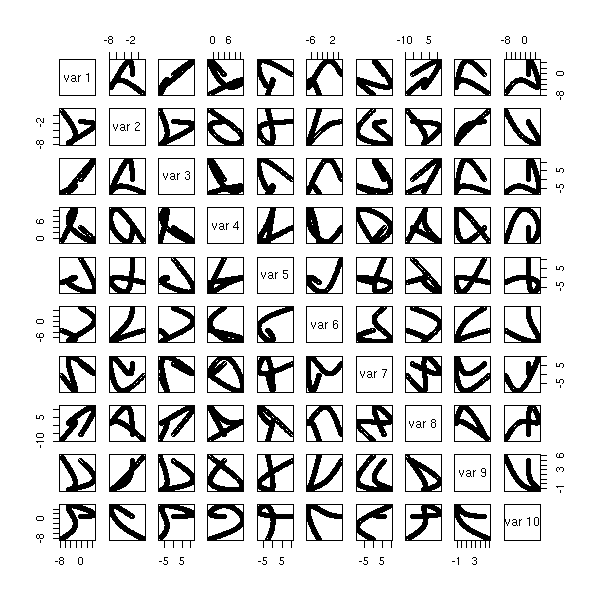

pairs( princomp(d)$scores )

pairs( princomp(apply(d,2,rank.and.normalize))$scores )

For non-linear problems, we can first embed our space in a larger one, with an application such as

(x,y) |--> (x^2, x*y, y^2).

In fact, as the PCA only involves scalar products, we do not really need to compute those new coordinates: it suffices to replace all the occurrences of the scalar product <x,y> by that of the new space (this function, that expresses the scalar product in the new space from the coordinates in the old space, is called a kernel -- and you can take any kernel).

TODO: an example

We shall reuse that idea later (to use a kernel to dive into a higher-dimensional space to linearize a non-linear problem) when we speak of SVM (Support Vector Machines).

http://www.kernel-machines.org/papers/talk-dagm99_4.ps.gz

TODO:

library(kernlab) ?kpca

Principal Component Analysis tries to find a plane (more generally, a subspace of reasonable dimension) in which (the orthogonal projection of) the data is as dispersed as possible, the dispersion being measured with the variance matrix.

Projection pursuit is a generalization of PCA in which one looks for a subspace which maximizes some "interestingness" criterion -- and everyone can define their own criterion. For instance, it could be a measure of the dispersion of the data (based on the variance matrix or on more robust dispersion estimators) or a measure of the non-gaussianity of the data (kurtosis, skewness, etc.).

Those measures of interestingness are called "indices".

The most common Projection Pursuits are PCA, ICA (Independant Component Analysis)m and robust PCA (replace the 1-dimensional variance you are trying to maximize by a robust equivalent).

http://www.r-project.org/useR-2006/Slides/Fritz.pdf library(pcaPP)

There is also a function called "ppr" (projection pursuit regression) but it seems to do something competely different: variable selection for linear regression, with several variables to predict.

TODO: Give an example

TODO

The grand tour is an animation, a continuous family of projections of the cloud of points onto a 2-dimensional space, obtained by interpolation between random projections: you are supposed to look at it until you see something (clusters, artefacts, alignments, etc.).

GGobi can display grand tours.

http://www.ggobi.org/

The guided tour is a variant of the grand tour, where one interpolates between local maxima of a projection pursuit function instead of random projections.

http://citeseer.ist.psu.edu/cook95grand.html

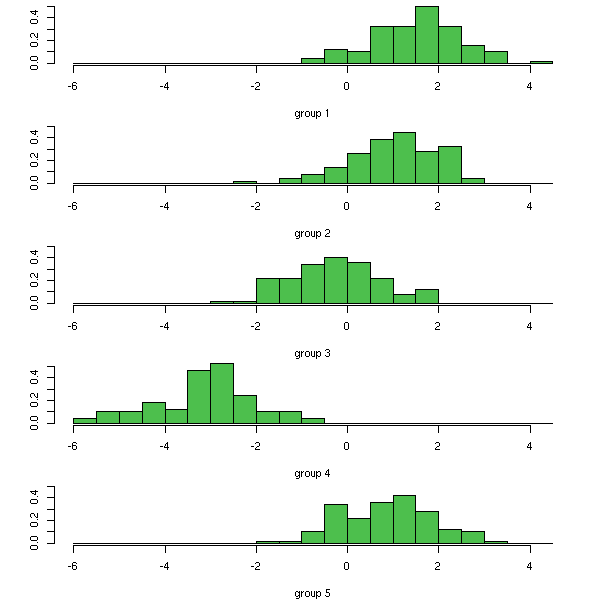

At first sight, it looks very similar to Principal component analysis -- but it is very different. The general idea is that the variables we are looking at are linear combinations of independant random variables; and we want to recover those random variables. The only assumption is that the random variables we are looking for are independant (well, actually, there is another assumption: those random variables have to be non-gaussian).

ICA has been mainly used in signal processing, the initial example being the cocktail party problem: you have two microphones (or two ears) and you are hearing two conversations at the same time; you want to separate them. Similarly, ICA has also been used to analyze EEG (electroencephalograms).

TODO: 2-dimensional applications, image compression, feature extraction.

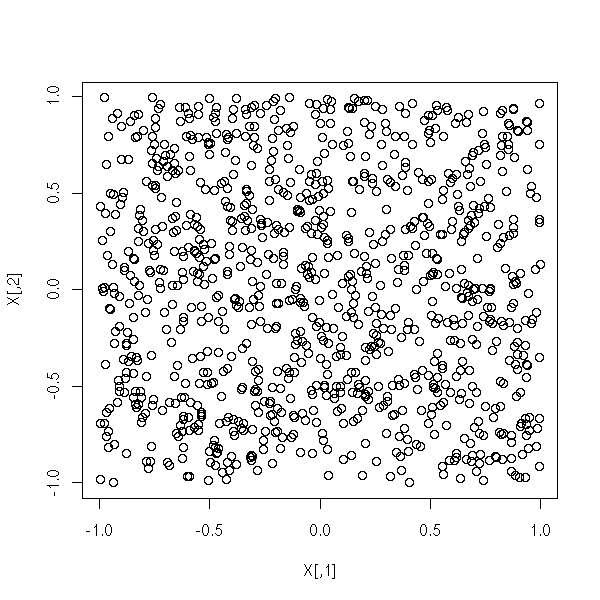

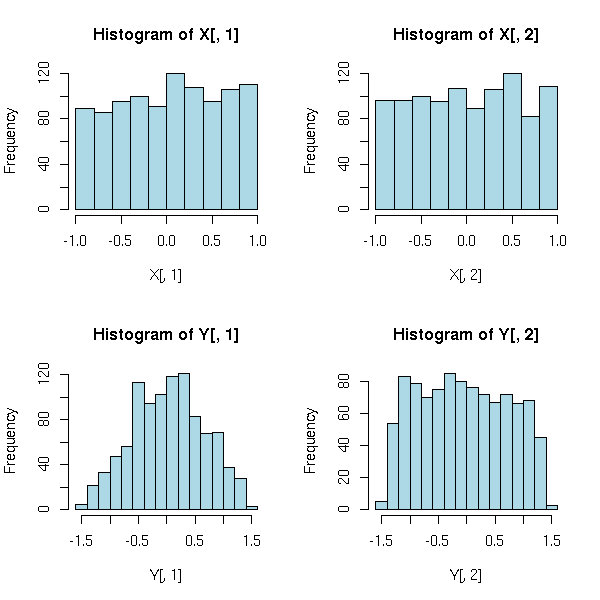

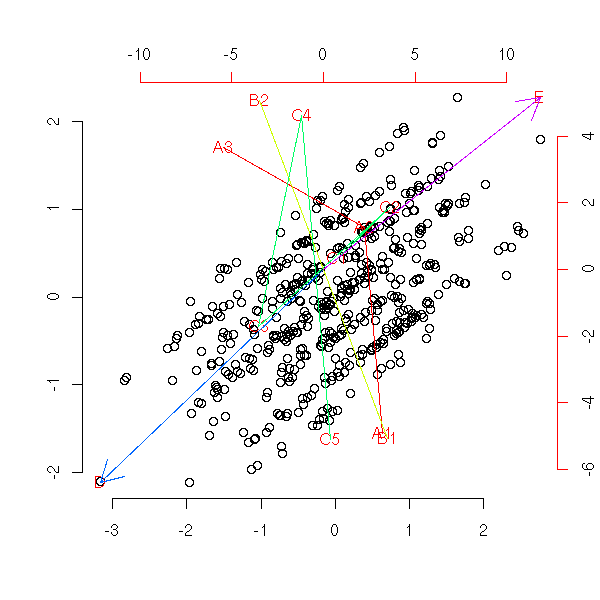

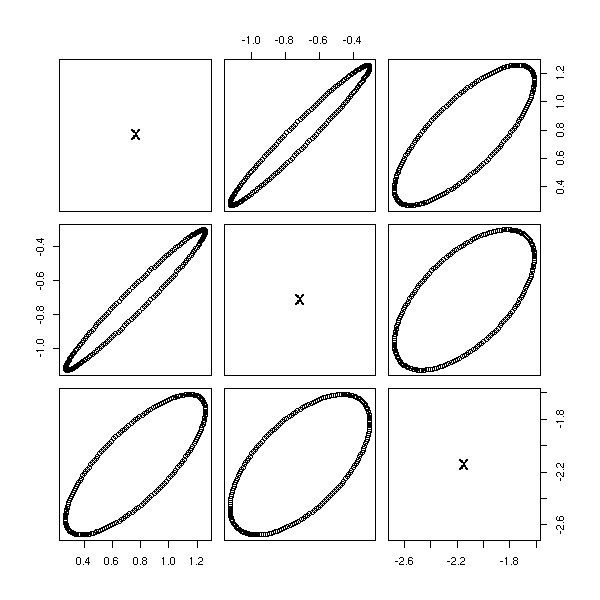

Let us see on an example how it differs with Principal Component Analysis: let us take two random variables X1 and X2, uniformly distributed

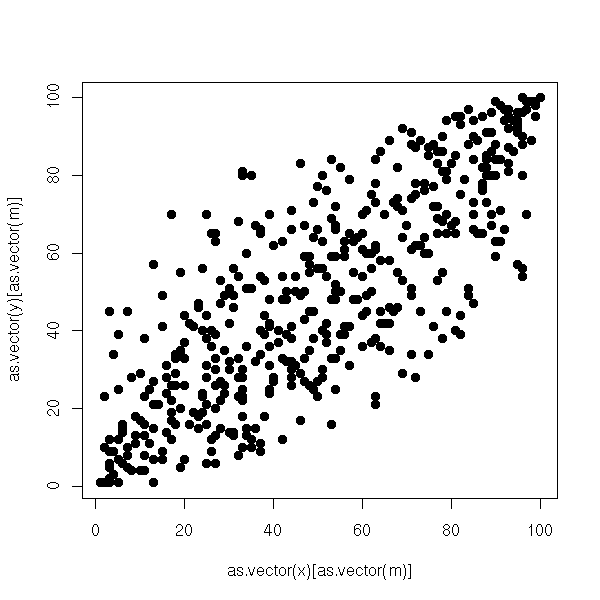

N <- 1000 X <- matrix(runif(2*N, -1, 1), nc=2) plot(X)

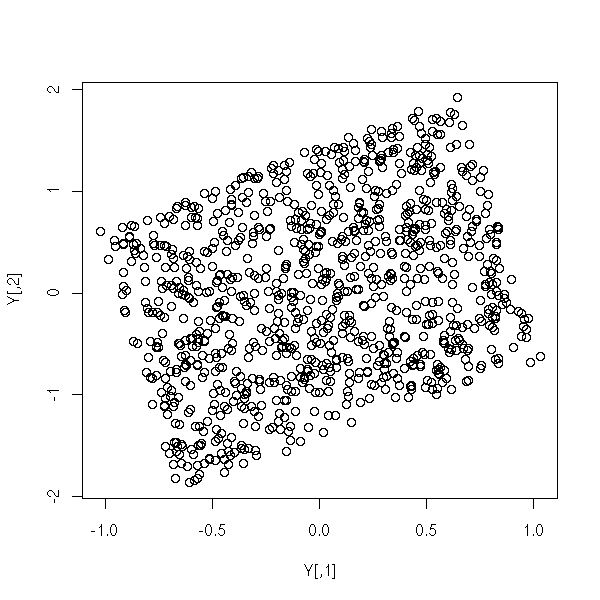

and transform them

M <- matrix(rnorm(4), nc=2) Y <- X %*% M plot(Y)

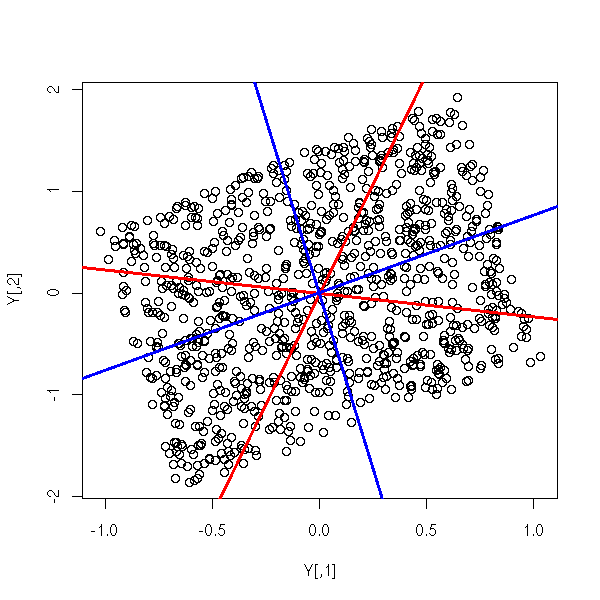

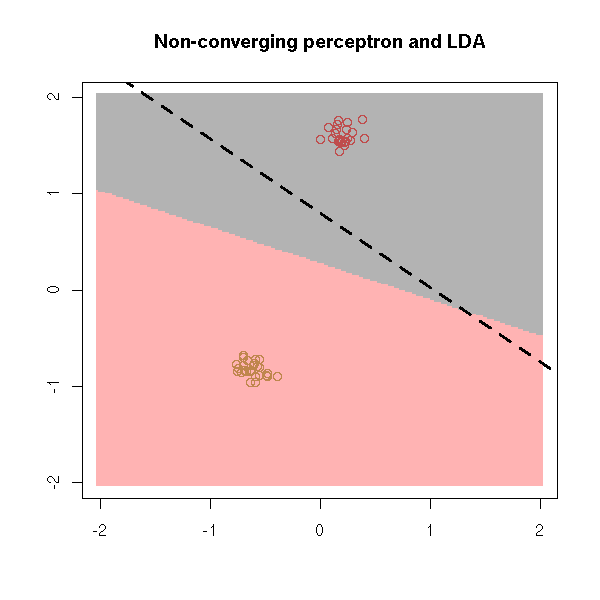

We get a parallelogram. PCA would yield something close to its largest diagonal (red, in the following plot); ICA would yield the image of the axes (blue), i.e., new axes parallel to the sides of the parallelogram.

plot(Y) p <- prcomp(Y)$rotation abline(0, p[2,1] / p[1,1], col="red", lwd=3) abline(0, -p[1,1] / p[2,1], col="red", lwd=3) abline(0, M[1,2]/M[1,1], col="blue", lwd=3) abline(0, M[2,2]/M[2,1], col="blue", lwd=3)

Other examples:

op <- par(mfrow=c(2,2), mar=c(1,1,1,1))

for (i in 1:4) {

N <- 1000

X <- matrix(runif(2*N, -1, 1), nc=2)

M <- matrix(rnorm(4), nc=2)

Y <- X %*% M

plot(Y, xlab="", ylab="")

p <- prcomp(Y)$rotation

abline(0, p[2,1] / p[1,1], col="red", lwd=3)

abline(0, -p[1,1] / p[2,1], col="red", lwd=3)

abline(0, M[1,2]/M[1,1], col="blue", lwd=3)

abline(0, M[2,2]/M[2,1], col="blue", lwd=3)

}

par(op)

The ICA yields the variables that were indeed used.

The main idea behind the algorithm is the fact that (from the central limit theorem) a linear combination of non-gaussian random variables is "more gaussian".

op <- par(mfrow=c(2,2)) hist(X[,1], col="light blue") hist(X[,2], col="light blue") hist(Y[,1], col="light blue") hist(Y[,2], col="light blue") par(op)

Therefore the algorithm goes as follows:

1. Normalize the data, so that they have a variance equal to 1 and that the be uncorrelated. 2. Find (with the usual numeric optimization algorithms) the linear transformation that maximizes the non-gaussianity.

To this end, one can use several measures of non-gaussianity: the kurtosis (i.e., the fourth moment), the entropy (integral of -f * log(f), where f is the pdf), mutual information, etc.

There are two implementations of this algorithm in R. The first one, "ica" in the "e1071" package, does not give the expected results.

library(e1071) r <- ica(Y,.1) plot(r$projection)

The second one, "mlica" in the "mlica" package, gives the expected result.

library(mlica)

ICA <- function (x,...) {

prPCA <- PriorNormPCA(x);

prNCP <- proposeNCP(prPCA,0.1);

mlica(prNCP,...)

}

set.seed(1) # It sometimes crashes...

N <- 1000

X <- matrix(runif(2*N, -1, 1), nc=2)

M <- matrix(rnorm(4), nc=2)

Y <- X %*% M

r <- ICA(Y)

plot(r$S)

TODO: Give more interesting results.

See also:

http://www.cis.hut.fi/projects/ica/icademo/ http://www.cs.helsinki.fi/u/ahyvarin/papers/NN00new.pdf

Principal Component Analysis is about approximating a variance matrix. The simplest approximation is a scalar matrix (a diagonal matrix with always the same element on the diagonal), the next simplest is a diagonal matrix; then comes the PCA, which writes the variance matrix as V = B B' for some rectangular matrix B, with as many columns as you want components. The matrix B can be characterized as the matrix that minimizes the norm of V - B B' (actually, it is not unique, but (a.s.) unique up to multiplication by an orthogonal matrix). The next step is the factor model: we write V as B B' + Delta, where Delta is a diagonal matrix.

In R, this is obtained with the factanal() function.

r <- factanal(covmat = V, factors = k) # The correlation matrix v <- r$loadings %*% t(r$loadings) + diag(r$uniquenesses) # The corresponding variance matrix v <- diag(sqrt(diag(V))) %*% v %*% diag(sqrt(diag(V)))

This is used, for instance, in finance: we model stock returns as a multidimensional gaussian distribution. A portfolio can be seen as a linear combination of stocks; the coefficients are called the weights of the portfolio and are usually assumed to sum up to 1 (you might also want them to be positive). If w is a portfolio and V the variance matrix of the distribution of returns, then the returns of the portfolio are gaussian with variance w' V w; the square root of this variance is called the risk of the portfolio. Finding the portfolio with the minimum risk possible sounds simple enough (it is an optimization problem), but you have to estimate the variance matrix in the first place. If your universe contains thousands of stocks, you actually want to estimate a 1000*1000 matrix: you will never have enough data to do that! You need a way to reduce the number of quantities to estimate, you need a way to more parsimoniously parametrize variance matrices. Principal Component Analysis is the classical way of doing this, but it assumes that all the stocks respond to the same few sources of randomness: this is not a reasonable assumption. Instead, we assume that the stocks respond to a few "risk factors" (they could be interpreted as, e.g., oil prices, comsumption indexes, interest rates, etc.) and an idiosyncratic component, specific to each stock: this is a factor model. Such an estimation of the variance matrix of stock returns is called a risk model.

TODO: An example with actual computations (portfolio optimization?)

TODO: Merge this section with the previous TODO: Decide whether to put this section here or together with the variance matrix (there is already a note about RMT somewhere)...

Principal Component analysis can also be seen as decomposition of a variance matrix, that can also lead to a simplification of variance matrices (by discarding the eigenvectors with low eigenvalues), to a parametrization of variance matrices with few parameters. Since large variance matrices require a huge amount of data to be reliably estimated, PCA can allow you to get a precise (but biased) estimation with little data.

Those parametrizations are often called "factor models". They are used, for instance, in finance: when you want to invest in stocks (or any other asset, actually), you do not buy the stocks for which you have a positive view in equal quantities: some of those stocks can be moving together. Consider for instance three stocks, A, B and C, on which you have a positive view, where A and B tend to mode together and C moves independently from A and B: it might not be a good idea to invest a third of your wealth in each of them -- it might be wiser to invest half in C, and a quarter in A and B. This reasoning can be formalized (and justified) if one assumes that the returns of those three assets follow a gaussian distribution with mean (mu, mu, mu) (where mu is the expected return of those assets -- here we assume they are equal) and correlation matrix

1 1/2 0 1/2 1 0 0 0 1

We want to find the portfolio (i.e., the combination of assets) with the lowest risk, i.e., the lowest variance.

But where do we get this variance matrix in the first place? We have to estimate it with historical data: for instance from the monthly returns of the stocks in the past three years. But if you have 1000 stocks (that is a small number: in real life, it is usually between 1000 and 10,000), that is 36,000 data points to estimate around 500,000 parameters -- this is not reasonable.

The idea is to assume that the variance matrix has some simple form, i.e., that it can be parametrized with a reasonable number of parameters. For instance, one can assume that the correlations between the returns is due to their being correlated with a small number of "factors": then, we just have to give the "exposure" of the each stock to each factor, B and the variance matrix of the factors, v. The variance matrix of the stock returns is then

V = B v B'

If one assumes that the factors are independant (and of variance 1), i.e., their variance matrix is the identity matrix, v=1, and V = B B'.

But this is very similar to the decomposition of a variance matrix provided by PCA: if, furthermore, we do not know the factors beforehand, we can simply select them as the first eigenvectors.

This is called a noiseless statistical factor model.

TODO: Noiseless factor model

TODO: Scalar factor model

TODO: Approximate factor model

TODO: The double Procrustes problem?

When the data you are modeling are functions (for instance, the evolution over time of some quantity), you might realize that the principal components are noisy and try to smooth them. Actually, this is not the optimal thing to do -- by smoothing the principal components, you run the risk of smoothing away the information they were supposed to contain. Instead, you can put the smoothing inside the PCA algorithm.

The first step is usually to express the functions to be studied in some basis -- by doing so, you already smooth them, but you should be careful not to discard the features of interest. Using functions instead of series of points allows you to have a different number of points, a different sampling frequency for each curve -- or even, irregular sampling. Using a basis of functions brings the problem back in the finite-dimensional realm and reduces it to a linear algebra problem.

In the second step, one computes the mean of all those curves, usually using the same basis.

TODO: Do we add a smoothing penalty for the mean? TODO: Formula...

In the third step, one actually performs the principal component analysis.

TODO: Explain how to introduce the smoothing penalties TODO: Formulas TODO: Implementation?

A few problems might occur.

For instance, the functions to estimate might have some very important properties: for instance, we might know that they have to be increasing, in spite of error measurements. We can enforce such a requirement by parametrizing the function via a differential equation. For instance, to get an increasing function H (say, height, when studying child growth), one just has to find a function w (without any restriction) such that H'' = w H -- w can be interpreted as the "relative acceleration".

Another problem is that the features in the various curves to study are not aligned: they occur in the same order, but with different amplitudes and locations. In that situation, the mean curve can fail to exhibit the very features we want to study -- they are averaged out. To palliate this problem, one can "align" the curves, by reparametrizing the curves (i.e., by considering an alternate time: clock time versus physiological time in biology, clock time versus market time or transaction time in finance, etc.). This is called "registration" or "(dynamic) time warping".

TODO: More details on time warping. TODO: 1. Write the functions to study in some basis 2. Smoothed mean 3. Smoothed PCA

For more details, check:

Applied Functional Data Analysis, Methods and Case Studies J.O. Ramsay and B.W. Silverman, Springer Verlag (2002) http://www.stats.ox.ac.uk/~silverma/fdacasebook/ TODO: An online reference?

The components you get as the result of a principal component analysis are not always directly interpretable: even if all the relevant information is in the first two principal components, they are not as "pure" as you would like. But by rotating them, they can be easier to interpret. Since you use the amplitude of the loadings of the PCA to interpret the principal components, you can try to simplify them, as follows: find a rotation (not a rotation of the whole space, but only a rotation of the subspace spanned by the first components) that maximizes the variance of the loadings (contrary to PCA, you do not consider the components one at a time, but all at the same time). This will actually try to make the loadings either very large or very small, easing the interpretation of the components.

?varimax ?promax

When you apply Principal Component Analysis (PCA) to the pixels of a series of images, you forget the 2-dimensional structure and hope that the PCA will somehow recover it, by itself. Instead, one can try to retain that information by representing the data as matrices. This can be generalized to higher-dimensional data with higher dimensional arrays (learned people may call those arrays "tensors").

Tensor algebra, motivations:

- Taylor expansion f(x+h) = f(x) + f'(x).h + f''(x).h.h + ...

where f(x) is a number

f'(x) is a 1-form: it transforms a vector h into

a number f'(x).h

f''(x) is a symetric 2-form: it takes a two

vectors h and k and turns them

into a number f''(x).h.k

- Higher order statistics (HOS) (e.g., the autocorrelation

of x^2 is a fourth moment).

- [For category-theorists] The tensor algebra TV of a

vector space V is obtained from the adjoint of the

forgetful functor Alg --> Vect from the category of

R-algebras to that of R-vector spaces. Similarly, one can

define the symetric tensor algebra SV from the category of

commutative R-algebras. The alternating algebra AV is

often defined as AV=TV/SV.

- In mathematical physics, a tensor is a section of some

vector bundle built from the tangent buldle of a

manifold (if you do not know about manifolds, read "The

large scale structure of space-time" by S. Hawking et

al.). For instance:

- a section of the tangent bundle TX --> X is a field of

tangent vectors;

- a section of the cotangent bundle (the dual of the

tangent space) TX' --> X is a (field of) 1-forms;

- a section of the maximum alternating power of the

cotangent bundle is an n-form, that you can use to

integrate a (real-valued) function on the manifold.

(You can also consider sections of other vector bundles,

e.g., the Levi-Civita connection -- some people call

those "holors" -- see "Theory of Holors : A Generalization

of Tensors", P. Moon and E. Spencer)Tentative implementation:

# From "Out-of-code tensor approximation of

# multi-dimensional matrices of visual data"

# H. Wang et al., Siggraph 2005

# http://vision.ai.uiuc.edu/~wanghc/research/siggraph05.html

# http://vision.ai.uiuc.edu/~wanghc/papers/siggraph05_tensor_hongcheng.pdf

mode.n.vector <- function (A, n) { # aka "unfolding"

stopifnot( n == floor(n),

n >= 1,

n <= length(dim(A)) )

res <- apply(A, n, as.vector)

if (is.vector(res)) {

res <- matrix(res, nr=dim(A)[n], nc=prod(dim(A))/dim(A)[n] )

} else {

res <- t(res)

}

stopifnot( dim(res) == c( dim(A)[n], prod(dim(A))/dim(A)[n] ) )

res

}

n.rank <- function (A, n) {

sum( svd( mode.n.vector(A, n) )$d

>= 1000 * .Machine$double.eps )

}

n.ranks <- function (A) {

n <- length(dim(A))

res <- integer(n)

for (i in 1:n) {

res[i] <- n.rank(A, i)

}

res

}

# The norm is the same as usual: sqrt(sum(A^2))

n.mode.svd <- function (A) {

res <- list()

for (n in 1:length(dim(A))) {

res[[n]] <- svd( mode.n.vector(A, n) )

}

res

}

product <- function (A, B, i) {

# Multiply the ith dimension of the tensor A with the

# columns of the matrix B

stopifnot( is.array(A),

i == floor(i),

length(i) == 1,

i >= 1,

i <= length(dim(A)),

is.matrix(B),

dim(A)[i] == dim(B)[1]

)

res <- array( apply(A, i, as.vector) %*% B,

dim = c(dim(A)[-i], dim(B)[2]) )

N <- length(dim(A))

ind <- 1:N

ind[i] <- N

ind[ 1:N > i ] <- ind[ 1:N > i ] - 1

res <- aperm(res, ind)

stopifnot( dim(res)[-i] == dim(A)[-i],

dim(res)[i] == dim(B)[2] )

res

}

# A few tests...

A <- array(1:8, dim=c(2,2,2))

mode.n.vector(A, 1)

mode.n.vector(A, 2)

mode.n.vector(A, 3)

n.rank(A, 1)

n.rank(A, 2)

n.rank(A, 3)

n.ranks(A)

B1 <- matrix(round(10*rnorm(6)), nr=2, nc=3)

B2 <- matrix(round(10*rnorm(12)), nr=3, nc=4)

all( product(B1, B2, 2) == B1 %*% B2 )

# The main function...

tensor.approximation <- function (A, R) {

stopifnot( is.array(A),

length(dim(A)) == length(R),

is.vector(R),

R == floor(R),

R >= 0,

R <= dim(A),

R <= n.ranks(A),

R <= prod(R)/R # If all the elements of R

# are equal, this is fine.

)

N <- length(R)

I <- dim(A)

U <- list()

for (i in 1:N) {

U[[i]] <- matrix( rnorm(I[i] * R[i]), nr = I[i], nc = R[i] )

}

finished <- FALSE

BB <- NULL

while (!finished) {

cat("Iteration\n")

Utilde <- list()

for (i in 1:N) {

Utilde[[i]] <- A

for (j in 1:N) {

if (i != j) {

Utilde[[i]] <- product( Utilde[[i]], U[[j]], j)

}

cat(" Utilde[[", i, "]]: ",

paste(dim(Utilde[[i]]),collapse=","),

"\n", sep="")

}

stopifnot( length(dim(Utilde[[i]])) == N,

dim(Utilde[[i]])[i] == dim(A)[i],

dim(Utilde[[i]])[-i] == R[-i] )

Utilde[[i]] <- mode.n.vector( Utilde[[i]], i )

cat(" Utilde[[", i, "]]: ",

paste(dim(Utilde[[i]]),collapse=","),

"\n", sep="")

}

for (i in 1:N) {

U[[i]] <- svd( Utilde[[i]] ) $ u [ , 1: R[i], drop=FALSE ]

#U[[i]] <- svd( Utilde[[i]] ) $ v

stopifnot( dim(U[[i]]) == c(I[i], R[i]) )

}

B <- A

for (j in 1:N) {

B <- product( B, U[[j]], j )

}

res <- B

for (j in 1:N) {

res <- product( res, t(U[[j]]), j )

}

cat("Approximating A:", sum((res - A)^2), "\n")

if (!is.null(BB)) {

eps <- sum( (B - BB)^2 )

cat("Improvement on B:", eps, "\n")

finished <- eps <= 1e-6

}

BB <- B

}

res <- B

for (j in 1:N) {

res <- product( res, t(U[[j]]), j )

}

attr(res, "B") <- B

attr(res, "U") <- U

attr(res, "squared.error") <- sum( (A-res)^2 )

res

}

A <- array(1:24, dim=c(4,3,2))

x <- tensor.approximation(A, c(1,1,1))

x <- tensor.approximation(A, c(2,2,2))

The problem is now the following: given a set of points in an n-dimensional space, find their coordinates kwnowing only their distances. Usually, you do not know the space dimension either.

This is very similar to Principal Component Analysis (PCA), with a few differences, though: PCA looks for relations between the variables while PCO focuses on similarities between the observations; you can interpret the PCA axes with the variables while you cannot with PCO (indeed, there are no variables).

As PCA, PCO is linear and the computations are the same: in PCA, we start with the variance-covariance matrix, i.e., a matrix whose entries are sums of squares; in distance analysis, we consider a matrix of distances or a distance of squares of matrices, i.e., from the pythagorean theorem, a matrix of sums of squares.

In other words, PCO is just PCA without its first step: the computation of the variance-covariance (or "squared distances") matrix.

?cmdscale library(MASS)

MDS is a non-linear equivalent of Principal Coordinate Analysis (PCO). We have a set of distances between points and we try to assign coordinates to those points so as to minimize a "stress function" -- for instance, the sum of squares between the distances we have and the distances obtained from the coordinates.

There are several R functions to perform those computations.

?cmdscale Principal coordinate analysis (metric, linear) library(MASS) ?sammon (metric, non-linear) ?isoMDS (non-metric)

There was a comparison of them in RNews:

http://cran.r-project.org/doc/Rnews/Rnews_2003-3.pdf

library(xgobi)

?xgvis

TODO: speak a little more of xgvis

xgvis slides and tutorial:

http://industry.ebi.ac.uk/%257Ealan/VisWorkshop99/XGvis_Talk/sld001.htm

http://industry.ebi.ac.uk/%257Ealan/VisWorkshop99/XGvis_Tutorial/index.html

(restart the MDS optimization with a different starting point to

avoid local minima ("scramble" button))

(one may change the parameters (euclidian/L1/Linfty distance,

removal of outliers, weights, etc.)

(one may ask for a 3D or 4D layout and the rotate it in xgobi)

(restart the MDS optimization with only a subset of the data --

interactivity)

What is a Shepard diagram ??? (change the power transformation)

MDS to get a picture of protein similarity

MDS warning: The global shape of MDS configurations is determined by

the large dissimilarities; consequently, small distances should be

in terpreted with caution: they may not reflect small

dissimilarities.

The applications are manifold, for instance:

1. Dimension reduction: we start with a set of points in a high-dimensional space, compute their pairwise distances, and try to put the points in a space of smaller dimension while retaining as much as possible the information ("proximity") present in the distance matrix.

Thus, we can see MDS as a non-linear analogue of Principal Component Analysis (PCA).

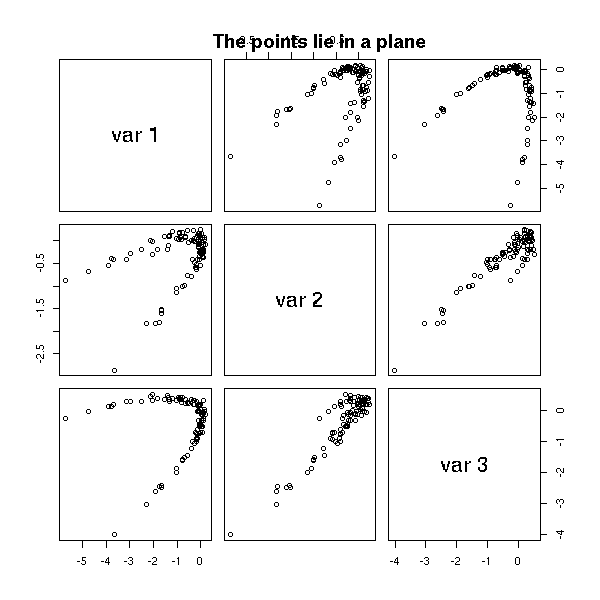

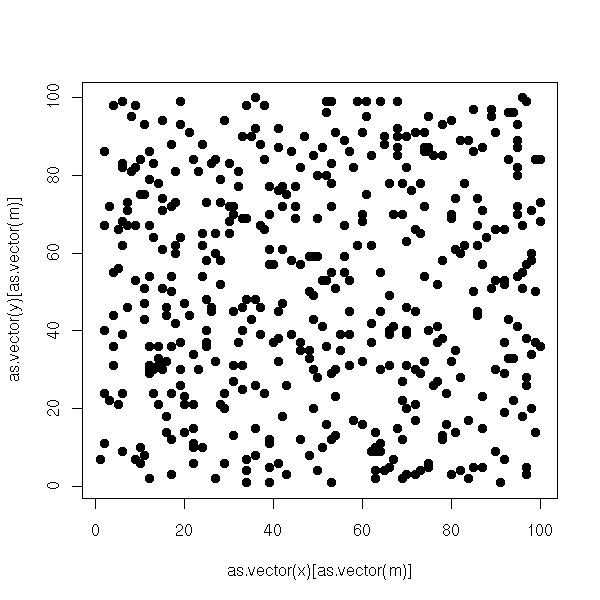

n <- 100

v <- .1

# (Almost) planar data

x <- rnorm(n)

y <- x*x + v*rnorm(n)

z <- v*rnorm(n)

d <- cbind(x,y,z)

# A rotation

random.rotation.matrix <- function (n=3) {

m <- NULL

for (i in 1:n) {

x <- rnorm(n)

x <- x / sqrt(sum(x*x))

y <- rep(0,n)

if (i>1) for (j in 1:(i-1)) {

y <- y + sum( x * m[,j] ) * m[,j]

}

x <- x - y

x <- x / sqrt(sum(x*x))

m <- cbind(m, x)

}

m

}

m <- random.rotation.matrix(3)

d <- t( m %*% t(d) )

pairs(d)

title(main="The points lie in a plane")

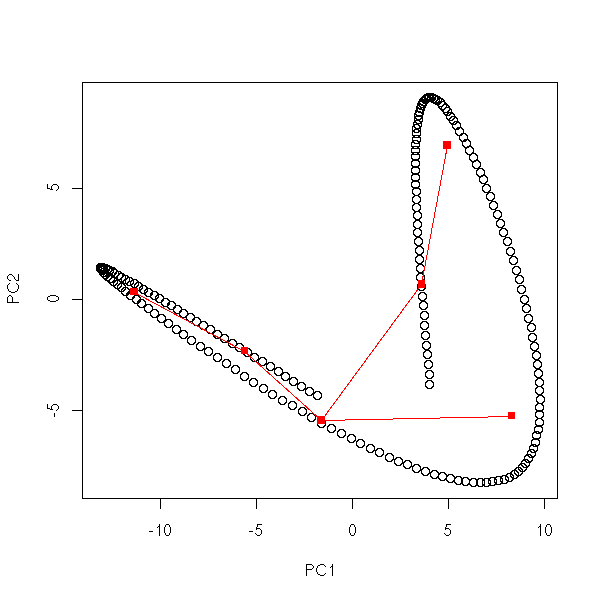

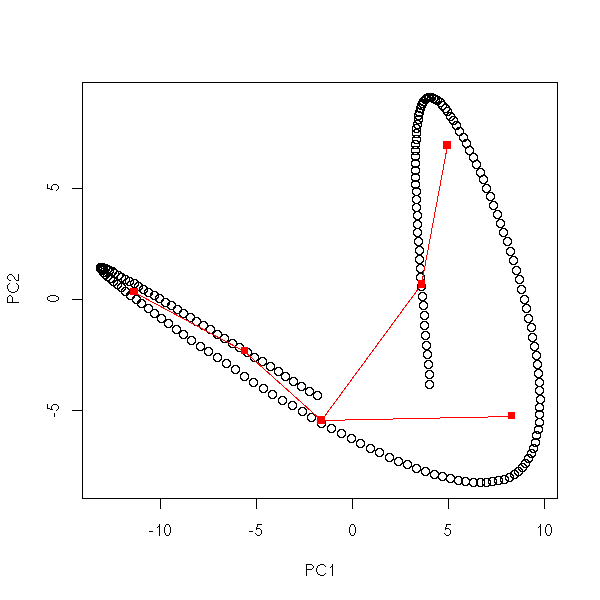

pairs(cmdscale(dist(d),3)) title(main="MDS")

pairs(princomp(d)$scores) title(main="Principal Component Analysis")

2. Study of data with no coordinates, for instance protein similarity: we can tell when two proteins are similar, we can quantify this similarity -- but we have no coordinates, we cannot think of a simple vector space whose points could be interpreted as proteins. MDS can help build such a space and graphically represent the proximity relations among a set of proteins.

The same process also appears in psychology: we can ask test subjects to identify objects (faces, Morse-coded letters, etc.) and count the number of confusions between two objects. This measures the similarity of these objects (a subjective similarity, that stems from their representation in the human mind); MDS can assign coordinates to those objects and represent those similarities in a graphical way.

Those misclassifications also appear in statistics: we can use MDS to study forecast errors of a given statistical algorithm (logistic regression, neural nets, etc.) when trying to predict a qualitative variable from quantitative variables.

TODO: speak about MDS when I tackle those algorithms...

3. MDS can also graphically represent variables (and not observations), after estimating the "distances" between variables from their correlations.

TODO: such a plot when I speak about variable selection...

4. We can also use MDS to plot graphs (the combinatorial objects we study in graph theory -- see the "graph" package), described by theiir vertices and edges.

Alternatively, you can use GraphViz,

http://www.graphviz.org/

outside R or from R -- see the "sem" and "Rgraphviz" libraries.

TODO

5. Reconstruct a molecule, especially its shape, from the distance between its atoms.

For more details, see

http://www.research.att.com/areas/stat/xgobi/papers/xgvis.ps.gz

1. Put the points into 4 or 5 classes (e.g., by hierarchical classification, or with the k-means algorithm). 2. Take the median point of those classes. 3. Is the Minimum Spanning Tree (MST) of those points ramified?

On our examples:

# Data

n <- 200 # Number of patients, number of columns

k <- 10 # Dimension of the ambient space

nb.points <- 5

p <- matrix( 5*rnorm(nb.points*k), nr=k )

barycentre <- function (x, n) {

# Add number between the values of x in order to get a length n vector

i <- seq(1,length(x)-.001,length=n)

j <- floor(i)

l <- i-j

(1-l)*x[j] + l*x[j+1]

}

m <- apply(p, 1, barycentre, n)

data.broken.line <- t(m)

data.noisy.broken.line <- data.broken.line + rnorm(length(data.broken.line))

library(splines)

barycentre2 <- function (y,n) {

m <- length(y)

x <- 1:m

r <- interpSpline(x,y)

#r <- lm( y ~ bs(x, knots=m) )

predict(r, seq(1,m,length=n))$y

}

data.curve <- apply(p, 1, barycentre2, n)

data.curve <- t(data.curve)

data.noisy.curve <- data.curve + rnorm(length(data.curve))

data.real <- read.table("Tla_z.txt", sep=",")

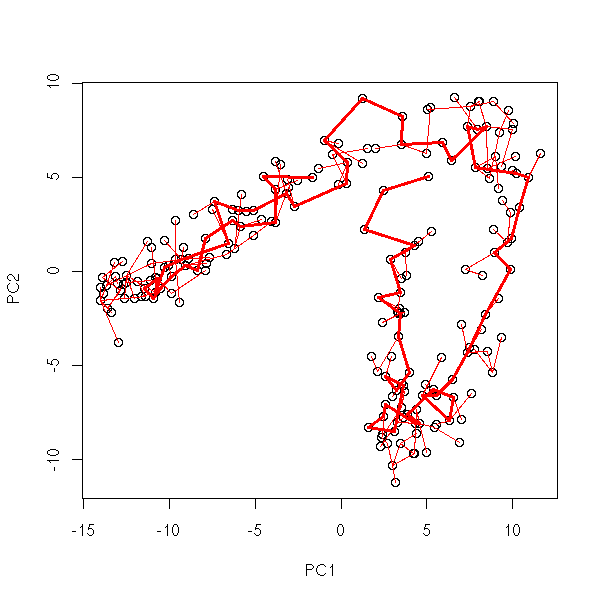

r <- prcomp(t(data.real))

data.real.3d <- r$x[,1:3]

library(cluster)

library(ape)

mst.of.classification <- function (x, k=6, ...) {

x <- t(x)

x <- t( t(x) - apply(x,2,mean) )

r <- prcomp(x)

y <- r$x

u <- r$rotation

r <- kmeans(y,k)

z <- r$centers

m <- mst(dist(z))

plot(y[,1:2], ...)

points(z[,1:2], col='red', pch=15)

w <- which(m!=0)

i <- as.vector(row(m))[w]

j <- as.vector(col(m))[w]

segments( z[i,1], z[i,2], z[j,1], z[j,2], col='red' )

}

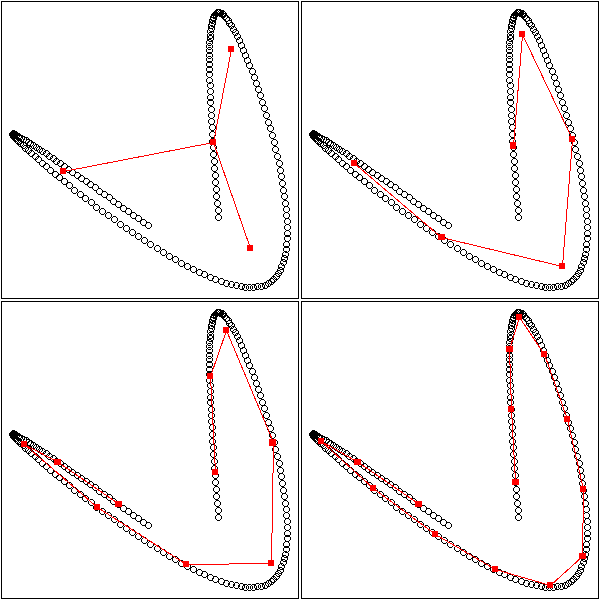

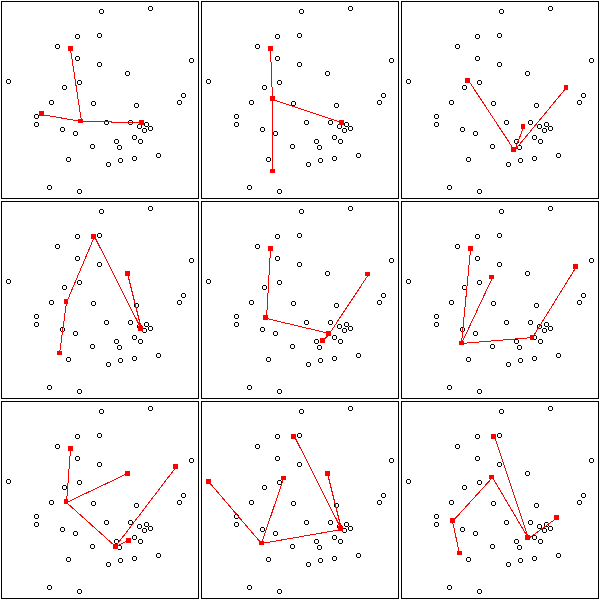

set.seed(1)

mst.of.classification(data.curve, 6)

mst.of.classification(data.curve, 6)

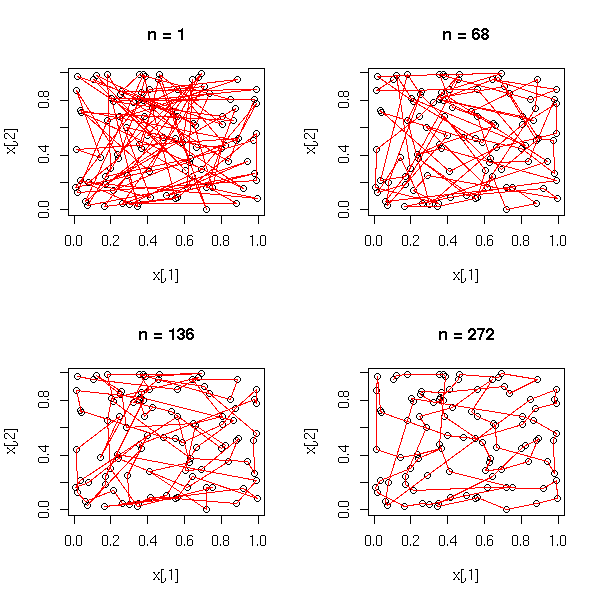

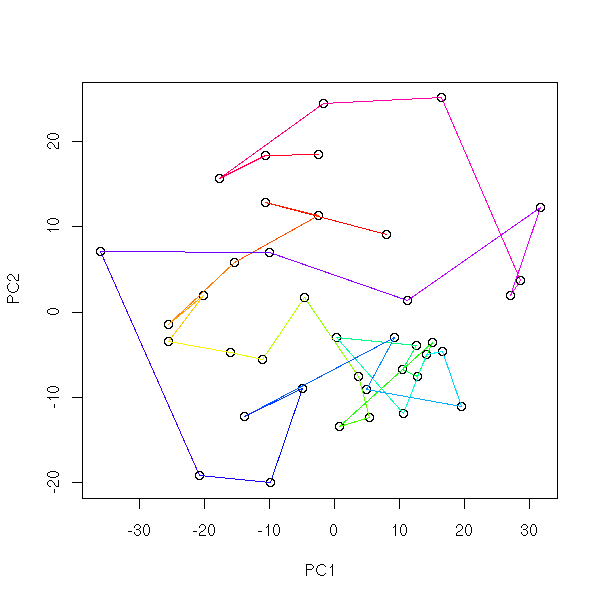

op <- par(mfrow=c(2,2),mar=.1+c(0,0,0,0))

for (k in c(4,6,10,15)) {

mst.of.classification(data.curve, k, axes=F)

box()

}

par(op)

op <- par(mfrow=c(2,2),mar=.1+c(0,0,0,0))

for (k in c(4,6,10,15)) {

mst.of.classification(data.noisy.curve, k, axes=F)

box()

}

par(op)

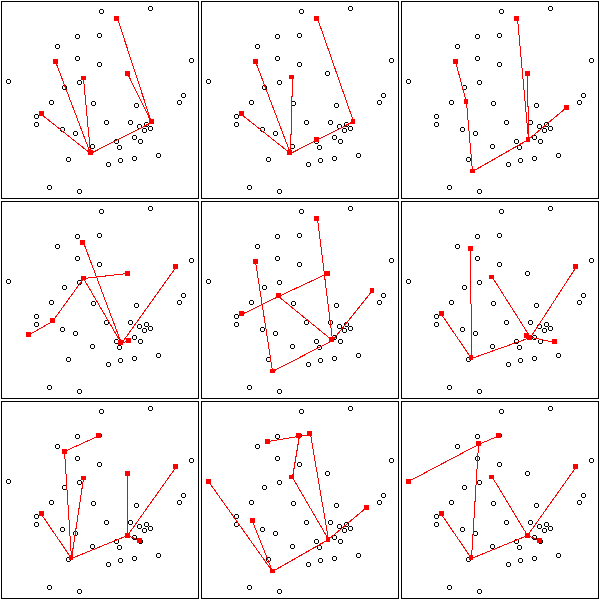

op <- par(mfrow=c(2,2),mar=.1+c(0,0,0,0))

for (k in c(4,6,10,15)) {

mst.of.classification(data.broken.line, k, axes=F)

box()

}

par(op)

op <- par(mfrow=c(2,2),mar=.1+c(0,0,0,0))

for (k in c(4,6,10,15)) {

mst.of.classification(data.noisy.broken.line, k, axes=F)

box()

}

par(op)

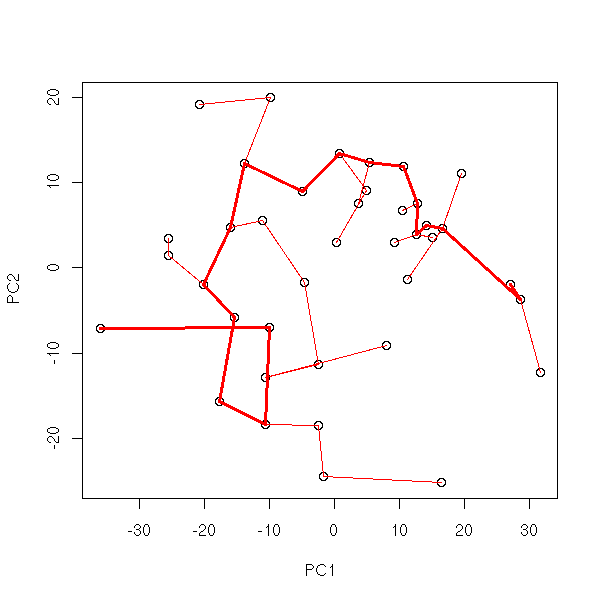

With real data, it does not work that well:

op <- par(mfrow=c(2,2),mar=.1+c(0,0,0,0))

for (k in c(4,6,10,15)) {

mst.of.classification(data.real, k, axes=F)

box()

}

par(op)

Details:

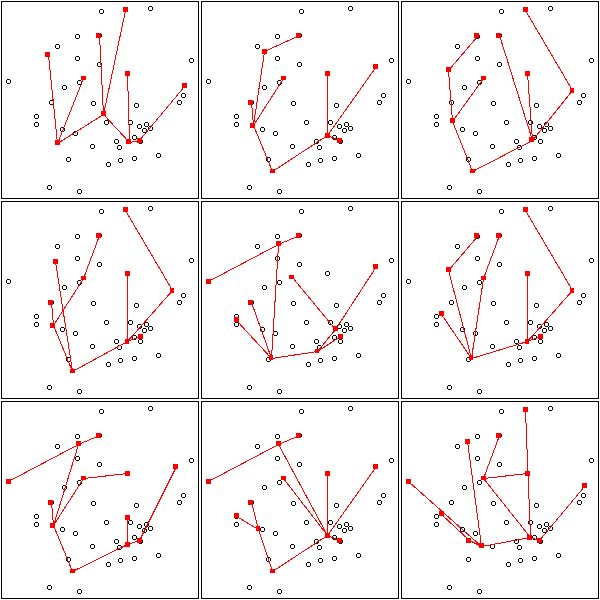

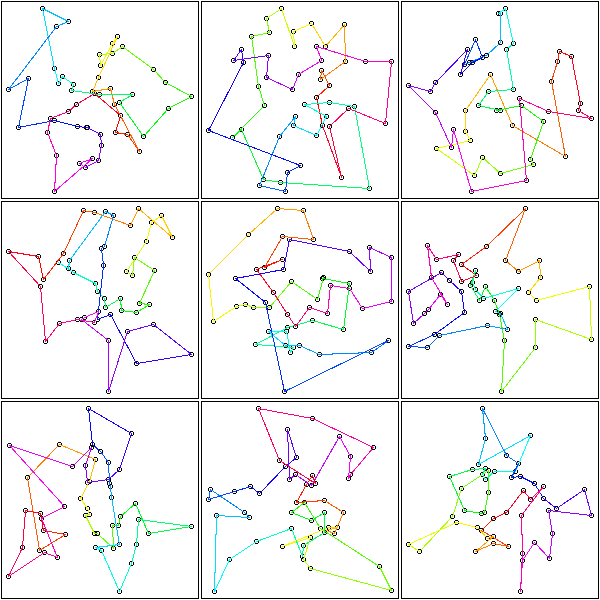

op <- par(mfrow=c(3,3),mar=.1+c(0,0,0,0))

for (k in c(4:6)) {

for (i in 1:3) {

mst.of.classification(data.real, k, axes=F)

box()

}

}

par(op)

op <- par(mfrow=c(3,3),mar=.1+c(0,0,0,0))

for (k in c(7:9)) {

for (i in 1:3) {

mst.of.classification(data.real, k, axes=F)

box()

}

}

par(op)

op <- par(mfrow=c(3,3),mar=.1+c(0,0,0,0))

for (k in c(10:12)) {

for (i in 1:3) {

mst.of.classification(data.real, k, axes=F)

box()

}

}

par(op)

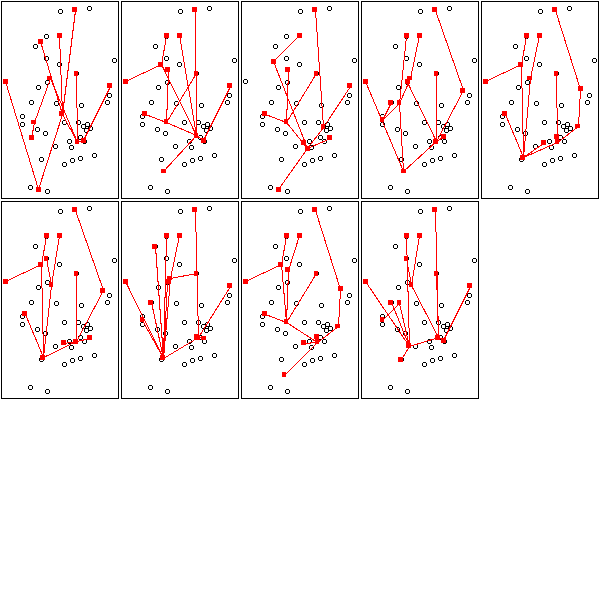

op <- par(mfrow=c(3,5),mar=.1+c(0,0,0,0))

for (k in c(13:15)) {

for (i in 1:3) {

mst.of.classification(data.real, k, axes=F)

box()

}

}

par(op)

library(ape)

my.plot.mst <- function (d) {

r <- mst(dist(t(d)))

d <- prcomp(t(d))$x[,1:2]

plot(d)

n <- dim(r)[1]

w <- which(r!=0)

i <- as.vector(row(r))[w]

j <- as.vector(col(r))[w]

segments( d[i,1], d[i,2], d[j,1], d[j,2], col='red' )

}

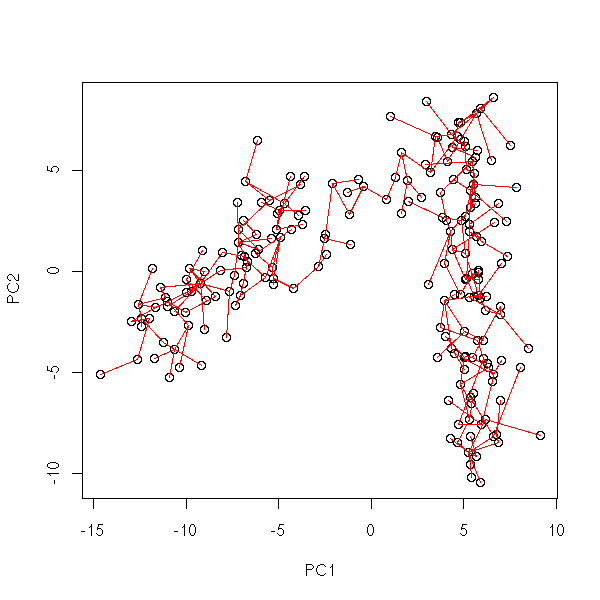

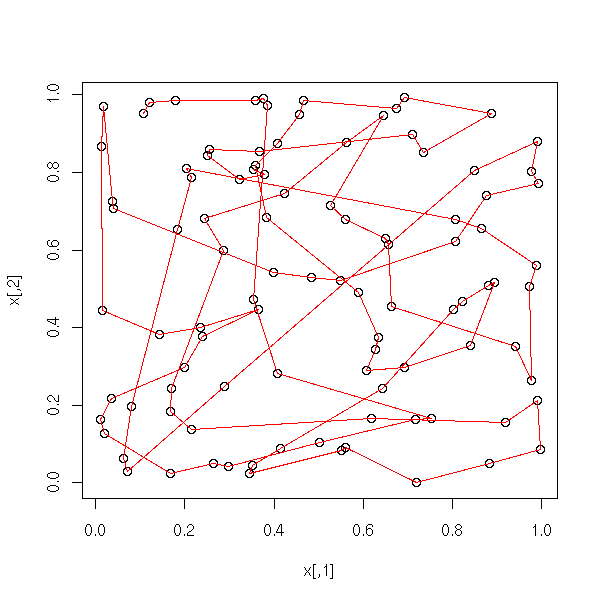

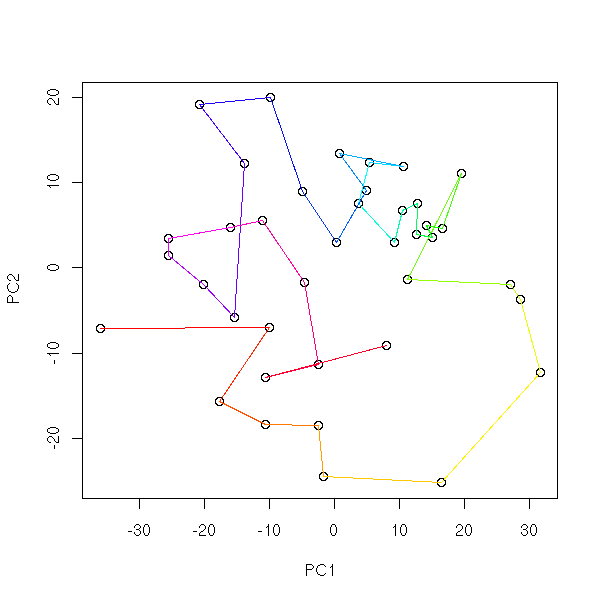

my.plot.mst(data.broken.line)

my.plot.mst(data.noisy.broken.line)

my.plot.mst(data.curve)

my.plot.mst(data.noisy.curve)

my.plot.mst(data.real)

my.plot.mst(t(data.real.3d))

Pruned MST

# Gives the list of the oaths from the branching nodes to the leaves

chemins.vers.les.feuilles <- function (G) {

nodes <- which(apply(G,2,sum)>2)

leaves <- which(apply(G,2,sum)==1)

res <- list()

for (a in nodes) {

for (b in which(G[a,]>0)) {

if (! b %in% nodes) {

res <- append(res,list(c(a,b)))

}

}

}

chemins.vers.les.feuilles.suite(G, nodes, leaves, res)

}

# Last coordinate of a vector

end1 <- function (x) {

n <- length(x)

x[n]

}

# Last two coordinates of a vector

end2 <- function (x) {

n <- length(x)

x[c(n-1,n)]

}

chemins.vers.les.feuilles.suite <- function (G, nodes, leaves, res) {

new <- list()

done <- T

for (ch in res) {

if( end1(ch) %in% nodes ) {

# Pass

} else if ( end1(ch) %in% leaves ) {

new <- append(new, list(ch))

} else {

done <- F

a <- end2(ch)[1]

b <- end2(ch)[2]

for (x in which(G[b,]>0)) {

if( x != a ){

new <- append(new, list(c( ch, x )))

}

}

}

}

if(done) {

return(new)

} else {

return(chemins.vers.les.feuilles.suite(G,nodes,leaves,new))

}

}

G <- matrix(c(0,1,0,0, 1,0,1,1, 0,1,0,0, 0,1,0,0), nr=4)

chemins.vers.les.feuilles(G)

# Compute the length of a path

longueur.chemin <- function (chemin, d) {

d <- as.matrix(d)

n <- length(chemin)

i <- chemin[ 1:(n-1) ]

j <- chemin[ 2:n ]

if (n==2) {

d[i,j]

} else {

sum(diag(d[i,][,j]))

}

}

G <- matrix(c(0,1,0,0, 1,0,1,1, 0,1,0,0, 0,1,0,0), nr=4)

d <- matrix(c(0,2,4,3, 2,0,2,1, 4,2,0,3, 3,1,3,0), nr=4)

chemins <- chemins.vers.les.feuilles(G)

chemins

l <- sapply(chemins, longueur.chemin, d)

l

stopifnot( l == c(2,2,1) )

elague <- function (G0, d0) {

d0 <- as.matrix(d0)

G <- G0

d <- d0

res <- 1:dim(d)[1]

chemins <- chemins.vers.les.feuilles(G)

while (length(chemins)>0) {

longueurs <- sapply(chemins, longueur.chemin, d)

# Number of the shortest path

i <- which( longueurs == min(longueurs) )[1]

cat(paste("Removing", paste(res[chemins[[i]]],collapse=' '), "length =", longueurs[i],"\n"))

# Nodes to remove

j <- chemins[[i]] [-1]

res <- res[-j]

G <- G[-j,][,-j]

d <- d[-j,][,-j]

cat(paste("Removing", paste(j), "\n" ))

cat(paste("", paste(res, collapse=' '), "\n"))

chemins <- chemins.vers.les.feuilles(G)

}

res

}

library(ape)

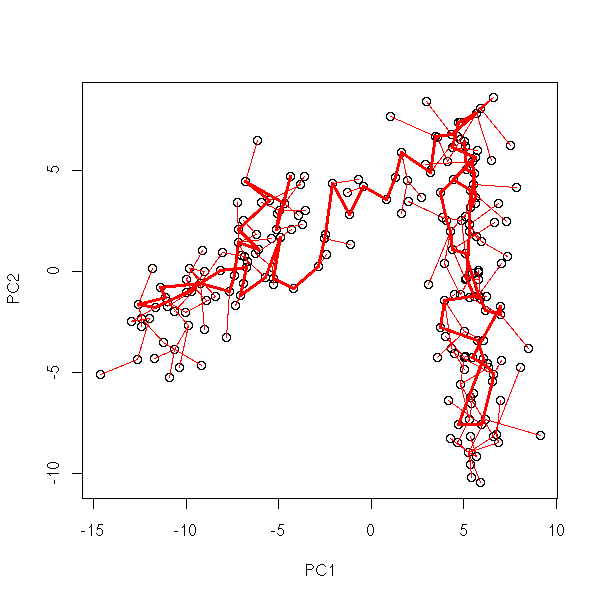

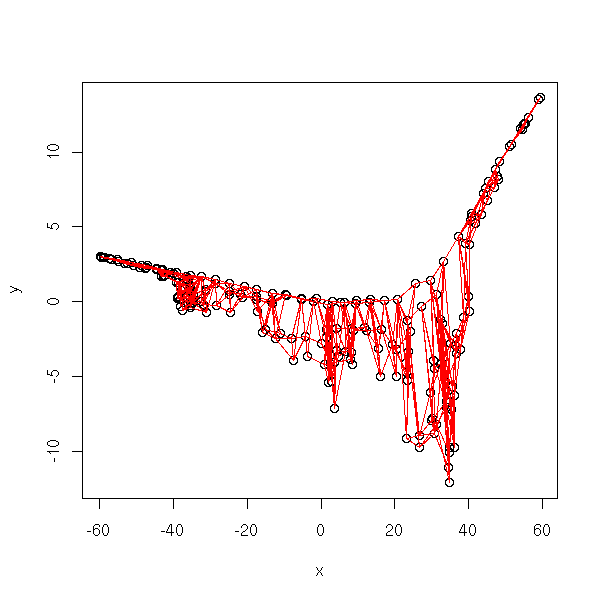

my.plot.mst <- function (x0) {

cat("Plotting the points\n")

x <- prcomp(t(x0))$x[,1:2]

plot(x)

cat("Computing the distance matrix\n")

d <- dist(t(x0))

cat("Computing the MST (Minimum Spanning Tree)\n")

G <- mst(d)

cat("Plotting the MST\n")

n <- dim(G)[1]

w <- which(G!=0)

i <- as.vector(row(G))[w]

j <- as.vector(col(G))[w]

segments( x[i,1], x[i,2], x[j,1], x[j,2], col='red' )

cat("Pruning the tree\n")

k <- elague(G,d)

cat("Plotting the pruned tree\n")

x <- x[k,]

G <- G[k,][,k]

n <- dim(G)[1]

w <- which(G!=0)

i <- as.vector(row(G))[w]

j <- as.vector(col(G))[w]

segments( x[i,1], x[i,2], x[j,1], x[j,2], col='red', lwd=3 )

}

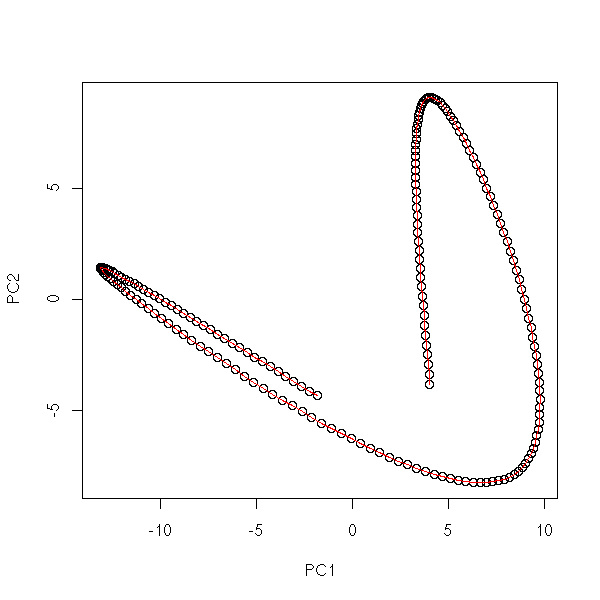

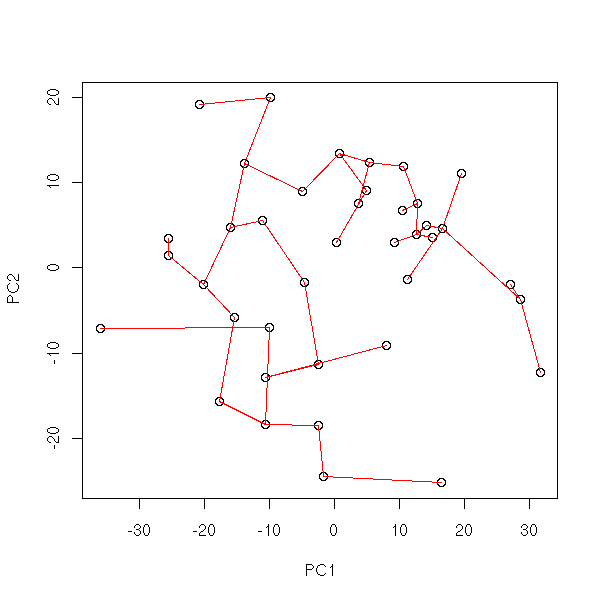

my.plot.mst(data.noisy.broken.line)

my.plot.mst(data.noisy.curve)

my.plot.mst(data.real)

my.plot.mst(t(data.real.3d))

Remark: in image analysis, we sometimes us a simplification of this algorithm (still called "pruning" to get rid of the ramifications in the skeleton of an image: we just gnaw two of three segments from each leaf.

TODO: a reference

TODO: reference

TODO: explain

Let us first construct the graph.

# k: each point is linked to its k nearest neighbors

# eps: each point is linked to all its neighbors within a radius eps

isomap.incidence.matrix <- function (d, eps=NA, k=NA) {

stopifnot(xor( is.na(eps), is.na(k) ))

d <- as.matrix(d)

if(!is.na(eps)) {

im <- d <= eps

} else {

im <- apply(d,1,rank) <= k+1

diag(im) <- F

}

im | t(im)

}

plot.graph <- function (im,x,y=NULL, ...) {

if(is.null(y)) {

y <- x[,2]

x <- x[,1]

}

plot(x,y, ...)

k <- which( as.vector(im) )

i <- as.vector(col(im))[ k ]

j <- as.vector(row(im))[ k ]

segments( x[i], y[i], x[j], y[j], col='red' )

}

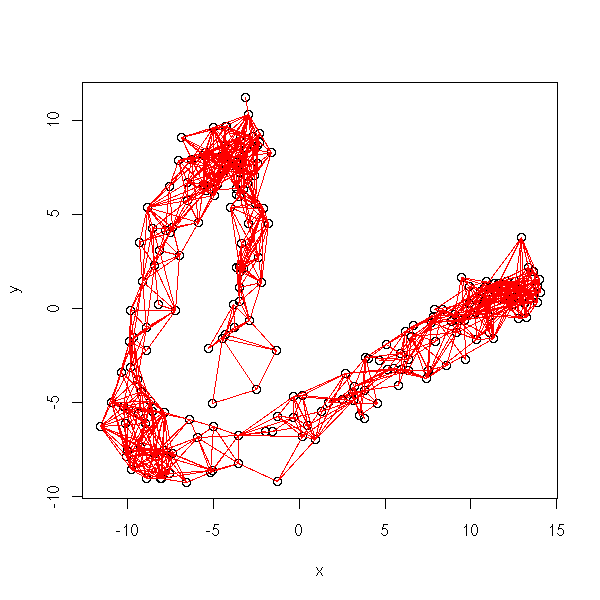

d <- dist(t(data.noisy.curve))

r <- princomp(t(data.noisy.curve))

x <- r$scores[,1]

y <- r$scores[,2]

plot.graph(isomap.incidence.matrix(d, k=5), x, y)

So far, there is a problem: the resulting grah need not be connected -- this is a problem if we want to compute distances...

plot.graph(isomap.incidence.matrix(d, eps=quantile(as.vector(d), .05)),

x, y)

The graph is connected if and only if it contains the Minimum Spanning Tree (MST): thus, we shall just add the edges of this MST taht are not already there.

isomap.incidence.matrix <- function (d, eps=NA, k=NA) {

stopifnot(xor( is.na(eps), is.na(k) ))

d <- as.matrix(d)

if(!is.na(eps)) {

im <- d <= eps

} else {

im <- apply(d,1,rank) <= k+1

diag(im) <- F

}

im | t(im) | mst(d)

}

plot.graph(isomap.incidence.matrix(d, eps=quantile(as.vector(d), .05)),

x, y)

TODO: distance in this graph. It is a classical problem: shortest path in a graph.

inf <- function (x,y) { ifelse(x<y,x,y) }

isomap.distance <- function (im, d) {

d <- as.matrix(d)

n <- dim(d)[1]

dd <- ifelse(im, d, Inf)

for (k in 1:n) {

dd <- inf(dd, matrix(dd[,k],nr=n,nc=n) + matrix(dd[k,],nr=n,nc=n,byrow=T))

}

dd

}

isomap <- function (x, d=dist(x), eps=NA, k=NA) {

im <- isomap.incidence.matrix(d, eps, k)

dd <- isomap.distance(im,d)

r <- list(x,d,incidence.matrix=im,distance=dd)

class(r) <- "isomap"

r

}

r <- isomap(t(data.noisy.curve), k=5)

xy <- cmdscale(r$distance,2) # long: around 30 seconds

plot.graph(r$incidence.matrix, xy)

In an orthonormal basis:

plot.graph(r$incidence.matrix, xy, ylim=range(xy))

TODO:

Other ways of representing the results: 1. Initial data, graph 2. MDS 3. Initial coordinates, graph, colors for the first coordinate of the MDS. 4. The curve?

TODO: apply this to our data.

TODO: Choosing the parameters (eps or k)

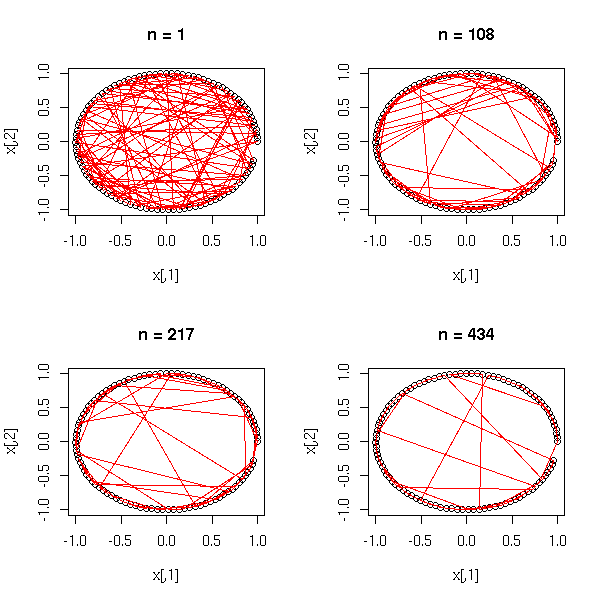

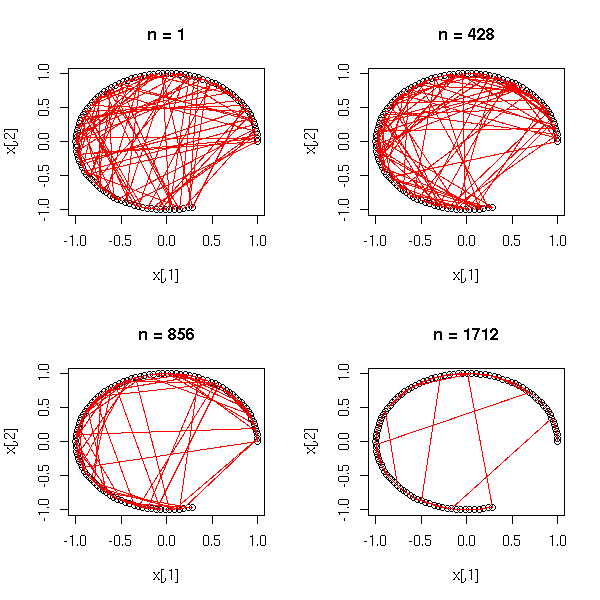

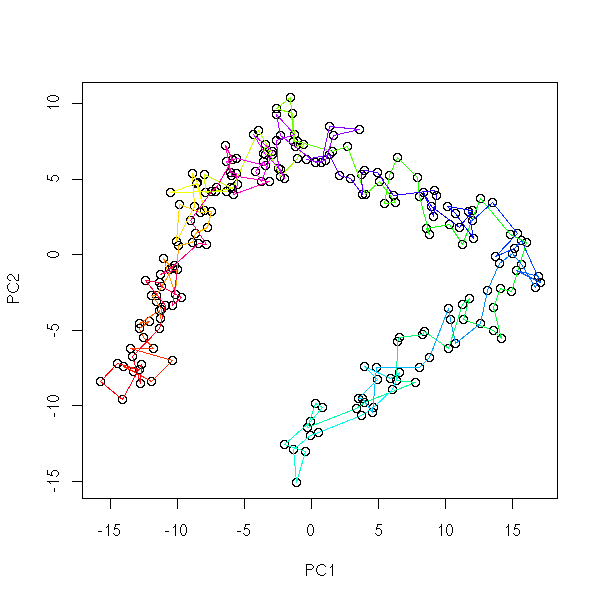

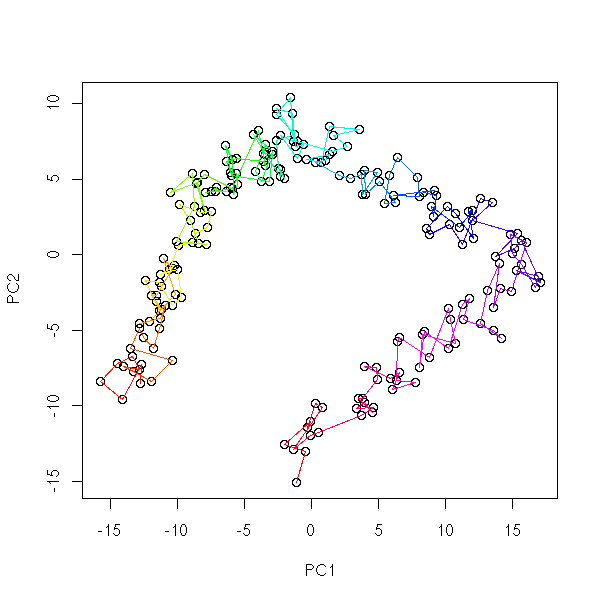

Kohonen networks (or SOM, Self-Organizing Maps) are a qualitative, non-linear equivalent of Principal Component (or Coordinate) Analysis (PCA, PCO): we try to classify observations in several classes (unknown classes) and to organize those classes into a spacial layout. For instance, we can want the classes to be aligned:

* * * * * * * * * *

or to form a grid:

* * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * *

We start with a cloud of points in an n-dimensional space, whose coordinates will be written (x1,x2,...,xi,...,xn) and we try to find, for each element j of the grid, coordinates (w(1,j),w(2,j),...,w(i,j),...,w(n,j)).

In other words, we try to embed the grid in this n-dimensional space, so that it comes as close as possible to the points (as if the points were magnetic and were attracting the grid vertices) and so that it retains its grid shape (as if the grid edges were springs).

1. Choose random values for the w(i,j).

2. Take a point x = (x1,...,xn) in the cloud.

2a. Consider the point j on the gris whose coordinates are the closest from x:

j = ArgMin Sum( (x_i - w(i,j))^2 )

i2b. Compute the error vector:

d_i = x_i - w(i,j)

2c. For each point k of the grid in the neighbourhood of j, replace the value of w(i,k):

w(i,k) <- w(i,k) + h * d_i.

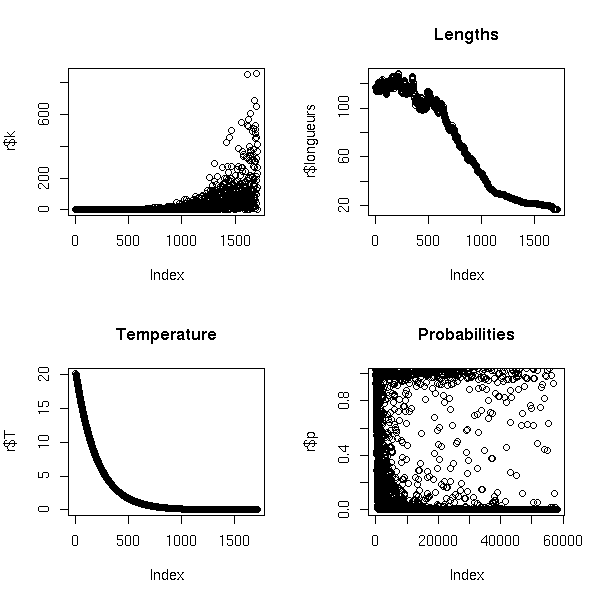

3. Go back to point 2, with a smaller neighbourhood and a smaller value for the learning coefficient h.

You can replace the notion of neighbourhood by a "neighbourhood function":

w(i,k) <- w(i,k) + h * v(i,k) * d_i

where v(i,i) = 1 and v(i,k) decreases when k goes away from i. Iteration after iteration, you will replace the function one with a more and more visible peak.

You can choose the grid geometry: often, a table of dimension 1, 2 or 3, but it could also be a circle, a cylinder, a torus, etc.

In that sense, we can say that Kohonen networks are non-linear (a would be tempted to say "homotopically non-linear").

You can also interpret Kohonen networks as a mixture of Principal Component Analysis (finding a graphical representation, in the plane, of a cloud of points) and unsupervised classification (assign the points to classes, a priori unknown).

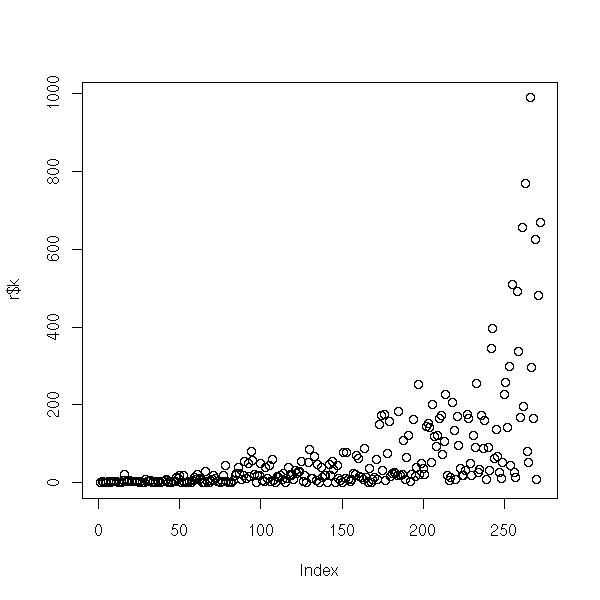

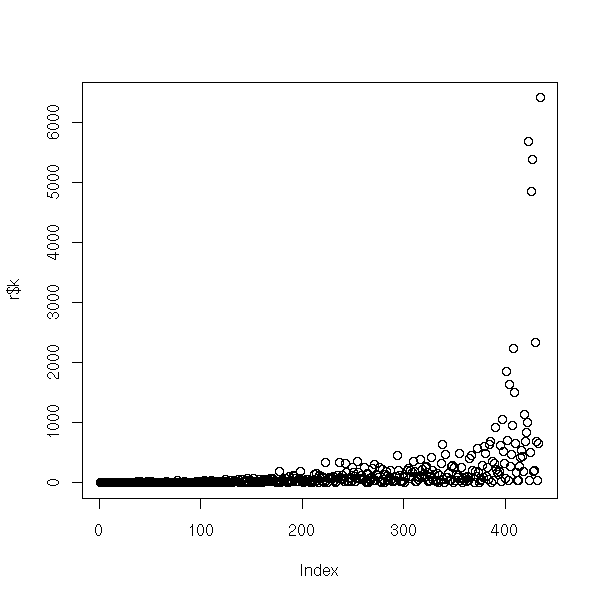

You can assess the quality of the result by looking at the error, i.e., the average of the

Sum( (x_i - w(i,j))^2 ) i

when x runs over the points to classify and where j is the class that minimizes this sum (i.e., j is the class to which x_i is assigned):

MSE = Mean Min Sum (x_i - w(i,j))^2

x j i

Kohonen networks are often presented as a special case of neural networks: if the doodles used to explain them are similar, trying hard to see a neural network in a Kohonen network might slow your understanding of the subject (the weights are completely different, there is no transfer function, etc.).

You can plot each class j by a disc or a square, and put, in each square, its coordinates w(1,j),...,w(n,j), as a star plot or as a parallel plot.

Under R, there are two functions to compute Kohonen maps, in the "class" and "som" packages (if you hesitate, choose "som").

The following examples use a Kohonen map to describe the palette (i.e., the colors) of an image (this is supposed to be a landscape: an island in the middle of a river).

library(pixmap)

x <- read.pnm("photo1.ppm")

d <- cbind( as.vector(x@red),

as.vector(x@green),

as.vector(x@blue) )

m <- apply(d,2,mean)

d <- t( t(d) - m )

s <- apply(d,2,sd)

d <- t( t(d) / s )

library(som)

r1 <- som(d,5,5)

plot(r1)

x <- r1$code.sum$x

y <- r1$code.sum$y

n <- r1$code.sum$nobs

co <- r1$code # Is it in the same order that x, y and n?

co <- t( t(co) * s + m )

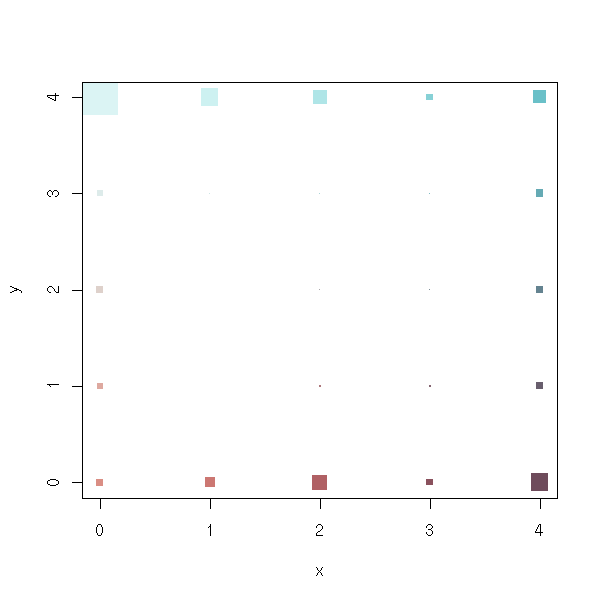

plot(x, y,

pch=15,

cex=5,

col=rgb(co[,1], co[,2], co[,3])

)

x <- r1$code.sum$x

y <- r1$code.sum$y

n <- r1$code.sum$nobs

co <- r1$code # Is it in the same order that x, y and n?

co <- t( t(co) * s + m )

plot(x, y,

pch=15,

cex=5*n/max(n),

col=rgb(co[,1], co[,2], co[,3])

)

library(class) r2 <- SOM(d) plot(r2)

x <- r2$grid$pts[,1]

y <- r2$grid$pts[,2]

n <- 1 # Where???

co <- r2$codes

co <- t( t(co) * s + m )

plot(x, y,

pch=15,

cex=5*n/max(n),

col=rgb(co[,1], co[,2], co[,3])

)

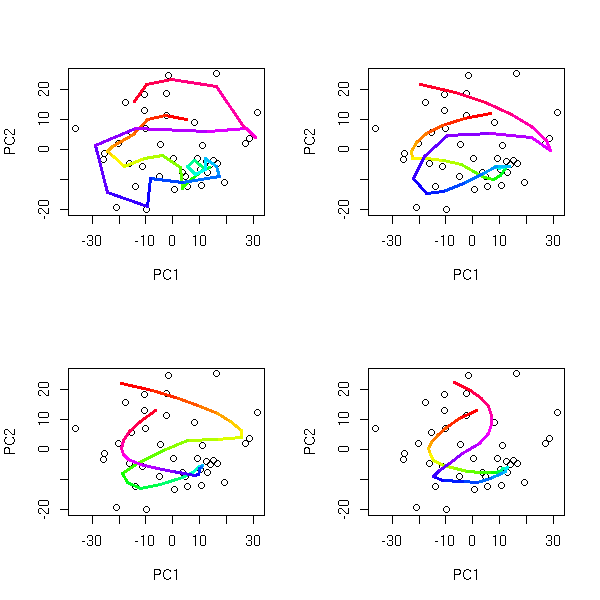

You will notice that the result is not always the same:

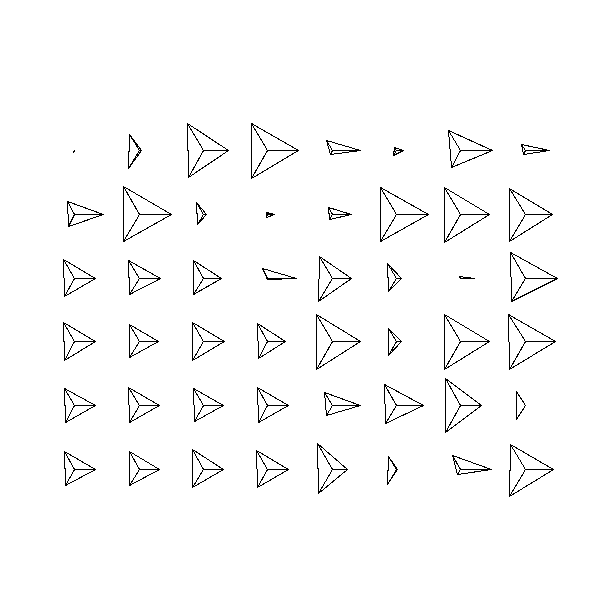

op <- par(mfrow=c(2,2))

for (i in 1:4) {

r2 <- SOM(d)

plot(r2)

}

par(op)

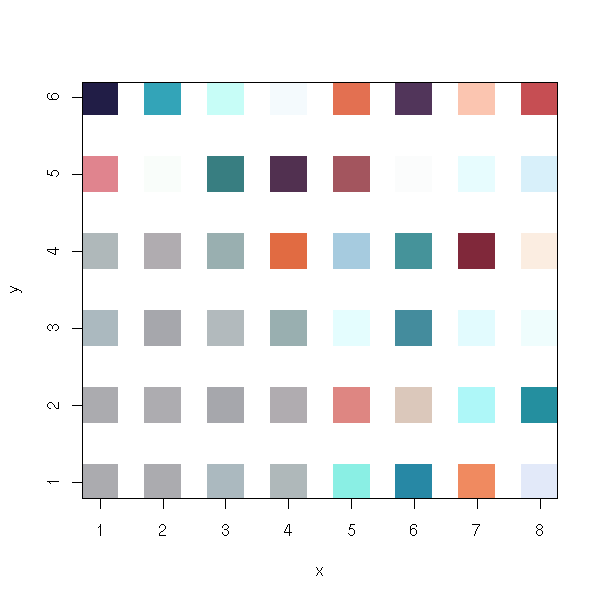

You may want to plot the vertex coordinates: you can use one colour per coordinate. This allows you to graphically choose which variables to use: you can eliminate those that play little role in the classification and those that bring the same information as already selected variables.

In the first example, the first coordinate is informative (it coincides with one of the map coordinates). The two others contain the same information (we can get rid of one) and correspond to a diagonal direction on the map.

x <- r1$code.sum$x

y <- r1$code.sum$y

v <- r1$code

op <- par(mfrow=c(2,2), mar=c(1,1,1,1))

for (k in 1:dim(v)[2]) {

m <- matrix(NA, nr=max(x), nc=max(y))

for (i in 1:length(x)) {

m[ x[i], y[i] ] <- v[i,k]

}

image(m, col=rainbow(255), axes=F)

}

par(op)

x <- r2$grid$pts[,1]

y <- r2$grid$pts[,2]

v <- r2$codes

op <- par(mfrow=c(2,2), mar=c(1,1,1,1))

for (k in 1:dim(v)[2]) {

m <- matrix(NA, nr=max(x), nc=max(y))

for (i in 1:length(x)) {

m[ x[i], y[i] ] <- v[i,k]

}

image(m, col=rainbow(255), axes=F)

}

par(op)

The vertex coordinates define a map from the plane (the space in which the Kohonen map lives) towards R^n (the space in which our cloud of points lives): we can plot this application (or, more precisely, its image), especially when the space R^n is of low dimension -- if the dimension is high, you would resort to high-dimensional visualization tools, such as ggobi.

TODO: plot

TODO: shards plot, to represent the differences between a cell and its neighbours. library(klaR) ?shardsplot

TODO: sammon plot, to see how distorted the map is.

TODO: colouring a SOM; one can use another, 1-dimensional, SOM to choose the colours (it can be a colour ring or a colour segment).

One can plot the grid and visualize how well it fits the cloud of points using a grand tour -- for instance, using ggobi.

Image analysis (medicine: echography, etc.; handwriting): an n*m image can be seen as a point an an n*m-dimensional space. The euclidian distance is not the most adequate one, but it works pretty well nonetheless.

You can also use Kohonen maps to forecast time series: usually, we try to write

y[n+1] = f( y[n], y[n-1], ..., y[n-k] )

for a "well-chosen" k and a map f to be determined (for example, by a linear regression, a non-linear regression, a Principal Component Analysis (PCA), a Curvilinear Component Analysis (CCA), etc.). Instead of this, you can build a Kohonen map with ( y[n], y[n-1], ..., y[n-k] ) and look at the values of y[n+1] at each vertex of the map. You could also use ( y[n+1], y[n], y[n-1], ..., y[n-k] ) to build the map.

TODO: develop this example, either here or in the Time Series chapter

You can use Kohonen maps to measure the colours in an image (for instance, to convertr it from 16 bits to 8 bits, or to convert it to an indexedd format, that limits the number of colors but lets you choose those colours): the vertex coordinates will be the quantified colors.

http://www.cis.hut.fi/~lendasse/pdf/esann00.pdf

You can also use Kohonen maps for supervised learning: you still start with a cloud of points, but this time, each point already belongs to a class -- in other words, we have quantitative predictive variables, as before, and a further qualitative variable, to predict/explain.

We run the algorithm on the predictive variables and we associate one (or several) class(es) to each node of the map: the classes of a node are the classes of the points it contains. Vertices with several classes are in a "clash state".

The interest is twofold: first, you can use the mao to predict the class of new observations, second, you get a graphical representation of the qualitative variable to predict, i.e., you have a notion of proximity, similarity, between the classes.

We said above that Kohonen maps were not very stable: it you change a few points in the cloud of points, or simply if you change the initialization of the map, you get completely different results.

However, small maps (3x3) are rather stable -- actually, the fact is more general: unsupervised learning methods give reproducible results up to 10 classes, but not above.

However, you can simplify a large Kohonen map in the following way: if you colour the map vertices with the number of points it contains, you can apply image processing algorithms (mathematical morphology), such as the opening (to get rid of noise, i.e., small elements) or the watershed (that divides the image in several bassins of attraction).

TODO: translate the last word... TODO: what about the hierarchical algorithm: a 3x3 SOM, then a 3x3 SOM in each of its cells, etc.? Do I mention it later?

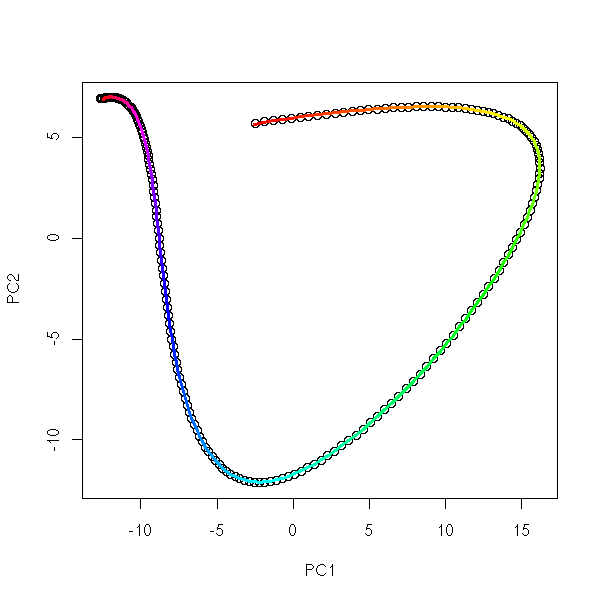

You can model the situation described by a SOM (i.e., simulate data that will be efficiently analyzed by a SOM) as follows. To get a cloud of points:

1. Take a point at random in the plane, following some distribution. One of the aims of the SOM algorithm is to recover this distribution.

2. Apply a transformation (one other aim of the SOM distribution is to recover this transformation) to send our point in an n-dimensional space. Our point is then on a 2-dimensional submanifold (a submanifold is like a subspace, but it need not be "straight", it may be "curved") of R^n.

3. Add some noise.

The Kohonen map is an estimation of the distribution of the first step: the rows and columns correspond to the coordinates in the plane; the number of points in each vertex is an estimation of the density.

The coordinates w(i,j) of the vertices are an estimation of the submanifold in step 2.

In those estimations, the plane R^2 and the submanifold of R^n have been discretized.

Generative Topographic Mappings (GTM) are a possible replacement of SOM that formalize this geometric description of Kohonen maps.

http://research.microsoft.com/~cmbishop/downloads/Bishop-GTM-Ncomp-98.pdf

TODO: introduction TODO: proofread the following paragraph.

The following methods are all based on principal component analysis: we start with a table of numbers (the values of our variables for PCA, a contingency table for CA), we consider its columns as points in an n-dimensional space (same for the rows), and we look for the 2-dimensional subspace of R^n (the space in which the columns live -- and we would do the same for that in which the rows live) on which we can see "as much information as possible" (we project the cloud of points onto it, orthogonally with respect to the canonical scalar product or another scalar product, more appropriate to the problem at hand.

Correspondance analysis focuses on contingency tables, i.e., qualitative variables. Let us first consider the case of two variables.

TODO: recall what a contingency table is...

We first transform the contingency table into two tables: that of row-profiles (the sum of the elements in a row is always 1 (or 100%)) and that of column-profiles.

TODO: define f(i,*) and f(*,j)

If the two variables are independant, we have f(i,j)=f(i,*)f(*,j). You can use Pearson's Chi^2 test to compare the distributions of f(i,j) and f(i,*)f(*,j).

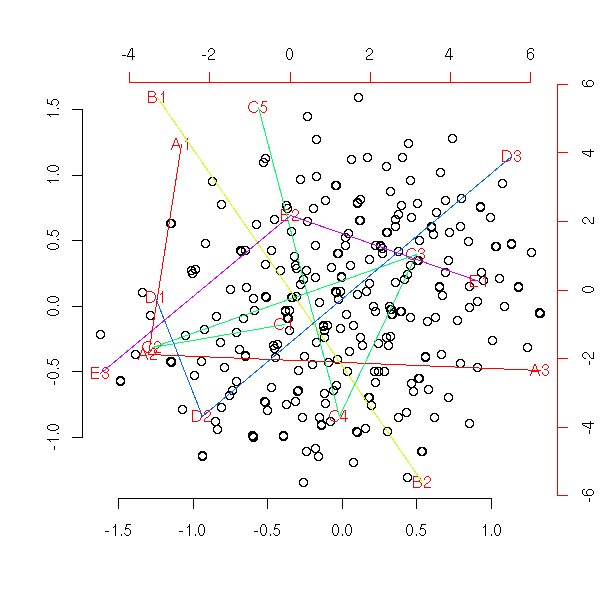

From a technical point of vue, Correspondance Analysis analysis looks like Principal Component Analysis. We try to find a graphical representation of the rows and colums of the table, as points in some space; we want the points to reflect the information contained in the table as faithfully as possible (technically, we try to maximize the variance of the resulting cloud of points). Correspondance Analysis proceeds in a similar way, the only difference being that the distance is not given by the canonical scalar product but is the "Chi^2 distance".

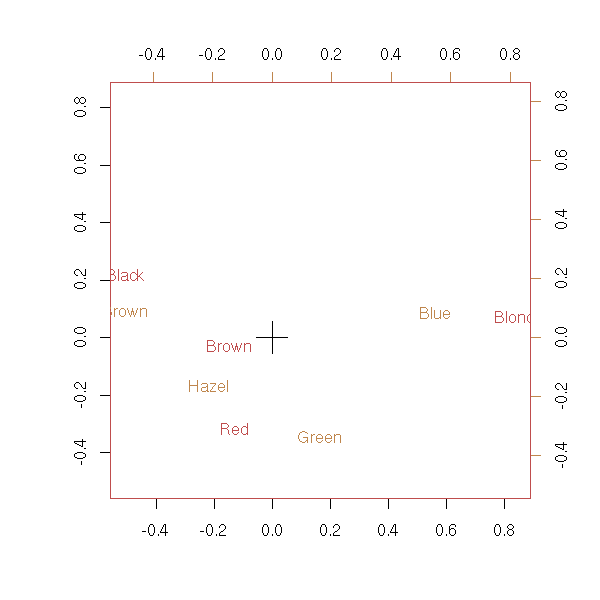

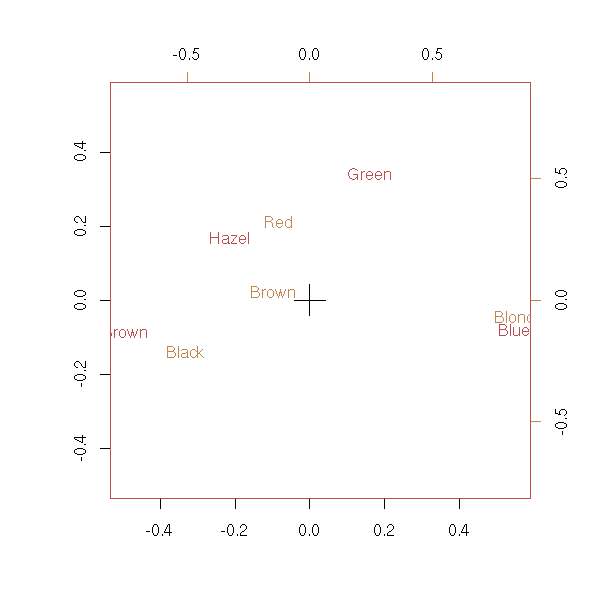

library(MASS) data(HairEyeColor) x <- HairEyeColor[,,1]+HairEyeColor[,,2] biplot(corresp(x, nf = 2))

biplot(corresp(t(x), nf = 2))

# ??? plot(corresp(x, nf=1))

If there are more variables, we can add them, a posteriori, on the plot, with the predict.mca function.

Other examples

n <- 100 m <- matrix(sample(c(T,F),n^2,replace=T), nr=n, nc=n) biplot(corresp(m, nf=2), main="Correspondance Analysis of Random Data")

vp <- corresp(m, nf=100)$cor

plot(vp, ylim=c(0,max(vp)), type='l',

main="Correspondance Analysis of Random Data")

n <- 100

x <- matrix(1:n, nr=n, nc=n, byrow=F)

y <- matrix(1:n, nr=n, nc=n, byrow=T)

m <- abs(x-y) <= n/10

biplot(corresp(m, nf=2),

main='Correspondance Analysis of "Band" Data')

vp <- corresp(m, nf=100)$cor

plot(vp, ylim=c(0,max(vp)), type='l',

main='Correspondance Analysis of "Band" Data')

You can also check the "ca" function.

library(multiv) ?ca

We start with a table, containing 0s and 1s, with a very simple form

n <- 100

x <- matrix(1:n, nr=n, nc=n, byrow=F)

y <- matrix(1:n, nr=n, nc=n, byrow=T)

m <- abs(x-y) <= n/10

plot.boolean.matrix <- function (m) { # Voir aussi levelplot

nx <- dim(m)[1]

ny <- dim(m)[2]

x <- matrix(1:nx, nr=nx, nc=ny, byrow=F)

y <- matrix(1:ny, nr=nx, nc=ny, byrow=T)

plot( as.vector(x)[ as.vector(m) ], as.vector(y)[ as.vector(m) ], pch=16 )

}

plot.boolean.matrix(m)

But someone changed (or forgot to give us) the order of the rows and columns -- if the data is experimental, we might not have any a priori clue as to the order.

ox <- sample(1:n, n, replace=F)

oy <- sample(1:n, n, replace=F)

reorder.matrix <- function (m,ox,oy) {

m <- m[ox,]

m <- m[,oy]

m

}

m2 <- reorder.matrix(m,ox,oy)

plot.boolean.matrix(m2)

We can reorder rows and columns as follows:

a <- corresp(m2) o1 <- order(a$rscore) o2 <- order(a$cscore) m3 <- reorder.matrix(m2,o1,o2) plot.boolean.matrix(m3)

Had we started with random data, the result could have been:

n <- 100

p <- .05

done <- F

while( !done ){

# We often get singular matrices

m2 <- matrix( sample(c(F,T), n*n, replace=T, prob=c(1-p, p)), nr=n, nc=n )

done <- det(m2) != 0

}

plot.boolean.matrix(m2)

a <- corresp(m2) o1 <- order(a$rscore) o2 <- order(a$cscore) m3 <- reorder.matrix(m2,o1,o2) plot.boolean.matrix(m3)

The idea is very simple. We start with a contingency table (for two qualitative variables), we transform it into a frequency table, we compute the marginal frequencies and the frequencies we would have had if the two variables had been independant, we compute the difference, so as to get a "centered" matrix, that describes the lack of independance of the two variables. We then perform a Principal Component Analysis, but not with the canonical distance: instead, we use a weighted distance between row-profiles (resp. column-profiles) so that each column (resp. row) have the same importance. This is called the Chi^2 distance.

# Euclidian Distance

sum ( f_{i,j} - f_{i',j} )^2

# Euclidian distance between the row-profiles

f_{i,j} f_{i',j}

sum ( --------- - ---------- )^2

j f_{i,.} f_{i',.}

# Chi^2 distance

1 f_{i,j} f_{i',j}

sum --------- ( --------- - ---------- )^2

j j_{.,j} f_{i,.} f_{i',.}We choose this metric because it has the following property: we can group some values of a variable without changing the result. (For instance, we could replace one of the two variables, X, whose values are A, B, C, D, E, F, by another variable X' with values A, BC, D, E, F, in the obvious way, if we think that the differences between B and C are not that relevant.)

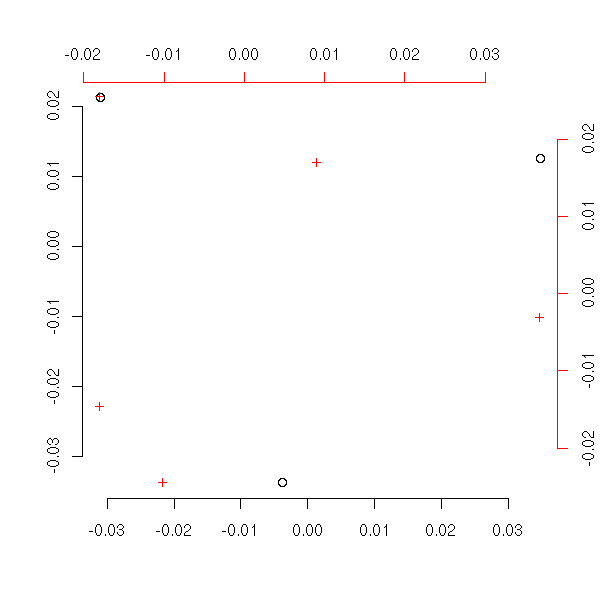

# Correspondance Analysis

my.ac <- function (x) {

if(any(x<0))

stop("Expecting a contingency matrix -- with no negative entries")

x <- x/sum(x)

nr <- dim(x)[1]

nc <- dim(x)[2]

marges.lignes <- apply(x,2,sum)

marges.colonnes <- apply(x,1,sum)

profils.lignes <- x / matrix(marges.lignes, nr=nr, nc=nc, byrow=F)

profils.colonnes <- x / matrix(marges.colonnes, nr=nr, nc=nc, byrow=T)

# Do not forget to center the matrix: we compute the frequency matrix

# we would have if the variables were independant and we take the difference.

x <- x - outer(marges.colonnes, marges.lignes)

e1 <- eigen( t(x) %*% diag(1/marges.colonnes) %*% x %*% diag(1/marges.lignes) )

e2 <- eigen( x %*% diag(1/marges.lignes) %*% t(x) %*% diag(1/marges.colonnes) )

v.col <- solve( e2$vectors, x )

v.row <- solve( e1$vectors, t(x) )

v.col <- t(v.col)

v.row <- t(v.row)

if(nr<nc)

valeurs.propres <- e1$values

else

valeurs.propres <- e2$values

# Dessin

plot( v.row[,1:2],

xlab='', ylab='', frame.plot=F )

par(new=T)

plot( v.col[,1:2], col='red',

axes=F, xlab='', ylab='', pch='+')

axis(3, col='red')

axis(4, col='red')

# Return the data

invisible(list(donnees=x, colonnes=v.col, lignes=v.row,

valeurs.propres=valeurs.propres))

}

nr <- 3

nc <- 5

x <- matrix(rpois(nr*nc,10), nr=nr, nc=nc)

my.ac(x)

Let us compare our result with that of the "corresp" function.

plot(corresp(x,nf=2))

Exercice: Modify the code above so that it uses the "svd" function instead of "eigen" (this is a real problem: as the matrix is no longer symetric, numeric computations can yield non-real eigen values).

On the plot representing the first variable, we can add the points corresponding to the second: we just take the barycenters of the first points, weighted by the corresponding (row- or column-) profile. We can do the same thing of the plot for the second variable: up to the scale, we should get the same result -- it is this property that justifies the superposition of both plots.

TODO: both plots

The barycenter also allows us to add new variables to the plots.

TODO

library(CoCoAn) library(multiv) library(ade4)

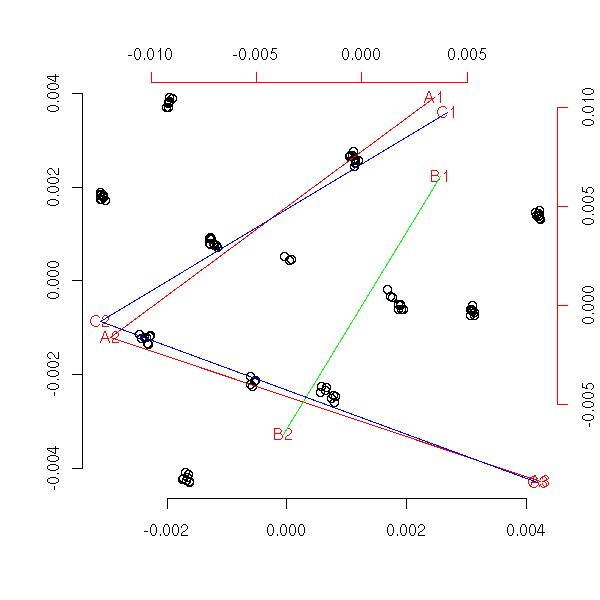

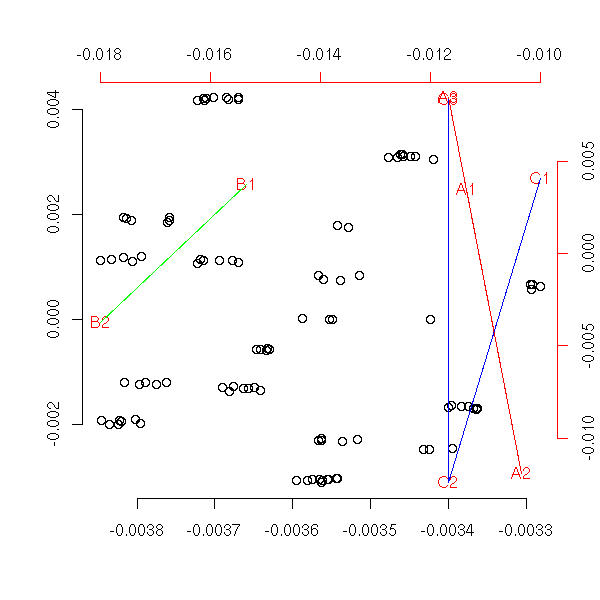

Simple Correspondance Analysis tackled the problem of two qualitative variables; multiple correspondance analysis caters to more than two variables. Usually, the data are not given as a contingency matrix (or "hypermatrix": if there are n variables, it should be an n-dimensional table), because this matrix would have a huge number of elements, most of them null. We use a table similar to that used to quantitative variables:

Hair Eye Sex

1 Blond Blue Male

2 Brown Brown Female

3 Brown Blue Female

4 Red Brown Male

5 Red Brown Female

6 Brown Brown Female

7 Brown Brown Female

8 Black Brown Male

9 Brown Blue Female

10 Blond Blue Male

...Here is the example from the manual.

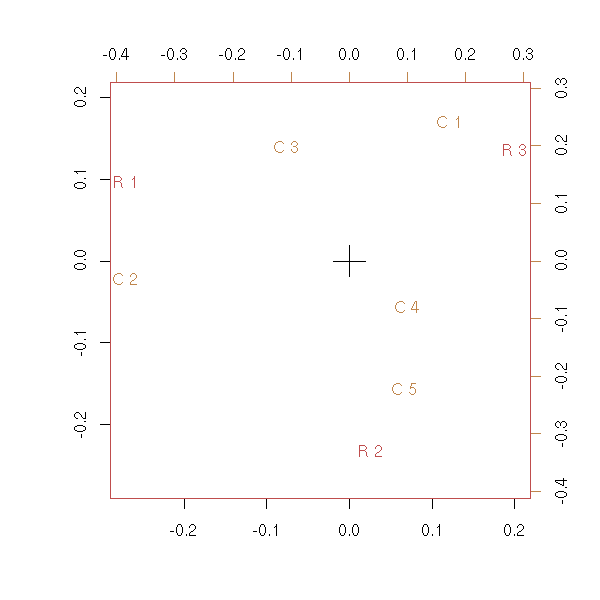

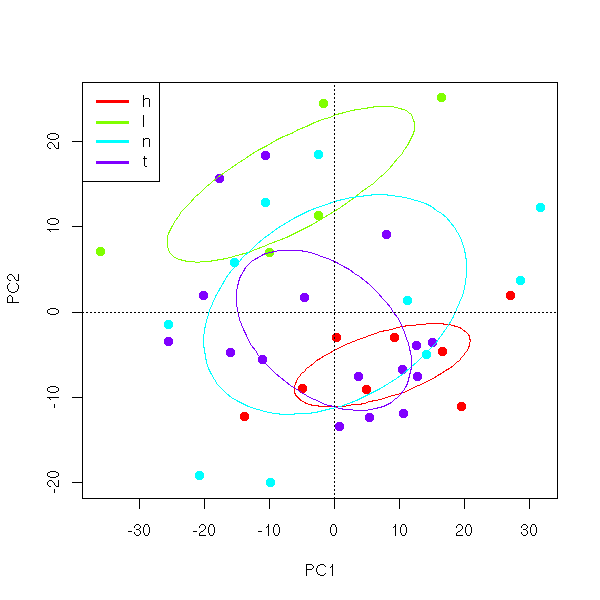

library(MASS) data(farms) farms.mca <- mca(farms, abbrev=TRUE) farms.mca plot(farms.mca)

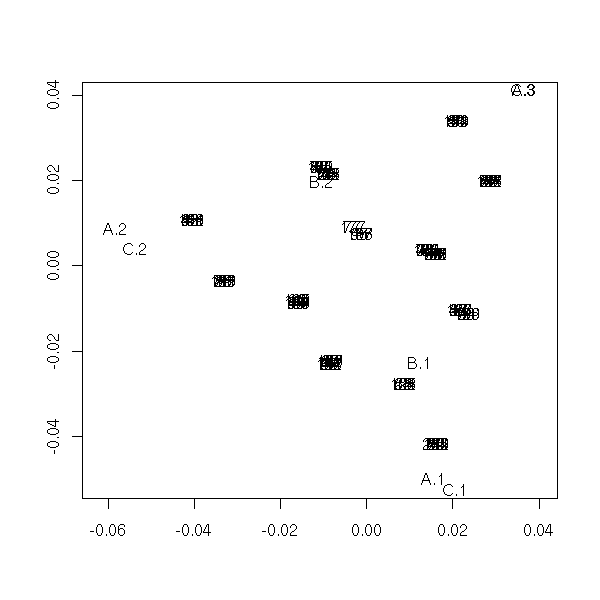

Let us consider again the eye and hair colour data set. As the data are given by a contingency hypermatrix, we first have to transform it.

# Not pretty

my.table.to.data.frame <- function (a) {

r <- NULL

d <- as.data.frame.table(a)

n1 <- dim(d)[1]

n2 <- dim(d)[2]-1

for (i in 1:n1) {

for (j in 1:(d[i,n2+1])) {

r <- rbind(r, d[i,1:n2])

}

row.names(r) <- 1:dim(r)[1]

}

r

}

r <- my.table.to.data.frame(HairEyeColor)

plot(mca(r))

We can also add mor subjects or variables, afterwards, with the "predict.mca" function.

?predict.mca

If there are only two variables, we can perform a simple or multiple correspondance analysis: the result is not exactly the same, but remains very similar.

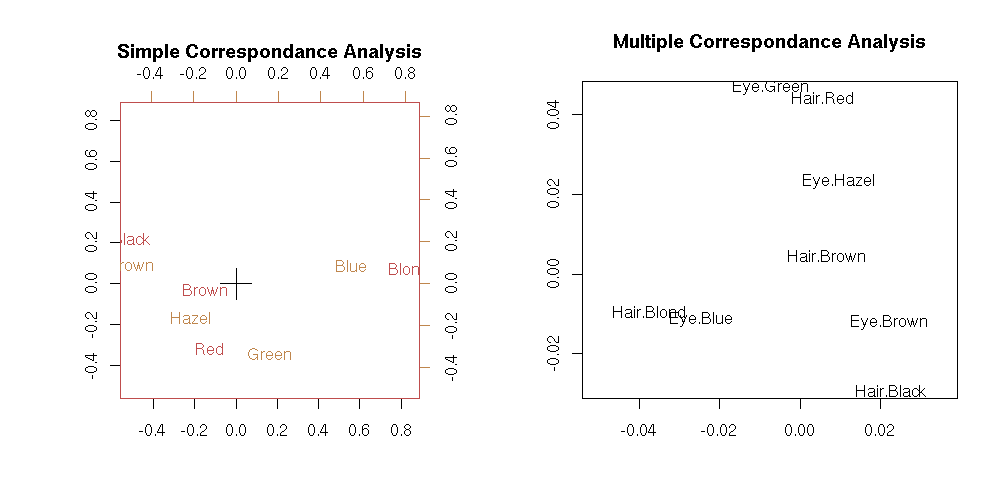

x <- HairEyeColor[,,1]+HairEyeColor[,,2]

op <- par(mfcol=c(1,2))

biplot(corresp(x, nf = 2),

main="Simple Correspondance Analysis")

plot(mca(my.table.to.data.frame(x)), rows=F,

main="Multiple Correspondance Analysis")

par(op)

We first transform the data, to turn it into a "disjunctive table":

HairBlack HairBrown HairRed HairBlond EyeBrown EyeBlue EyeHazel EyeGreen SexMale SexFemale

[1,] 0 0 0 1 0 1 0 0 1 0

[2,] 0 1 0 0 1 0 0 0 0 1

[3,] 0 1 0 0 0 1 0 0 0 1

[4,] 0 0 1 0 1 0 0 0 1 0

[5,] 0 0 1 0 1 0 0 0 0 1

[6,] 0 1 0 0 1 0 0 0 0 1

[7,] 0 1 0 0 1 0 0 0 0 1

[8,] 1 0 0 0 1 0 0 0 1 0

[9,] 0 1 0 0 0 1 0 0 0 1