Sample data

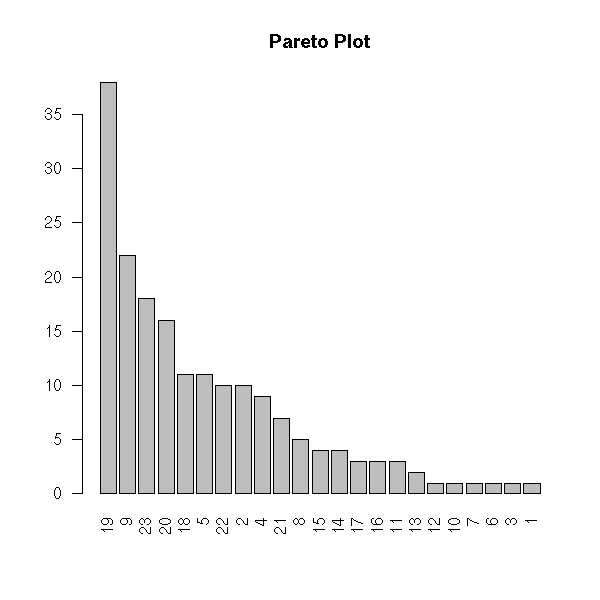

Quantitative univariate data

Ordered univariate data

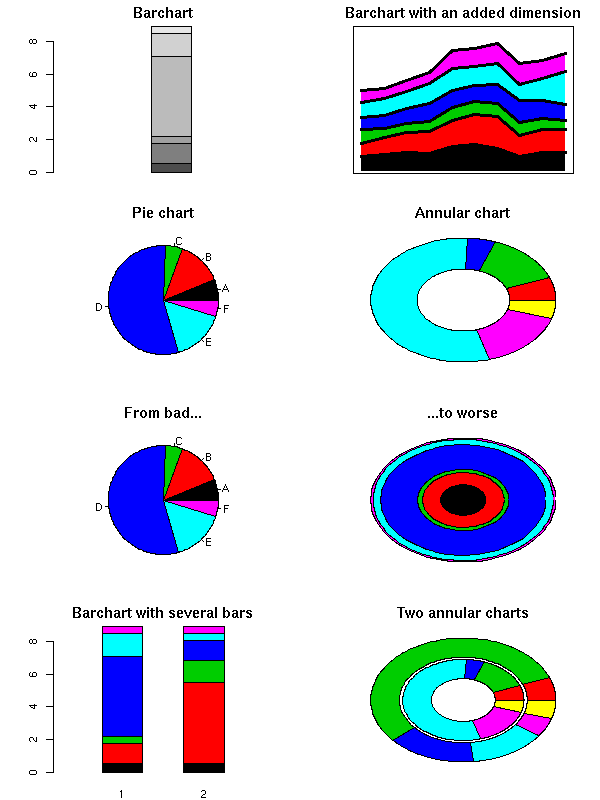

Qualitative univariate variables

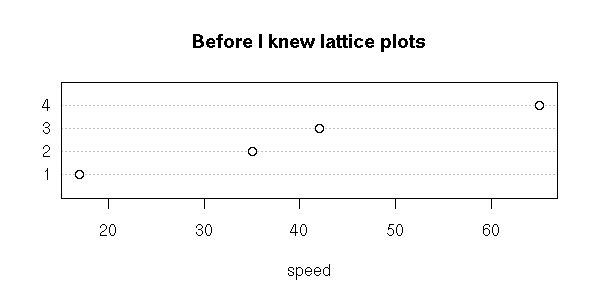

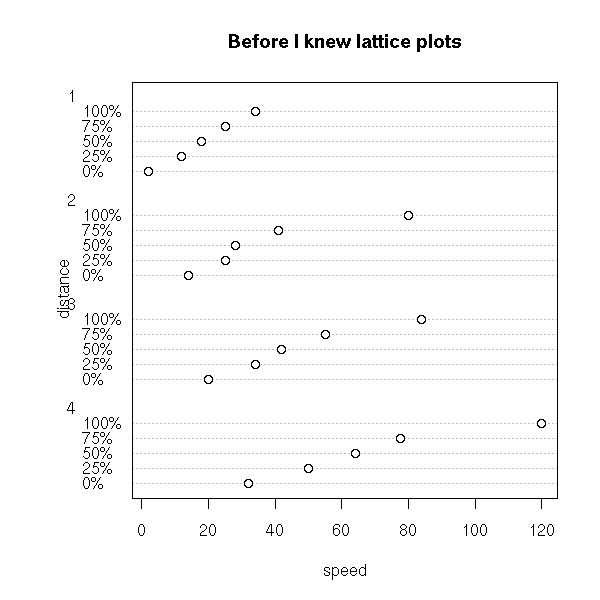

Quantitative bivariate data

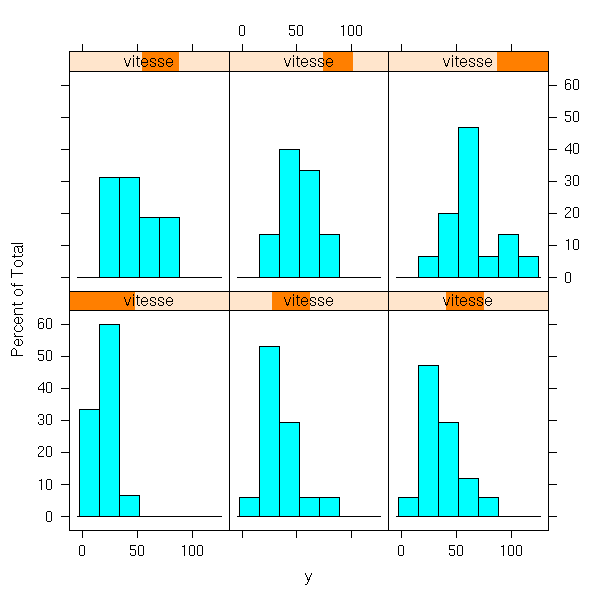

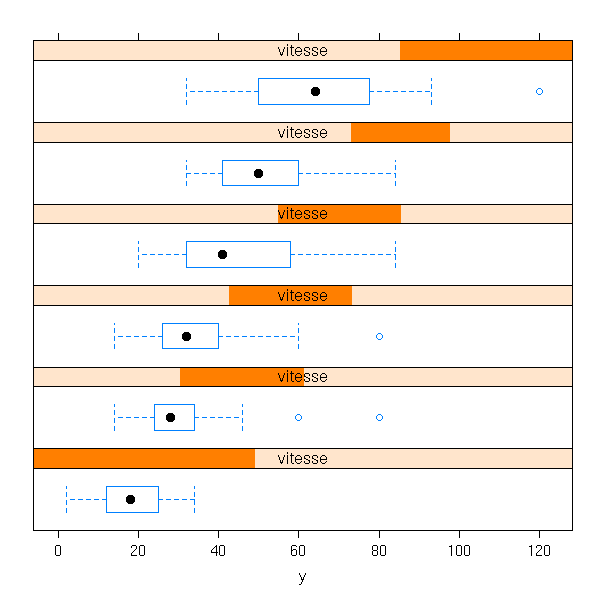

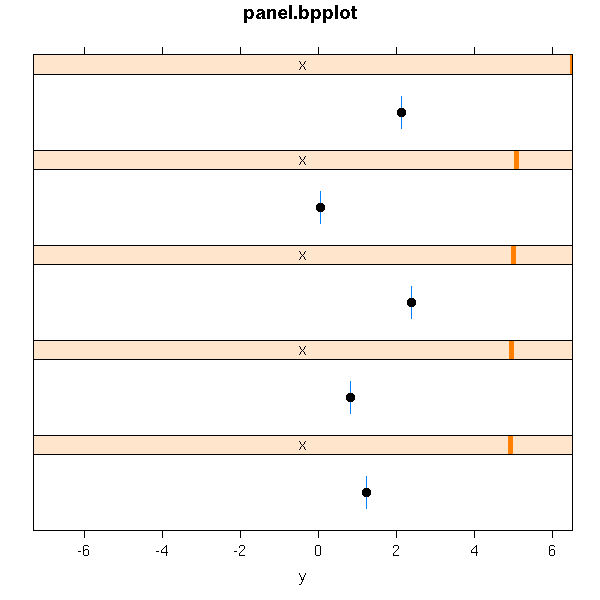

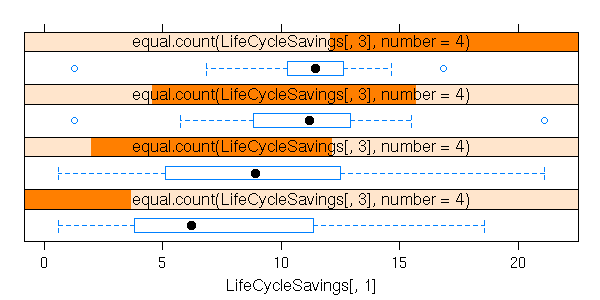

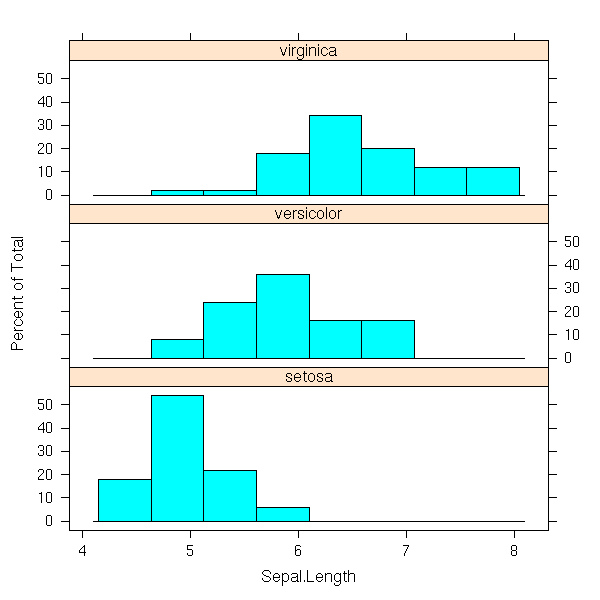

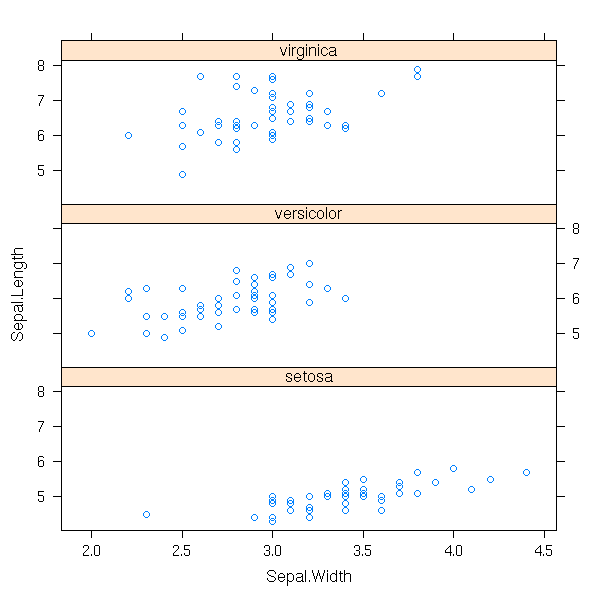

Qualitative/quantitative bivariate data

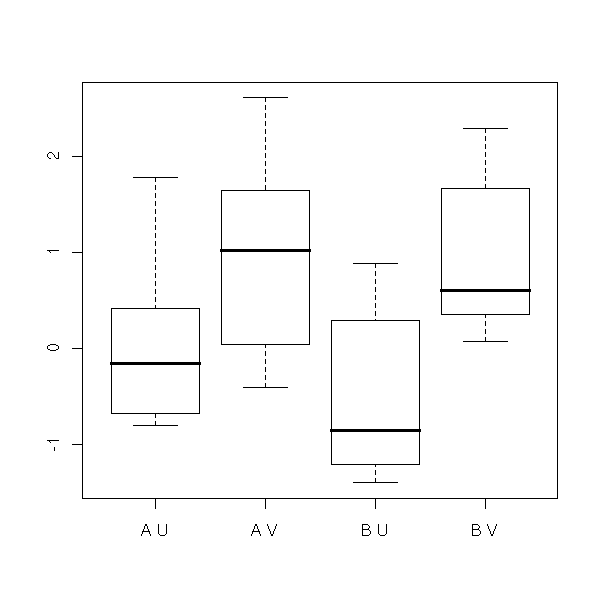

Qualitative bivariate data

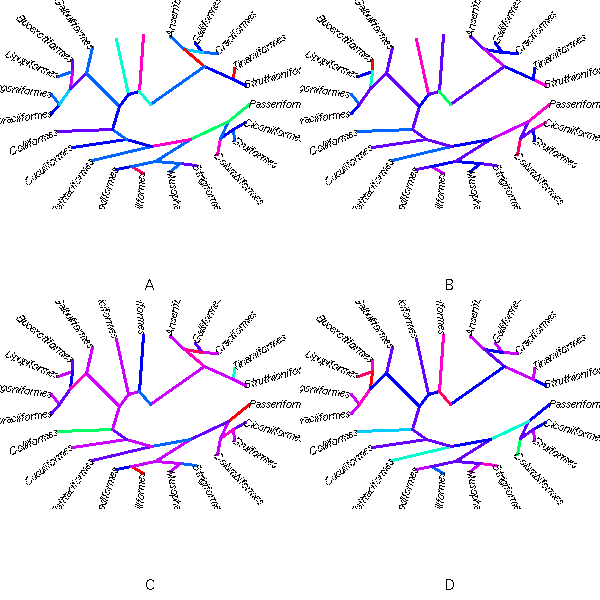

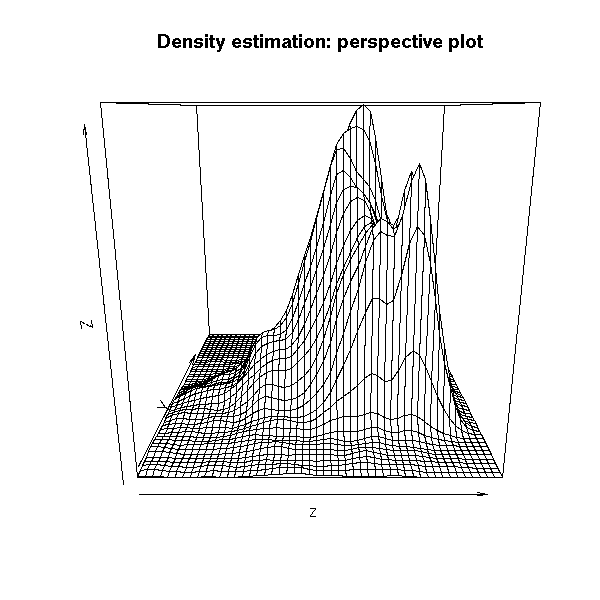

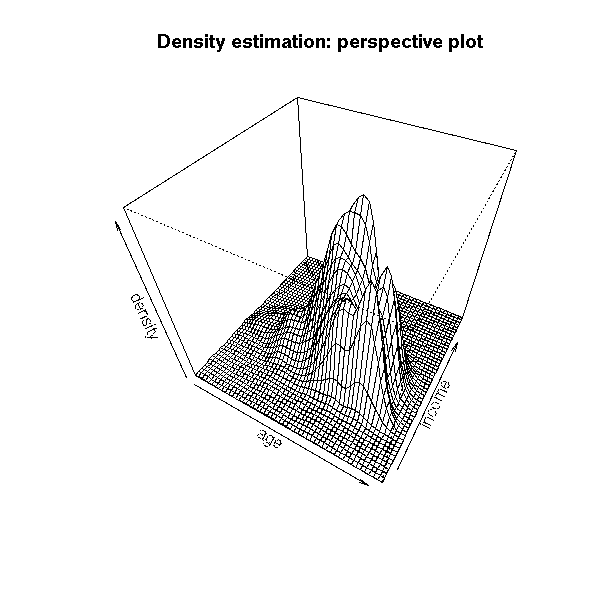

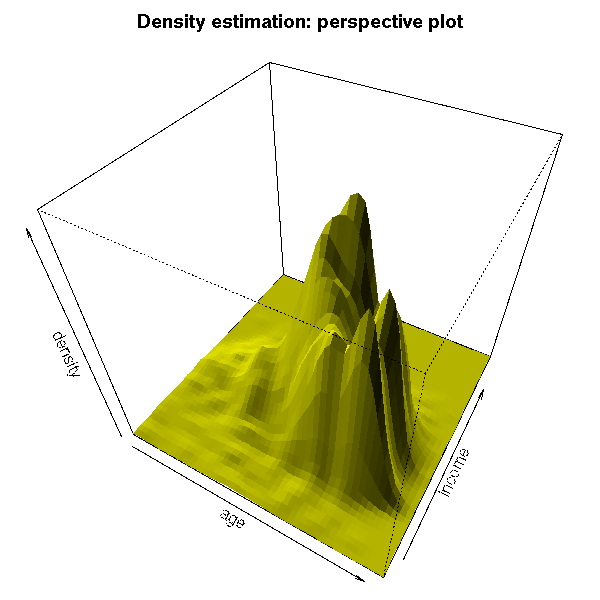

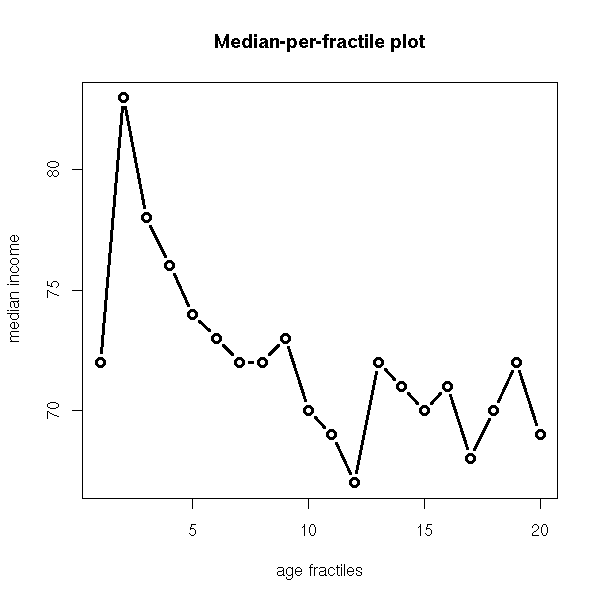

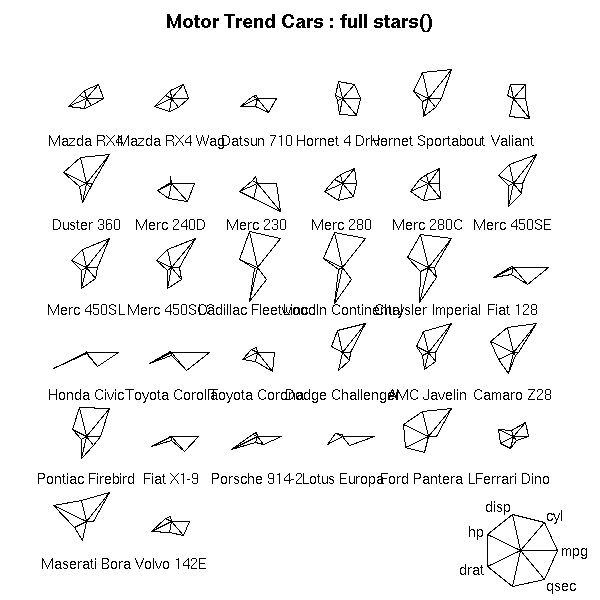

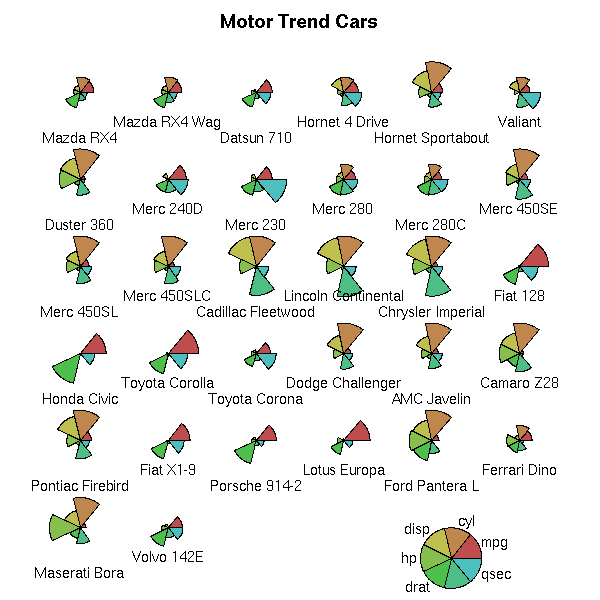

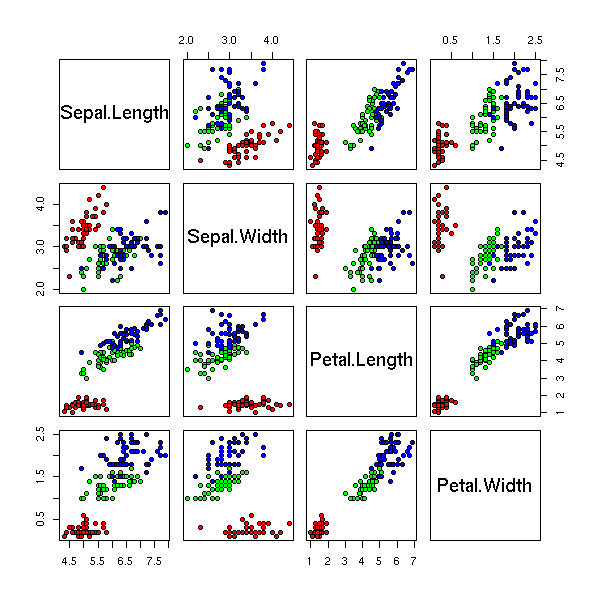

Three variables and more

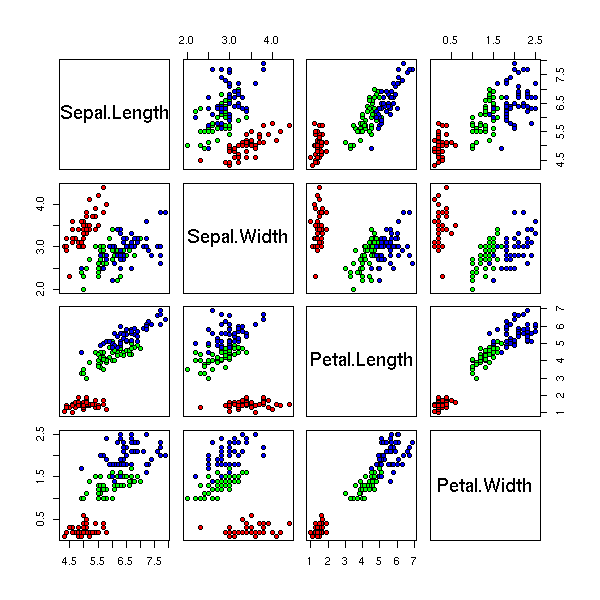

Multivariate data, with some qualitative variables

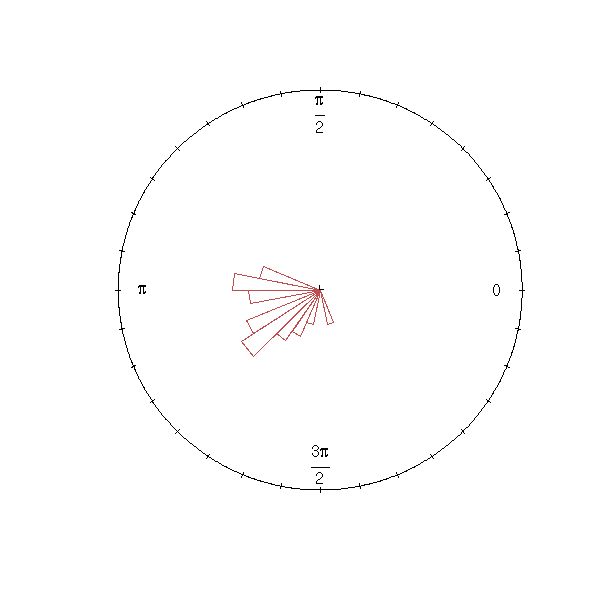

Fun

TO SORT

In this chapter, we explain how to turn data (heaps of numbers) into graphics, be they simple graphics for uni- or bi-variate data, or less straightforward ones, involving some linear algebra or non trivial algorithms.

Where does the data come from, in the first place? If you are being asked or are asking yourself, genuine questions, about real-world problems, you probably already have your data. On the other hand, if you want to teach you R, you will need some data to play with. Luckily, R comes with a wealth of data sets.

Here is one -- the data used in the cover art of this book: eruption time and time between eruptions for a Geyser.

> ?faithful

> data(faithful)

> faithful

eruptions waiting

1 3.600 79

2 1.800 54

3 3.333 74

...

270 4.417 90

271 1.817 46

272 4.467 74

> str(faithful)

`data.frame': 272 obs. of 2 variables:

$ eruptions: num 3.60 1.80 3.33 2.28 4.53 ...

$ waiting : num 79 54 74 62 85 55 88 85 51 85 ...

Each package usually comes with a few datasets, used in its examples. The "data" function lists (or loads) those datasets.

data(package='ts')

Data sets in package `ts':

AirPassengers Monthly Airline Passenger Numbers 1949-1960

austres Quarterly Time Series of the Number of

Australian Residents

beavers Body Temperature Series of Two Beavers

BJsales Sales Data with Leading Indicator.

EuStockMarkets Daily Closing Prices of Major European Stock

Indices, 1991-1998.

JohnsonJohnson Quarterly Earnings per Johnson & Johnson Share

LakeHuron Level of Lake Huron 1875--1972

lh Luteinizing Hormone in Blood Samples

lynx Annual Canadian Lynx trappings 1821--1934

Nile Flow of the River Nile

nottem Average Monthly Temperatures at Nottingham,

1920--1939

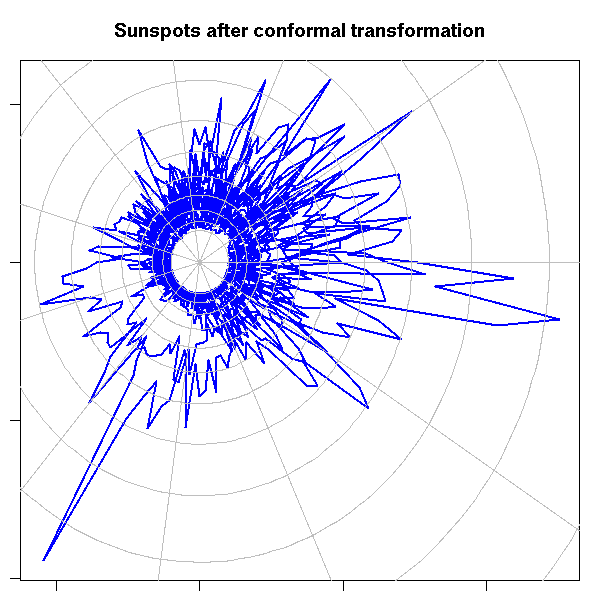

sunspot Yearly Sunspot Data, 1700--1988. Monthly

Sunspot Data, 1749--1997.

treering Yearly Treering Data, -6000--1979.

UKDriverDeaths Road Casualties in Great Britain 1969--84

UKLungDeaths Monthly Deaths from Lung Diseases in the UK

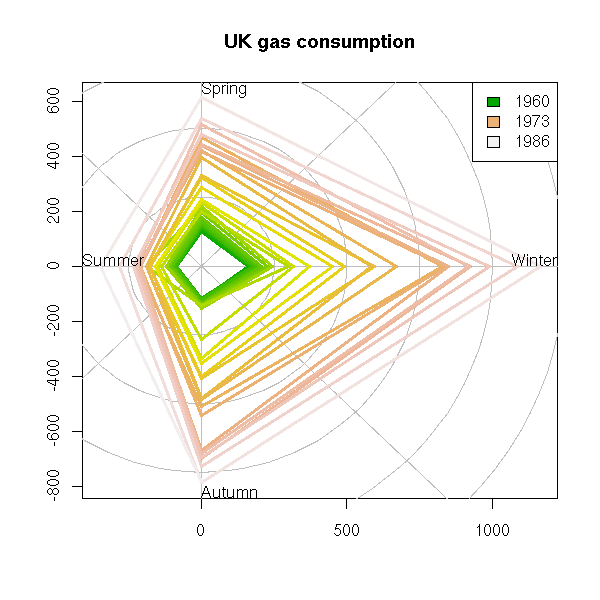

UKgas UK Quarterly Gas Consumption

USAccDeaths Accidental Deaths in the US 1973--1978

WWWusage Internet Usage per MinuteHere are the datasets from the "base" package:

Data sets in package `base':

Formaldehyde Determination of Formaldehyde concentration

HairEyeColor Hair and eye color of statistics students

InsectSprays Effectiveness of insect sprays

LifeCycleSavings Intercountry life-cycle savings data

OrchardSprays Potency of orchard sprays

PlantGrowth Results from an experiment on plant growth

Titanic Survival of passengers on the Titanic

ToothGrowth The effect of vitamin C on tooth growth in

guinea pigs

UCBAdmissions Student admissions at UC Berkeley

USArrests Violent crime statistics for the USA

USJudgeRatings Lawyers' ratings of state judges in the US

Superior Court

USPersonalExpenditure Personal expenditure data

VADeaths Death rates in Virginia (1940)

airmiles Passenger miles on US airlines 1937-1960

airquality New York Air Quality Measurements

anscombe Anscombe's quartet of regression data

attenu Joiner-Boore Attenuation Data

attitude Chatterjee-Price Attitude Data

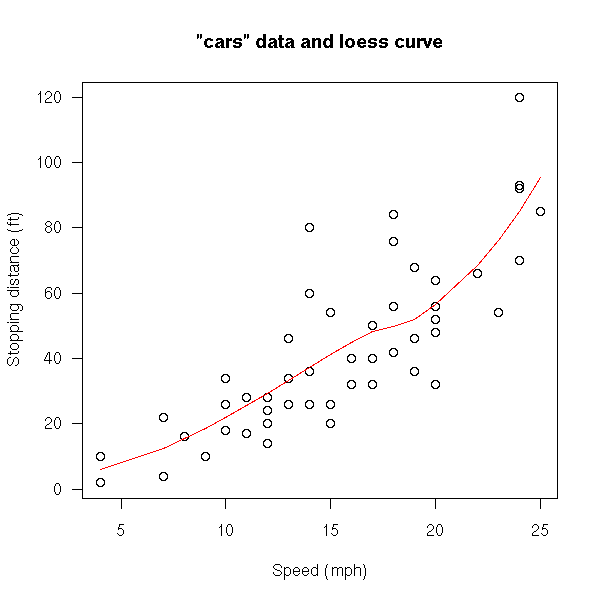

cars Speed and Stopping Distances for Cars

chickwts The Effect of Dietary Supplements on Chick

Weights

co2 Moana Loa Atmospheric CO2 Concentrations

discoveries Yearly Numbers of `Important' Discoveries

esoph (O)esophageal Cancer Case-control study

euro Conversion rates of Euro currencies

eurodist Distances between European Cities

faithful Old Faithful Geyser Data

freeny Freeny's Revenue Data

infert Secondary infertility matched case-control

study

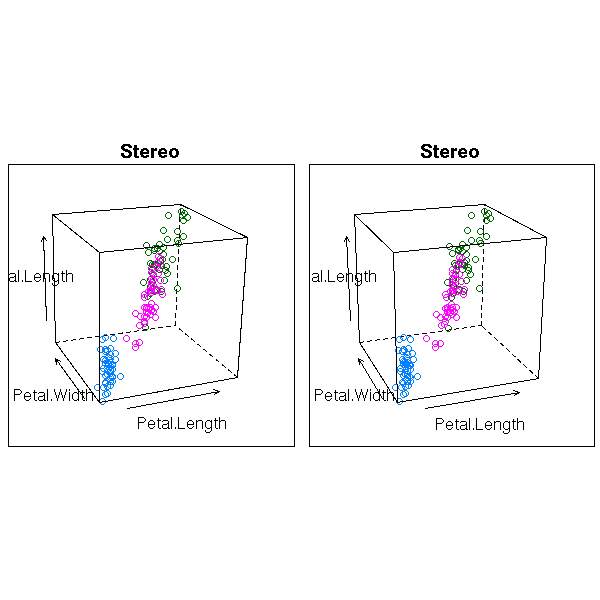

iris Edgar Anderson's Iris Data as data.frame

iris3 Edgar Anderson's Iris Data as 3-d array

islands World Landmass Areas

longley Longley's Economic Regression Data

morley Michaelson-Morley Speed of Light Data

mtcars Motor Trend Car Data

nhtemp Yearly Average Temperatures in New Haven CT

phones The Numbers of Telephones

precip Average Precipitation amounts for US Cities

presidents Quarterly Approval Ratings for US Presidents

pressure Vapour Pressure of Mercury as a Function of

Temperature

quakes Earthquake Locations and Magnitudes in the

Tonga Trench

randu Random Numbers produced by RANDU

rivers Lengths of Major Rivers in North America

sleep Student's Sleep Data

stackloss Brownlee's Stack Loss Plant Data

state US State Facts and Figures

sunspots Monthly Mean Relative Sunspot Numbers

swiss Swiss Demographic Data

trees Girth, Height and Volume for Black Cherry

Trees

uspop Populations Recorded by the US Census

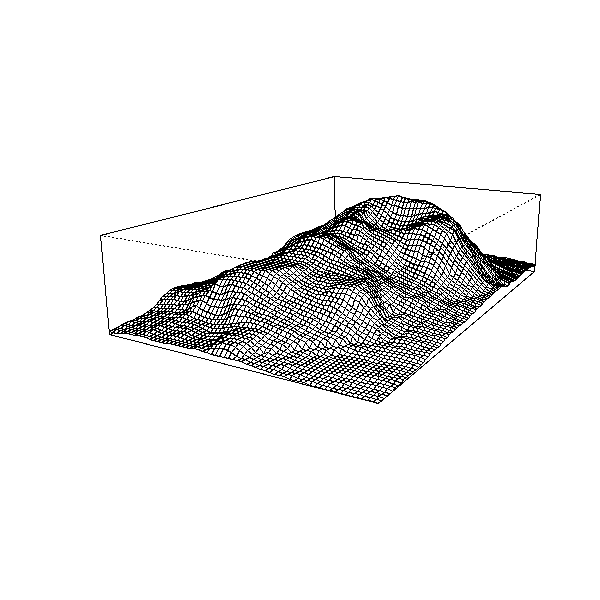

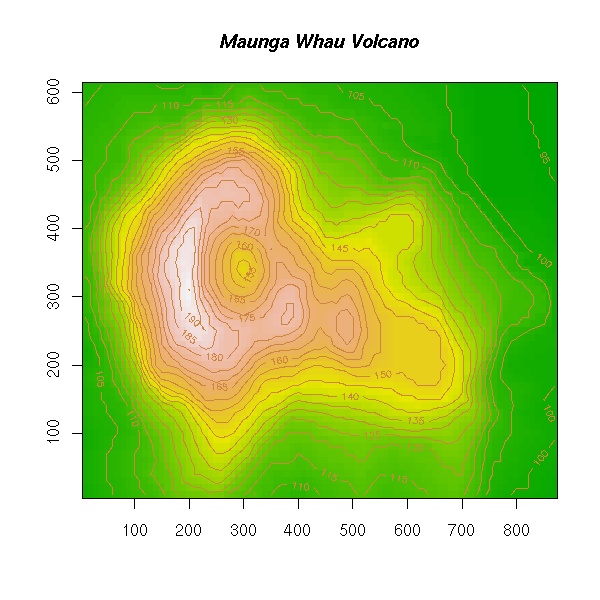

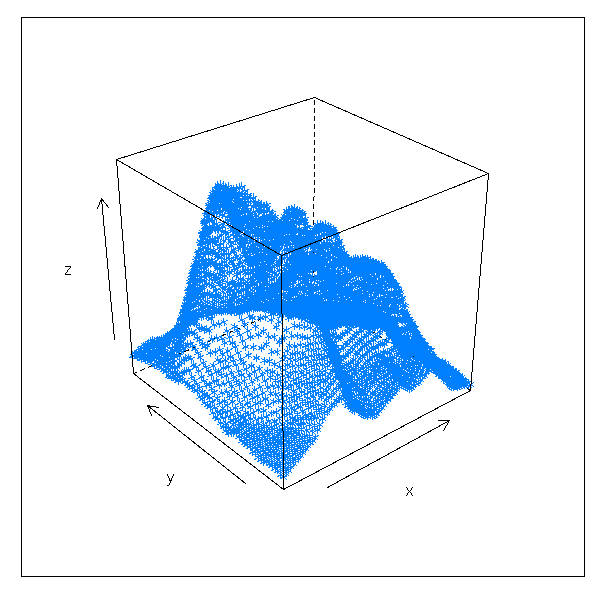

volcano Topographic Information on Auckland's Maunga

Whau Volcano

warpbreaks Breaks in Yarn during Weaving

women Heights and Weights of WomenThere are also many in the "MASS" package (that illustrates the book "Modern Applied Statistics with S" -- which I have never read).

data(package='MASS')

R even says:

Use `data(package = .packages(all.available = TRUE))' to list the data sets in all *available* packages.

It is "a bit" violent, but you can take ALL the example datasets and have them undergo some statistical operation. I did use such manipulations while writing those notes, in order to find an example satisfying some properties.

#!/bin/sh # This is not R code but (Bourne) shell code

cd /usr/lib/R/library/

for lib in *(/)

do

if [ -d $lib/data ]

then

(

cd $lib/data

echo 'library('$lib')'

ls

) | grep -vi 00Index | perl -p -e 's#(.*)\..*?$#data($1); doit($1)#'

fi

done > /tmp/ALL.RThe list of all the datasets:

doit <- function (...) {}

source("/tmp/ALL.R")

sink("str_data")

ls.str()

q()All the datasets with outliers:

doit <- function (d) {

name <- as.character(substitute(d))

cat(paste("Processing", name, "\n"))

if (!exists(name)) {

cat(paste(" Skipping (does not exist!)", name, "\n"))

} else if (is.vector(d)) {

doit2(d, name)

} else if (is.data.frame(d)) {

for (x in names(d)) {

cat(paste("Processing", name, "/", x, "\n"))

doit2(d[[x]], paste(name,"/",x))

}

} else

cat(paste(" Skipping (unknown reason)", substitute(d), "\n"))

}

doit2 <- function (x,n) {

if( is.numeric(x) )

really.do.it(x,n)

else

cat(" Skipping (non numeric)\n")

}

really.do.it <- function (x,name) {

x <- x[!is.na(x)]

m <- median(x)

i <- IQR(x)

n1 <- sum(x>m+1.5*i)

n2 <- sum(x<m-1.5*i)

n <- length(x)

p1 <- round(100*n1/n, digits=0)

p2 <- round(100*n2/n, digits=0)

if( n1+n2>0 ) {

boxplot(x, main=name)

cat(paste(" OK ", n1+n2, "/", n, " (",p1,"%, ",p2,"%)\n", sep=''))

}

}

source("ALL.R", echo=F)You can also use this to TEST your code -- more about tests and test-driven development (TDD) in the "Programing in R" chapter.

Statistical data is typically represented by a table, one row per observation, one column per variable. For instance, if you measure squirrels, you will have one row per squirrel, one column for the weight, another for the tail length, another for the height, another for the fur colour, etc.

Data are said to be univariate when there is only one variable (one column), bivariate when there are two, multivariate when there are more.

A variable is said to be quantitative (or numeric) when it contains numbers with which one can do arithmetic: for instance temperature (multiplication or addition of temperatures is not meaningful, but difference or mean is), but not flat number.

Otherwise, the variable is said to be qualitative: for instance, a yes/no answer, colors or postcodes.

There are sonetimes ordered qualitative variables, for instance, a variable whose values would be "never", "seldom", "sometimes", "often", "always". These data are sometimes obtained by binning quantitative data.

Here, we consider a single, numeric, statistical variable (typically some quantity (height, length, weight, etc.) measured on each subject of some experiment); the data usually comes as a vector. We shall often call this vector a "statistical series".

One can give a summary of such data with a few numbers: the mean, the minimum, the maximum, the median (to get it, sort the numbers and take the middle one), the quantiles (idem, but take the numbers a quarter from the beginning and a quarter from the end). Unsurprisingly, this is what the "summary" function gives us.

> summary(faithful$eruptions) Min. 1st Qu. Median Mean 3rd Qu. Max. 1.600 2.163 4.000 3.488 4.454 5.100 > mean(faithful$eruptions) [1] 3.487783

Always be critical when observing data: in particular, you should check that the extreme values are not aberrant, that they do not come from some mistake.

You can also check various dispersion measures such as the Median Absolute Deviation (MAD), the "standard deviation" or the Inter-Quartile Range (IQR).

> mad(faithful$eruptions) [1] 0.9510879 > sd(faithful$eruptions) [1] 1.141371 > IQR(faithful$eruptions) [1] 2.2915

But you might not be familiar with those notions: let us recall the links between the mean, variance, median and MAD. When you have a set of numbers x1,x2,...,xn, you can try to find the real number m that minimizes the sum

abs(m-x1) + abs(m-x2) + ... + abs(m-xn).

One can show that it is the median. You can also try to find the real number that minimizes the sum

(m-x1)^2 + (m-x2)^2 + ... + (m-xn)^2.

One can show that it is the mean. This property of the mean is called the "Least Squares Property".

Hence the following definition: the Variance of a statistical series x1,x2,...,xn is the mean of the squares deviations from the mean:

(m-x1)^2 + (m-x2)^2 + ... + (m-xn)^2

Var(x) = --------------------------------------

n

where m is the mean,(some books or software replace "n" by "n-1": we shall see why in a later chapter) the standard deviation is the square root of the variance, similarly, the Median Absolute Deviation (MAD) is the mean of the absolute values of the deviation from the median:

abs(x1-m) + ... + abs(xn-m)

MAD(x) = -----------------------------

n

where m is the median.At first, the relevance of that notion as a measure of dispersion was not obvious to me; why should we take the mean of the _squares_ of the deviations from the mean, why not simply the mean of the absolute value of those deviations? The preceeding definition of the mean provides one motivation of the notion of variance (or standard deviation). There are other motivations: for instance, the variance is easy to compute, iteratively, contrary to the MAD (it was important in the early days of the Computer Era, when computer power was very limited); another motivation is that the notion of variance is central in many theoretical results (Bienaime-Tchebychev inequality, etc. -- this is due to the good properties of the "square" function as opposed to the absolute value function -- the former is differentiable, not the latter).

But beware: the notions of mean and variance lose their relevance when the data is not symetric or when it contains many extreme values ("outliers", "aberrant values" or "fat tails").

On the contrary, the median, the Inter-Quartile Range (IQR) or the Median Absolute Deviation (MAD) are "robust" dispersion measures: they give good results, even on a non-gaussian sample, contrary to the mean or the standard deviation. (This notion of robustness is very important; robust methods used to be overlooked because they are more computer-intensive -- but this argument has become outdates.) Yet, if your data is gaussian, they are less precise. We shall come back and say more about this problem and others when we introduce the notion of "estimator".

TODO: Does this belong here or should it be in the "dimension reduction" section?

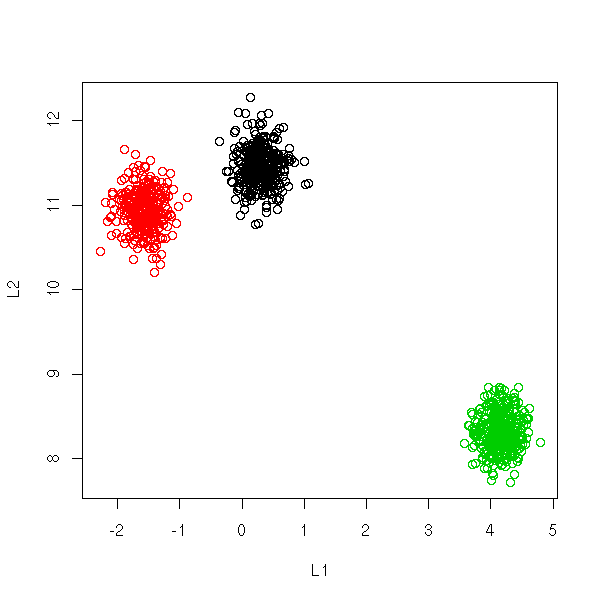

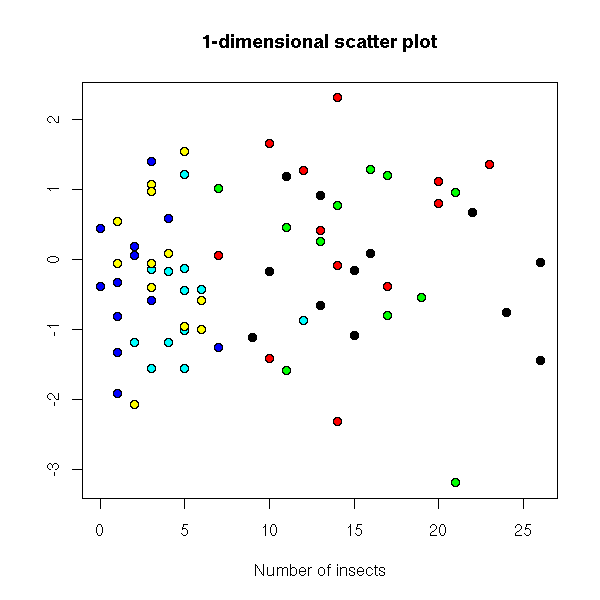

You can display high-dimensional datasets in the L1-L2 space: average value of the coordinates and standard deviation of the coordinates.

TODO: is this correct???

If the data were gaussian, the cloud of points should exhibit a linear shape.

If the data is a mixture of gaussians, if there are several clusters,

you should see several lines. (???)

n <- 1000

k <- 20

p <- 3

i <- sample(1:p, n, replace=TRUE)

x <- 10 * matrix(rnorm(p*k), nr=p, nc=k)

x <- x[i,] + matrix(rnorm(n*k), nr=n, nc=k)

L1L2 <- function (x) {

cbind(L1 = apply(x, 1, mean),

L2 = apply(x, 1, sd))

}

plot(L1L2(x), col=i)

This representation can be used to spot outliers.

This kind of representation is used in finance, where the coordinates are the "returns" of assets, at different points in time, and the axes are the average return (vertical axis) and the risk (horizontal axis).

TODO: A plot, with financial data. e.g., retrieve from Google the returns of half a dozen indices, say, FTSE100, CAC40, DAX, Nikkei225, DJIA.

On this plot, you can overlay the set of all possible (risk,return) pairs of portfolios built from those assets (a portfolio is simply a linear combination of assets): the frontier of that domain is called the efficient frontier.

TODO: plot it...

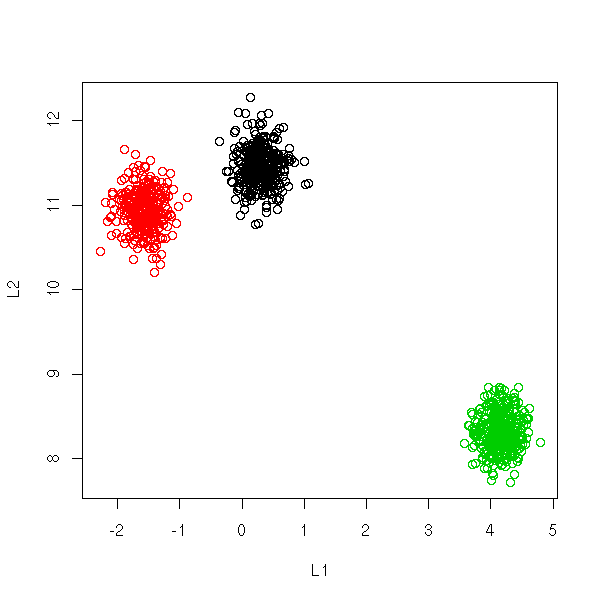

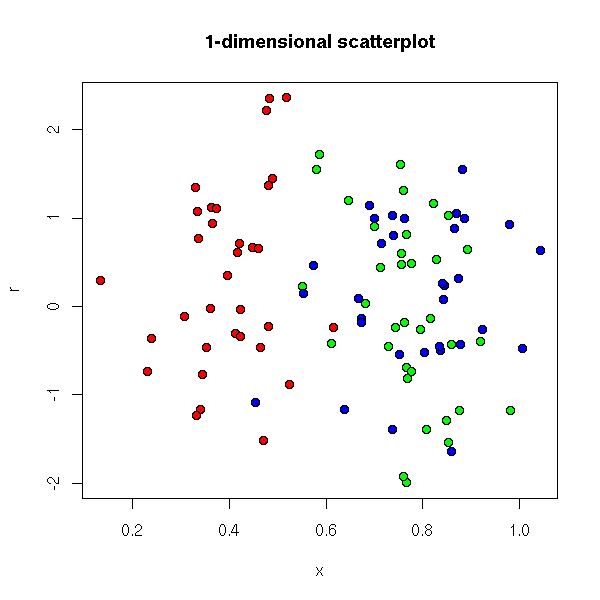

To compare two different statistical series that may not have the same unit we can rescale them so that their means be zero and their variance 1.

xn - m

yn = --------

s

where m is the mean of the series

and s its standard deviation.

x <- crabs$FL

y <- crabs$CL # The two vectors need not

# have the same length

op <- par(mfrow=c(2,1))

hist(x, col="light blue", xlim=c(0,50))

hist(y, col="light blue", xlim=c(0,50))

par(op)

op <- par(mfrow=c(2,1))

hist( (x - mean(x)) / sd(x),

col = "light blue",

xlim = c(-3, 3) )

hist( (y - mean(y)) / sd(y),

col = "light blue",

xlim = c(-3, 3) )

par(op)

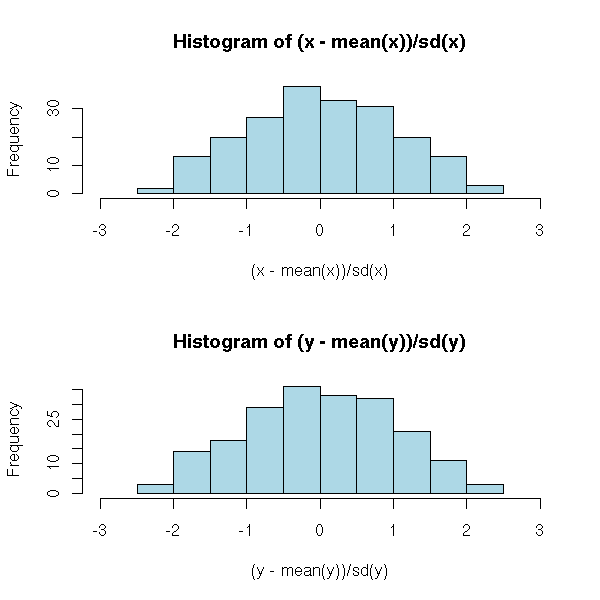

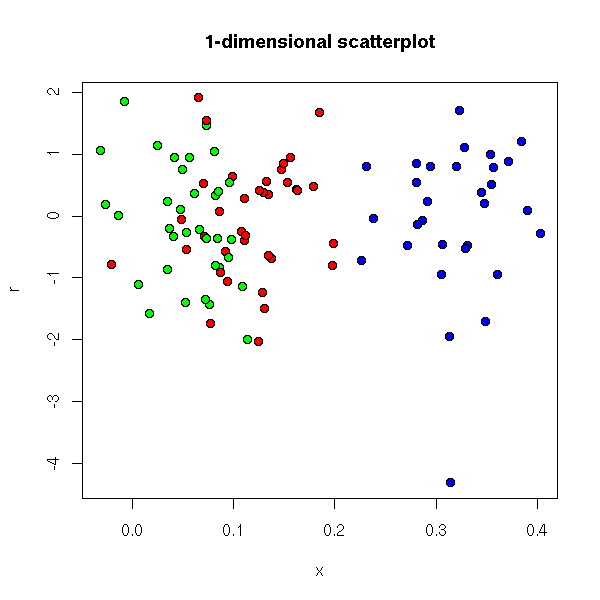

But beware: normalization will just rescale your data, it will not solve other problems. In particular, if your data are not gaussian (i.e., if the histogram is not "bell-shaped"), they will not become gaussian. Furthermore, the presence of even a single extreme value ("outlier") will change the value of the mean and the standard deviation and therefore change the scaling.

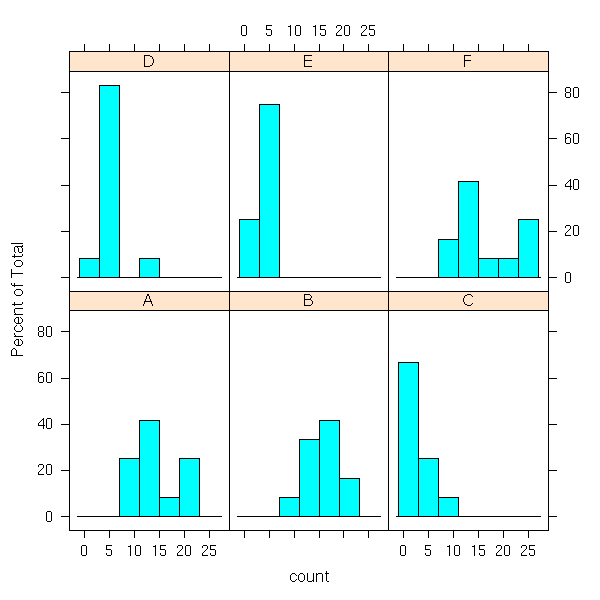

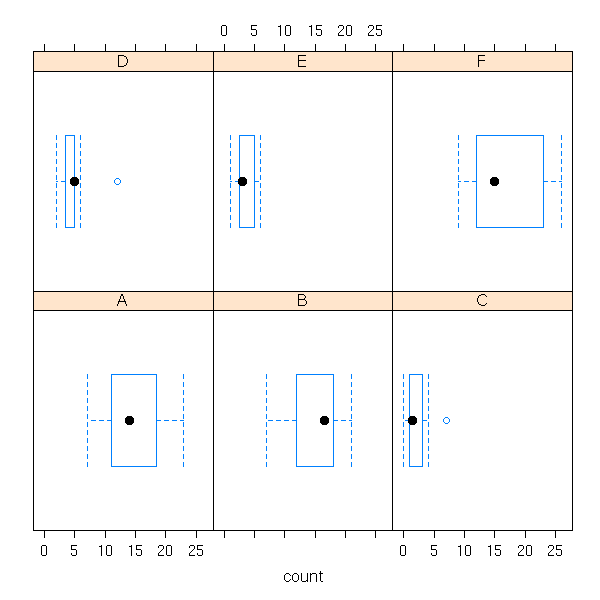

N <- 50 # Sample size

set.seed(2)

x1 <- runif(N) # Uniform distribution

x2 <- rt(N,2) # Fat-tailed distribution

x3 <- rexp(N) # Skewed distribution

x4 <- c(x2,20) # Outlier (not that uncommon,

# with fat-tailed distributions)

f <- function (x, ...) {

x <- (x - mean(x)) / sd(x)

N <- length(x)

hist( x,

col = "light blue",

xlim = c(-3, 3),

ylim = c(0, .8),

probability = TRUE,

...

)

lines(density(x),

col = "red", lwd = 3)

rug(x)

}

op <- par(mfrow=c(2,2))

f(x1, main = "Uniform distribution")

f(x2, main = "Fat-tailed distribution")

f(x3, main = "Skewed distribution")

f(x4, main = "As above, with one outlier")

par(op)

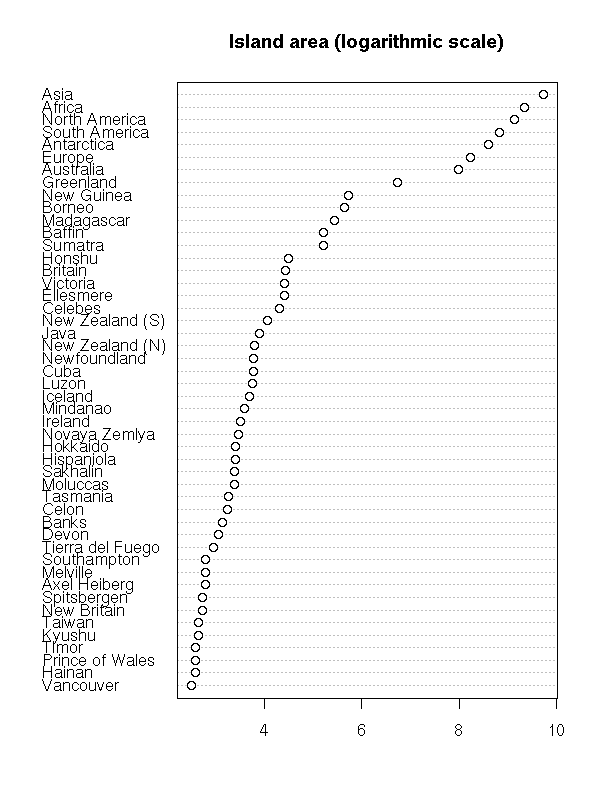

Sometimes, other transformations might make the distribution closer to a gaussian (i.e., bell-shaped) one. For instance, for skewed distributions, taking the logarithm or the square root is often a good idea (other sometimes used transformations include: power scales, arcsine, logit, probit, Fisher).

x <- read.csv("2006-08-27_pe.csv")

op <- par(mfrow=c(1,2))

plot(p ~ eps, data=x, main="Before")

plot(log(p) ~ log(eps), data=x, main="After")

par(op)

In some situations, other transformations are meaningful: power scales, arcsine, logit, probit, Fisher, etc.

Whatever the analysis you perform, it is very important to look at your data and to transform them if needed and possible.

f <- function (x, main, FUN) {

hist(x,

col = "light blue",

probability = TRUE,

main = paste(main, "(before)"),

xlab = "")

lines(density(x), col = "red", lwd = 3)

rug(x)

x <- FUN(x)

hist(x,

col = "light blue",

probability = TRUE,

main = paste(main, "(after)"),

xlab = "")

lines(density(x), col = "red", lwd = 3)

rug(x)

}

op <- par(mfrow=c(2,2))

f(x3,

main="Skewed distribution",

FUN = log)

f(x2,

main="Fat tailed distribution",

FUN = function (x) { # If you have an idea of the

# distribution followed by

# your variable, you can use

# that distribution to get a

# p-value (i.e., a number between

# 0 and 1: just apply the inverse

# of the cumulative distribution

# function -- in R, it is called

# the p-function of the

# distribution) then apply the

# gaussian cumulative distribution

# function (in R, it is called the

# quantile function or the

# q-function).

qnorm(pcauchy(x))

}

)

par(op)

If you really want your distribution to be bell-shaped, you can "forcefully normalize" it -- but bear in mind that this discards relevant information: for instance, if the distribution was bimodal, i.e., if it had the shape of two bells instead of one, that information will be lost.

uniformize <- function (x) { # This could be called

# "forceful uniformization".

# More about it when we introduce

# the notion of copula.

x <- rank(x,

na.last = "keep",

ties.method = "average")

n <- sum(!is.na(x))

x / (n + 1)

}

normalize <- function (x) {

qnorm(uniformize(x))

}

op <- par(mfrow=c(4,2))

f(x1, FUN = normalize, main = "Uniform distribution")

f(x3, FUN = normalize, main = "Skewed distribution")

f(x2, FUN = normalize, main = "Fat-tailed distribution")

f(x4, FUN = normalize, main = "Idem with one outlier")

par(op)

If you have skipped the last section, read this one. If you have not, skip to the next.

We have just seen that (for a centered statistical series, i.e., a series whose mean is zero), the variance is the mean of the squares of the values. One may replace the squares by another power: the k-th moment M_k of a series is the mean of its k-th powers. One can show (exercice) that:

mean = M_1 Variance = M_2 - M_1^2

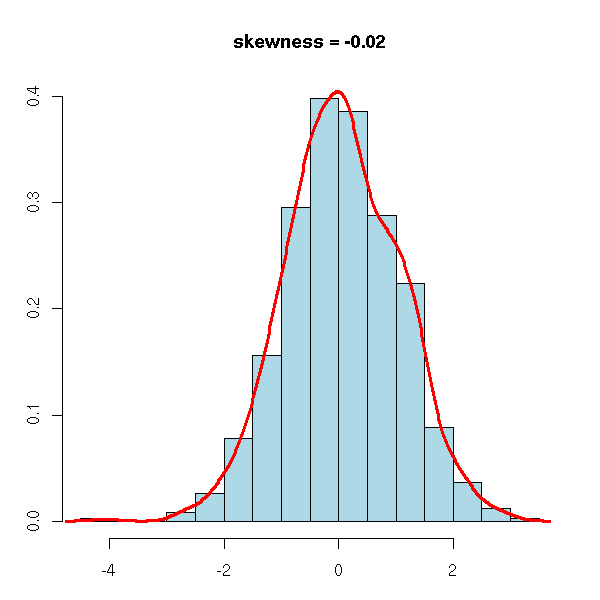

The third moment of a centered statistical series is called skewness. For a symetric series, it is zero. To check if a series is symetric and to quantify the departure from symetry, it suffices to compute the third moment of the normalized series.

library(e1071) # For the "skewness" and "kurtosis" functions

n <- 1000

x <- rnorm(n)

op <- par(mar=c(3,3,4,2)+.1)

hist(x, col="light blue", probability=TRUE,

main=paste("skewness =", round(skewness(x), digits=2)),

xlab="", ylab="")

lines(density(x), col="red", lwd=3)

par(op)

x <- rexp(n)

op <- par(mar=c(3,3,4,2)+.1)

hist(x, col="light blue", probability=TRUE,

main=paste("skewness =", round(skewness(x), digits=2)),

xlab="", ylab="")

lines(density(x), col="red", lwd=3)

par(op)

x <- -rexp(n)

op <- par(mar=c(3,3,4,2)+.1)

hist(x, col="light blue", probability=TRUE,

main=paste("skewness =", round(skewness(x), digits=2)),

xlab="", ylab="")

lines(density(x), col="red", lwd=3)

par(op)

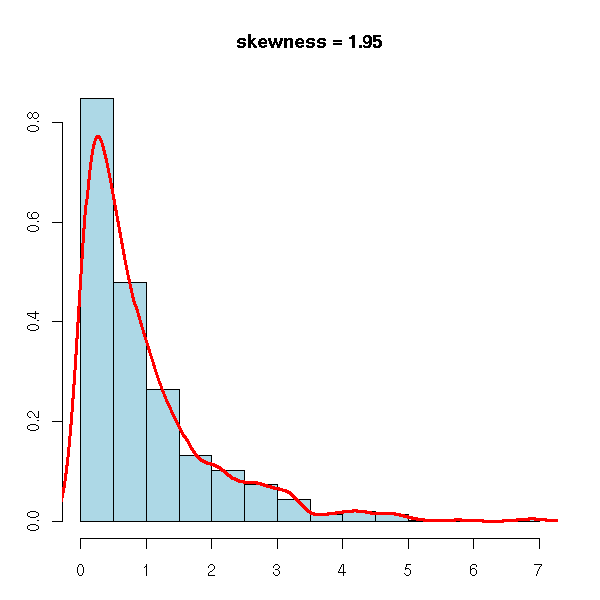

The fourth moment, tells if a series has fatter tails (i.e., more extreme values) than a gaussian distribution and quantifies the departure from gaussian-like tails. The fourth moment of a gaussian random variable is 3; one defines the kurtosis as the fourth moment minus 3, so that the kurtosis of a gaussian distribution be zero, that of a fat-tailed one be positive, that of a no-tail one be negative.

library(e1071) # For the "skewness" and "kurtosis" functions

n <- 1000

x <- rnorm(n)

qqnorm(x, main=paste("kurtosis =", round(kurtosis(x), digits=2),

"(gaussian)"))

qqline(x, col="red")

op <- par(fig=c(.02,.5,.5,.98), new=TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

set.seed(1)

x <- rt(n, df=4)

qqnorm(x, main=paste("kurtosis =", round(kurtosis(x), digits=2),

"(T, df=4)"))

qqline(x, col="red")

op <- par(fig=c(.02,.5,.5,.98), new=TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

x <- runif(n)

qqnorm(x, main=paste("kurtosis =", round(kurtosis(x), digits=2),

"(uniform)"))

qqline(x, col="red")

op <- par(fig=c(.02,.5,.5,.98), new=TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

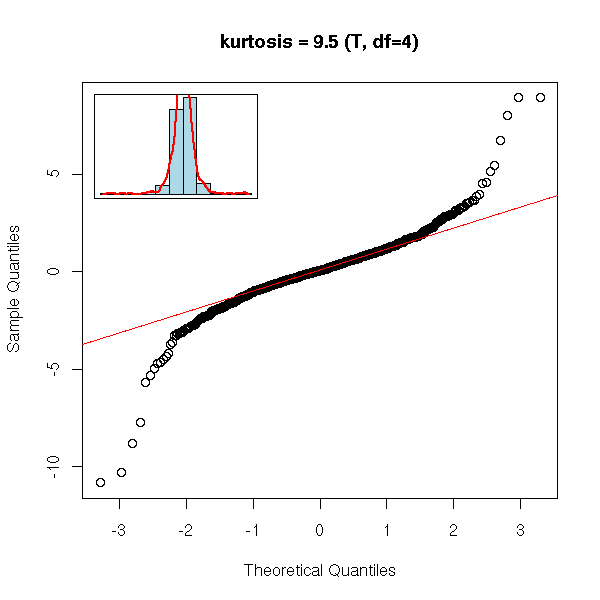

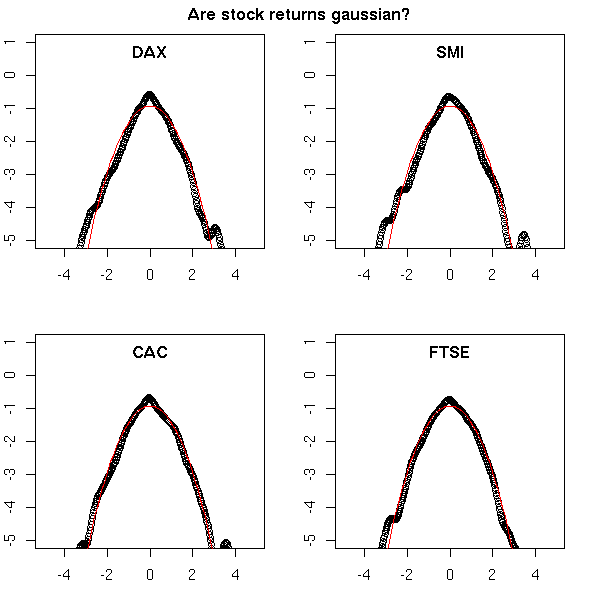

You stumble upon this notion, for instance, when you study financial data: we often assume that the data we study follow a gaussian distribution, but in finance (more precisely, with high-frequency (intra-day) financial data), this is not the case. The problem is all the more serious that the data exhibits an abnormal number of extreme values (outliers). To see it, we have estimated the density of the returns and we overlay this curve with the density of a gaussian distribution. The vertical axis is logarithmic.

You can notice two things: first, the distribution has a higher, narrower peak, second, there are more extreme values.

op <- par(mfrow=c(2,2), mar=c(3,2,2,2)+.1)

data(EuStockMarkets)

x <- EuStockMarkets

# We aren't interested in the spot prices, but in the returns

# return[i] = ( price[i] - price[i-1] ) / price[i-1]

y <- apply(x, 2, function (x) { diff(x)/x[-length(x)] })

# We normalize the data

z <- apply(y, 2, function (x) { (x-mean(x))/sd(x) })

for (i in 1:4) {

d <- density(z[,i])

plot(d$x,log(d$y),ylim=c(-5,1),xlim=c(-5,5))

curve(log(dnorm(x)),col='red',add=T)

mtext(colnames(x)[i], line=-1.5, font=2)

}

par(op)

mtext("Are stock returns gaussian?", line=3, font=2)

You can check this with a computation:

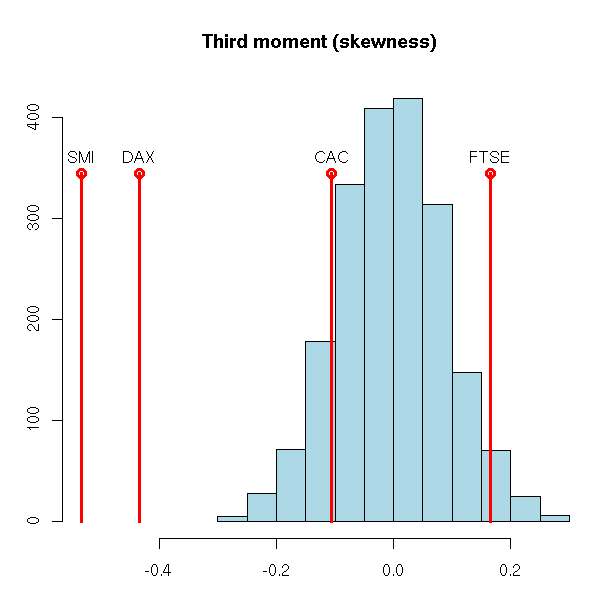

> apply(z^3,2,mean)

DAX SMI CAC FTSE

-0.4344056 -0.5325112 -0.1059855 0.1651614

> apply(z^4,2,mean)

DAX SMI CAC FTSE

8.579151 8.194598 5.265506 5.751968While a gaussian distribution would give 0 and 3.

> mean(rnorm(100000)^3) [1] -0.003451044 > mean(rnorm(100000)^4) [1] 3.016637

You can do several simulations (as we have just done) and look at the distribution of the resulting values: by comparison, are those that come from our data that large? (they were large, but were they significantly large?)

For the third moment, two values are extreme, but the two others look normal.

n <- dim(z)[1]

N <- 2000 # Two thousand samples of the same size

m <- matrix(rnorm(n*N), nc=N, nr=n)

a <- apply(m^3,2,mean)

b <- apply(z^3,2,mean)

op <- par(mar=c(3,3,4,1)+.1)

hist(a, col='light blue', xlim=range(c(a,b)),

main="Third moment (skewness)",

xlab="", ylab="")

h <- rep(.2*par("usr")[3] + .8*par("usr")[4], length(b))

points(b, h, type='h', col='red',lwd=3)

points(b, h, col='red', lwd=3)

text(b, h, names(b), pos=3)

par(op)

On the contrary, for the kurtosis, our values are really high.

n <- dim(z)[1]

N <- 2000

m <- matrix(rnorm(n*N), nc=N, nr=n)

a <- apply(m^4,2,mean) - 3

b <- apply(z^4,2,mean) - 3

op <- par(mar=c(3,3,4,1)+.1)

hist(a, col='light blue', xlim=range(c(a,b)),

main="Expected kurtosis distribution and observed values",

xlab="", ylab="")

h <- rep(.2*par("usr")[3] + .8*par("usr")[4], length(b))

points(b, h, type='h', col='red',lwd=3)

points(b, h, col='red', lwd=3)

text(b, h, names(b), pos=3)

par(op)

We shall see again, later, that kind of measurement of departure from gaussianity -- the computation we just made can be called a "parametric bootstrap p-value computation".

For more moments, see the "moments" packages and, later in this document, the Method of Moments Estimators (MME) and the Generalized Method of Moments (GMM).

Moments allow you to spot non-gaussian features in your data, but they are very imprecise (they have a large variance) and are very sensitive to outliers -- simply because they are defined with powers, that amplify those problems.

One can define similar quantities without any power, with a simple linear combination of order statistics.

If X_{k:n} is the k-th element of a sample of n observations of the distribution you are studying, then the L-moments are

L1 = E[ X_{1:1} ]

L2 = 1/2 E[ X_{2:2} - X{1:2} ]

L3 = 1/3 E[ X_{3:3} - 2 X{2:3} + X{1:3} ]

L4 = 1/4 E[ X_{4:4} - 3 X{3:4} + 3 X{2:4} - X{1:4} ]

...L1 is the usual mean; L2 is a measure of dispersion: the average distance between two observations; L3 is a measure of asymetry, similar to the skewness; L4 is a measure of tail thickness, similar to the kurtosis.

data(EuStockMarkets)

x <- EuStockMarkets

y <- apply(x, 2, function (x) { diff(x)/x[-length(x)] })

library(lmomco)

n <- dim(z)[1]

N <- 200

m <- matrix(rnorm(n*N), nc=N, nr=n)

# We normalize the data in the same way

f <- function (x) {

r <- lmom.ub(x)

(x - r$L1) / r$L2

}

z <- apply(y, 2, f)

m <- apply(m, 2, f)

a <- apply(m, 2, function (x) lmom.ub(x)$TAU3)

b <- apply(z, 2, function (x) lmom.ub(x)$TAU3)

op <- par(mar=c(3,3,4,1)+.1)

hist(a, col='light blue', xlim=range(c(a,b)),

main="Expected L-skewness distribution and observed values",

xlab="", ylab="")

h <- rep(.2*par("usr")[3] + .8*par("usr")[4], length(b))

points(b, h, type='h', col='red',lwd=3)

points(b, h, col='red', lwd=3)

text(b, h, names(b), pos=3)

par(op)

a <- apply(m, 2, function (x) lmom.ub(x)$TAU4)

b <- apply(z, 2, function (x) lmom.ub(x)$TAU4)

op <- par(mar=c(3,3,4,1)+.1)

hist(a, col='light blue', xlim=range(c(a,b)),

main="Expected L-kurtosis distribution and observed values",

xlab="", ylab="")

h <- rep(.2*par("usr")[3] + .8*par("usr")[4], length(b))

points(b, h, type='h', col='red',lwd=3)

points(b, h, col='red', lwd=3)

text(b, h, names(b), pos=3)

par(op)

We can see the data we are studying as an untidy bunch of numbers, in which we cannot see anything (that is why you will often see me using the "str" command that only displays the beginning of the data: displaying everything would not be enlightening).

There is a simple way of seeing someting in that bunch of numbers: just sort them. That is better, but we still have hundreds of numbers, we still do not see anything.

> str( sort(faithful$eruptions) ) num [1:272] 1.60 1.67 1.70 1.73 1.75 ...

In those ordered numbers, you may remark that the first two digits are often the same. Furthermore, after those two digits, there is only one left. Thus, we can put them in several classes (or "bins") according to the first two digits and write, on the bin, the remaining digit. This is called a "stem-and-leaf plot". It is just an orderly way of writing down our bunch of number (we have not summurized the data yet, we have not discarded any information, any number).

> stem(faithful$eruptions) The decimal point is 1 digit(s) to the left of the | 16 | 070355555588 18 | 000022233333335577777777888822335777888 20 | 00002223378800035778 22 | 0002335578023578 24 | 00228 26 | 23 28 | 080 30 | 7 32 | 2337 34 | 250077 36 | 0000823577 38 | 2333335582225577 40 | 0000003357788888002233555577778 42 | 03335555778800233333555577778 44 | 02222335557780000000023333357778888 46 | 0000233357700000023578 48 | 00000022335800333 50 | 0370

We could also do that by hand (before the advent of computers, people used to do that by hand -- actually, it is no longer used).

http://www.shodor.org/interactivate/discussions/steml.html http://davidmlane.com/hyperstat/A28117.html http://www.google.fr/search?q=stem-and-leaf&ie=UTF-8&oe=UTF-8&hl=fr&btnG=Recherche+Google&meta=

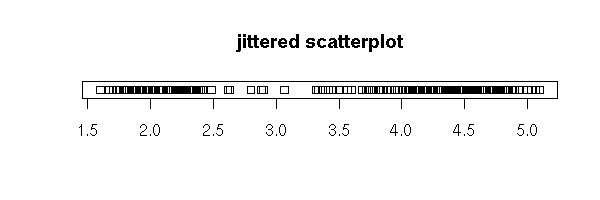

One can graphically represent a univariate series by putting the data on an axis.

data(faithful) stripchart(faithful$eruptions, main="The \"stripchart\" function")

Yet, if there are many data, or if there are several observations with the same value, the resulting graph is not very readable. We can add some noise to that the points do not end up on top of one another.

# Only horizontal noise

stripchart(faithful$eruptions, jitter=TRUE,

main="jittered scatterplot")

stripchart(faithful$eruptions, method='jitter',

main="jittered scatterplot")

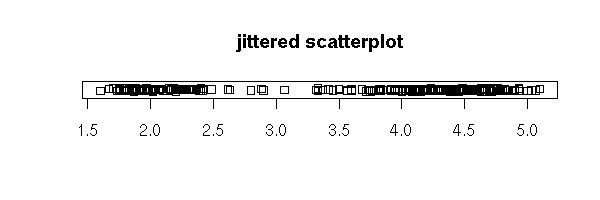

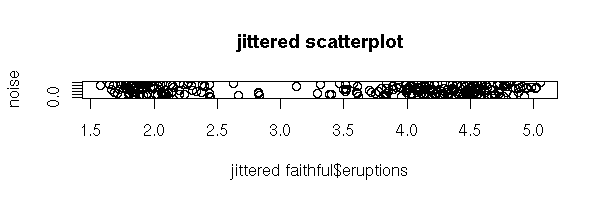

Exercise: to familiarize yourself with the "rnorm" command (and a few others), try to do that yourself.

my.jittered.stripchart <- function (x) {

x.name <- deparse(substitute(x))

n <- length(x)

plot( runif(n) ~ x, xlab=x.name, ylab='noise',

main="jittered scatterplot" )

}

my.jittered.stripchart(faithful$eruptions)

my.jittered.stripchart <- function (x) {

x.name <- deparse(substitute(x))

n <- length(x)

x <- x + diff(range(x))*.05* (-.5+runif(n))

plot( runif(n) ~ x,

xlab=paste("jittered", x.name), ylab='noise',

main="jittered scatterplot" )

}

my.jittered.stripchart(faithful$eruptions)

You can also plot the sorted data:

op <- par(mar=c(3,4,2,2)+.1)

plot( sort( faithful$eruptions ),

xlab = ""

)

par(op)

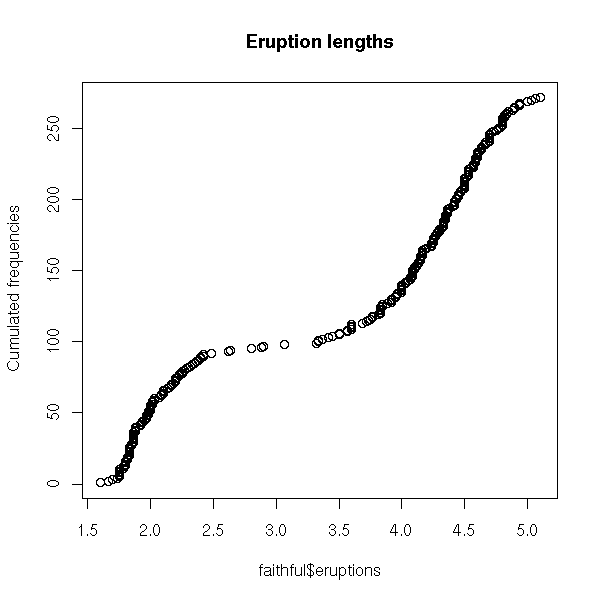

The two horizontal parts correspond to the two peaks of the histogram, to the two modes of the distribution.

Actually, it is just a scatter plot with an added dimension. (The "rug" function adds a scatter plot along an axis.)

op <- par(mar=c(3,4,2,2)+.1) plot(sort(faithful$eruptions), xlab="") rug(faithful$eruptions, side=2) par(op)

It also helps to see that the data is discrete -- in a scatter plot with no added noise (no jitter), you would not see it).

op <- par(mar=c(3,4,2,2)+.1) x <- round( rnorm(100), digits=1 ) plot(sort(x)) rug(jitter(x), side=2) par(op)

You can also plot the cumulated frequencies (this plot is symetric to the previous one).

cumulated.frequencies <- function (x, main="") {

x.name <- deparse(substitute(x))

n <- length(x)

plot( 1:n ~ sort(x),

xlab = x.name,

ylab = 'Cumulated frequencies',

main = main

)

}

cumulated.frequencies(faithful$eruptions,

main = "Eruption lengths")

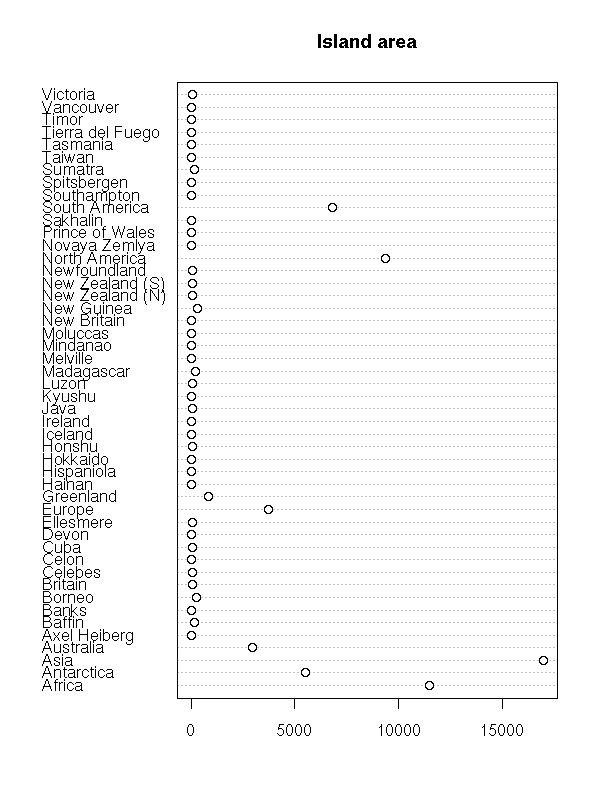

In some cases, the observations (the subjects) are named: we can add the names to the plot (it is the same plot as above, unsorted and rotated by 90 degrees).

data(islands) dotchart(islands, main="Island area")

dotchart(sort(log(islands)),

main="Island area (logarithmic scale)")

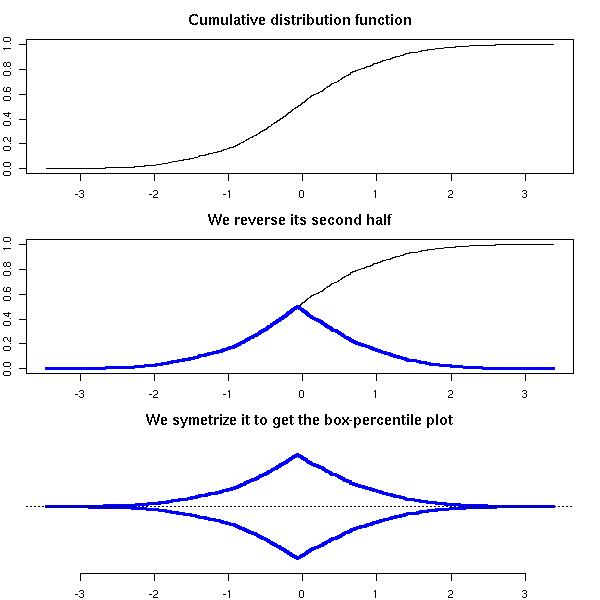

From a theoretical point of view, the cumulative distribution curve is very important, even if its interpretation deos not spring to the eyes. In the following examples, we present the cumulative distribution plot of several distributions.

op <- par(mfcol=c(2,4), mar=c(2,2,1,1)+.1)

do.it <- function (x) {

hist(x, probability=T, col='light blue',

xlab="", ylab="", main="", axes=F)

axis(1)

lines(density(x), col='red', lwd=3)

x <- sort(x)

q <- ppoints(length(x))

plot(q~x, type='l',

xlab="", ylab="", main="")

abline(h=c(.25,.5,.75), lty=3, lwd=3, col='blue')

}

n <- 200

do.it(rnorm(n))

do.it(rlnorm(n))

do.it(-rlnorm(n))

do.it(rnorm(n, c(-5,5)))

par(op)

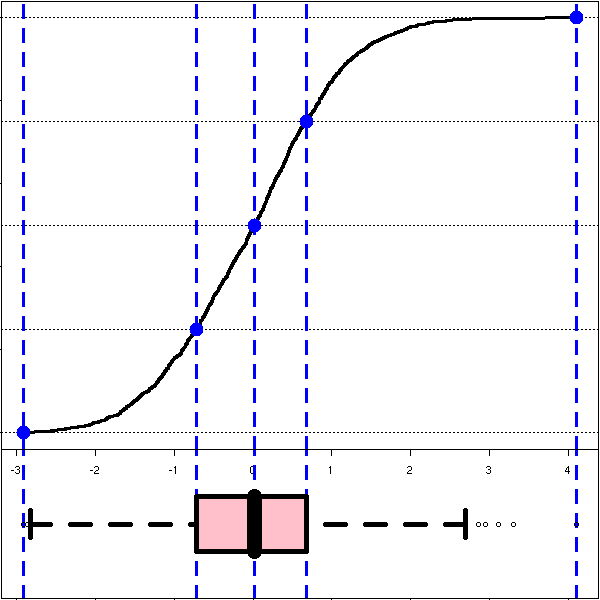

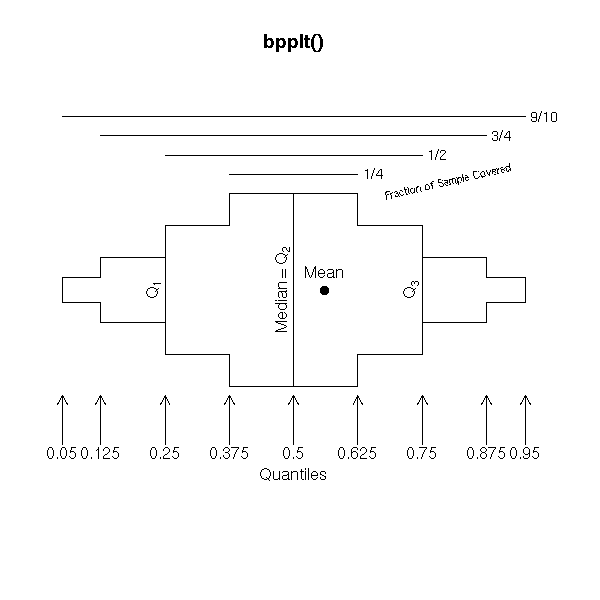

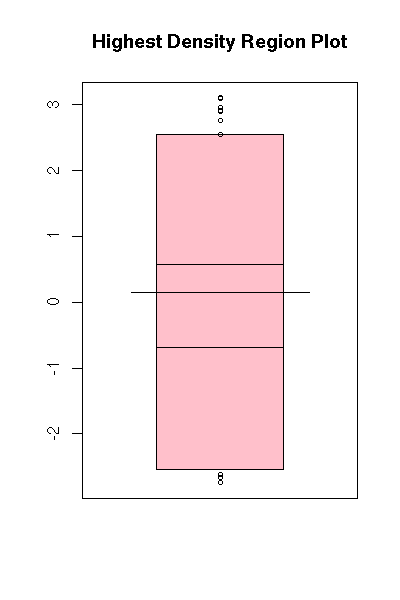

The box-and-whiskers plots are a simplified view of the cumulative distribution plot: they just contain the quartiles, i.e., the intersections with the horizontal dotted blue lines above.

N <- 2000

x <- rnorm(N)

op <- par(mar=c(0,0,0,0), oma=c(0,0,0,0)+.1)

layout(matrix(c(1,1,1,2), nc=1))

y <- ppoints( length(x) )

plot(sort(x), y, type="l", lwd=3,

xlab="", ylab="", main="")

abline(h=c(0,.25,.5,.75,1), lty=3)

abline(v = quantile(x), col = "blue", lwd = 3, lty=2)

points(quantile(x), c(0,.25,.5,.75,1), lwd=10, col="blue")

boxplot(x, horizontal = TRUE, col = "pink", lwd=5)

abline(v = quantile(x), col = "blue", lwd = 3, lty=2)

par(new=T)

boxplot(x, horizontal = TRUE, col = "pink", lwd=5)

par(op)

TODO: Check the vocabulary I have used: "empirical cumulative distribution function" State the (much more technical) use of this ECDF to devise a test comparing two distributions.

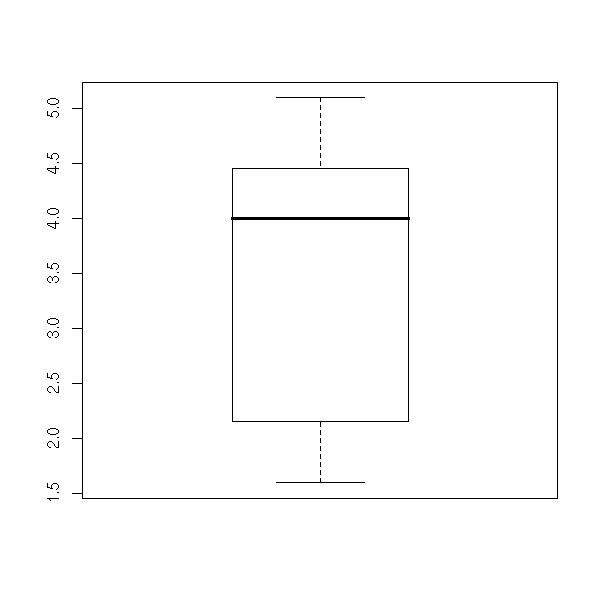

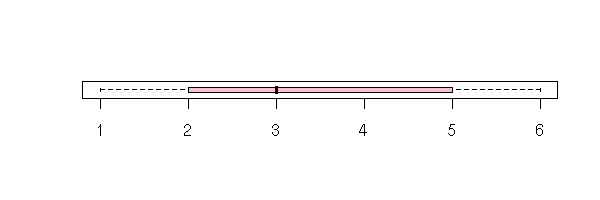

A box-and-whiskers plot is a graphical representation of the 5 quartiles (minimum, first quartile, median, third quartile, maximum).

boxplot(faithful$eruptions, range=0)

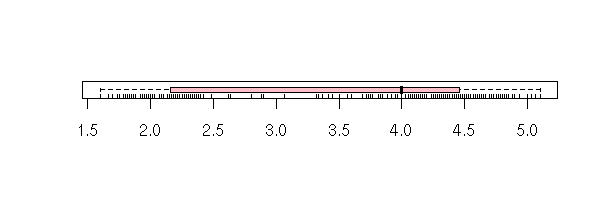

The name of this plot is more understandable if it is drawn horizontally.

boxplot(faithful$eruptions, range=0, horizontal=TRUE)

On this example, we can clearly see that the data are not symetric: thus, we know that it would be a bad idea to apply statistical procedures that assume they are symetric -- or even, normal.

This is one of the main uses of this kind of plot: assessing the symetry of your data.

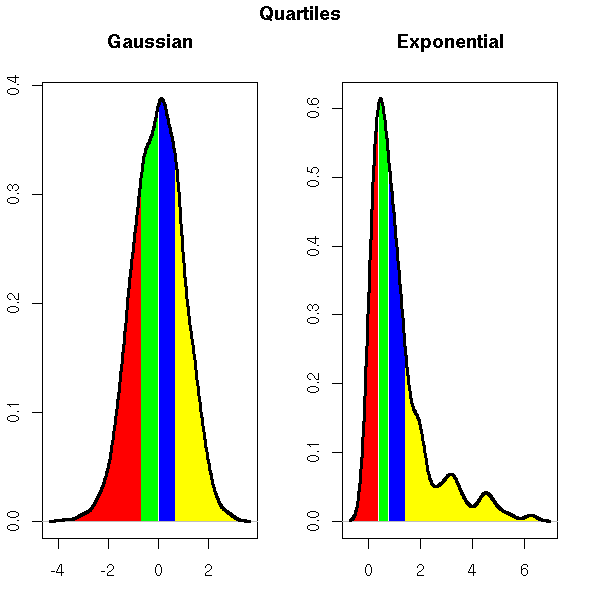

This graphical representation of the quartiles is simpler and more directly understandable than the following, in terms of area.

op <- par(mfrow=c(1,2), mar=c(3,2,4,2)+.1)

do.it <- function (x, xlab="", ylab="", main="") {

d <- density(x)

plot(d, type='l', xlab=xlab, ylab=ylab, main=main)

q <- quantile(x)

do.it <- function (i, col) {

x <- d$x[i]

y <- d$y[i]

polygon( c(x,rev(x)), c(rep(0,length(x)),rev(y)), border=NA, col=col )

}

do.it(d$x <= q[2], 'red')

do.it(q[2] <= d$x & d$x <= q[3], 'green')

do.it(q[3] <= d$x & d$x <= q[4], 'blue')

do.it(d$x >= q[4], 'yellow')

lines(d, lwd=3)

}

do.it( rnorm(2000), main="Gaussian" )

do.it( rexp(200), main="Exponential" )

par(op)

mtext("Quartiles", side=3, line=3, font=2, cex=1.2)

(In this example, the four areas are equal; this highlights the often-claimed fact that the human eye cannot compare areas.)

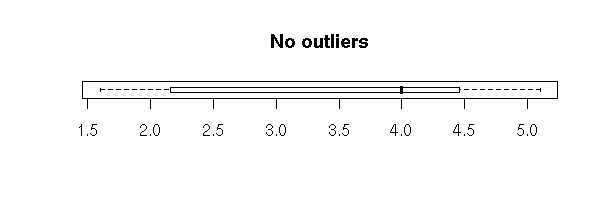

Without the "range=0" option, the plot also underlines the presence of outliers, i.e., points far away from the median (beyond 1.5 times the InterQuartile Range (IQR)). In this example, there are no outliers.

boxplot(faithful$eruptions, horizontal = TRUE,

main = "No outliers")

In some cases, these "outliers" are perfectly normal.

# There are outliers, they might bring trouble,

# but it is normal, it is not pathological

boxplot(rnorm(500), horizontal = TRUE,

main = "Normal outliers")

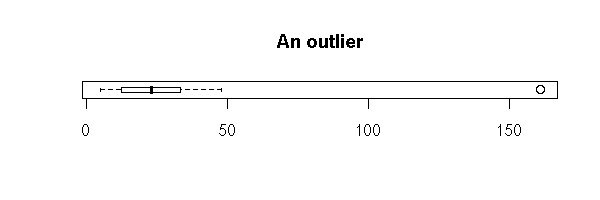

If there are only a few outliers, really isolated, they might be errors -- yes, in the real life, the data is "dirty"...

x <- c(rnorm(30),20)

x <- sample(x, length(x))

boxplot( x, horizontal = TRUE,

main = "An outlier" )

library(boot)

data(aml)

boxplot( aml$time, horizontal = TRUE,

main = "An outlier" )

They can also be the sign that the distribution is not gaussian at all.

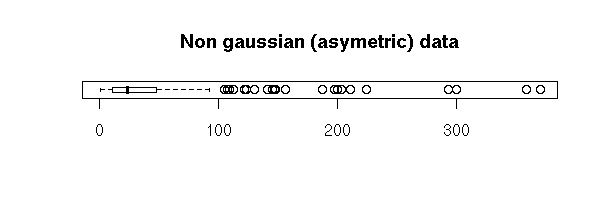

data(attenu)

boxplot(attenu$dist, horizontal = TRUE,

main = "Non gaussian (asymetric) data")

Then, we usually transform the data, by applying a simple and well-chosen function, so that it becomes gaussian (more about this later).

data(attenu)

boxplot(log(attenu$dist), horizontal = TRUE,

main = "Transformed variable")

Outliers _are_ troublesome, because many statistical procedures are sensitive to them (mean, standard deviation, regression, etc.).

> x <- aml$time > summary(x) Min. 1st Qu. Median Mean 3rd Qu. Max. 5.00 12.50 23.00 29.48 33.50 161.00 > y <- x[x<160] > summary(y) Min. 1st Qu. Median Mean 3rd Qu. Max. 5.00 12.25 23.00 23.50 32.50 48.00

This was a second use of box-and-whiskers plots: spotting outliers. Their presence may be perfectly normal (but you must beware that they might bias later computations -- unless you choose robust algorithms); they may be due to errors, that are to be corrected; they may also reveal that the distribution is not gaussian and naturally contains many outliers ("fat tails" -- more about this later, when we mention the "extreme distributions" and high-frequency (intra-day) financial data).

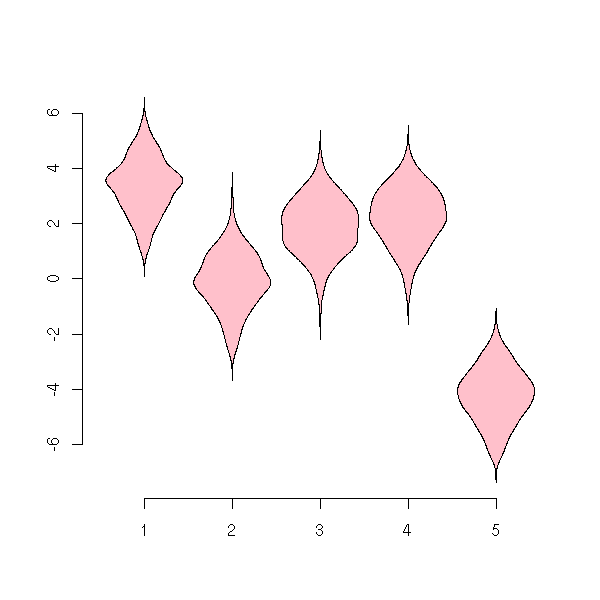

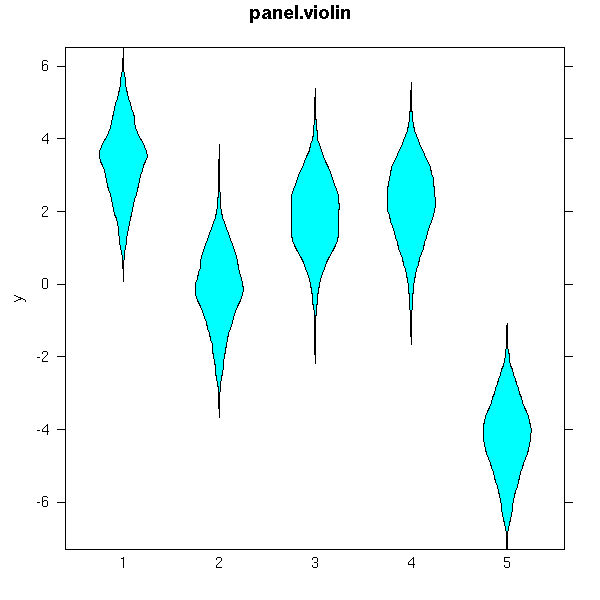

Actually, the larger the sample, the more outliers.

y <- c(rnorm(10+100+1000+10000+100000))

x <- c(rep(1,10), rep(2,100), rep(3,1000), rep(4,10000), rep(5,100000))

x <- factor(x)

plot(y~x,

horizontal = TRUE,

col = "pink",

las = 1,

xlab = "", ylab = "",

main = "The larger the sample, the more outliers")

You could plot boxes whose whiskers would extend farther for larger samples, but beware: even if the presence of extreme values in larger samples is normal, it can have an important leverage effect, an important influence on the results of your computations.

Exercise: plot box-and-whiskers whose whisker length varies with the sample size.

You can ask R to plot a confidence interval on the median:

boxplot(faithful$eruptions,

notch = TRUE,

horizontal = TRUE,

main = "Confidence interval on the median...")

library(boot)

data(breslow)

boxplot(breslow$n,

notch = TRUE,

horizontal = TRUE,

col = "pink",

main = "...that goes beyond the quartiles")

You can also add a scatter plot.

boxplot(faithful$eruptions,

horizontal = TRUE,

col = "pink")

rug(faithful$eruption,

ticksize = .2)

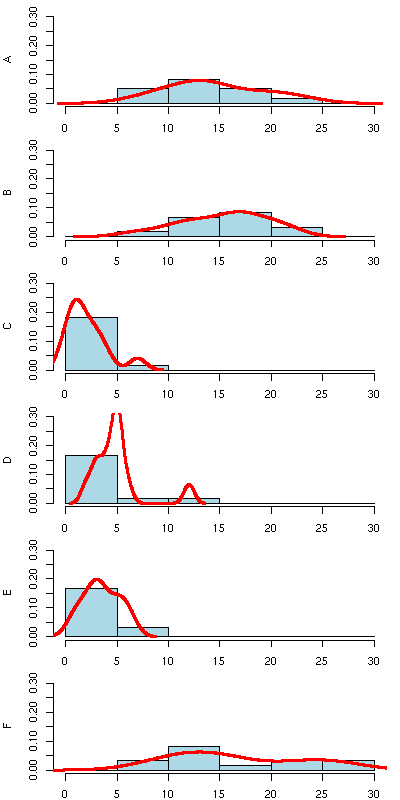

You can also represent those data with a histogram: put each observation in a class (the computer can do this) and, for each class, plot a vertical bar whose height (or area) is proportionnal to the number of elements.

hist(faithful$eruptions)

There is a big, unavoidable prolem with histograms: a different choice of classes can lead to a completely different histogram.

First, the width of the classes can play a role.

hist(faithful$eruptions, breaks=20, col="light blue")

TODO: give an example!

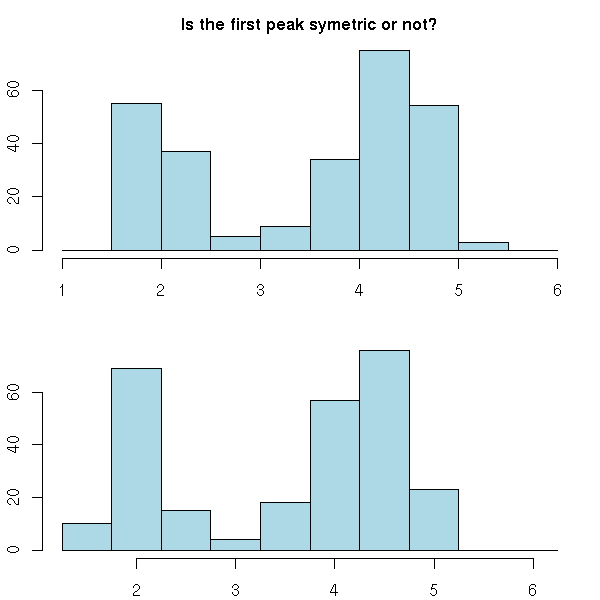

But their position, as well, can completely change the histogram and have it look sometimes symetric, sometimes not. For instance, in neither of the following histograms does the peak look symetric but the asymetry is not in the same direction.

op <- par(mfrow=c(2,1), mar=c(2,2,2,1)+.1)

hist(faithful$eruptions, breaks=seq(1,6,.5),

col='light blue',

xlab="", ylab="", main="")

hist(faithful$eruptions, breaks=.25+seq(1,6,.5),

col='light blue',

xlab="", ylab="", main="")

par(op)

mtext("Is the first peak symetric or not?",

side=3, line=2.5, font=2.5, size=1.5)

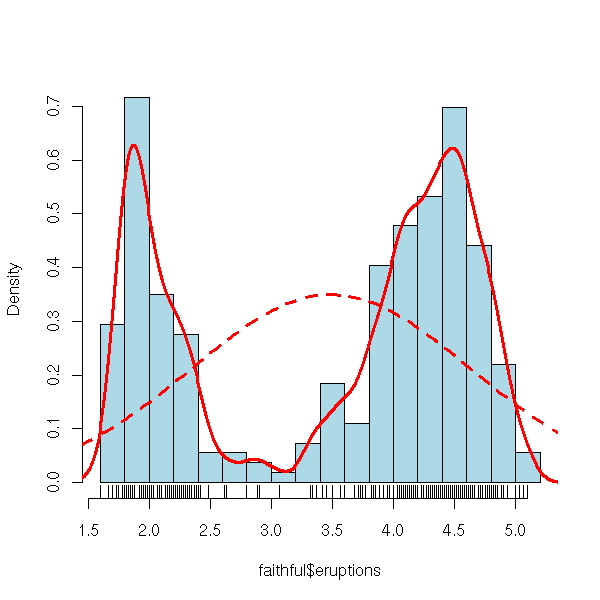

You can replace the histogram with a curve, a "density estimation". If you see the data as a sum of Dirac masses, you can obtain such a function by convolving this sum with a well-chosen "kernel", e.g., a gaussian density -- but you have to choose the "bandwidth" of this kernel, i.e., the standard deviation of the gaussian density.

This density estimation can be adaptive: the bandwidth of this gaussian kernel can change along the sample, being larger when the point density becomes higher (the "density" function does not use an adaptive kernel -- check function akj in the quantreg package if you want one).

hist(faithful$eruptions,

probability=TRUE, breaks=20, col="light blue",

xlab="", ylab="",

main="Histogram and density estimation")

points(density(faithful$eruptions, bw=.1), type='l',

col='red', lwd=3)

Density estimations still have the first problem of histograms: a different kernel may yield a completely different curve -- but the second problem disappears.

hist(faithful$eruptions,

probability=TRUE, breaks=20, col="light blue",

xlab="", ylab="",

main="Histogram and density estimation")

points(density(faithful$eruptions, bw=1), type='l',

lwd=3, col='black')

points(density(faithful$eruptions, bw=.5), type='l',

lwd=3, col='blue')

points(density(faithful$eruptions, bw=.3), type='l',

lwd=3, col='green')

points(density(faithful$eruptions, bw=.1), type='l',

lwd=3, col='red')

One can add many other elements to a histogram. For instance, a scatterplot, or a gaussian density (to compare with the estimated density).

hist(faithful$eruptions,

probability=TRUE, breaks=20, col="light blue",

main="")

rug(faithful$eruptions)

points(density(faithful$eruptions, bw=.1), type='l', lwd=3, col='red')

f <- function(x) {

dnorm(x,

mean=mean(faithful$eruptions),

sd=sd(faithful$eruptions),

)

}

curve(f, add=T, col="red", lwd=3, lty=2)

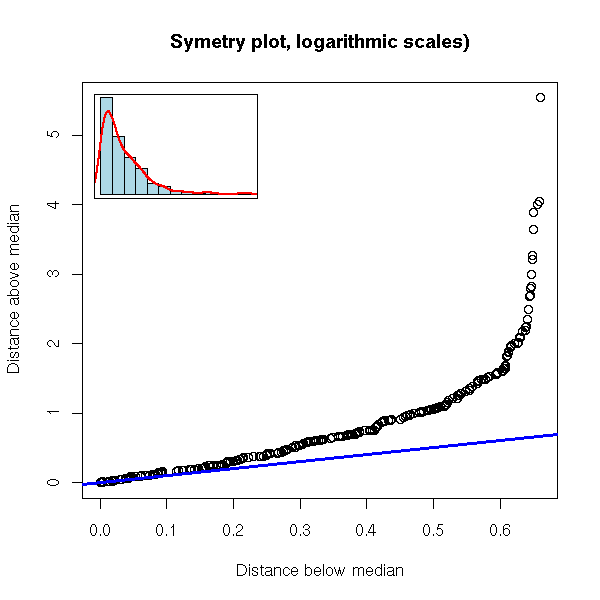

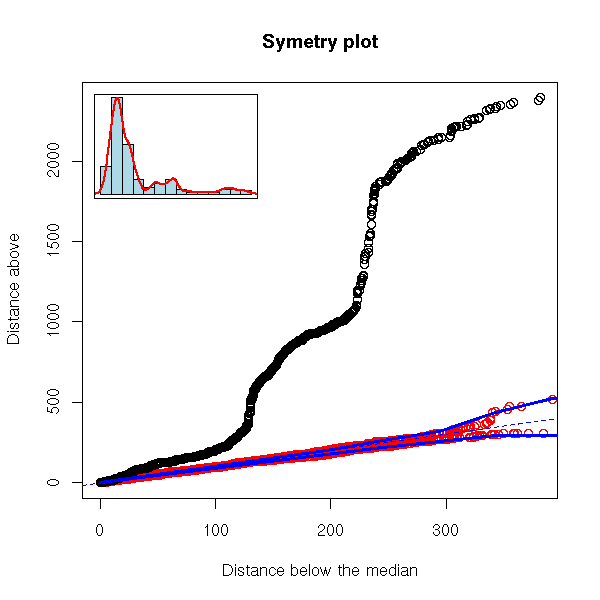

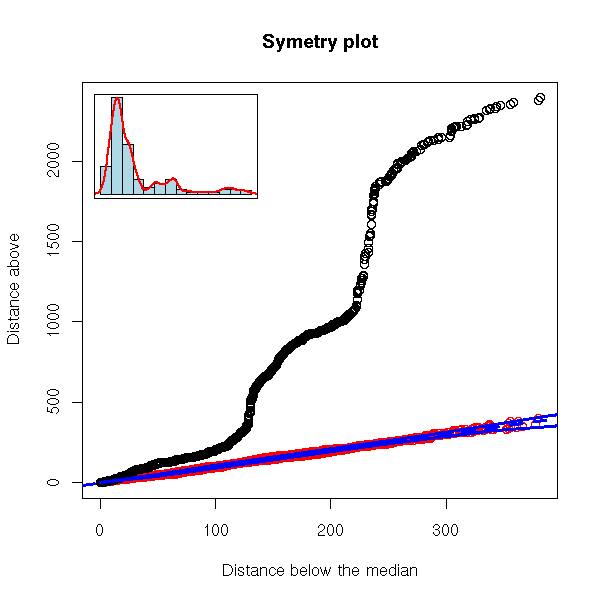

When you look at your data, one of the first questions you may ask is "are they symetric?". The following plot simply sorts the data and tries to pair the first with the last, the second with the second from the end, etc.. The following is a plot of the distance to the median of the (n-i)-th point versus that of the i-th point.

symetry.plot <- function (x0,

main="Symetry plot",

breaks="Sturges", ...) {

x <- x0[ !is.na(x0) ]

x <- sort(x)

x <- abs(x - median(x))

n <- length(x)

nn <- ceiling(n/2)

plot( x[n:(n-nn+1)] ~ x[1:nn] ,

xlab='Distance below median',

ylab='Distance above median',

main=main,

...)

abline(0,1, col="blue", lwd=3)

op <- par(fig=c(.02,.5,.5,.98), new=TRUE)

hist(x0, probability=T, breaks=breaks,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x0), col="red", lwd=2)

box()

par(op)

}

symetry.plot(rnorm(500),

main="Symetry plot (gaussian distribution)")

symetry.plot(rexp(500),

main="Symetry plot (exponential distribution)")

symetry.plot(-rexp(500),

main="Symetry plot (negative skewness)")

symetry.plot(rexp(500),

main="Symetry plot, logarithmic scales)")

symetry.plot(faithful$eruptions, breaks=20)

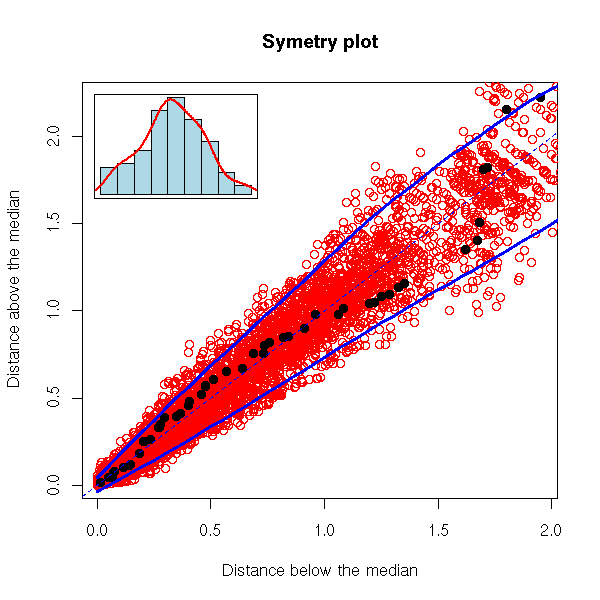

The problem is that it is rather hard to see if you are "far away" from the line: the more points the sample has, the more the plot looks like a line. Here, with a 100 points (this is a lot), we are still far away from a line.

symetry.plot.2 <- function (x, N=1000,

pch=".", cex=1, ...) {

x <- x[ !is.na(x) ]

x <- sort(x)

x <- abs(x - median(x))

n <- length(x)

nn <- ceiling(n/2)

plot( x[n:(n-nn+1)] ~ x[1:nn] ,

xlab='Distance below median',

ylab='Distance above median',

...)

for (i in 1:N) {

y <- sort( rnorm(n) )

y <- abs(y - median(y))

m <- ceiling(n/2)

points( y[n:(n-m+1)] ~ y[1:m],

pch=pch, cex=cex, col='red' )

}

points(x[n:(n-nn+1)] ~ x[1:nn] , ...)

abline(0,1, col="blue", lwd=3)

}

n <- 100

symetry.plot.2( rnorm(n), pch='.', lwd=3,

main=paste("Symetry plot: gaussian,", n, "observations"))

With 10 points, it is even worse...

(It might be because of that that this plot it is rarely used...)

n <- 10

symetry.plot.2( rnorm(n), pch=15, lwd=3, type="b", cex=.5,

main=paste("Symetry plot: gaussian,", n, "observations"))

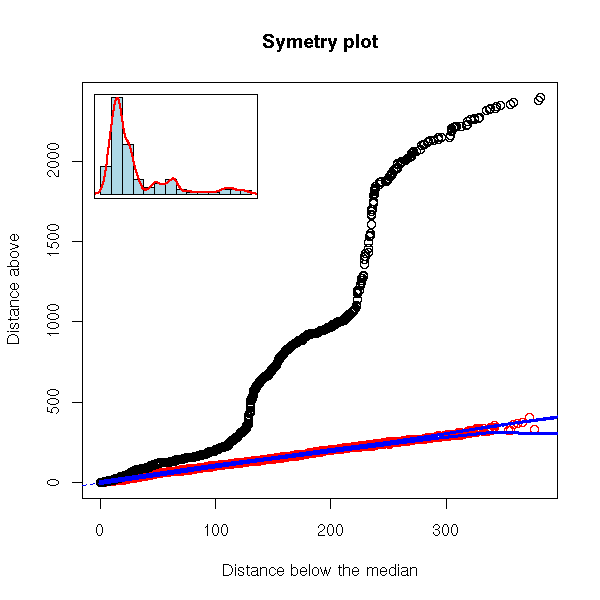

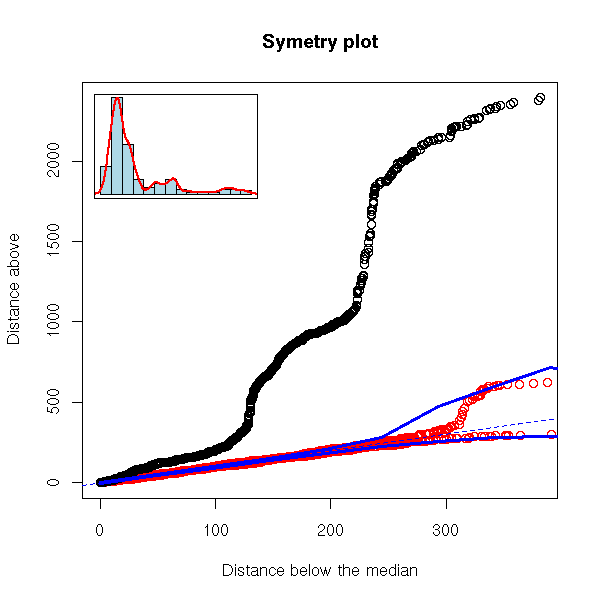

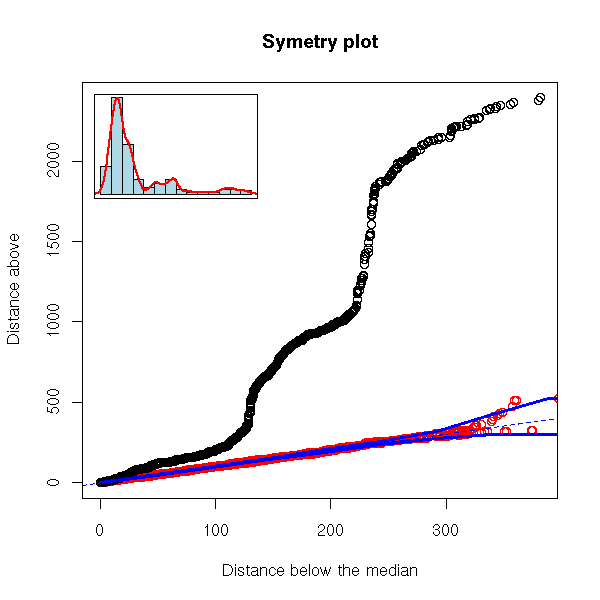

But here, we are just comparing the symetry of our distribution with that of a gaussian one: the differences can come either from our distribution not being symetric or from its being non gaussian. Instead, we can compare our distribution with its symetrization, or samples taken (with replacement) from our symetrized sample -- to symetrize, simply concatenate the x_i and the M - (x_i - M).

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2, lwd = 3)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"CAC"])

robust.symetry.plot <- function (x,

N = max(ceiling(1000/length(x)),2),

alpha = .05,

xlab = "Distance below the median",

ylab = "Distance above the median",

main = "Symetry plot",

...) {

cat(N, "\n")

# The symetry plot

x <- x[!is.na(x)]

n <- length(x)

nn <- ceiling(n/2)

x <- sort(x)

d <- abs(x - median(x)) # Distance to the median

plot( d[1:nn], d[n:(n-nn+1)],

xlab = xlab, ylab = ylab,

main = main,

... )

# The symetry plot of resampled, symetric data

y <- c(x, 2 * median(x) - x) # We symetrize the data

X <- Y <- rep(NA, N * nn)

for (i in 1:N) {

a <- sort(sample(y, n))

a <- abs(a - median(a))

j <- ((i-1) * nn + 1) : (i * nn)

X[j] <- a[1:nn]

Y[j] <- a[n:(n-nn+1)]

}

points(X, Y, col="red")

points( d[1:nn], d[n:(n-nn+1)], ...)

# The 5% confidence interval stemming from the resampled data

require(quantreg)

for (tau in c(alpha, 1-alpha)) {

r <- lprq(X, Y,

h = bw.nrd0(x), # See ?density

tau = tau)

lines(r$xx, r$fv, col = "blue", lwd = 3)

}

abline(0, 1, col = "blue", lty = 2)

# The histogram, in a corner

op <- par(fig = if (skewness(x)>0)

c(.02,.5,.5,.98) # Top left corner

else c(.5,.98,.02,.5), # Bottom right

new = TRUE)

hist(x, probability=T,

col="light blue", xlab="", ylab="", main="", axes=F)

lines(density(x), col="red", lwd=2)

box()

par(op)

}

robust.symetry.plot(EuStockMarkets[,"FTSE"])

robust.symetry.plot(rnorm(100), N=100, pch=16)

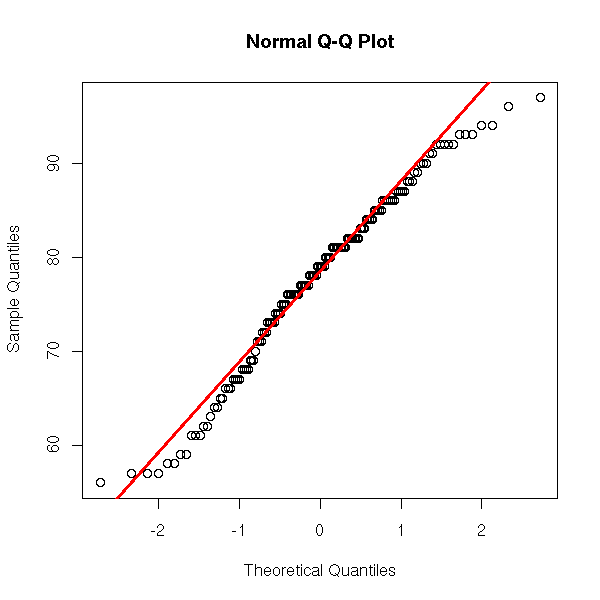

We have just seen a graphical way of assessing the symetry of a sample. One other thing we like, about our data, is when they follow a gaussian distribution -- or any well-known, reference distribution.

In some cases, it is obvious that the distribution is not gaussian: this was the case for the Old Faithful geyser eruption lengths (the data were bimodal, i.e., the density had two peaks). In other cases, it is not that obvious. A first means of checking this is to compare the estimated density with a gaussian density.

data(airquality)

x <- airquality[,4]

hist(x, probability=TRUE, breaks=20, col="light blue")

rug(jitter(x, 5))

points(density(x), type='l', lwd=3, col='red')

f <- function(t) {

dnorm(t, mean=mean(x), sd=sd(x) )

}

curve(f, add=T, col="red", lwd=3, lty=2)

You can also see, graphically, wether a variable is gaussian: just plot the gaussian quantiles versus the sample quantiles.

There is already a function to do that. (The "qqline" function plots a line through the first and third quartiles.) In this example, the data are roughly gaussian, but we can see that they are discrete.

x <- airquality[,4]

qqnorm(x)

qqline(x,

col="red", lwd=3)

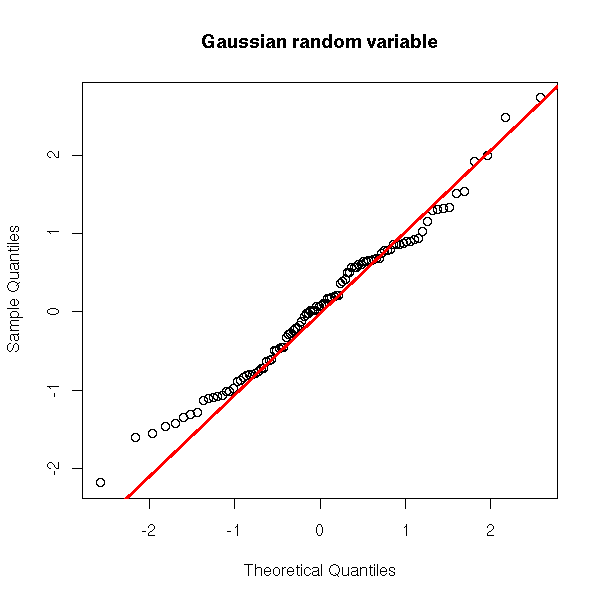

Here is what we would get with a truly gaussian variable.

y <- rnorm(100)

qqnorm(y, main="Gaussian random variable")

qqline(y,

col="red", lwd=3)

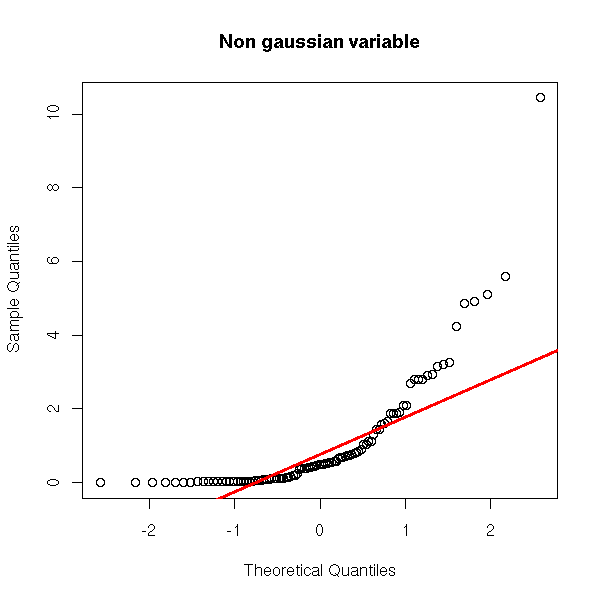

And now, a non gaussian variable.

y <- rnorm(100)^2

qqnorm(y, main="Non gaussian variable")

qqline(y,

col="red", lwd=3)

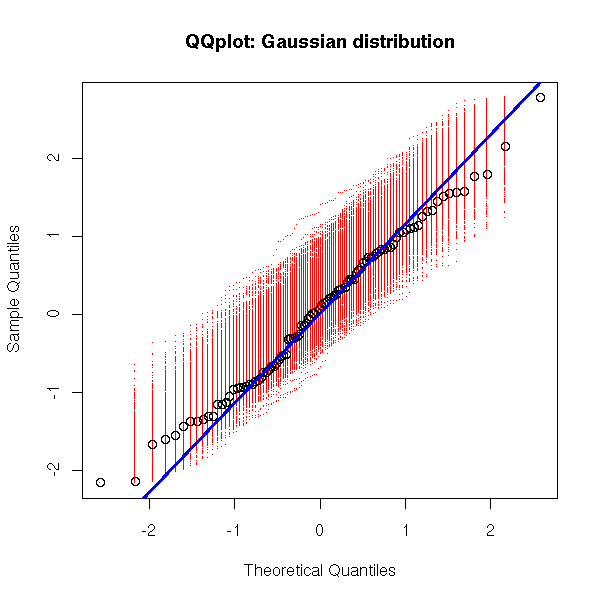

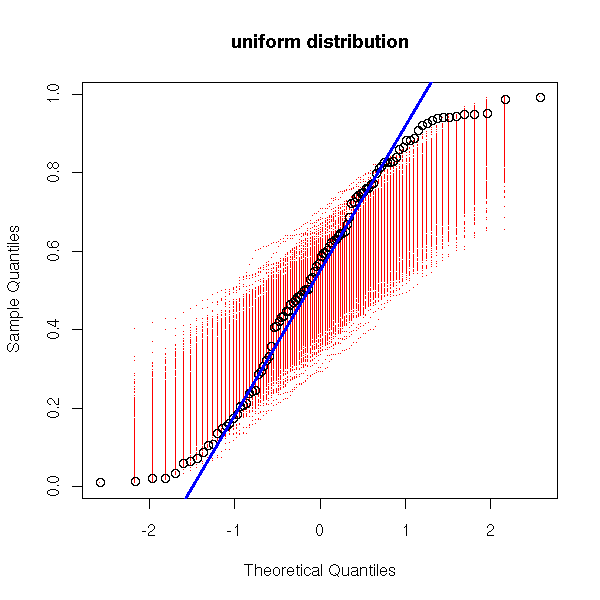

As before, we can overlay several gaussian qqplots to our plot, to see how far from gaussian our data are.

my.qqnorm <- function (x, N=1000, ...) {

op <- par()

x <- x[!is.na(x)]

n <- length(x)

m <- mean(x)

s <- sd(x)

print("a")

qqnorm(x, axes=F, ...)

for (i in 1:N) {

par(new=T)

qqnorm(rnorm(n, mean=m, sd=s), col='red', pch='.',

axes=F, xlab='', ylab='', main='')

}

par(new=T)

qqnorm(x, ...)

qqline(x, col='blue', lwd=3)

par(op)

}

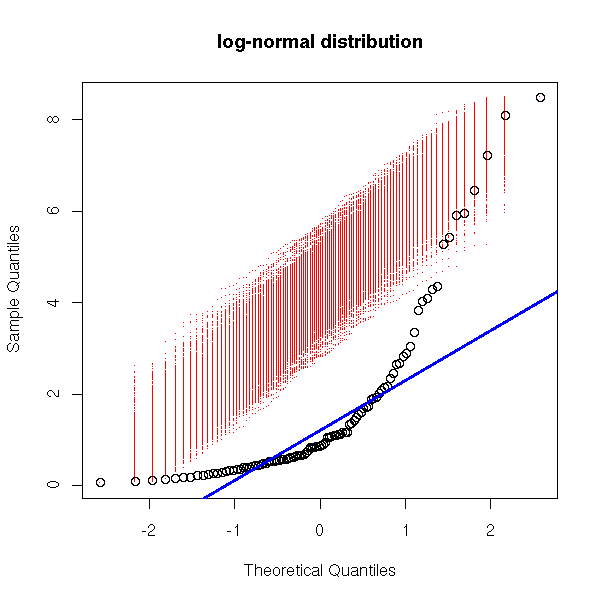

my.qqnorm(rnorm(100),

main = "QQplot: Gaussian distribution")

my.qqnorm(runif(100),

main = "uniform distribution")

my.qqnorm(exp(rnorm(100)),

main = 'log-normal distribution')

my.qqnorm(c(rnorm(50), 5+rnorm(50)),

main = 'bimodal distribution')

my.qqnorm(c(rnorm(50), 20+rnorm(50)),

main = 'two remote peaks')

x <- rnorm(100) x <- x + x^3 my.qqnorm(x, main = 'fat tails')

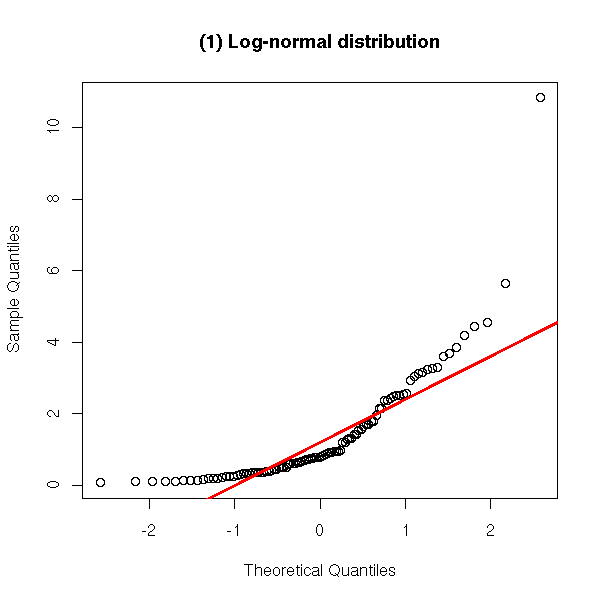

Here are other qqplot examples.

Two distributions shifted to the left.

y <- exp(rnorm(100))

qqnorm(y,

main = '(1) Log-normal distribution')

qqline(y,

col = 'red', lwd = 3)

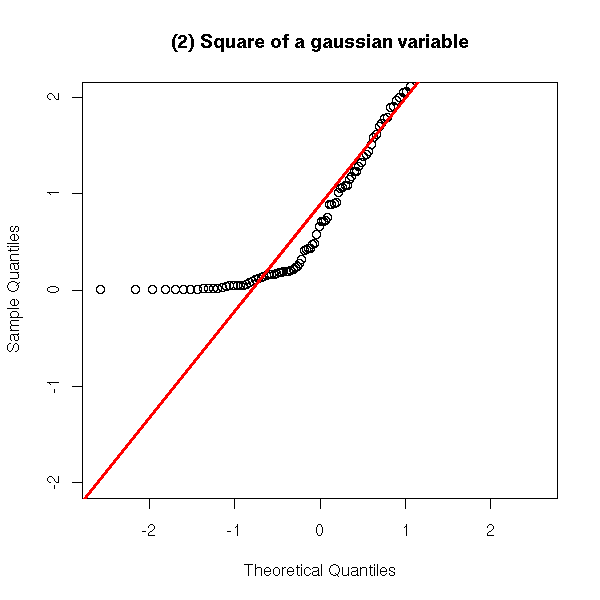

y <- rnorm(100)^2

qqnorm(y, ylim = c(-2,2),

main = "(2) Square of a gaussian variable")

qqline(y,

col = 'red', lwd = 3)

A distribution shifted to the right.

y <- -exp(rnorm(100))

qqnorm(y, ylim = c(-2,2),

main = "(3) Opposite of a log-normal variable")

qqline(y,

col = 'red', lwd = 3)

A distribution less dispersed that the gaussian distribution (this is called a leptokurtic distribution).

y <- runif(100, min=-1, max=1)

qqnorm(y, ylim = c(-2,2),

main = '(4) Uniform distribution')

qqline(y,

col = 'red', lwd = 3)

A distribution more dispersed that the gaussian distribution (this is called a platykurtic distribution).

y <- rnorm(10000)^3

qqnorm(y, ylim = c(-2,2),

main = "(5) Cube of a gaussian r.v.")

qqline(y,

col = 'red', lwd = 3)

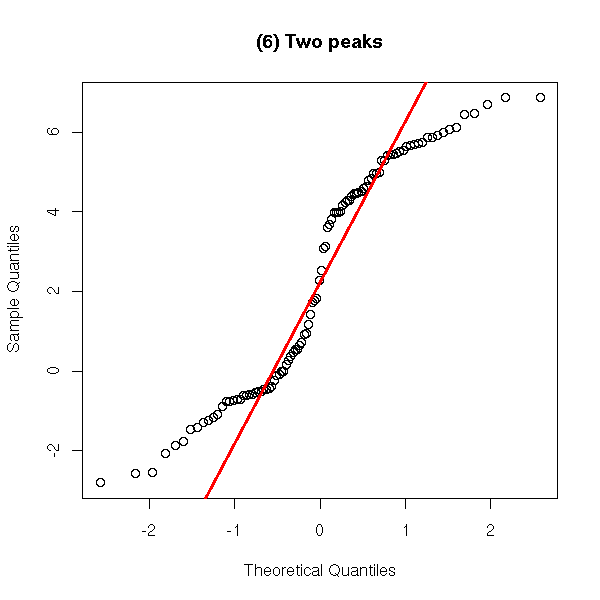

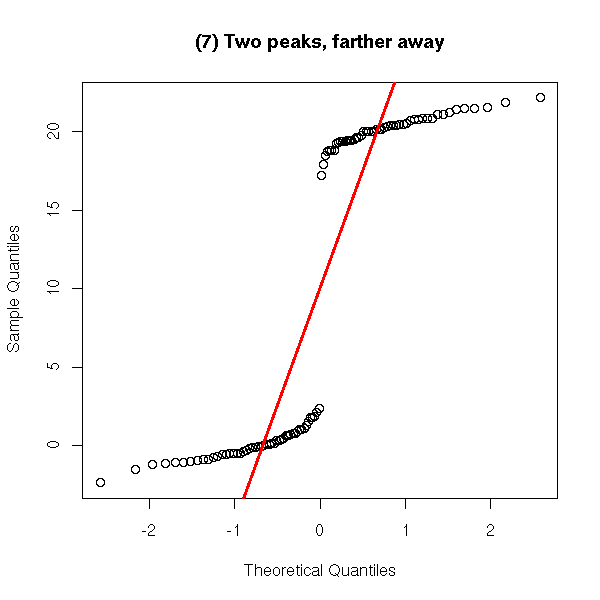

A distribution with several peaks.

y <- c(rnorm(50), 5+rnorm(50))

qqnorm(y,

main = '(6) Two peaks')

qqline(y,

col = 'red', lwd = 3)

y <- c(rnorm(50), 20+rnorm(50))

qqnorm(y,

main = '(7) Two peaks, farther away')

qqline(y,

col = 'red', lwd = 3)

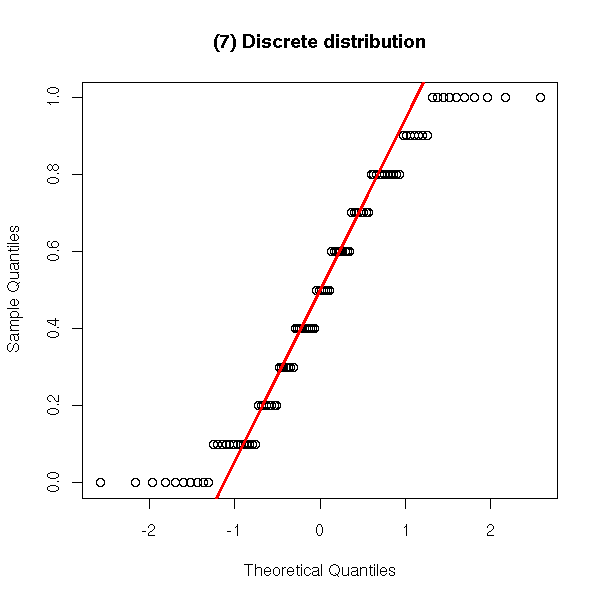

y <- sample(seq(0,1,.1), 100, replace=T)

qqnorm(y,

main = '(7) Discrete distribution')

qqline(y,

col = 'red', lwd = 3)

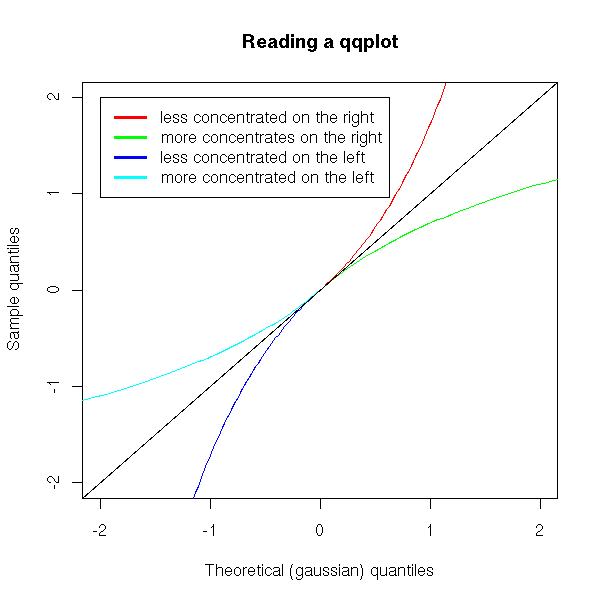

You can read those plots as follows.

a. If the distribution is more concentrated to the left than the gaussian distribution, the left part of the plot is above the line (examples 1, 2 and 4 above).

b. If the distribution is less concentrated to the left than the gaussian distribution, the left part of the plot is under the line (example 3 above).

c. If the distribution is more concentrated to the right than the gaussian distribution, the right part of the plot is under the line (examples 3 and 4 above).

d. If the distribution is less concentrated to the right than the gaussian distribution, the right part of the plot is above the line (examples 1 and 2 above).

For instance, example 5 can be interpreted as: the distribution is symetric, to the left of 0, near 0, it is more concentrated that a gaussian distribution ; to the left of 0, far from 0, it is less concentrated than a gaussian distribution; on the right of 0, it is the same.

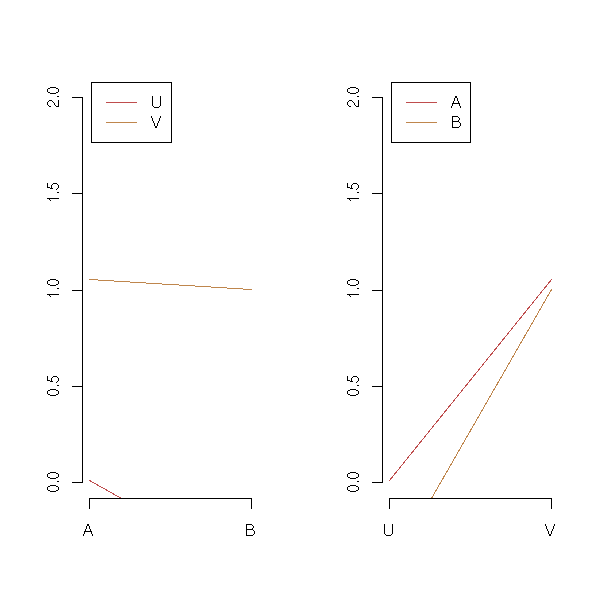

x <- seq(from=0, to=2, length=100)

y <- exp(x)-1

plot( y ~ x, type = 'l', col = 'red',

xlim = c(-2,2), ylim = c(-2,2),

xlab = "Theoretical (gaussian) quantiles",

ylab = "Sample quantiles")

lines( x~y, type='l', col='green')

x <- -x

y <- -y

lines( y~x, type='l', col='blue', )

lines( x~y, type='l', col='cyan')

abline(0,1)

legend( -2, 2,

c( "less concentrated on the right",

"more concentrates on the right",

"less concentrated on the left",

"more concentrated on the left"

),

lwd=3,

col=c("red", "green", "blue", "cyan")

)

title(main="Reading a qqplot")

e. If the distribution is "off-centered to the left" (think: if the median is less than the mean between the first and third quartiles), then the curve is under the line in the center of the plot (examples 1 and 2 above).

f. If the distribution is "off-centered to the right" (think: if the median is more than the mean between the first and third quartiles), then the curve is above the line in the center of the plot (example 3 above).

g. If the distribution is symetric (think: if the median coincides with the average of the first and third quartiles), then the curve cuts the line in the center of the plot (examples 4 and 5 above).

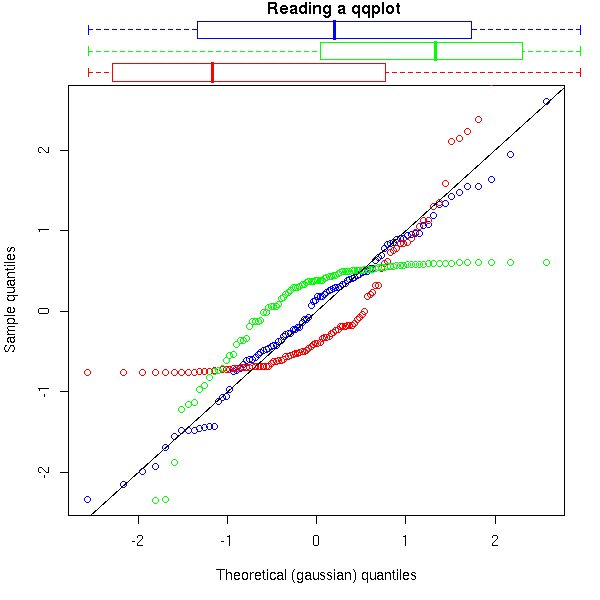

op <- par()

layout( matrix( c(2,2,1,1), 2, 2, byrow=T ),

c(1,1), c(1,6),

)

# The plot

n <- 100

y <- rnorm(n)

x <- qnorm(ppoints(n))[order(order(y))]

par(mar=c(5.1,4.1,0,2.1))

plot( y ~ x, col = "blue",

xlab = "Theoretical (gaussian) quantiles",

ylab = "Sample quantiles" )

y1 <- scale( rnorm(n)^2 )

x <- qnorm(ppoints(n))[order(order(y1))]

lines(y1~x, type="p", col="red")

y2 <- scale( -rnorm(n)^2 )

x <- qnorm(ppoints(n))[order(order(y2))]

lines(y2~x, type="p", col="green")

abline(0,1)

# The legend

par(bty='n', ann=F)

g <- seq(0,1, length=10)

e <- g^2

f <- sqrt(g)

h <- c( rep(1,length(e)), rep(2,length(f)), rep(3,length(g)) )

par(mar=c(0,4.1,1,0))

boxplot( c(e,f,g) ~ h, horizontal=T,

border=c("red", "green", "blue"),

col="white", # Something prettier?

xaxt='n',

yaxt='n',

)

title(main="Reading a qqplot")

par(op)

You can roll up your own qqplot, by going back to the definition.

y <- rnorm(100)^2 y <- scale(x) y <- sort(x) x <- qnorm( seq(0,1,length=length(y)) ) plot(y~x) abline(0,1)

Let us have a look at the way the "qqnorm" function is programmed.

> help.search("qqnorm")

Help files with alias or concept or title matching "qqnorm" using

fuzzy matching:

qqnorm.acomp(compositions)

Normal quantile plots for compositions and

amounts

qqnorml(faraway) Labeled QQ plot

GarchDistributions(fSeries)

GARCH Distributions

qqnorm.aov(gplots) Makes a half or full normal plot for the

effects from an aov model

tnorm(msm) Truncated Normal distribution

qqnorm.gls(nlme) Normal Plot of Residuals from a gls Object

qqnorm.lm(nlme) Normal Plot of Residuals or Random Effects

from an lme Object

pnorMix(nor1mix) Normal Mixture Cumulative Distribution and

Quantiles

qnormp(normalp) Quantiles of an exponential power distribution

qqnormp(normalp) Quantile-Quantile plot for an exponential

power distribution

pcdiags.plt(pcurve) Diagnostic Plots for Principal Curve Analysis

Lognormal(stats) The Log Normal Distribution

Normal(stats) The Normal Distribution

qqnorm(stats) Quantile-Quantile Plots

NormScore(SuppDists) Normal Scores distribution

> qqnorm

function (y, ...)

UseMethod("qqnorm")

> apropos("qqnorm")

[1] "my.qqnorm" "qqnorm" "qqnorm.default"

> qqnorm.default

function (y, ylim, main = "Normal Q-Q Plot", xlab = "Theoretical Quantiles",

ylab = "Sample Quantiles", plot.it = TRUE, ...)

{

y <- y[!is.na(y)]

if (0 == (n <- length(y)))

stop("y is empty")

if (missing(ylim))

ylim <- range(y)

x <- qnorm(ppoints(n))[order(order(y))]

if (plot.it)

plot(x, y, main = main, xlab = xlab, ylab = ylab, ylim = ylim,

...)

invisible(list(x = x, y = y))

}You can reuse the same idea to compare your data with other distributions.

qq <- function (y, ylim, quantiles=qnorm,

main = "Q-Q Plot", xlab = "Theoretical Quantiles",

ylab = "Sample Quantiles", plot.it = TRUE, ...)

{

y <- y[!is.na(y)]

if (0 == (n <- length(y)))

stop("y is empty")

if (missing(ylim))

ylim <- range(y)

x <- quantiles(ppoints(n))[order(order(y))]

if (plot.it)

plot(x, y, main = main, xlab = xlab, ylab = ylab, ylim = ylim,

...)

# From qqline

y <- quantile(y, c(0.25, 0.75))

x <- quantiles(c(0.25, 0.75))

slope <- diff(y)/diff(x)

int <- y[1] - slope * x[1]

abline(int, slope, ...)

invisible(list(x = x, y = y))

}

y <- runif(100)

qq(y, quantiles=qunif)

(The various interpretations of the qqplot remain valid, but points e, f ang g no longer assess the symetry of the distribution but compare this symetry with that of the reference distribution, which need not be symetric.)

People sometimes use a quantile-quantile plot to compare a positive variable with a half-gaussian -- it may help you spot outliers: we shall use it later to look at Cook's distances.

You can turn the quantile-quantile plot so that the line (through the first and third quartiles) be horizontal.

two.point.line <- function (x1,y1,x2,y2, ...) {

a1 <- (y2-y1)/(x2-x1)

a0 <- y1 - a1 * x1

abline(a0,a1,...)

}

trended.probability.plot <- function (x, q=qnorm) {

n <- length(x)

plot( sort(x) ~ q(ppoints(n)),

xlab='theoretical quantiles',

ylab='sample quantiles')

two.point.line(q(.25), quantile(x,.25),

q(.75), quantile(x,.75), col='red')

}

detrended.probability.plot <- function (x, q=qnorm,

xlab="", ylab="") {

n <- length(x)

x <- sort(x)

x1 <- q(.25)

y1 <- quantile(x,.25)

x2 <- q(.75)

y2 <- quantile(x,.75)

a1 <- (y2-y1)/(x2-x1)

a0 <- y1 - a1 * x1

u <- q(ppoints(n))

x <- x - (a0 + a1 * u)

plot(x ~ u,

xlab=xlab, ylab=ylab)

abline(h=0, col='red')

}

op <- par(mfrow = c(3,2), mar = c(2,2,2,2) + .1)

x <- runif(20)

trended.probability.plot(x)

detrended.probability.plot(x)

x <- runif(500)

trended.probability.plot(x)

detrended.probability.plot(x)

trended.probability.plot(x, qunif)

detrended.probability.plot(x,qunif)

par(op)

mtext("Detrended quantile-quantile plots",

side=3, line=3, font=2, size=1.5)

Recently, I had to follow a "data analysis course" (statistical tests, regression, Principal Component Analysis, Correspondance Analysis, Hierarchical analysis -- but, as these are very classical subjects and as I had already started to write this document, I did not learn much) during which we discovered the Gini (or Lorentz) concentration curve.

This is the curve that summarises statements such as "20% of the patients are responsible for 90% of the NHS spendings". For instance:

Proportion of patients | Proportions of spendings

----------------------------------------------------

20% | 90%

30% | 95%

50% | 99%These are cumulated percents: the table should be read as

The top 20% are responsible for 90% of the expenses The top 30% are responsible for 95% of the expenses The top 50% are responsible for 99% of the expenses

We can plot those figures:

xy <- matrix(c( 0, 0,

.2, .9,

.3, .95,

.5, .99,

1, 1), byrow = T, nc = 2)

plot(xy, type = 'b', pch = 15,

main = "Conventration curve",

xlab = "patients",

ylab = "expenses")

polygon(xy, border=F, col='pink')

lines(xy, type='b', pch=15)

abline(0,1,lty=2)

The more inegalities in the situation, the larger the area between the curve and the diagonal: this area (actually, we multiply this area by 2, so that the index varies between 0 (equality) and 1 (maximum inequalities)) is called the "Gini index".

Here is another example:

In a cell,

20 genes are expressed 10^5 times ("expressed" means

"transcribed into ARNm")

2700 genes are expressed 10^2 times

280 genes are expressed 10 timesLet us convert this into cumulated numbers:

20 genes 100000 ARNm 2720 genes 100100 ARNm 3000 genes 100110 ARNm

and then into cumulated frequencies:

Genes | ARNm ------------------- 0.7% | 0.9989012 90.7% | 0.9999001 100% | 1

Here is the curve (the situation is much worse than the preceding!):

x <- c(0,20,2720,3000)/3000

y <- c(0,100000,100100,100110)/100110

plot(x,y, type='b', pch=15,

xlab = "Genes", ylab = "ARNm",

main = "Conventration curve")

polygon(x,y, border=F, col='pink')

lines(x,y, type='b', pch=15)

abline(0,1,lty=2)

The classical example for the Gini curve is the study of incomes.

The "ineq" package contains functions to plot the Gini curve and compute the Gini index (well, the curves are the symetrics of mine, but that does not change the results).

The "Gini" function, in the "ineq" package, computes the Gini concentration index. It is only defined if the variable studied is POSITIVE (in the examples above -- "NHS spendings", "number of transcribed genes", "income", etc. -- we did not explicitely mention the variable but merely gave its cumulated frequencies).

> n <- 500

> library(ineq)

> Gini(runif(n))

[1] 0.3241409

> Gini(runif(n,0,10))

[1] 0.3459194

> Gini(runif(n,10,11))

[1] 0.01629126

> Gini(rlnorm(n))

[1] 0.5035944

> Gini(rlnorm(n,0,2))

[1] 0.8577991

> Gini(exp(rcauchy(n,1)))

[1] 0.998

> Gini(rpois(n,1))

[1] 0.5130061

> Gini(rpois(n,10))

[1] 0.1702435

library(ineq)

op <- par(mfrow=c(3,3), mar=c(2,3,3,2)+.1, oma=c(0,0,2,0))

n <- 500

set.seed(1)

plot(Lc(runif(n,0,1)),

main="uniform on [0,1]", col='red',

xlab="", ylab="")

do.it <- function (x, main="", xlab="", ylab="") {

plot(Lc(x), col = "red",

main=main, xlab=xlab, ylab=ylab)

}

do.it(runif(n,0,10), main="uniform on [0,10]")

do.it(runif(n,10,11), main="uniform on [10,11]")

do.it(rlnorm(n), main="log-normal")

do.it(rlnorm(n,0,4), main="log-normal, wider")

do.it(abs(rcauchy(n,1)), main="half-Cauchy")

do.it(abs(rnorm(n,1)), main="half-Gaussian")

do.it(rpois(n,1), main="Poisson with mean 1")

do.it(rpois(n,10), main="Poisson with mean 10")

par(op)

mtext("Gini concentration curves", side=3, line=3,

font=2, cex=1.5)

We can compute the Gini index ourselves:

n <- 500 x <- qlnorm((1:(n-1))/n, 1, 2.25) x <- sort(x) i <- (1:n)/n plot(cumsum(x)/sum(x) ~ i, lwd=3, col='red') abline(0,1) 2*sum(i-cumsum(x)/sum(x))/n

Exercise: the the examples above, the data were presented in two different forms: either cumulated frequencies or a random variable. Write some code to turn one representation into the other.

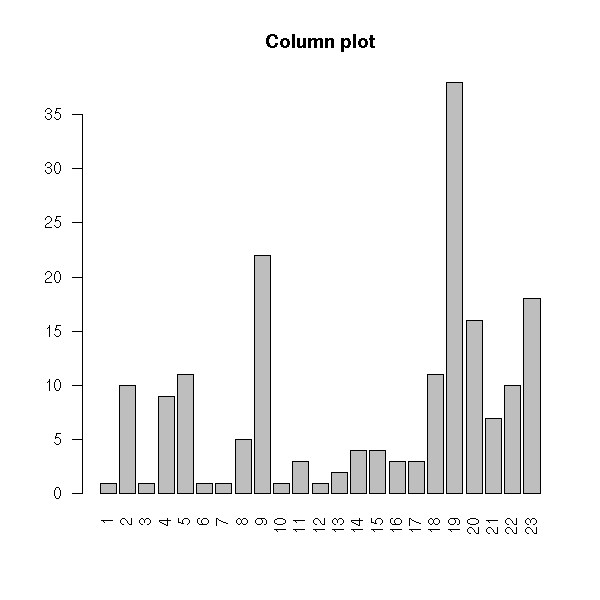

We are no longer interested in precise numeric values but in rankings. Sometimes, one will cheat and consider them as quantitative variables (and indeed, ordered variables are sometimes a simplification of quantitative variables, when they are put into classes -- but beware, by doing so, you lose information), after transforming them if needed (so that they look more gaussian) or as qualitative variables (because, as qualitative variables, they only assume a finite number of values).

In R, qualitative variables are stored in "factors" and ordered variables in "ordered factors": just replace the "factor" function by "ordered".

> l <- c('petit', 'moyen', 'grand')

> ordered( sample(l,10,replace=T), levels=l)

[1] grand moyen moyen grand moyen petit moyen petit moyen moyen

Levels: petit < moyen < grandThe plots are the same as with factors, but the order between the levels is not arbitrary.

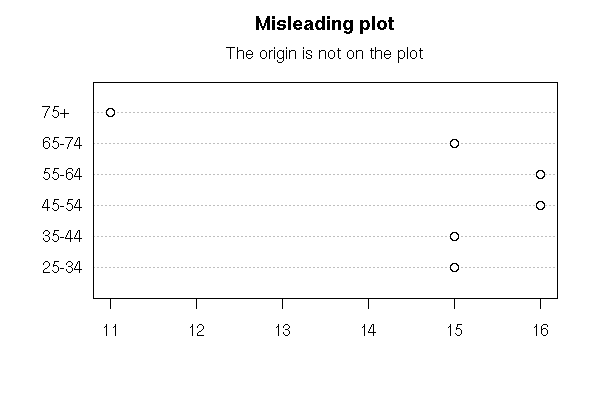

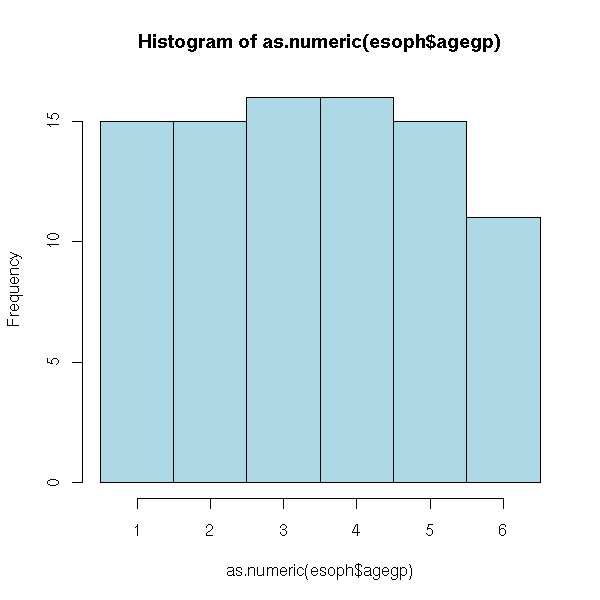

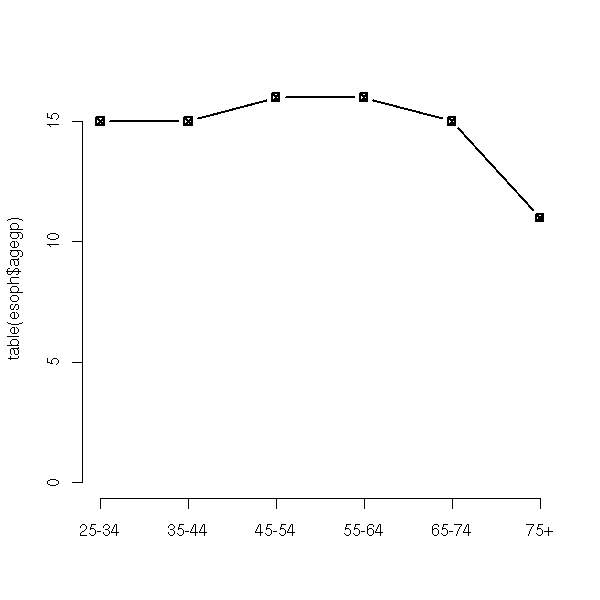

data(esoph)

dotchart(table(esoph$agegp))

mtext("Misleading plot", side=3, line=2.5, font=2, cex=1.2)

mtext("The origin is not on the plot", side=3, line=1)

barplot(table(esoph$agegp))

hist(as.numeric(esoph$agegp),

breaks=seq(.5,.5+length(levels(esoph$agegp)),step=1),

col='light blue')

boxplot(as.numeric(esoph$agegp),

horizontal = T, col = "pink")

stripchart(jitter(as.numeric(esoph$agegp),2), method='jitter')

plot(table(esoph$agegp), type='b', pch=7)

We have a list of non-numeric values (actually, the data can be coded by numbers, but they are arbitrary, a difference of those numbers is meaningless: for instance, zip codes, phone numbers, or answer numbers in a questionnaire).

There are two ways of presenting such data: either a vector of strings (more precisely, of "factors": when you print it, it looks like strings, but as we expect there will be few values, often repeated, it is coded more efficiently -- you can convert a factor into an actual vector of strings with the "as.character" function), or a contingency table.

> p <- factor(c("oui", "non"))

> x <- sample(p, 100, replace=T)

> str(x)

Factor w/ 2 levels "non","oui": 1 2 2 2 2 1 2 2 2 2 ...

> table(x)

x

non oui

48 52The second representation is much more compact. If you only have quantitative variables, and few of them, you might want to favour it. In the following example, there are three variables and the contingency table is consequently 3-dimensional.

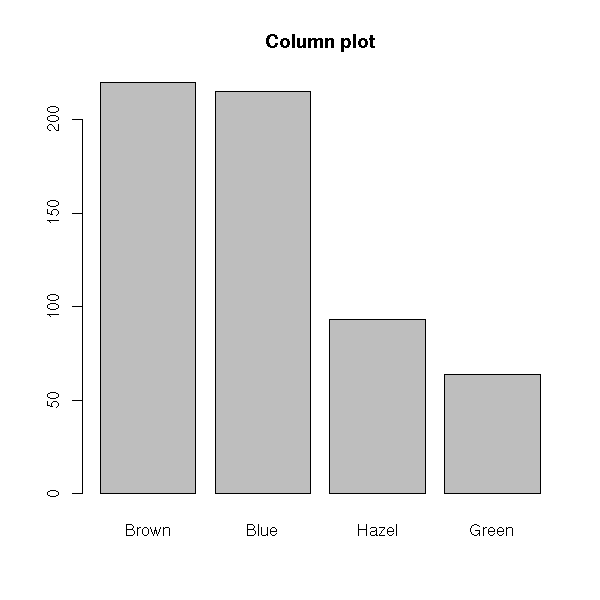

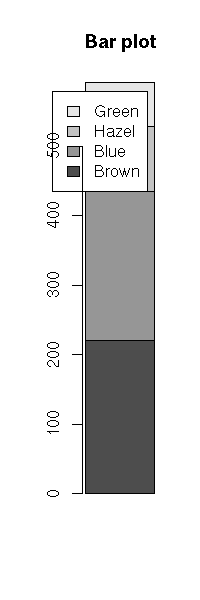

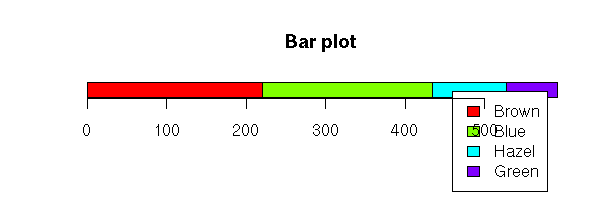

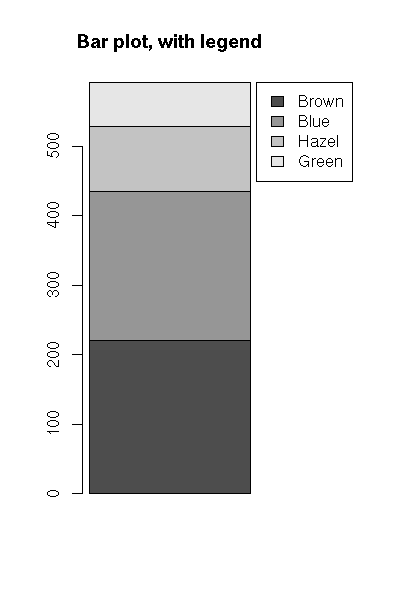

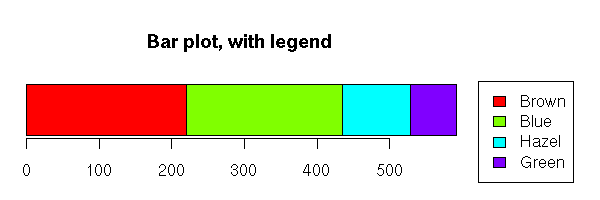

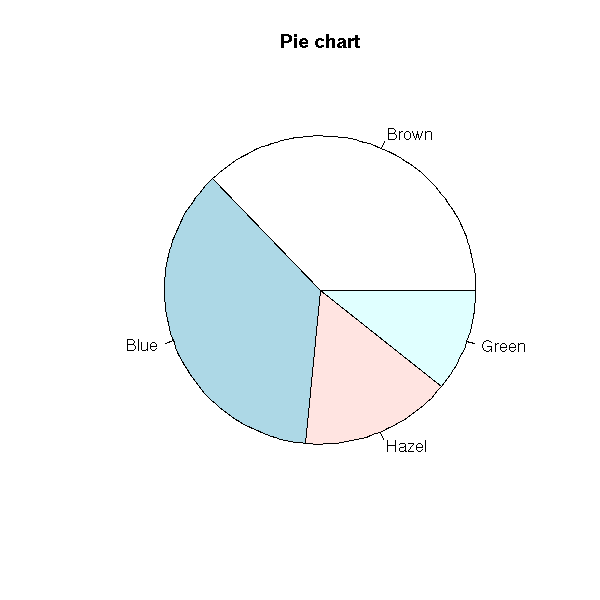

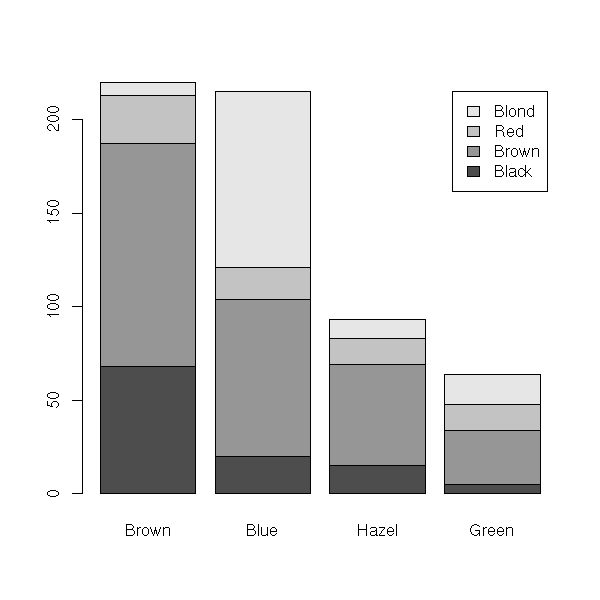

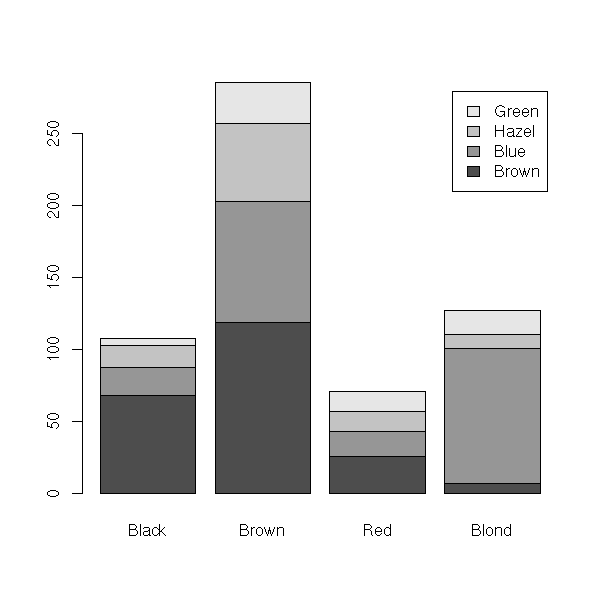

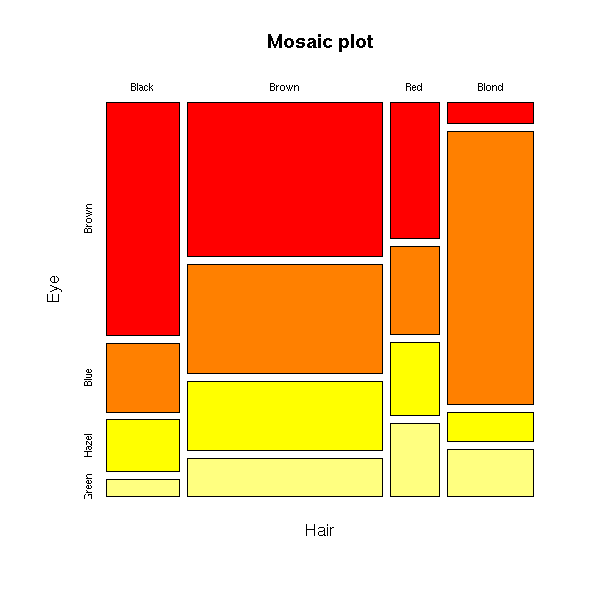

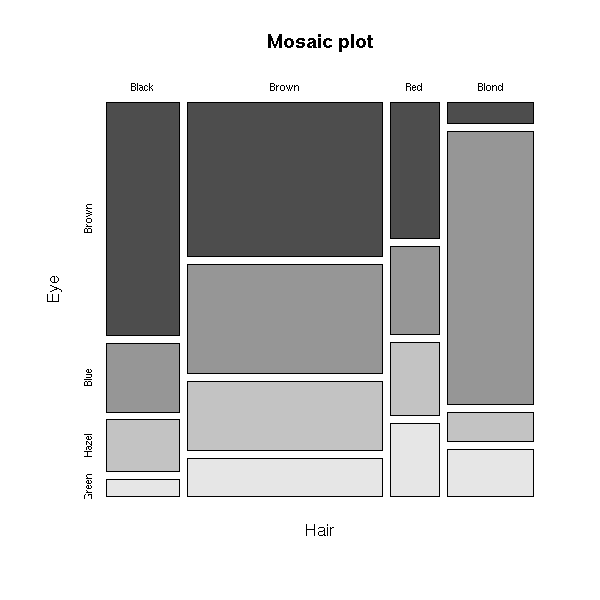

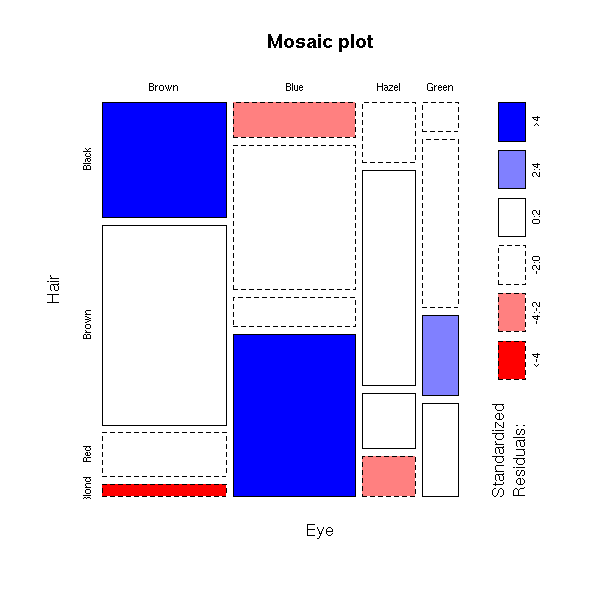

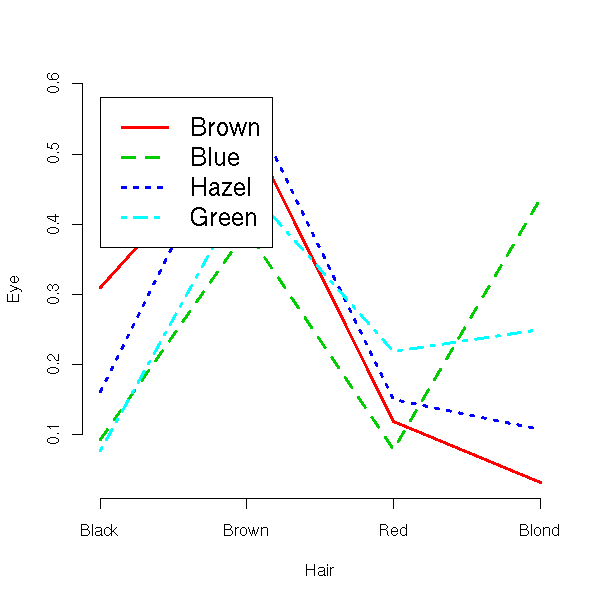

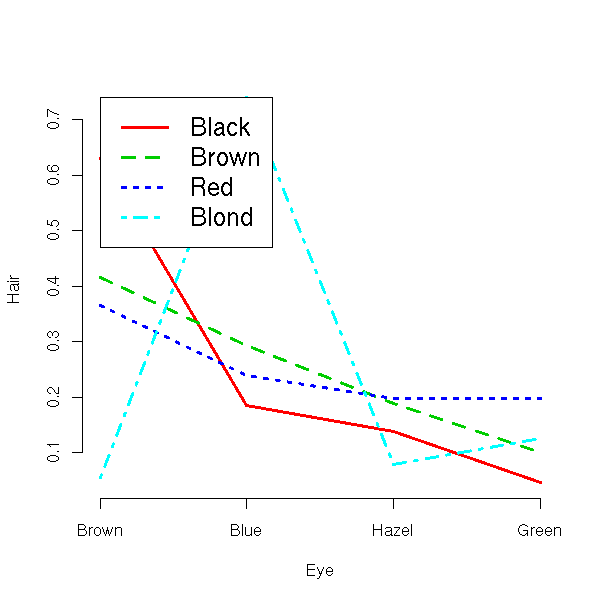

> data(HairEyeColor)

> HairEyeColor

, , Sex = Male

Eye

Hair Brown Blue Hazel Green

Black 32 11 10 3

Brown 38 50 25 15

Red 10 10 7 7

Blond 3 30 5 8

, , Sex = Female

Eye

Hair Brown Blue Hazel Green

Black 36 9 5 2

Brown 81 34 29 14

Red 16 7 7 7

Blond 4 64 5 8On the contrary, if you have both qualitative and quantitative variables, or if you have many qualitative variables, you will prefer the first representation.

> data(chickwts) > str(chickwts) `data.frame': 71 obs. of 2 variables: $ weight: num 179 160 136 227 217 168 108 124 143 140 ... $ feed : Factor w/ 6 levels "casein","horseb..",..: 2 2 2 2 2 2 2 2 2 2 ...

But let us stay, for the moment, with univariate data sets.