The R language

Data structures

Debugging

Object Oriented Programming: S3 Classes

Object Oriented Programming: S3 Classes

Data storage, Data import, Data export

Packages

Other languages

(Graphical) User Interface

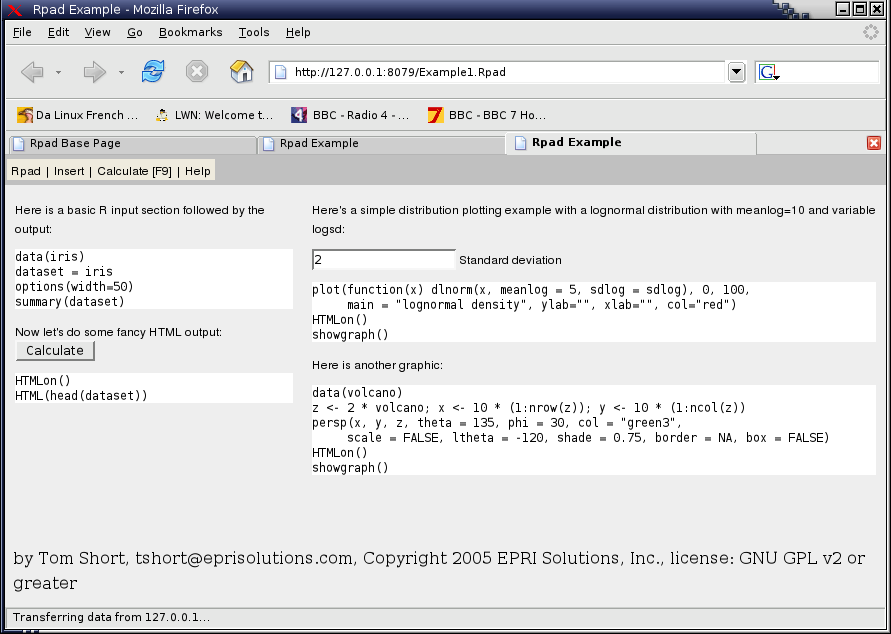

Web interface: Rpad

Web programming: RZope

Web services

Clusters, parallel programming

Miscellaneous

Numerical optimization

Miscellaneous

Dirty Tricks

In this part, after quickly listing the main characteristics of the language, we present the basic data types, how to create them, how to explore them, how to extract pieces of them, how to modify them.

We then jump to more advanced subjects (most of which can -- should? -- be omitted by first-time readers): debugging, profiling, namespaces, objects, interface with other programs, with data bases, with other languages.

Actually, R is a programming language: as such, we have the usual control structures (loops, conditionnals, recursion, etc.)

Conditionnal statements:

if(...) {

...

} else {

...

}Conditionnals may be used inside other constructions.

x <- if(...) 3.14 else 2.71

You can also construct vectors from conditionnal expressions, with the "ifelse" function.

x <- rnorm(100) y <- ifelse(x>0, 1, -1) z <- ifelse(x>0, 1, ifelse(x<0, -1, 0))

Switch (I do not like this command -- this is probably the last time you see it in this document):

x <- letters[floor(1+runif(1,0,4))]

y <- switch(x,

a='Bonjour',

b='Gutten Tag',

c='Hello',

d='Konnichi wa',

)For loop (we loop over the elements of a vector or list):

for (i in 1:10) {

...

if(...) { next }

...

if(...) { break }

...

}While loop:

while(...) {

...

}Repeat loop:

repeat {

...

if(...) { break }

...

}

R belongs to the family of functionnal languages (Lisp, OCaML, but also Python): the notion of function is central. In particular, if you need it, you can write functions that take other functions as argument -- and in case you wonder, yes, you need it.

A function is defined as follows.

f <- function(x) {

x^2 + x + 1

}The return value is the last value computed -- but you can also use the "return" function.

f <- function(x) {

return( x^2 + x + 1 )

}Arguments can have default values.

f <- function(x, y=3) { ... }When you call a function you can use the argument names, without any regard to their order (this is very useful for functions that expect many arguments -- in particular arguments with default values).

f(y=4, x=3.14)

After the arguments, in the definition of a function, you can put three dots represented the arguments that have not been specified and that can passed through another function (very often, the "plot" function).

f <- function(x, ...) {

plot(x, ...)

}But you can also use this to write functions that take an arbitrary number of arguments:

f <- function (...) {

query <- paste(...) # Concatenate all the arguments to form a string

con <- dbConnect(dDriver("SQLite"))

dbGetQuery(con, query)

dbDisconnect(con)

}

f <- function (...) {

l <- list(...) # Put the arguments in a (named) list

for (i in seq(along=l)) {

cat("Argument name:", names(l)[i], "Value:", l[[i]], "\n")

}

}Functions have NO SIDE EFFECTS: all the modifications are local. In particular, you cannot write a function that modifies a global variable. (Well, if you really want, you can: see the "Dirty Tricks" part -- but you should not).

To get the code of a function, you can just type its name -- wit no brackets.

> IQR function (x, na.rm = FALSE) diff(quantile(as.numeric(x), c(0.25, 0.75), na.rm = na.rm, names = FALSE)) <environment: namespace:stats>

But sometimes, it does not work that well: if we want to peer inside the "predict" function that we use for predictions of linear models, we get.

> predict

function (object, ...)

UseMethod("predict")

<environment: namespace:stats>This is a generic function: we can use the same function on different objects (lm for linear regression, glm for Poisson or logistic regression, lme for mixed models, etc.). The actual function called is "predict.Foo" where "Foo" is the class of the object given as a first argument.

> methods("predict")

[1] predict.ar* predict.Arima*

[3] predict.arima0* predict.glm

[5] predict.HoltWinters* predict.lm

[7] predict.loess* predict.mlm

[9] predict.nls* predict.poly

[11] predict.ppr* predict.prcomp*

[13] predict.princomp* predict.smooth.spline*

[15] predict.smooth.spline.fit* predict.StructTS*

Non-visible functions are asteriskedAs we wanted the one for the "lm" object, we just type (I do not include all the code, it would take several pages):

> predict.lm

function (object, newdata, se.fit = FALSE, scale = NULL, df = Inf,

interval = c("none", "confidence", "prediction"), level = 0.95,

type = c("response", "terms"), terms = NULL, na.action = na.pass,

...)

{

tt <- terms(object)

if (missing(newdata) || is.null(newdata)) {

mm <- X <- model.matrix(object)

mmDone <- TRUE

offset <- object$offset

(...)

else if (se.fit)

list(fit = predictor, se.fit = se, df = df, residual.scale = sqrt(res.var))

else predictor

}

<environment: namespace:stats>But if we wanted the "predict.prcomp" function (to add new observations to a principal component analysis), it does not work:

> predict.prcomp Error: Object "predict.prcomp" not found

The problem is that the function is in a given namespace (R functions are stored in "packages" and each function is hidden in a namespace; the functions that a normal user is likely to use directly are exported and visible -- but the others, that are not supposed to be invoked directly by the user are hidden, invisible). We can get it with the "getAnywhere" function (here again, I do not include all the resulting code).

> getAnywhere("predict.prcomp")

A single object matching "predict.prcomp" was found

It was found in the following places

registered S3 method for predict from namespace stats

namespace:stats

with value

function (object, newdata, ...)

{

if (missing(newdata)) {

(...)

}

<environment: namespace:stats>Alternatively, we can use the getS3Method function.

> getS3method("predict", "prcomp")

function (object, newdata, ...)

{

(...)Alternatively, if we know in which package a function (or any object, actually is), we can access it with the "::" operator if it is exported (it can be exported but hidden by another object with the same name) or the ":::" operator if it is not.

> stats::predict.prcomp

Error: 'predict.prcomp' is not an exported object from 'namespace:stats'

> stats:::predict.prcomp

function (object, newdata, ...)

{

(...)

> lm <- 1

> lm

[1] 1

> stats::lm

function (formula, data, subset, weights, na.action, method = "qr",

model = TRUE, x = FALSE, y = FALSE, qr = TRUE, singular.ok = TRUE,

contrasts = NULL, offset, ...)

(...)Things can get even more complicated. The most common reason you want to peer into the code of a function is to extract some information that gets printed when it is run (typically, a p-value when performing a regression). Actually, quite often, this information is not printed when the function is run: the function performs some computations and returns an object, with a certain class (with our example, this would be the "lm" function and the "lm" class) which is then printed, with the "print" function.

> print

function (x, ...)

UseMethod("print")

<environment: namespace:base>As the object belong to the "lm" class:

> print.lm

function (x, digits = max(3, getOption("digits") - 3), ...)

{

cat("\nCall:\n", deparse(x$call), "\n\n", sep = "")

if (length(coef(x))) {

cat("Coefficients:\n")

print.default(format(coef(x), digits = digits), print.gap = 2,

quote = FALSE)

}

else cat("No coefficients\n")

cat("\n")

invisible(x)

}

<environment: namespace:stats>Same for the "summary" function: it takes the result of a function (say, the result of the "lm" function), builds another object (here, of class "summary.lm") on which the "print" function is called.

> class(r)

[1] "lm"

> s <- summary(r)

> class(s)

[1] "summary.lm"

> summary

function (object, ...)

UseMethod("summary")

<environment: namespace:base>

> summary.lm

function (object, correlation = FALSE, symbolic.cor = FALSE, ...)

{

z <- object

p <-

(...)

> print.summary.lm

Error: Object "print.summary.lm" not found

> getAnywhere("print.summary.lm")

A single object matching "print.summary.lm" was found

It was found in the following places

registered S3 method for print from namespace stats

namespace:stats

with value

function (x, digits = max(3, getOption("digits") - 3), symbolic.cor = x$symbolic.cor,

signif.stars = getOption("show.signif.stars"), ...)

{

cat("\nCall:\n")

cat(paste(deparse(x$cal

(...)But it does not always work... There are two object-oriented programming paradigms in R: what we have explained works for the first (old, simple, understandandable) one. Here is an example for the other.

> class(r)

[1] "lmer"

attr(,"package")

[1] "lme4"

> print.lmer

Error: Object "print.lmer" not found

> getAnywhere("print.lmer")

no object named "print.lmer" was foundThe function is no longer called "print" but "show"...

> getMethod("show", "lmer")

Method Definition:

function (object)

show(new("summary.lmer", object, useScale = TRUE, showCorrelation = FALSE))

<environment: namespace:lme4>

Signatures:

object

target "lmer"

defined "lmer"In this case, it simply calls the "summary" function (with arguments that are not the default arguments) and the "show" on the result.

> getMethod("summary", "lmer")

Method Definition:

function (object, ...)

new("summary.lmer", object, useScale = TRUE, showCorrelation = TRUE)

<environment: namespace:lme4>

Signatures:

object

target "lmer"

defined "lmer"

> getMethod("show", "summary.lmer")

Method Definition:

function (object)

{

fcoef <- fixef(object)

useScale <- object@useScale

(...)

invisible(object)

}

<environment: namespace:lme4>

Signatures:

object

target "summary.lmer"

defined "summary.lmer"

Plotting functions are used for their side effect (the plot that appears on the screen), but they can also return a value.

That value can be the result of the computations that lead to the plot. Usually, you do not want the result to be printed, because most users will to see the plot and nothing else, and those who actually want the data, want it for further processing and will store it in a variable. To this end, you can return the value as invisible(): it will not be printed.

f <- function (x, y, N=10, FUN=median, ...) {

x <- cut(x, quantile(x, seq(0,1,length=N)), include.lowest=TRUE)

y <- tapply(y, x, FUN, na.rm=TRUE)

x <- levels(x)

plot(1:length(x), y, ...)

result <- cbind(x=x, y=y)

invisible(result)

}

f(rnorm(100), rnorm(100),

type="o", pch=15, xlab="x fractiles", ylab="y median", las=1)

res <- f(rnorm(100), rnorm(100),

type="o", pch=15, xlab="x fractiles", ylab="y median", las=1)

str(res)

res # Now its gets printedSome plotting functions return a "plotting object", that can be stored, modified and later plotted, with the print() function.

r <- xyplot(rnorm(10) ~ rnorm(10)) # Does not plot anything

print(r) # Plots the data

r # Plots the data: print() is implicitely called

str(r) # An object of class "treillis", so that print(r)

# actually calls

r$panel.args.common$pch <- 15 # Modify the plot

r # Replot it

The following operators mean what you thing they mean -- but they tend to be applied to vectors.

+ * / - ^ < <= > >= == !=

The boolean operators are !, & et | (but you can write && or || instead of | and &: the result is then a scalar, even if the arguments are vectors).

The : (colon) operator creates vectors.

> -5:7 [1] -5 -4 -3 -2 -1 0 1 2 3 4 5 6 7

The [ operator retrieves one or several elements of a vector, matrix, data frame or arrow.

> x <- floor(10*runif(10)) > x [1] 3 6 5 1 0 6 7 8 5 8 > x[3] [1] 5 > x[1:3] [1] 3 6 5 > x[c(1,2,5)] [1] 3 6 0

The $ operator retrieves an element in a list, with no need to put its name between quotes, contrary to the [[ operator. The interest of the [[ operator is that is argument can be a variable.

> op <- par() > op$col [1] "black" > op[["col"]] [1] "black" > a <- "col" > op[[a]] [1] "black"

Assignment is written "<-". Some people use "=" instead: this will work most of the time, but not always (for instance, in "try" statements) -- it is easier, safer and more readable to use "<-".

x <- 1.17 y <- c(1, 2, 3, 4)

The matrix product is %*%, tensor product (aka Kronecker product) is %x%.

> A <- matrix(c(1,2,3,4), nr=2, nc=2)

> J <- matrix(c(1,0,2,1), nr=2, nc=2)

> A

[,1] [,2]

[1,] 1 3

[2,] 2 4

> J

[,1] [,2]

[1,] 1 2

[2,] 0 1

> J %x% A

[,1] [,2] [,3] [,4]

[1,] 1 3 2 6

[2,] 2 4 4 8

[3,] 0 0 1 3

[4,] 0 0 2 4The %o% operator builds multiplication tables (it calls the "outer" function with the multiplication).

> A <- 1:5 > B <- 11:15 > names(A) <- A > names(B) <- B > A %o% B 11 12 13 14 15 1 11 12 13 14 15 2 22 24 26 28 30 3 33 36 39 42 45 4 44 48 52 56 60 5 55 60 65 70 75 > outer(A,B, '*') 11 12 13 14 15 1 11 12 13 14 15 2 22 24 26 28 30 3 33 36 39 42 45 4 44 48 52 56 60 5 55 60 65 70 75

Euclidian division is written %/%, its remainder %%.

> 1234 %% 3 [1] 1 > 1234 %/% 3 [1] 411 > 411*3 + 1 [1] 1234

"Set" membership is written %in%.

> 17 %in% 1:100 [1] TRUE > 17.1 %in% 1:100 [1] FALSE

The ~ and | operators are used to describe statistical model: more about them later.

For more details (and for the operators I have not mentionned, such as <<- -> ->> @ :: ::: _ =), read the manual.

?"+" ?"<" ?"<-" ?"!" ?"[" ?Syntax ?kronecker ?match library(methods) ?slot TODO: mention <<- (and the reverse ->, ->>)

You can also define your own operators: these are just 2-arguments functions whose name starts and ends with "%". The following example comes from the manual.

> "%w/o%" <- function(x,y) x[!x %in% y] > (1:10) %w/o% c(3,7,12) [1] 1 2 4 5 6 8 9 10

Other example, to turn a 2-argument function into an operator, that can be easily used for more than two arguments:

"%i%" <- intersect intersect(x,y) # Only two arguments intersect( intersect(x,y), z ) x %i% y %i% z

TODO: See below ("dirty tricks") for actual global variables -- avoid them

TODO: options(), par()

This is a tricky bit. Object Orientation was added to R as an afterthought -- even worse, it has been added twice.

The first flavour, S3 classes, is rather simple: you add a "class" attribute to a normal object (list, vector, etc.); you then define a "generic" (C++ programmers would say "virtual" function), e.g., "plot", that looks at the class of its first argument and dispatches the call to the right function (e.g., for an object of class "Foo", the plot.Foo() function would be called).

The second flavour, S4 classes, is more intricate: it tries to copy the paradigm used in most object-oriented programming languages. For large projects, it might be a good idea, but think carefully!

More recently, several packages suggested other ways of programming with objects within R: R.oo and proto

As all Matlab-like software (remember that "Matlab" stands for "Matrix Laboratory" -- it has noting to do with Mathematics), R handles tables of numbers. Yet, there are different kinds of tables: vectors (tables of dimension 1), matrices (tables of dimension 2), arrays (tables of any dimension), "Data Frames" (tables of dimension 2, in which each column may have a different type -- for instance, a table containing the results of an experiment, with one row per subject and one column per variable). We shall now present in more detail each of these, explain how to build them, to manipulate them, to transform them, to convert them -- in the next chapter, we shall plot them.

Here are several ways to define them (here, "c" stands for "concatenate").

> c(1,2,3,4,5) [1] 1 2 3 4 5 > 1:5 [1] 1 2 3 4 5 > seq(1, 5, by=1) [1] 1 2 3 4 5 > seq(1, 5, lenth=5) [1] 1 2 3 4 5

Here are several ways to select a part of a vector.

> x <- seq(-1, 1, by=.1) > x [1] -1.0 -0.9 -0.8 -0.7 -0.6 -0.5 -0.4 -0.3 -0.2 -0.1 0.0 0.1 0.2 0.3 0.4 [16] 0.5 0.6 0.7 0.8 0.9 1.0 > x[5:10] [1] -0.6 -0.5 -0.4 -0.3 -0.2 -0.1 > x[c(5,7:10)] [1] -0.6 -0.4 -0.3 -0.2 -0.1 > x[-(5:10)] # We remove the elements whose index lies between 5 and 10 [1] -1.0 -0.9 -0.8 -0.7 0.0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1.0 > x>0 [1] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE TRUE [13] TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE > x[ x>0 ] [1] 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1.0

We can name the coordinates of a vector -- and then access its elements by their names.

> names(x)

NULL

> names(x) <- letters[1:length(x)] # "letters" is a vector of strings,

# containing 26 lower case letters.

# There is also LETTERS for upper

# case letters.

> x

a b c d e f g h i j k l m n o p

-1.0 -0.9 -0.8 -0.7 -0.6 -0.5 -0.4 -0.3 -0.2 -0.1 0.0 0.1 0.2 0.3 0.4 0.5

q r s t u

0.6 0.7 0.8 0.9 1.0

> x["r"]

r

0.7One can also define those names while creating the vector.

> c(a=1, b=5, c=10, d=7) a b c d 1 5 10 7

A few operations on vectors:

> x <- rnorm(10)

> sort(x)

[1] -1.4159893 -1.1159279 -1.0598020 -0.2314716 0.3117607 0.5376470

[7] 0.6922798 0.9316789 0.9761509 1.1022298

> rev(sort(x))

[1] 1.1022298 0.9761509 0.9316789 0.6922798 0.5376470 0.3117607

[7] -0.2314716 -1.0598020 -1.1159279 -1.4159893

> o <- order(x)

> o

[1] 3 1 9 6 4 7 8 10 2 5

> x[ o[1:3] ]

[1] -1.415989 -1.115928 -1.059802

> x <- sample(1:5, 10, replace=T)

> x

[1] 1 4 5 3 1 3 4 5 3 1

> sort(x)

[1] 1 1 1 3 3 3 4 4 5 5

> unique(x) # We need not sort the data before (this contrasts

# with Unix's "uniq" command)

[1] 1 4 5 3Here are still other ways of creating vectors. The "seq" command generates arithmetic sequences.

> seq(0,10, length=11) [1] 0 1 2 3 4 5 6 7 8 9 10 > seq(0,10, by=1) [1] 0 1 2 3 4 5 6 7 8 9 10

The "rep" command repeats a number or a vector.

> rep(1,10) [1] 1 1 1 1 1 1 1 1 1 1 > rep(1:5,3) [1] 1 2 3 4 5 1 2 3 4 5 1 2 3 4 5

It can also repeat each element several times.

> rep(1:5,each=3) [1] 1 1 1 2 2 2 3 3 3 4 4 4 5 5 5

We can mix the two previous operations.

> rep(1:5,2,each=3) [1] 1 1 1 2 2 2 3 3 3 4 4 4 5 5 5 1 1 1 2 2 2 3 3 3 4 4 4 5 5 5

The "gl" command serves a comparable purpose, mainly to create factors -- more about this in a few pages.

A factor is a vector coding for a qualitatitative variable (a qualitative variable is a non-numeric variable, such as gender, color, species, etc. -- or, at least, a variable whose actual numeric values are meaningless, for example, zip codes). We can create them with the "factor" command.

> x <- factor( sample(c("Yes", "No", "Perhaps"), 5, replace=T) )

> x

[1] Perhaps Perhaps Perhaps Perhaps No

Levels: No PerhapsWe can specify the list of acceptable values, or "levels" of this factor.

> l <- c("Yes", "No", "Perhaps")

> x <- factor( sample(l, 5, replace=T), levels=l )

> x

[1] No Perhaps No Yes Yes

Levels: Yes No Perhaps

> levels(x)

[1] "Yes" "No" "Perhaps"One can summarize a factor with a contingency table.

> table(x)

x

Yes No Perhaps

2 2 1We can create a factor that follows a certain pattern with the "gl" command.

> gl(1,4) [1] 1 1 1 1 Levels: 1 > gl(2,4) [1] 1 1 1 1 2 2 2 2 Levels: 1 2 > gl(2,4, labels=c(T,F)) [1] TRUE TRUE TRUE TRUE FALSE FALSE FALSE FALSE Levels: TRUE FALSE > gl(2,1,8) [1] 1 2 1 2 1 2 1 2 Levels: 1 2 > gl(2,1,8, labels=c(T,F)) [1] TRUE FALSE TRUE FALSE TRUE FALSE TRUE FALSE Levels: TRUE FALSE

The "interaction" command builds a new factor by concatenating the levels of two factors.

> x <- gl(2,4) > x [1] 1 1 1 1 2 2 2 2 Levels: 1 2 > y <- gl(2,1,8) > y [1] 1 2 1 2 1 2 1 2 Levels: 1 2 > interaction(x,y) [1] 1.1 1.2 1.1 1.2 2.1 2.2 2.1 2.2 Levels: 1.1 2.1 1.2 2.2 > data.frame(x,y, int=interaction(x,y)) x y int 1 1 1 1.1 2 1 2 1.2 3 1 1 1.1 4 1 2 1.2 5 2 1 2.1 6 2 2 2.2 7 2 1 2.1 8 2 2 2.2

The "expand.grid" computes a cartesian product (and yields a data.frame).

> x <- c("A", "B", "C")

> y <- 1:2

> z <- c("a", "b")

> expand.grid(x,y,z)

Var1 Var2 Var3

1 A 1 a

2 B 1 a

3 C 1 a

4 A 2 a

5 B 2 a

6 C 2 a

7 A 1 b

8 B 1 b

9 C 1 b

10 A 2 b

11 B 2 b

12 C 2 bWhen playing with factors, people sometimes want to turn them into numbers. This can be ambiguous and/or dangerous.

> x <- factor(c(3,4,5,1)) > as.numeric(x) # No NOT do that [1] 2 3 4 1 > x [1] 3 4 5 1 Levels: 1 3 4 5

What you get is the numbers internally used to code the various levels of the factor -- and it depends on the order of the factors...

Instead, try one of the following:

> x [1] 3 4 5 1 Levels: 1 3 4 5 > levels(x)[ x ] [1] "3" "4" "5" "1" > as.numeric( levels(x)[ x ] ) [1] 3 4 5 1 > as.numeric( as.character(x) ) # probably slower [1] 3 4 5 1

TODO

The missing values are coded as "NA" (it stands for "Not Available").

> x <- c(1,5,9,NA,2) > x [1] 1 5 9 NA 2

The default behaviour of many functions is to reject data containing missing values -- this is natural when the result would depend on the missing value, were it not missing.

> mean(x) [1] NA

But of course, you can ask R to first remove the missing values.

> mean(x, na.rm=T) [1] 4.25

You can do that yourself with the "na.omit" function.

> x [1] 1 5 9 NA 2 > na.omit(x) [1] 1 5 9 2 attr(,"na.action") [1] 4 attr(,"class") [1] "omit"

This also works with data.frames -- it discards the rows containing at least one missing value.

> d <- data.frame(x, y=rev(x)) > d x y 1 1 2 2 5 NA 3 9 9 4 NA 5 5 2 1 > na.omit(d) x y 1 1 2 3 9 9 5 2 1

You should NOT use missing values in boolean tests: if you test wether two numbers are equal, and if one (or both) is (are) missing, then you cannot conclude: the result will be NA.

> x

[1] 1 5 9 NA 2

> x == 5

[1] FALSE TRUE FALSE NA FALSE

> x == NA # If we compare with something unknown, the

# result is unknown.

[1] NA NA NA NA NATo test if a value is missing, use the "is.na" function.

> is.na(x) [1] FALSE FALSE FALSE TRUE FALSE

This is not the only way of getting non-numeric values in a numeric vector: you can also get +Inf, -Inf (positive and negative infinites), and NaN (Not a Number).

> x <- c(-1, 0,1,2,NA)

> cbind(X=x, LogX=log(x))

X LogX

[1,] -1 NaN

[2,] 0 -Inf

[3,] 1 0.0000000

[4,] 2 0.6931472

[5,] NA NA

Warning message:

NaNs produced in: log(x)You can check wether a numeric value is actually numeric with the "is.finite" function.

> is.finite(log(x)) [1] FALSE FALSE TRUE TRUE FALSE

A data frame may be seen as a list of vectors, each with the same length. Usually, the table has one row for each subject in the experiment, and one column for each variable measured in the experiement -- as the different variables measure different things, they maight have different types: some will be quantitative (numbers; each column may contain a measurement in a different unit), others will be qualitative (i.e., factors).

> n <- 10 > df <- data.frame( x=rnorm(n), y=sample(c(T,F),n,replace=T) )

The "str" command prints out the structure of an object (any object) and display a part of the data it contains.

> str(df) `data.frame': 10 obs. of 2 variables: $ x: num 0.515 -1.174 -0.523 -0.146 0.410 ... $ y: logi FALSE TRUE FALSE FALSE FALSE TRUE FALSE FALSE FALSE FALSE

The "summary" command print concise information about an object (here, a data.frame, but it could be anything).

> summary(df)

x y

Min. :-1.17351 Length:10

1st Qu.:-0.42901 Mode :logical

Median : 0.13737

Mean : 0.09217

3rd Qu.: 0.48867

Max. : 1.34213

> df

x y

1 0.51481130 FALSE

2 -1.17350867 TRUE

3 -0.52338041 FALSE

4 -0.14589347 FALSE

5 0.41022626 FALSE

6 1.34213009 TRUE

7 0.77715729 FALSE

8 -0.55460889 FALSE

9 -0.03843468 FALSE

10 0.31318467 FALSEDifferent ways to access the columns of a data.frame.

> df$x [1] 0.51481130 -1.17350867 -0.52338041 -0.14589347 0.41022626 1.34213009 [7] 0.77715729 -0.55460889 -0.03843468 0.31318467 > df[,1] [1] 0.51481130 -1.17350867 -0.52338041 -0.14589347 0.41022626 1.34213009 [7] 0.77715729 -0.55460889 -0.03843468 0.31318467 > df[["x"]] [1] 0.51481130 -1.17350867 -0.52338041 -0.14589347 0.41022626 1.34213009 [7] 0.77715729 -0.55460889 -0.03843468 0.31318467 > dim(df) [1] 10 2 > names(df) [1] "x" "y" > row.names(df) [1] "1" "2" "3" "4" "5" "6" "7" "8" "9" "10"

One may change the colomn/row names.

> names(df) <- c("a", "b")

> row.names(df) <- LETTERS[1:10]

> names(df)

[1] "a" "b"

> row.names(df)

[1] "A" "B" "C" "D" "E" "F" "G" "H" "I" "J"

> str(df)

`data.frame': 10 obs. of 2 variables:

$ a: num 0.515 -1.174 -0.523 -0.146 0.410 ...

$ b: logi FALSE TRUE FALSE FALSE FALSE TRUE FALSE FALSE FALSE FALSEWe can turn the columns the data.frame into actual variables with the "attach" command (it is the same principle as namespaces in C++). Do not forget to "detach" the data.frame after use.

> data(faithful) > str(faithful) `data.frame': 272 obs. of 2 variables: $ eruptions: num 3.60 1.80 3.33 2.28 4.53 ... $ waiting : num 79 54 74 62 85 55 88 85 51 85 ... > attach(faithful) > str(eruptions) num [1:272] 3.60 1.80 3.33 2.28 4.53 ... > detach()

The "merge" command joins two data frames -- it is the same JOIN as in Databases. More precisely you have two data frames a (with columns x, y, z) and b (with columns x1, x2, y,z) and certain observations (rows) of a correspond to certain observations of b: the command merges them to yield a data frame with columns x, x1, x2, y, z. In this example, the command

merge(a,b)

is equivalent to the SQL command

SELECT * FROM a,b WHERE a.y = b.y AND a.z = b.z

In SQL, this is called an inner join can also be written as

SELECT * FROM a INNER JOIN b ON a.y = b.y AND a.z = b.z

There are several types of SQL JOINs: in an INNER JOIN, we only get the rows that are present in both tables; in a LEFT JOIN, we get all the elements of the first table and the corresponding elements of the second (if any); a RIGHT JOIN is the opposite; an OUTER JOIN is the union of the LEFT and RIGHT JOINs. In R, you can get the other types of JOIN with the "all", "all.x" and "all.y" arguments.

merge(x, y, all.x = TRUE) # LEFT JOIN merge(x, y, all.y = TRUE) # RIGHT JOIN merge(x, y, all = TRUE) # OUTER JOIN

By default, the join is over the columns common present in both data frames, but you can restrict it to a subset of them, with the "by" argument.

merge(a, b, by=c("y", "z"))Data frames are often used to store data to be analyzed. We shall detail those examples later -- do not be frightened if you have never heard of "regression", we shall shortly demystify this notion.

# Regression data(cars) # load the "cars" data frame lm( dist ~ speed, data=cars) # Polynomial regression lm( dist ~ poly(speed,3), data=cars) # Regression with splines library(Design) lm( y ~ rcs(x) ) # TODO: Find some data # Logistic regression glm(y ~ x1 + x2, family=binomial, data=...) # TODO: Find some data library(Design) lrm(death ~ blood.presure + age) # TODO: Find some data # Non linear regression nls( y ~ a + b * exp(c * x), start = c(a=1, b=1, c=-1) ) # TODO: Find some data ?selfStart # Principal Component Analysis data(USArrest) princomp( ~ Murder + Assault + UrbanPop, data=USArrest) # Treillis graphics xyplot( x ~ y | group ) # TODO: Find some data

We shall see in a separate section how to transform data frames, because there are several ways of putting the result of an experiment in a table -- but usually, we shall prefer the one with the most rows and the fewer columns.

Some people may advise you to use the "subset" command to extract subsets of a data.frame. Actually, you can do the same thing with the basic subsetting syntax -- which is more general: the "subset" function is but a convenience wrapper around it.

d[ d$subject == "laika", ] d[ d$day %in% c(1, 3, 9, 10, 11), ] d[ d$value < .1 | d$value > .9, ] d[ d$x < d$y, ]

TODO

d <- data.frame(...) as.matrix(d) data.matrix(d)

Vectors only contain simple types (numbers, booleans or strings); lists, on the contrary, may contain anything, for instance sata frames or other lists. They can be used to store complex data, for instamce, trees. They can also be used, simply, as hash tables.

> h <- list()

> h[["foo"]] <- 1

> h[["bar"]] <- c("a", "b", "c")

> str(h)

List of 2

$ foo: num 1

$ bar: chr [1:3] "a" "b" "c"You can access one element with the "[[" operator, you can access several elements with the "[" operator.

> h[["bar"]]

[1] "a" "b" "c"

> h[[2]]

[1] "a" "b" "c"

> h[1:2]

$foo

[1] 1

$bar

[1] "a" "b" "c"

> h[2] # Beware, the result is not the second element, but a

# list containing this second element.

$bar

[1] "a" "b" "c"

> str(h[2])

List of 1

$ bar: chr [1:3] "a" "b" "c"For instance, the graphic parameters are stored in a list, used as a hash table.

> str( par() ) List of 68 $ adj : num 0.5 $ ann : logi TRUE $ ask : logi FALSE $ bg : chr "transparent" $ bty : chr "o" $ cex : num 1 $ cex.axis : num 1 $ cex.lab : num 1 $ cex.main : num 1.2 $ cex.sub : num 1 $ cin : num [1:2] 0.147 0.200 ... $ xpd : logi FALSE $ yaxp : num [1:3] 0 1 5 $ yaxs : chr "r" $ yaxt : chr "s" $ ylog : logi FALSE

The results of most statistical analyses is not a simple number or array, but a list containing all the relevant values.

> n <- 100 > x <- rnorm(n) > y <- 1 - 2 * x + rnorm(n) > r <- lm(y~x) > str(r) List of 12 $ coefficients : Named num [1:2] 0.887 -2.128 ..- attr(*, "names")= chr [1:2] "(Intercept)" "x" $ residuals : Named num [1:100] 0.000503 0.472182 -1.079153 -2.423841 0.168424 ... ..- attr(*, "names")= chr [1:100] "1" "2" "3" "4" ... $ effects : Named num [1:100] -9.5845 -19.5361 -1.0983 -2.5001 0.0866 ... ..- attr(*, "names")= chr [1:100] "(Intercept)" "x" "" "" ... $ rank : int 2 $ fitted.values: Named num [1:100] 0.67 1.65 1.75 4.20 4.44 ... ..- attr(*, "names")= chr [1:100] "1" "2" "3" "4" ... $ assign : int [1:2] 0 1 $ qr :List of 5 ..$ qr : num [1:100, 1:2] -10 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 ... .. ..- attr(*, "dimnames")=List of 2 .. .. ..$ : chr [1:100] "1" "2" "3" "4" ... .. .. ..$ : chr [1:2] "(Intercept)" "x" .. ..- attr(*, "assign")= int [1:2] 0 1 ..$ qraux: num [1:2] 1.10 1.04 ..$ pivot: int [1:2] 1 2 ..$ tol : num 1e-07 ..$ rank : int 2 ..- attr(*, "class")= chr "qr" ... > str( summary(r) ) List of 11 $ call : language lm(formula = y ~ x) $ terms :Classes 'terms', 'formula' length 3 y ~ x .. ..- attr(*, "variables")= language list(y, x) .. ..- attr(*, "factors")= int [1:2, 1] 0 1 .. .. ..- attr(*, "dimnames")=List of 2 .. .. .. ..$ : chr [1:2] "y" "x" .. .. .. ..$ : chr "x" .. ..- attr(*, "term.labels")= chr "x" .. ..- attr(*, "order")= int 1 .. ..- attr(*, "intercept")= int 1 .. ..- attr(*, "response")= int 1 .. ..- attr(*, ".Environment")=length 6 <environment> .. ..- attr(*, "predvars")= language list(y, x) .. ..- attr(*, "dataClasses")= Named chr [1:2] "numeric" "numeric" .. .. ..- attr(*, "names")= chr [1:2] "y" "x" $ residuals : Named num [1:100] 0.000503 0.472182 -1.079153 -2.423841 0.168424 ... ..- attr(*, "names")= chr [1:100] "1" "2" "3" "4" ... ...

To delete an element from a list:

> h[["bar"]] <- NULL > str(h) List of 1 $ foo: num 1

Matrices are 2-dimensional tables, but contrary to data frames (whose type may vary from one column to the next), their elements all have the same type.

A matrix:

> m <- matrix( c(1,2,3,4), nrow=2 )

> m

[,1] [,2]

[1,] 1 3

[2,] 2 4Caution: by default, the elements of a matrix are given vertically, column after column.

> matrix( 1:3, nrow=3, ncol=3 )

[,1] [,2] [,3]

[1,] 1 1 1

[2,] 2 2 2

[3,] 3 3 3

> matrix( 1:3, nrow=3, ncol=3, byrow=T )

[,1] [,2] [,3]

[1,] 1 2 3

[2,] 1 2 3

[3,] 1 2 3

> t(matrix( 1:3, nrow=3, ncol=3 ))

[,1] [,2] [,3]

[1,] 1 2 3

[2,] 1 2 3

[3,] 1 2 3Matrix product (beware: A * B is an element-by-element product):

> x <- matrix( c(6,7), nrow=2 )

> x

[,1]

[1,] 6

[2,] 7

> m %*% x

[,1]

[1,] 27

[2,] 40Determinant:

> det(m) [1] -2

Transpose:

> t(m)

[,1] [,2]

[1,] 1 2

[2,] 3 4A diagonal matrix:

> diag(c(1,2))

[,1] [,2]

[1,] 1 0

[2,] 0 2Identity matrix (or, more generally, a scalar matrix, i.e., the matrix of a homothety):

> diag(1,2)

[,1] [,2]

[1,] 1 0

[2,] 0 1

> diag(rep(1,2))

[,1] [,2]

[1,] 1 0

[2,] 0 1

> diag(2)

[,1] [,2]

[1,] 1 0

[2,] 0 1There is also a "Matrix" package, in case you prefer a full object-oriented framework and/or you need other operations on matrices.

library(help=Matrix)

We have already seen the "cbind" and "rbind" functions that put data frames side by side or on top of each other: they also work with matrices.

> cbind( c(1,2), c(3,4) )

[,1] [,2]

[1,] 1 3

[2,] 2 4

> rbind( c(1,3), c(2,4) )

[,1] [,2]

[1,] 1 3

[2,] 2 4The trace of a matrix:

> sum(diag(m)) [1] 5

The inverse of a matrix:

> solve(m)

[,1] [,2]

[1,] -2 1.5

[2,] 1 -0.5Actually, one rarely need the inverse of a matrix -- we usually just want to multiply a given vector by this inverse: this operation is simpler, faster and numerically more stable.

> solve(m, x)

[,1]

[1,] -1.5

[2,] 2.5

> solve(m) %*% x

[,1]

[1,] -1.5

[2,] 2.5Eigenvalues:

> eigen(m)$values [1] 5.3722813 -0.3722813

Eigenvectors:

> eigen(m)$vectors

[,1] [,2]

[1,] -0.5742757 -0.9093767

[2,] -0.8369650 0.4159736Let us check that the matrix has actually been diagonalized:

> p <- eigen(m)$vectors

> d <- diag(eigen(m)$values)

> p %*% d %*% solve(p)

[,1] [,2]

[1,] 1 3

[2,] 2 4It might be the good moment to recall the main matrix decompositions.

The LU decomposition, or more precisely, the PA = LDU decomposition (P: permutation matrix; L, U: lower (or upper) triangular matrix, with 1's on the diagonal) expresses the result of Gauss's Pivot Algorithm (L contains the operations on the lines; D contains the pivots).

We do not really need it, because the Pivot Algorithm is already implemented in the "solve" command.

The Choleski decomposition is a particular case of the LU decomposition: if A is real symetric definite positive matrix, it can be written as B * B' where B is upper triangular. It is used to solve linear systems AX=Y where A is symetric positive -- this is the case for the equations defining least squares estimators.

We shall see later another application to the simulation of non independant normal variables, with a given variance-covariance matrix.

When you look at them, matrices are rather complicated (there are a lot of coefficients). However, if you look at the way they act on vectors, it looks rather simple: they often seem to extend or shrink the vectors, depending on their direction. A matrix M of size n is said to be diagonalizable if there exists a basis e_1,...,e_n of R^n so that M e_i = lambda_i e_i for all i, for some (real or sometimes complex) numbers. Geometrically, it means that, in the direction of each e_i, the matrix acts like a homothety. The e_i are said to be eigen vectors of the matrix M, the lambda_i are said to be its eigen values. in matrix terms, this means that there exists an invertible matrix P (whose columns will be the eigen vectors) and a diagonal matrix D (whose elements will be the corresponding eigen values) such that

M = P D P^-1.

Diagonalizable matrices sound good, but there may still be a few problems. First, the eigen values (and the eigen vectors) can be complex -- if you want to interpret them as a real-world quantity, it is a bad start. However, the matrices you will want to diagonalize are often symetric real matrices: they are diagonalizable with real eigen values (and eigen vectors). Second, not all matrices are diagonalizable. For instance,

1 1 0 1

is not diagonalizable. However, the set of non-diagonalizable matrices has zero measure: in particular, if you take a matrix at random, in some "reasonable" way ("reasonable" means "along a probability measure absolutely continuous with respect to the Lebesgue measure on the set of square matrices of size n), the probability that it be diagonalizable (over the complex numbers) is 1 -- we say that matrices are almost surely diagonalizable.

Should you be interested in the rare cases when the matrices are not diagonalizable (for instance, if you are interested in matrices with integer, bounded coefficients), you can look into the Jordan decomposition, that generalizes the diagonalization and works with any matrix.

There are also many decompositions based on the matrix t(A)*A.

The A=QR decomposition (R: upper triangular, Q: unitary) expresses the Gram-Schmidt orthonormalization of the columns of A -- we can compute it from the LU decomposition of t(A)*A.

?qr

It may be used to do a regression:

Model: Y = b X + noise X = QR \hat Y = Q Q' Y b = R^-1 Q' Y

The Singular Value Decomposition (SVD) A=Q1*S*Q2 (Q1, Q2: matrices containing the eigenvectors of A*t(A) and t(A)*A; S: diagonal matrix containing the square roots of the eigenvalues of A*t(A) or t(A)*A (they are the same)) which yields, when A is symetrix, its diagonalization in an orthonormal basis; it also used in the computation of the pseudo inverse. This decomposition may also be seen as a sum of matrices of rank 1, such that the first matrices in this sum approximate "best" the initial matrix.

?svd

The polar decomposition, A=QR (Q: orthogonal, R: symetric positive definite) is an analogue of the polar decomposition of a complex number: it decomposes the correspondiong linear transformation into rotation and "stretching". We can meet this decomposition in Least Squares Estimates: when we try to minimize the absolute value of Ax-b, this amounts to solve

t(A) A x = t(A) b

(Usually, t(A)*A is invertible, otherwise we would use pseudo-inverses.)

TODO: speak a bit more about pseudo-inverses.

There is also an "array" type, that generalizes matrices in higher dimensions.

> d <- array(rnorm(3*3*2), dim=c(3,3,2))

> d

, , 1

[,1] [,2] [,3]

[1,] 0.97323599 -0.7319138 -0.7355852

[2,] 0.06624588 -0.5732781 -0.4133584

[3,] 1.65808464 -1.3011671 -0.4556735

, , 2

[,1] [,2] [,3]

[1,] 0.6314685 0.6263645 1.2429024

[2,] -0.2562622 -1.5338054 0.9634999

[3,] 0.1652014 -0.9791350 -0.2040375

> str(d)

num [1:3, 1:3, 1:2] 0.9732 0.0662 1.6581 -0.7319 -0.5733 ...Contigency tables are arrays (computed with the "table" function), when there are more than two variables.

> data(HairEyeColor)

> HairEyeColor

, , Sex = Male

Eye

Hair Brown Blue Hazel Green

Black 32 11 10 3

Brown 38 50 25 15

Red 10 10 7 7

Blond 3 30 5 8

, , Sex = Female

Eye

Hair Brown Blue Hazel Green

Black 36 9 5 2

Brown 81 34 29 14

Red 16 7 7 7

Blond 4 64 5 8

> str(HairEyeColor)

table [1:4, 1:4, 1:2] 32 38 10 3 11 50 10 30 10 25 ...

- attr(*, "dimnames")=List of 3

..$ Hair: chr [1:4] "Black" "Brown" "Red" "Blond"

..$ Eye : chr [1:4] "Brown" "Blue" "Hazel" "Green"

..$ Sex : chr [1:2] "Male" "Female"

- attr(*, "class")= chr "table"It says "table", but a "table" is an "array":

> is.array(HairEyeColor) [1] TRUE

One may attach meta-data to an object: these are called "attributes". For instance, names of the elements of a list are in an attribute.

> l <- list(a=1, b=2, c=3) > str(l) List of 3 $ a: num 1 $ b: num 2 $ c: num 3 > attributes(l) $names [1] "a" "b" "c"

Similarly, the row and columns names of a data.frame

> a <- data.frame(a=1:2, b=3:4) > str(a) `data.frame': 2 obs. of 2 variables: $ a: int 1 2 $ b: int 3 4 > attributes(a) $names [1] "a" "b" $row.names [1] "1" "2" $class [1] "data.frame" > a <- matrix(1:4, nr=2) > rownames(a) <- letters[1:2] > colnames(a) <- LETTERS[1:2] > str(a) int [1:2, 1:2] 1 2 3 4 - attr(*, "dimnames")=List of 2 ..$ : chr [1:2] "a" "b" ..$ : chr [1:2] "A" "B" > attributes(a) $dim [1] 2 2 $dimnames $dimnames[[1]] [1] "a" "b" $dimnames[[2]] [1] "A" "B" > data(HairEyeColor) > str(HairEyeColor) table [1:4, 1:4, 1:2] 32 38 10 3 11 50 10 30 10 25 ... - attr(*, "dimnames")=List of 3 ..$ Hair: chr [1:4] "Black" "Brown" "Red" "Blond" ..$ Eye : chr [1:4] "Brown" "Blue" "Hazel" "Green" ..$ Sex : chr [1:2] "Male" "Female" - attr(*, "class")= chr "table"

It is also used to hold the code of a function if you want to keep the comments.

> f <- function (x) {

+ # Useless function

+ x + 1

+ }

> f

function (x) {

# Useless function

x + 1

}

> str(f)

function (x)

- attr(*, "source")= chr [1:4] "function (x) {" ...

> attr(f, "source") <- NULL

> str(f)

function (x)

> f

function (x)

{

x + 1

}Some people even suggest to use this to "hide" code -- but choosing an interpreted language is a very bad idea if you want to hide your code.

> attr(f, "source") <- "Forbidden" > f Forbidden > attr(f, "source") <- "Remember to use brackets to call a function, e.g., f()" > f Remember to use brackets to call a function, e.g., f()

Typically, when the data has a complex structure, you use a list; but when the bulk of the data has a very simple, table-like structure, you store it in an array or data frame and put the rest in the attributes. For instance, here is a chunk of an "lm" object (the result of a regression):

> str(r$model) `data.frame': 100 obs. of 2 variables: $ y: num 5.087 -1.587 -0.637 2.023 2.207 ... $ x: num -1.359 0.993 0.587 -0.627 -0.853 ... - attr(*, "terms")=Classes 'terms', 'formula' length 3 y ~ x .. ..- attr(*, "variables")= language list(y, x) .. ..- attr(*, "factors")= int [1:2, 1] 0 1 .. .. ..- attr(*, "dimnames")=List of 2 .. .. .. ..$ : chr [1:2] "y" "x" .. .. .. ..$ : chr "x" .. ..- attr(*, "term.labels")= chr "x" .. ..- attr(*, "order")= int 1 .. ..- attr(*, "intercept")= int 1 .. ..- attr(*, "response")= int 1 .. ..- attr(*, ".Environment")=length 149 <environment> .. ..- attr(*, "predvars")= language list(y, x) .. ..- attr(*, "dataClasses")= Named chr [1:2] "numeric" "numeric" .. .. ..- attr(*, "names")= chr [1:2] "y" "x"

We shall soon see another application of attributes: the notion of class -- the class of an object is just the value of its "class" attribute, if any.

If you want to use a complex object, obtained as the result of a certain command, by extracting some of its elements, or if you want to browse through it, the printing command is not enough: we need other means to peer inside an object.

The "unclass" command removes the class of an object: only remains the underlying type (usually, "list"). As a result, it is printed by the "print.default" function that displays its actual contents.

> data(USArrests)

> r <- princomp(USArrests)$loadings

> r

Loadings:

Comp.1 Comp.2 Comp.3 Comp.4

Murder 0.995

Assault -0.995

UrbanPop -0.977 -0.201

Rape -0.201 0.974

Comp.1 Comp.2 Comp.3 Comp.4

SS loadings 1.00 1.00 1.00 1.00

Proportion Var 0.25 0.25 0.25 0.25

Cumulative Var 0.25 0.50 0.75 1.00

> class(r)

[1] "loadings"

> unclass(r)

Comp.1 Comp.2 Comp.3 Comp.4

Murder -0.04170432 0.04482166 0.07989066 0.99492173

Assault -0.99522128 0.05876003 -0.06756974 -0.03893830

UrbanPop -0.04633575 -0.97685748 -0.20054629 0.05816914

Rape -0.07515550 -0.20071807 0.97408059 -0.07232502You cound also directly use the "print.default" function.

> print.default(r)

Comp.1 Comp.2 Comp.3 Comp.4

Murder -0.04170432 0.04482166 0.07989066 0.99492173

Assault -0.99522128 0.05876003 -0.06756974 -0.03893830

UrbanPop -0.04633575 -0.97685748 -0.20054629 0.05816914

Rape -0.07515550 -0.20071807 0.97408059 -0.07232502

attr(,"class")

[1] "loadings"The "str" function prints the contents of an objects and truncates all the vectors it encounters: thus, you can peer into large objects.

> str(r) loadings [1:4, 1:4] -0.0417 -0.9952 -0.0463 -0.0752 0.0448 ... - attr(*, "dimnames")=List of 2 ..$ : chr [1:4] "Murder" "Assault" "UrbanPop" "Rape" ..$ : chr [1:4] "Comp.1" "Comp.2" "Comp.3" "Comp.4" - attr(*, "class")= chr "loadings" > str(USArrests) `data.frame': 50 obs. of 4 variables: $ Murder : num 13.2 10 8.1 8.8 9 7.9 3.3 5.9 15.4 17.4 ... $ Assault : int 236 263 294 190 276 204 110 238 335 211 ... $ UrbanPop: int 58 48 80 50 91 78 77 72 80 60 ... $ Rape : num 21.2 44.5 31 19.5 40.6 38.7 11.1 15.8 31.9 25.8 ...

Finally, to get an idea of what you can do with an object, you can always look the code of its "print" or "summary" methods.

> print.lm

function (x, digits = max(3, getOption("digits") - 3), ...)

{

cat("\nCall:\n", deparse(x$call), "\n\n", sep = "")

if (length(coef(x))) {

cat("Coefficients:\n")

print.default(format(coef(x), digits = digits), print.gap = 2,

quote = FALSE)

}

else cat("No coefficients\n")

cat("\n")

invisible(x)

}

<environment: namespace:base>

> summary.lm

function (object, correlation = FALSE, symbolic.cor = FALSE,

...)

{

z <- object

p <- z$rank

if (p == 0) {

r <- z$residuals

n <- length(r)

etc.

> print.summary.lm

function (x, digits = max(3, getOption("digits") - 3), symbolic.cor = x$symbolic.cor,

signif.stars = getOption("show.signif.stars"), ...)

{

cat("\nCall:\n")

cat(paste(deparse(x$call), sep = "\n", collapse = "\n"),

"\n\n", sep = "")

resid <- x$residuals

df <- x$df

rdf <- df[2]

cat(if (!is.null(x$w) && diff(range(x$w)))

"Weighted ", "Residuals:\n", sep = "")

if (rdf > 5) {

etc.The "deparse" command produces a character string whose evaluation will yield the initial object (the resulting syntax is a bit strange: if you were to build such an object from scratch, you would not proceed that way).

> deparse(r)

[1] "structure(c(-0.0417043206282872, -0.995221281426497, -0.0463357461197109, "

[2] "-0.075155500585547, 0.0448216562696701, 0.058760027857223, -0.97685747990989, "

[3] "-0.200718066450337, 0.0798906594208107, -0.0675697350838044, "

[4] "-0.200546287353865, 0.974080592182492, 0.994921731246978, -0.0389382976351601, "

[5] "0.0581691430589318, -0.0723250196376096), .Dim = c(4, 4), .Dimnames = list("

[6] " c(\"Murder\", \"Assault\", \"UrbanPop\", \"Rape\"), c(\"Comp.1\", \"Comp.2\", "

[7] " \"Comp.3\", \"Comp.4\")), class = \"loadings\")"

> cat(deparse(r)); cat("\n")

structure(c(-0.0417043206282872, -0.995221281426497,

-0.0463357461197109, -0.075155500585547, 0.0448216562696701,

0.058760027857223, -0.97685747990989, -0.200718066450337,

0.0798906594208107, -0.0675697350838044, -0.200546287353865,

0.974080592182492, 0.994921731246978, -0.0389382976351601,

0.0581691430589318, -0.0723250196376096), .Dim = c(4, 4),

.Dimnames = list( c("Murder", "Assault", "UrbanPop", "Rape"),

c("Comp.1", "Comp.2", "Comp.3", "Comp.4")), class = "loadings")

TODO: Check that I mention apply, sapply, lapply,

rapply (recursive apply), rollapply (zoo)

TODO: Mention the "reshape" packageThere are several ways to code the results of an experiment.

First example: we have measured several qualitative variables on several (hundred) subjects. The data may be written down as a table, one line per subject, one column per variable. We can also use a contingency table (it is only a good idea of there are few variables, otherwise the array would mainly contain zeroes; if there are k variables the array would hane k dimensions).

How can we switch from one formulation to the next.

In one direction, the "table" function computes a contingency table.

n <- 1000 x1 <- factor( sample(1:3, n, replace=T), levels=1:3 ) x2 <- factor( sample(LETTERS[1:5], n, replace=T), levels=LETTERS[1:5] ) x3 <- factor( sample(c(F,T),n,replace=T), levels=c(F,T) ) d <- data.frame(x1,x2,x3) r <- table(d)

This yields:

> r , , x3 = FALSE x2 x1 A B C D E 1 27 45 31 38 25 2 41 33 30 35 33 3 33 30 28 35 39 , , x3 = TRUE x2 x1 A B C D E 1 26 30 28 42 29 2 35 33 22 37 40 3 42 31 31 36 35

The "ftable" command presents the result in a slightly different way (more readable if there are more variables).

> ftable(d)

x3 FALSE TRUE

x1 x2

1 A 27 26

B 45 30

C 31 28

D 38 42

E 25 29

2 A 41 35

B 33 33

C 30 22

D 35 37

E 33 40

3 A 33 42

B 30 31

C 28 31

D 35 36

E 39 35Let us now see how to turn a contingency table into a data.frame.

Case 1: 1-dimensional table

n <- 100 k <- 10 x <- factor( sample(LETTERS[1:k], n, replace=T), levels=LETTERS[1:k] ) d <- table(x) factor( rep(names(d),d), levels=names(d) )

Case 2: 2-dimensional table

n <- 100

k <- 4

x1 <- factor( sample(LETTERS[1:k], n, replace=T), levels=LETTERS[1:k] )

x2 <- factor( sample(c('x','y','z'),n,replace=T), levels=c('x','y','z') )

d <- data.frame(x1,x2)

d <- table(d)

y2 <- rep(colnames(d)[col(d)], d)

y1 <- rep(rownames(d)[row(d)], d)

dd <- data.frame(y1,y2)General case:

n <- 1000

x1 <- factor( sample(1:3, n, replace=T), levels=1:3 )

x2 <- factor( sample(LETTERS[1:5], n, replace=T), levels=LETTERS[1:5] )

x3 <- factor( sample(c(F,T),n,replace=T), levels=c(F,T) )

d <- data.frame(x1,x2,x3)

r <- table(d)

# A function generalizing "row" and "col" in higher dimensions

foo <- function (r,i) {

d <- dim(r)

rep(

rep(1:d[i], each=prod(d[0:(i-1)])),

prod(d[(i+1):(length(d)+1)], na.rm=T)

)

}

k <- length(dimnames(r))

y <- list()

for (i in 1:k) {

y[[i]] <- rep( dimnames(r)[[i]][foo(r,i)], r )

}

d <- data.frame(y)

colnames(d) <- LETTERS[1:k]

# Test

r - table(d)

Other example: we made the same experiment, with the same subject, three times. We can represent the data with one row per subject, with several results for each

subject, result1, result2, result3

We can also use one row per experiment, with the number of the subject, the number of the experiment (1, 2 or 3) and the result.

subject, retry, result

Exercice: Write function to turn one representation into the other. (Hint: you may use the "split" command that separates data along a factor).

Other example: Same situation, but this time, the numner or experiments per subject is not constant. The first representation can no longer be a data frame: it can be a list of vectors (one vector for each subject). The second representation is unchanged.

n <- 100 k <- 10 subject <- factor( sample(1:k,n,replace=T), levels=1:k ) x <- rnorm(n) d1 <- data.frame(subject, x) # Data.frame to vector list d2 <- split(d1$x, d1$subject) # vector list to data.frame rep(names(d2), sapply(d2, length))

(I never use those functions: fell free to skip to the next section that present more general and powerful alternatives.)

In SQL (this is the language spoken by databases -- to simplify things, you can consider that a database is a (set of) data.frame(s)), we often want to apply a function (sum, mean, sd, etc.) to groups of records ("record" is the database word for "line in a data.frame). For instance, if you store you personnal accounting in a database, giving, for each expense, the amount and the nature (rent, food, transortation, taxes, books, cinema, etc.),

amount nature ------------------ 390 rent 4.90 cinema 6.61 food 10.67 food 6.40 books 14.07 food 73.12 books 4.90 cinema

you might want to compute the total expenses for each type of expense. In SQL, you would say:

SELECT nature, SUM(amount) FROM expenses GROUP BY nature;

You can do the same in R:

nature <- c("rent", "cinema", "books", "food")

p <- length(nature):1

p <- sum(p)/p

n <- 10

d <- data.frame(

nature = sample( nature, n, replace=T, prob=p ),

amount = 10*round(rlnorm(n),2)

)

by(d$amount, d$nature, sum)This yields:

> d nature amount 1 books 59.9 2 rent 3.0 3 books 6.7 4 cinema 4.7 5 food 7.3 6 books 11.3 7 rent 12.2 8 cinema 6.5 9 food 3.2 10 food 4.7 > by(d$amount, d$nature, sum) INDICES: books [1] 77.9 ------------------------------------------------------------ INDICES: cinema [1] 11.2 ------------------------------------------------------------ INDICES: food [1] 15.2 ------------------------------------------------------------ INDICES: rent [1] 15.2

The "by" function assumes that you have a vector, that you want to cut into pieces and on whose pieces you want to apply a function. Sometimes, it is not a vector, but several: all the columns in a data.frame. You can then replace the "by" function by "aggregate".

> N <- 50 > k1 <- 4 > g1 <- sample(1:k1, N, replace=TRUE) > k2 <- 3 > g2 <- sample(1:k2, N, replace=TRUE) > d <- data.frame(x=rnorm(N), y=rnorm(N), z=rnorm(N)) > aggregate(d, list(g1, g2), mean) Group.1 Group.2 x y z 1 1 1 -0.5765 0.07474 -0.01558 2 2 1 0.4246 0.12450 -0.05569 3 3 1 -0.3418 0.30908 -0.32289 4 4 1 0.7405 -0.79703 0.18489 5 1 2 -0.5855 -0.07166 -0.16581 6 2 2 -0.4230 -0.15215 0.24693 7 3 2 0.4329 0.32154 -0.82883 8 4 2 -1.0167 -0.18424 0.12709 9 1 3 0.3961 -0.86940 0.68552 10 2 3 -0.8808 0.62404 0.79728 11 3 3 -0.4884 -0.67295 0.03346 12 4 3 0.1605 -0.68522 -0.35144 TODO: Replace this example by real data...

These two functions, "by" and "aggregate", are actually special cases of the apply/tapply/lapply/sapply/mapply functions, that are more general and that we shall now present.

> by.data.frame

function (data, INDICES, FUN, ...) {

(...)

ans <- eval(substitute(tapply(1:nd, IND, FUNx)), data)

(...)

}

> aggregate.data.frame

function (x, by, FUN, ...) {

(...)

y <- lapply(x, tapply, by, FUN, ..., simplify = FALSE)

(...)

}

The "apply" function applies a function (mean, quartile, etc.) to each column or row of a data.frame, matrix or array.

> options(digits=4)

> df <- data.frame(x=rnorm(20),y=rnorm(20),z=rnorm(20))

> apply(df,2,mean)

x y z

0.04937 -0.11279 -0.02171

> apply(df,2,range)

x y z

[1,] -1.564 -1.985 -1.721

[2,] 1.496 1.846 1.107It also works in higher dimensions. The second argument indicates the indices along which the program should loop, i.e., the dimensions used to slice the data, i.e., the dimensions that will remain after the computation.

> options(digits=2)

> m <- array(rnorm(10^3), dim=c(10,10,10))

> a <- apply(m, 1, mean)

> a

[1] 0.060 -0.027 0.037 0.160 0.054 0.012 -0.039 -0.064 -0.013 0.061

> b <- apply(m, c(1,2), mean)

> b

[,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

[1,] -0.083 -0.7297 0.547 0.283 0.182 -0.409 -0.0029 0.170 -0.131 0.7699

[2,] -0.044 0.3618 -0.206 -0.095 0.062 -0.568 -0.4841 0.334 0.362 0.0056

[3,] 0.255 0.2359 -0.331 0.040 0.213 -0.547 -0.1852 0.492 -0.257 0.4525

[4,] -0.028 0.7422 0.417 -0.088 0.205 -0.521 -0.1981 0.042 0.604 0.4244

[5,] -0.085 0.3461 0.047 0.683 -0.018 -0.173 0.1825 -0.826 -0.037 0.4153

[6,] -0.139 -0.4761 0.276 0.174 0.145 0.232 -0.1194 -0.010 0.176 -0.1414

[7,] -0.139 0.0054 -0.328 -0.264 0.078 0.496 0.2812 -0.336 0.124 -0.3110

[8,] -0.060 0.1291 0.313 -0.199 -0.325 0.338 -0.2703 0.166 -0.133 -0.5998

[9,] 0.091 0.2250 0.155 -0.277 0.075 -0.044 -0.4169 0.050 0.200 -0.1849

[10,] -0.157 -0.3316 -0.103 0.373 -0.034 0.116 0.0660 0.249 -0.040 0.4689

> apply(b, 1, mean)

[1] 0.060 -0.027 0.037 0.160 0.054 0.012 -0.039 -0.064 -0.013 0.061The "tapply" function groups the observations along the value of one (or several) factors and applies a function (mean, etc.) to the resulting groups. The "by" command is similar.

> tapply(1:20, gl(2,10,20), sum) 1 2 55 155 > by(1:20, gl(2,10,20), sum) INDICES: 1 [1] 55 ------------------------------------------------------------ INDICES: 2 [1] 155

The "sapply" function applies a function to each element of a list (or vector, etc.) and returns, if possible, a vector. The "lapply" function is similar but returns a list.

> x <- list(a=rnorm(10), b=runif(100), c=rgamma(50,1))

> lapply(x,sd)

$a

[1] 1.041

$b

[1] 0.294

$c

[1] 1.462

> sapply(x,sd)

a b c

1.041 0.294 1.462In particular, the "sapply" function can apply a function to each column of a data.frame without specifying the dimension numbers required by the "apply" command (at the beginning, you never know if it sould be 1 or 2 and you end up trying both to retain the one whose result has the expected dimension).

The "split" command cuts the data, as the "tapply" function, but does not apply any function afterwards.

> str(InsectSprays)

`data.frame': 72 obs. of 2 variables:

$ count: num 10 7 20 14 14 12 10 23 17 20 ...

$ spray: Factor w/ 6 levels "A","B","C","D",..: 1 1 1 1 1 1 1 1 1 1 ...

> str( split(InsectSprays$count, InsectSprays$spray) )

List of 6

$ A: num [1:12] 10 7 20 14 14 12 10 23 17 20 ...

$ B: num [1:12] 11 17 21 11 16 14 17 17 19 21 ...

$ C: num [1:12] 0 1 7 2 3 1 2 1 3 0 ...

$ D: num [1:12] 3 5 12 6 4 3 5 5 5 5 ...

$ E: num [1:12] 3 5 3 5 3 6 1 1 3 2 ...

$ F: num [1:12] 11 9 15 22 15 16 13 10 26 26 ...

> sapply( split(InsectSprays$count, InsectSprays$spray), mean )

A B C D E F

14.500 15.333 2.083 4.917 3.500 16.667

> tapply( InsectSprays$count, InsectSprays$spray, mean )

A B C D E F

14.500 15.333 2.083 4.917 3.500 16.667

TODO: This is a VERY important section.

At the begining of this document, list the most important sections, list what the reader is expected to be able to do after reading this document.

In R, many commands handle vectors or tables, allowing an (almost) loop-less programming style -- parallel programming. Thus, the computations are faster than with an explicitely written loop (because R is an interpreted language). The resulting programming style is very different from what you may be used to: here are a few exercises to warm you up. We shall need the table functions we have just introduced, in particular "apply".

Many people consider the "apply" function as a loop: in the current implementation of R, it might be implemented as a loop, if if you run R on a parallel machine, it could be different -- all the operations could be run at once. This really is parallelization.

Exercice: Let x be a table. Compute the sum of its rows, the sum of each of its columns. If x is the contingency table of two qualitative variables, compute the theoretical contingency table under the hypothesis that the two variables are independant. If you already know what it is, computhe the corresponding Chi^2.

# To avoid any row/column confusion, I choose a non-square table n <- 4 m <- 5 x <- matrix( rpois(n*m,10), nr=n, nc=m ) rownames(x) <- 1:n colnames(x) <- LETTERS[1:m] x apply(x,1,sum) # Actually, there is already a "rowSums" function apply(x,2,sum) # Actually, there is already a "colSums" function # Theoretical contingency table y <- matrix(apply(x,1,sum),nr=n,nc=m) * matrix(apply(x,2,sum),nr=n,nc=m,byrow=T) / sum(x) # Theoretical contingency table y <- apply(x,1,sum) %*% t(apply(x,2,sum)) / sum(x) # Computing the Chi^2 by hand sum((x-y)^2/y) # Let us check... chisq.test(x)$statistic

Exercice: Let x be a boolean vector. Count the number of sequences ("runs") of zeros (for instance, in 00101001010110, there are 6 runs: 00 0 00 0 0 0). Count the number of sequences of 1. Counth the total number of sequences. Same question for a factor with more tham two levels.

n <- 50

x <- sample(0:1, n, replace=T, p=c(.2,.8))

# Number of runs

sum(abs(diff(x)))+1

# Number of runs of 1's.

f <- function (x, v=1) { # If someone has a simpler idea...

x <- diff(x==v)

x <- x[x!=0]

if(x[1]==1)

sum(x==1)

else

1+sum(x==1)

}

f(x,1)

# Number of runs of 0's.

f(x,0)

n <- 50

k <- 4

x <- sample(1:k, n, replace=T)

# With a loop

s <- 0

for (i in 1:4) {

s <- s + f(x,i)

}

s

# With no loop (less readable)

a <- apply(matrix(1:k,nr=1,nc=k), 2, function (i) { f(x,i) } )

a

sum(a)In a binary vector of length n, find the position of the runs of 1's of length greater than k.

n <- 100 k <- 10 M <- sample(0:1, n, replace=T, p=c(.2,.8)) x <- c(0,M,0) # Start of the runs of 1's deb <- which( diff(x) == 1 ) # End of the runs of 1's fin <- which( diff(x) == -1 ) -1 # Length of those runs long <- fin - deb # Location of those whose lengths exceed k cbind(deb,fin)[ fin-deb > k, ]

Exercise: same question, but we are looking for runs of 1's of length at least k in an n*m matrix. Present the result as a table.

foo <- function (M,k) {

x <- c(0,M,0)

deb <- which( diff(x) == 1 )

fin <- which( diff(x) == -1 ) -1

cbind(deb,fin)[ fin-deb >= k, ]

}

n <- 50

m <- 50

M <- matrix( sample(0:1, n*m, replace=T, prob=c(.2,.8)), nr=n, nc=m )

res <- apply(M, 1, foo, k=10)

# Add the line number (not very pretty -- if someone has a better idea)

i <- 0

res <- lapply(res, function (x) {

x <- matrix(x, nc=2)

i <<- i+1

#if (length(x)) {

cbind(ligne=rep(i,length(x)/2), deb=x[,1], fin=x[,2])

#} else {

# x

#}

})

# Present the result as a table

do.call('rbind', res) # The line numbers are still missingTODO: check that I mention the "do.call" function somewhere in this document...

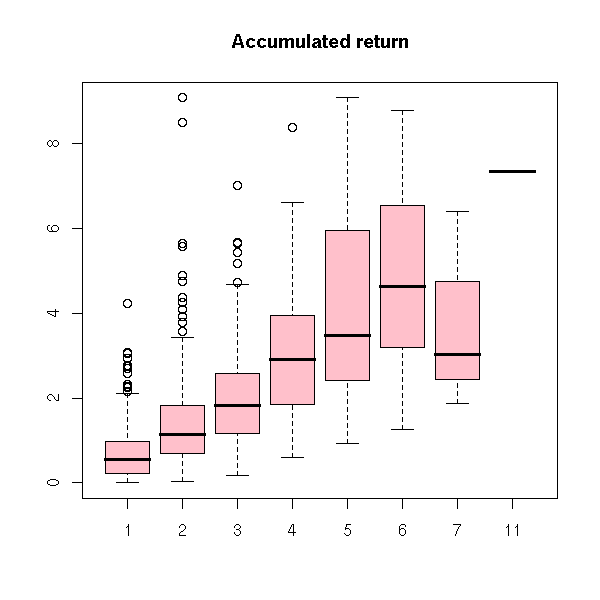

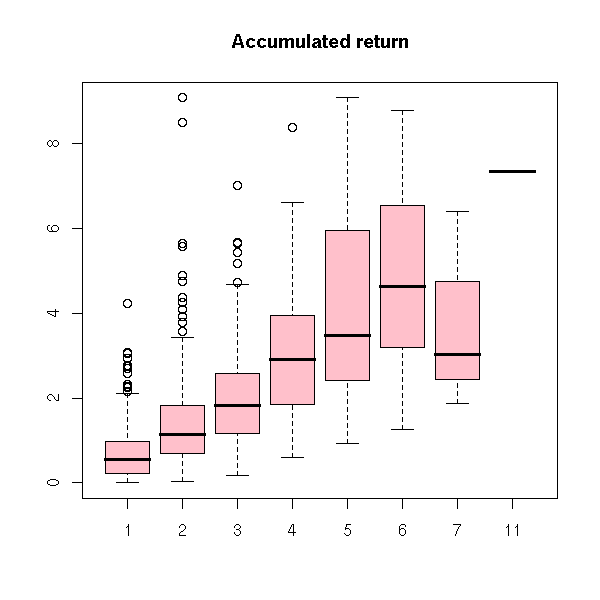

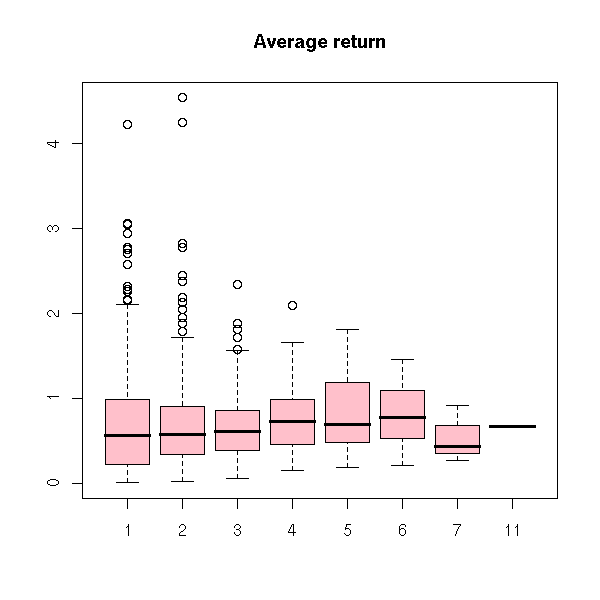

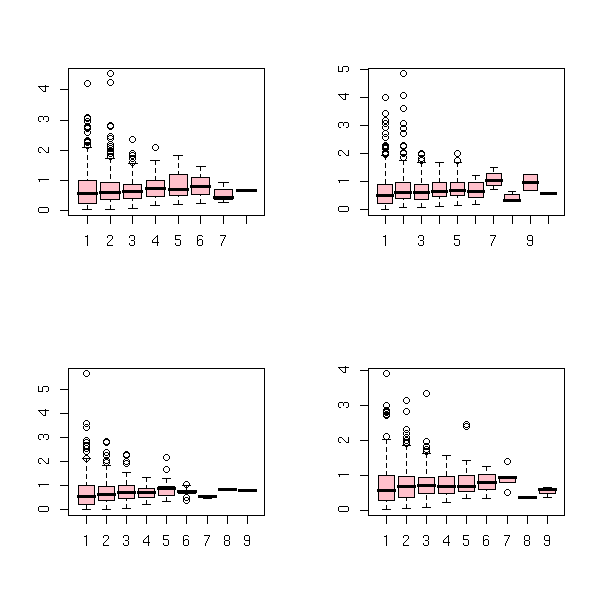

Let r be the return of a financial asset. The clustered return is the accumulated return for a sequence of returns of the same sign. The trend number is the number of steps in such a sequence. The average return is their ratio. Compute these quantities.

data(EuStockMarkets)

x <- EuStockMarkets

# We aren't interested in the spot prices, but in the returns

# return[i] = ( price[i] - price[i-1] ) / price[i-1]

y <- apply(x, 2, function (x) { diff(x)/x[-length(x)] })

# We normalize the data

z <- apply(y, 2, function (x) { (x-mean(x))/sd(x) })

# A single time series

r <- z[,1]

# The runs

f <- factor(cumsum(abs(diff(sign(r))))/2)

r <- r[-1]

accumulated.return <- tapply(r, f, sum)

trend.number <- table(f)

boxplot(abs(accumulated.return) ~ trend.number, col='pink',

main="Accumulated return")

boxplot(abs(accumulated.return)/trend.number ~ trend.number,

col='pink', main="Average return")

op <- par(mfrow=c(2,2))

for (i in 1:4) {

r <- z[,i]

f <- factor(cumsum(abs(diff(sign(r))))/2)

r <- r[-1]

accumulated.return <- tapply(r, f, sum)

trend.number <- table(f)

boxplot(abs(accumulated.return) ~ trend.number, col='pink')

}

par(op)

op <- par(mfrow=c(2,2))

for (i in 1:4) {

r <- z[,i]

f <- factor(cumsum(abs(diff(sign(r))))/2)

r <- r[-1]

accumulated.return <- tapply(r, f, sum)

trend.number <- table(f)

boxplot(abs(accumulated.return)/trend.number ~ trend.number, col='pink')

}

par(op)

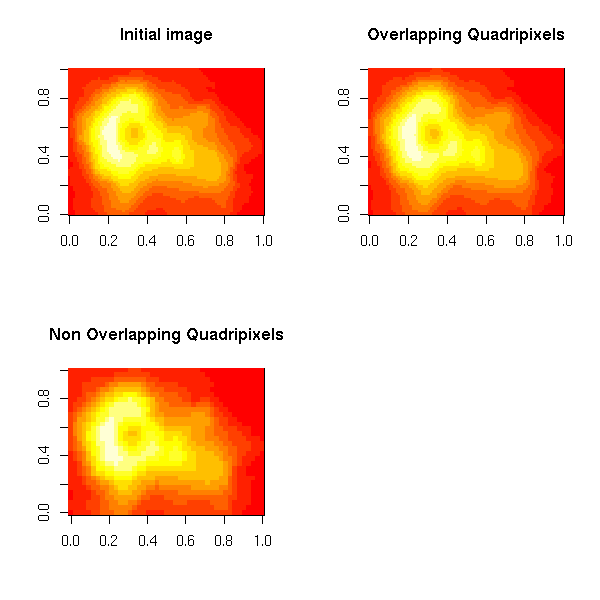

Let M be an n*m matrix (representing a grayscale image); compute the mean value of each quadripixel.

data(volcano) M <- volcano n <- dim(M)[1] m <- dim(M)[2] M1 <- M [1:(n-1),] [,1:(m-1)] M2 <- M [2:n,] [,1:(m-1)] M3 <- M [1:(n-1),] [,2:m] M4 <- M [2:n,] [,2:m] # Overlapping quadripixels M0 <- (M1+M2+M3+M4)/4 # Non-overlapping quadripixels nn <- floor((n-1)/2) mm <- floor((m-1)/2) M00 <- M0 [2*(1:nn),] [,2*(1:mm)] op <- par(mfrow=c(2,2)) image(M, main="Initial image") image(M0, main="Overlapping Quadripixels") image(M00, main="Non Overlapping Quadripixels") par(op)

Construct a Van der Monde matrix.

outer(x, 0:n, '^')

Draw a graph from its indicence matrix.

n <- 100

m <- matrix(runif(2*n),nc=2)

library(ape)

r <- mst(dist(m)) # The incidence matrix (of the minimum spanning

# tree of the points)

plot(m)

n <- dim(r)[1]

w <- which(r!=0)

i <- as.vector(row(r))[w]

j <- as.vector(col(r))[w]

segments( m[i,1], m[i,2], m[j,1], m[j,2], col='red' )

TODO: Find other exercises.

R is not the best tool to process strings, but you sometimes have to do it.

Strings are delimited by double or single quotes.

> "Hello" == 'Hello' [1] TRUE

You do not print a string with the "print" function but with the "cat" function. The "print" function only gives you the representation of the string.

> print("Hello\n")

[1] "Hello\n"

> cat("Hello\n")

Hello

> s <- "C:\\Program Files\\" # At work, I am compelled to use Windows...

> print(s)

[1] "C:\\Program Files\\"

> cat(s, "\n")

C:\Program Files\You can concatenate strings with the "paste" function. To get the desired result, you may have to play with the "sep" argument.

> paste("Hello", "World", "!")

[1] "Hello World !"

> paste("Hello", "World", "!", sep="")

[1] "HelloWorld!"

> paste("Hello", " World", "!", sep="")

[1] "Hello World!"

> x <- 5

> paste("x=", x)

[1] "x= 5"

> paste("x=", x, paste="")

[1] "x= 5 "The "cat" function also accepts a "sep" argument.

> cat("x=", x, "\n")

x= 5

> cat("x=", x, "\n", sep="")

x=5Sometimes, you do not want to concatenate strings stored in different variables, but the elements of a vector of strings. If you want the result to be a single string, and not a vector of strings, you must add a "collapse" argument.

> s <- c("Hello", " ", "World", "!")

> paste(s)

[1] "Hello" " " "World" "!"

> paste(s, sep="")

[1] "Hello" " " "World" "!"

> paste(s, collapse="")

[1] "Hello World!"In some circumstances, you can even need both (the "cat" function does not accept this "collapse" argument).

> s <- c("Hello", "World!")

> paste(1:3, "Hello World!")

[1] "1 Hello World!" "2 Hello World!" "3 Hello World!"

> paste(1:3, "Hello World!", sep=":")

[1] "1:Hello World!" "2:Hello World!" "3:Hello World!"

> paste(1:3, "Hello World!", sep=":", collapse="\n")

[1] "1:Hello World!\n2:Hello World!\n3:Hello World!"

> cat(paste(1:3, "Hello World!", sep=":", collapse="\n"), "\n")

1:Hello World!

2:Hello World!

3:Hello World!The "nchar" function gives the length of a string (I am often looking for a "strlen" function: there it is).

> nchar("Hello World!")

[1] 12The "substring" function extract part of a string (the second argument is the starting position, the third argument is 1 + the end position).

> s <- "Hello World" > substring(s, 4, 6) [1] "lo "

The "strsplit" function splits a string into chunks, at each occurrence of a given "string".

> s <- "foo, bar, baz" > strsplit(s, ", ") [[1]] [1] "foo" "bar" "baz" > s <- "foo-->bar-->baz" > strsplit(s, "-->") [[1]] [1] "foo" "bar" "baz"

Actually, it is not a string, but a regular expression.

> s <- "foo, bar, baz" > strsplit(s, ", *") [[1]] [1] "foo" "bar" "baz"

You can also use it to get the individual characters of a string.

> strsplit(s, "") [[1]] [1] "f" "o" "o" "," " " "b" "a" "r" "," " " "b" "a" "z" > str(strsplit(s, "")) List of 1 $ : chr [1:13] "f" "o" "o" "," ...

The grep function looks for a "string" in a vector of strings.

> s <- apply(matrix(LETTERS[1:24], nr=4), 2, paste, collapse="")

> s

[1] "ABCD" "EFGH" "IJKL" "MNOP" "QRST" "UVWX"

> grep("O", s)

[1] 4

> grep("O", s, value=T)

[1] "MNOP"Actually, it does not look for a string, but for a regular expression.

If Perl is installed on your machine, you can simply type (to the shell)

man perlretut

and read its Regular Expression TUTorial.

(It may seem out of place to speak of regular expressions in a document about statistics: it is not. We shall see (well, not in the current version of this document, but soon -- I hope) that stochastic regular expressions are a generalization of Hidden Markov Models (HMM), which are the analogue of State Space Models for qualitative time series. If you understood the last sentence, you probably should not be reading this.)

The "regexpr" performs the same task as the "grep" function, but gives a different result: the position and length of the first match (or -1 if there is none)

> regexpr("o", "Hello")

[1] 5

attr(,"match.length")

[1] 1

> regexpr("o", c("Hello", "World!"))

[1] 5 2

attr(,"match.length")

[1] 1 1

> s <- c("Hello", "World!")

> i <- regexpr("o", s)

> i

[1] 5 2

attr(,"match.length")

[1] 1 1

> attr(i, "match.length")

[1] 1 1Sometimes, you want an "approximate" matches, not exact matches, accounting for potential spelling or typing mistakes: the "agrep" function provides suc a "fuzzy" matching. It is used by the "help.search" function.

> grep ("abc", c("abbc", "jdfja", "cba"))

numeric(0)

> agrep ("abc", c("abbc", "jdfja", "cba"))

[1] 1The "gsub" function replaces each occurrence of a string (a regular expression, actually) by a strin.

> s <- "foo bar baz"

> gsub(" ", "", s) # Remove all the spaces

[1] "foobarbaz"

> s <- "foo bar baz"

> gsub(" ", "", s)

[1] "foobarbaz"

> gsub(" ", " ", s)

[1] "foo bar baz"

> gsub(" +", "", s)

[1] "foobarbaz"

> gsub(" +", " ", s) # Remove multiple spaces and replace them by single spaces

[1] "foo bar baz"The "sub" is similar to "gsub" but only replaces the first occurrence.

> s <- "foo bar baz"

> sub(" ", "", s)

[1] "foobar baz"

When you read data from various sources, you often run into date format problem: different people, different software use different formats, different conventions. For instance, 01/02/03 can mean the first of february 2003 for some and the second of january 2003 for others -- and perhaps even the third of february 2001 for some. The only unambiguous, universal format is the ISO 8601 one, not really used by people but rather by programmers: dates are coded as

2005-15-05

The main rationale for this format is that when you write a numeric quantity you start with the largest units and end with the smallest; e.g., when you write "123", everyone understands "a hundred and twenty three": you start with the hundreds, proceed with the tens, and end with the units. Why should it be different for dates? We should start with the largest unit, the years, procedd with the next largest the months, and end with the smallest, the days.

This format has an advantage: if you want to sort data according to the date, your program just has to be able to sort strings, it need not be aware of dates.

You can extend the format with a time, but it becomes ambiguous:

2005-05-15 21:34:10.03

It does not look ambiguous (hours, minutes, seconds, hundredths of seconds -- for some applications, you may even need thousandths of seconds), but the time zone is missing. Most of the problems you have with times comes from those time zones.

To convert a string into a Date object:

> as.Date("2005-05-15")

[1] "2005-05-15"If you convert from an ambiguous format, you must specify the format:

> as.Date("15/05/2005", format="%d/%m/%Y")

[1] "2005-05-15"

> as.Date("15/05/05", format="%d/%m/%y")

[1] "2005-05-15"

> as.Date("01/02/03", format="%y/%m/%d")

[1] "2001-02-03"

> as.Date("01/02/03", format="%y/%d/%m")

[1] "2001-03-02"You can compute the difference between two dates -- it is a number of days.

> a <- as.Date("01/02/03", format="%y/%m/%d")

> b <- as.Date("01/02/03", format="%y/%d/%m")

> a - b

Time difference of -27 daysToday's date:

> Sys.Date() [1] "2005-05-16"

You can add a Date and a number (a number of days).

> Sys.Date() + 21 [1] "2005-06-06"

You can format the date to produce one of those ambiguous formats your clients like.

> format(Sys.Date(), format="%d%m%y") [1] "160505" > format(Sys.Date(), format="%A, %d %B %Y") [1] "Monday, 16 May 2005"

The format is described in the manpage of the "strftime" function.

If you want to extract part of a date, you can use the format" function. For instance, if I want to aggregate my data by month, I can use

d$month <- format( d$date, format="%Y-%m" )

For looping purposes, you might need series of dates: you may want to use the "seq" function.

?seq.Date

> seq(as.Date("2005-01-01"), as.Date("2005-07-01"), by="month")

[1] "2005-01-01" "2005-02-01" "2005-03-01" "2005-04-01" "2005-05-01"

[6] "2005-06-01" "2005-07-01"

# A month is not always 31 days...

> seq(as.Date("2005-01-01"), as.Date("2005-07-01"), by=31)

[1] "2005-01-01" "2005-02-01" "2005-03-04" "2005-04-04" "2005-05-05"

[6] "2005-06-05"

> seq(as.Date("2005-01-01"), as.Date("2005-03-01"), by="2 weeks")

[1] "2005-01-01" "2005-01-15" "2005-01-29" "2005-02-12" "2005-02-26"However, you should be aware that loops tend to turn Dates into numbers.

> a <- seq(as.Date("2005-01-01"), as.Date("2005-03-01"), by="2 weeks")

> str(a)

Class 'Date' num [1:5] 12784 12798 12812 12826 12840

> for (i in a) {

+ str(i)

+ }

num 12784

num 12798

num 12812

num 12826

num 12840Inside the loop, you may want to add

for (i in dates) {

i <- as.Date(i)

...

}There is another caveat about the use of dates as indices to arrays: as a date is actually a number, if you use it as an index, R will understand the number used to code the date (say 12784 for 2005-01-01) as a row or column number, nor a row or column name. When using dates as indices, always convert them into strings.

a <- matrix(NA, nr=10, nc=12)

rownames(a) <- LETTERS[1:10]

dates <- seq(as.Date("2004-01-01"), as.Date("2004-12-01"),

by="month"))

colnames(a) <- as.character( dates )

for (i in dates) {

i <- as.Date(i)

a[, as.character(i)] <- 1

}There are other methods:

> methods(class="Date") [1] as.character.Date as.data.frame.Date as.POSIXct.Date c.Date [5] cut.Date -.Date [<-.Date [.Date [9] [[.Date +.Date diff.Date format.Date [13] hist.Date* julian.Date Math.Date mean.Date [17] months.Date Ops.Date plot.Date* print.Date [21] quarters.Date rep.Date round.Date seq.Date [25] summary.Date Summary.Date trunc.Date weekdays.Date

For the time (up to the second, only):

> as.POSIXct("2005-05-15 21:45:17")

[1] "2005-05-15 21:45:17 BST"

> as.POSIXlt("2005-05-15 21:45:17")

[1] "2005-05-15 21:45:17"The two classes are interchangeable, only the internal representation changes (use the first, more compact, one in data.frames).

> unclass(as.POSIXct("2005-05-15 21:45:17"))

[1] 1116189917

attr(,"tzone")

[1] ""

> unclass(as.POSIXlt("2005-05-15 21:45:17"))

$sec

[1] 17

$min

[1] 45

$hour

[1] 21

$mday

[1] 15

$mon

[1] 4

$year

[1] 105

$wday

[1] 0

$yday

[1] 134

$isdst

[1] 1You can also perform a few computations

> as.POSIXlt("2005-05-15 21:45:17") - Sys.time()

Time difference of -1.007523 daysThis is actually a call to the "difftime" function (the unit is automatically chose so that the result be readable).

> difftime(as.POSIXlt("2005-05-15 21:45:17"), Sys.time(), units="secs")

Time difference of -87246 secsShould you be unhappy with those date and time classes, there is host of packages that provide replacements for them.

date (only dates, not times; rather limited, probably old,

ignores ISO 8601)

chron (no timezones or daylight saving times: this is a

limitation, but as many problems come from timezones, it

may be an advantage)

zoo (Important)When reading a data.frame containing dates in a column, from a file, you can either read the column as strings and convert it afterwards,

d <- read.table("foo.txt")

d$Date <- as.Date( as.character( d$Date ) )or explicitely state it is a Date